Key Highlights:

Few-Shot Learning: Learn how Llama 3 leverages advanced techniques like few-shot prompting to perform tasks with minimal data, enabling efficient model performance in NLP applications.

Prompt Engineering: Crafting precise, task-specific prompts is crucial for maximizing the performance of large language models (LLMs) like Llama 3. This technique ensures accurate, context-aware, and relevant model responses.

Zero-Shot vs. Few-Shot Prompting: Zero-shot prompting allows models to perform tasks without prior examples, while few-shot prompting provides minimal examples to guide the model and enhance accuracy.

Llama 3 Implementation: With Novita AI’s Llama API, developers can easily integrate Llama 3 into their applications, utilizing prompt engineering and few-shot learning to optimize model outputs with minimal data.

LangChain and Novita AI: Integration of LangChain with Novita AI enables developers to implement few-shot learning efficiently. LangChain simplifies prompt creation, query set management, and streamlines the learning process with Llama 3.

Practical Applications: Novita AI and LangChain enable scalable, flexible AI solutions for NLP tasks like sentiment analysis and natural language understanding, providing developers with reliable, accurate, and context-sensitive outputs.


In the field of natural language processing (NLP), few-shot learning has garnered significant attention for enabling models to perform tasks with minimal training data. Llama 3, a state-of-the-art language model, responds well to prompt engineering and few-shot prompting, which improve its output in data-scarce scenarios. This guide explores key concepts, provides a step-by-step implementation using LangChain, and shows how Novita AI simplifies integration with Llama 3.

Key Concepts in Few-Shot Learning

1. The Importance of Prompt Engineering for LLMs

Prompt engineering is a critical technique in the field of natural language processing (NLP) that significantly enhances the performance of large language models (LLMs) such as Llama 3. This process involves carefully designing input prompts to provide the model with the necessary context, specific instructions, and illustrative examples to improve its understanding of a task.

Effective prompt engineering is essential for several reasons:

Clarity and Precision: Well-crafted prompts help reduce ambiguity by clearly articulating user intentions. This clarity enables LLMs to generate responses that are not only relevant but also accurate, aligning closely with user expectations.

Contextual Relevance: By supplying sufficient context, prompt engineering aids models in interpreting tasks more effectively. This is particularly important when dealing with complex queries or nuanced topics, as it guides the model toward more pertinent outputs.

Few-shot Learning: One of the distinctive strengths of models like Llama 3 is their ability to perform well with minimal task-specific data. Few-shot prompting allows users to provide a few examples within the prompt, enabling the model to generalize from these instances and produce quality responses without retraining.

Instruction Following: Effective prompts often include explicit instructions, which can lead to better results than open-ended queries. For instance, specifying the format or style of the desired output can greatly influence the model’s response.

In summary, prompt engineering is not just about crafting any input; it’s about creating strategic prompts that maximize the potential of LLMs. By focusing on clarity, context, and instruction specificity, users can harness these models more effectively for a variety of applications, from summarization to complex problem-solving.

Optimizing Few-Shot Prompts

Few-Shot Prompting provides a few examples (typically 2–5) to guide the model in understanding the desired output format and task. This method helps improve the accuracy and consistency of the model’s output, especially when the task requires more context or structure.

Example:

For sentiment analysis, we can provide several labeled examples to teach the model how to respond. This helps the model better understand the task’s requirements and format.

Sentiment Analysis Task:

# Few-shot sentiment analysis prompt

few_shot_prompt = """

You are a sentiment classifier. For each message, give the percentage of positive/neutral/negative sentiment.

Here are some samples:

Text: I liked it

Sentiment: 70% positive 30% neutral 0% negative

Text: It could be better

Sentiment: 0% positive 50% neutral 50% negative

Text: It's fine

Sentiment: 25% positive 50% neutral 25% negative

Now, analyze the following text:

Text: I thought it was okay

"""

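# Generate output from the model (`llama` stands for whatever client object wraps your Llama 3 endpoint)

response = llama.generate(few_shot_prompt)

print(response) # Example output (actual percentages will vary): Sentiment: 10% positive 50% neutral 40% negative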

Explanation:

In this few-shot example, we provided 3 sentiment analysis examples that help the model understand the task’s structure and the expected output format. The model then applies the learned structure to analyze a new text, providing a sentiment breakdown in percentages.
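Because the prompt asks for percentages in a fixed format, the reply can be turned into structured data before it is used downstream. The following is a minimal sketch, assuming the model keeps to the "N% label" pattern shown in the examples. The function name `parse_sentiment` is hypothetical and not part of any library.

```python
import re

# Matches fragments such as "70% positive" anywhere in the model's reply.
_PATTERN = re.compile(r"(\d+(?:\.\d+)?)\s*%\s*(positive|neutral|negative)", re.IGNORECASE)

REQUIRED_LABELS = {"positive", "neutral", "negative"}


def parse_sentiment(reply: str) -> dict:
    """Extract the percentage for each sentiment label from a model reply."""
    scores = {label.lower(): float(value) for value, label in _PATTERN.findall(reply)}
    missing = REQUIRED_LABELS - scores.keys()
    if missing:
        # The model drifted from the requested format; let the caller decide what to do.
        raise ValueError(f"reply is missing labels: {sorted(missing)}")
    return scores


if __name__ == "__main__":
    print(parse_sentiment("Sentiment: 10% positive 50% neutral 40% negative"))
```

Raising on a missing label keeps format drift visible, which is usually a signal that the prompt or its examples need adjusting.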

To improve the effectiveness of few-shot prompting, careful and strategic design of the prompts is key. Here are best practices to optimize your few-shot prompts:

1. Clear Task Instructions

- Explicitly define the task within the prompt. Clear instructions allow the model to understand the task’s objectives and expected output more easily. For example, in a sentiment analysis task, make it clear that the model should classify the sentiment as positive, neutral, or negative.

2. Provide Relevant Context

- Provide any background information or relevant context to help the model better understand the task. This is especially crucial in few-shot learning, where examples guide the model. A clear context helps the model discern the boundaries of the task and generate more accurate responses.

3. Structured Examples

- Use structured examples that showcase the expected output. For instance, providing consistent formats like “Text: [example] → Sentiment: [label]” ensures the model knows how to format its response. Well-structured examples improve the model’s understanding of both the task and the expected outcome.

4. Diverse Examples

- Include a variety of examples that cover different aspects of the task. A diverse set of examples helps the model generalize better to new, unseen inputs, improving its ability to handle different variations of the task when it’s applied in real-world scenarios.

By following these guidelines, you can significantly enhance the performance of your few-shot prompts, making the model more accurate and reliable even with limited examples.
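The guidelines above can be sketched as a small prompt builder that keeps the instruction explicit and every example in one consistent format. This is an illustrative sketch only: `build_few_shot_prompt` is a hypothetical helper, and the single-word labels are simplified stand-ins for the percentage format used earlier. Passing an empty example list yields a zero-shot prompt in the same layout.

```python
from typing import Iterable, Tuple


def build_few_shot_prompt(instruction: str, examples: Iterable[Tuple[str, str]], query: str) -> str:
    """Assemble the instruction, labeled examples, and the new input in one format."""
    lines = [instruction.strip(), ""]
    for text, label in examples:
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # End with the unanswered slot so the model completes it in the same format.
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


# Illustrative examples covering each label (diverse, consistently structured).
EXAMPLES = [
    ("I liked it", "positive"),
    ("It could be better", "negative"),
    ("It's fine", "neutral"),
]

if __name__ == "__main__":
    print(build_few_shot_prompt(
        "Classify the sentiment of each text as positive, neutral, or negative.",
        EXAMPLES,
        "I thought it was okay",
    ))
```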

How to Implement Few-Shot Learning with Llama 3 in LangChain?

Setting Up Your Environment

Step 1: Prerequisites

Before starting, ensure you have Python 3 installed. Recent LangChain releases no longer support Python 3.7, so check the minimum version listed on the package's PyPI page. You’ll need basic knowledge of Python programming and familiarity with LangChain and Llama 3.

Step 2: Installation of LangChain and Llama 3

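Install LangChain using pip. The second command is a placeholder for whichever package provides your Llama 3 client; substitute the one your model provider documents:

pip install langchain

pip install llama3

Step 3: Configuration and Imports

Once the libraries are installed, import them into your Python environment. `LlamaModel` here stands for the model class exposed by your Llama 3 client library:

import langchain

from llama3 import LlamaModel

If you are integrating with a hosted model, make sure you have an API key for the service that serves Llama 3.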

Building a Few-Shot Learning Framework

Step 1: Define Your Task

Start by defining the specific task you want the model to perform. For example, text summarization, translation, or sentiment analysis.

Step 2: Create a Prompt Template

Create a template for your prompt that includes the task description and any necessary examples.

prompt_template = """

Translate the following English sentences into French:

1. 'Hello' → 'Bonjour'

2. 'How are you?' → 'Comment ça va?'

Now, translate: '{sentence}'

"""

Step 3: Construct Support and Query Sets

Create a set of examples (support set) and the query (the instance the model will work on).
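One way to represent the two sets is sketched below, assuming the translation task from Step 2. The `Example` class, the `prompts_for` helper, and the second query ("Good morning") are illustrative additions, not part of LangChain or the original example.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Example:
    source: str  # English input
    target: str  # French reference translation


# Support set: the labeled examples shown to the model inside the prompt.
support_set: List[Example] = [
    Example("Hello", "Bonjour"),
    Example("How are you?", "Comment ça va?"),
]

# Query set: the unlabeled inputs the model should translate.
query_set: List[str] = ["What is your name?", "Good morning"]


def prompts_for(support: List[Example], queries: List[str]) -> List[str]:
    """Render one prompt per query, each prefixed with the whole support set."""
    shots = "\n".join(
        f"{i}. '{ex.source}' → '{ex.target}'" for i, ex in enumerate(support, start=1)
    )
    header = "Translate the following English sentences into French:"
    return [f"{header}\n{shots}\nNow, translate: '{q}'" for q in queries]


if __name__ == "__main__":
    for prompt in prompts_for(support_set, query_set):
        print(prompt, end="\n\n")
```

Keeping the support set separate from the queries makes it easy to swap examples in and out without touching the code that calls the model.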

Step 4: Implement Few-Shot Learning Chain

Use LangChain to define a chain that will apply the few-shot prompt and get results from Llama 3.

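from langchain_core.prompts import PromptTemplate

# Wrap the raw string so LangChain knows about the {sentence} variable

prompt = PromptTemplate.from_template(prompt_template)

# LlamaModel is the placeholder model class imported earlier; any LangChain-compatible Llama 3 model works here

chain = prompt | LlamaModel()

response = chain.invoke({'sentence': 'What is your name?'})

print(response)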

Running the Few-Shot Learner

Predict Outcomes for Query Instances

Once the few-shot learning framework is set up, you can run predictions. The model will generate answers based on the few-shot examples you’ve provided.

Interpreting and Analyzing Results

Review the model’s output and analyze whether it closely matches the expected results. If the output is not as expected, consider refining your prompt or providing more relevant examples.

Evaluating Model Performance

Step 1: Define Evaluation Metrics

Common evaluation metrics for few-shot tasks include accuracy, precision, and recall, depending on the task type.

Step 2: Implement Evaluation Methodology

To test the model, run the few-shot learner on a test dataset (with known answers) and evaluate its performance based on the defined metrics.
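For a classification task, the metrics from Step 1 can be computed directly from the gold labels and the model's predictions. The sketch below uses only the standard library. The sample labels are made up for illustration and are not results from Llama 3.

```python
from typing import Dict, List


def _check(y_true: List[str], y_pred: List[str]) -> None:
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("need two non-empty label lists of equal length")


def accuracy(y_true: List[str], y_pred: List[str]) -> float:
    """Fraction of predictions that match the gold label."""
    _check(y_true, y_pred)
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def precision_recall(y_true: List[str], y_pred: List[str], label: str) -> Dict[str, float]:
    """Precision and recall for one label, treating it as the positive class."""
    _check(y_true, y_pred)
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return {"precision": precision, "recall": recall}


# Made-up labels, only to exercise the functions.
GOLD = ["positive", "negative", "neutral", "positive"]
PRED = ["positive", "neutral", "neutral", "negative"]

if __name__ == "__main__":
    print(accuracy(GOLD, PRED))
    print(precision_recall(GOLD, PRED, "neutral"))
```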

Step 3: Refine the Model

Refine your prompt templates, adjust few-shot examples, or try different generation settings (such as temperature) to improve the model’s performance.
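Refinement is easier when prompt variants are compared on the same test set. The sketch below scores each template with a caller-supplied model function and picks the best one. `fake_model` is a deliberately simple offline stand-in, not Llama 3, and its behavior (only following the format when told to answer in one word) is an assumption made so the example runs without an API key.

```python
from typing import Callable, Dict, List, Tuple


def score_template(template: str, model_fn: Callable[[str], str],
                   test_set: List[Tuple[str, str]]) -> float:
    """Fraction of test items whose model reply equals the expected label."""
    hits = 0
    for text, expected in test_set:
        reply = model_fn(template.format(text=text))
        hits += reply.strip().lower() == expected
    return hits / len(test_set)


def best_template(templates: Dict[str, str], model_fn: Callable[[str], str],
                  test_set: List[Tuple[str, str]]) -> Tuple[str, float]:
    """Return the name and score of the highest-scoring template."""
    scores = {name: score_template(t, model_fn, test_set) for name, t in templates.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]


def fake_model(prompt: str) -> str:
    # Stand-in for a real model call so the sketch runs offline.
    if "one word" not in prompt:
        return "I think the sentiment is mixed."
    return "positive" if "love" in prompt else "negative"


TEMPLATES = {
    "vague": "What about this? {text}",
    "explicit": ("Classify the sentiment as positive or negative. "
                 "Answer with one word.\nText: {text}\nSentiment:"),
}
TEST_SET = [("I love it", "positive"), ("I hate it", "negative")]

if __name__ == "__main__":
    print(best_template(TEMPLATES, fake_model, TEST_SET))
```

With a real model, replace `fake_model` with a function that sends the prompt to your endpoint and returns the reply text.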

Advanced Techniques and Customization

Step 1: Continuous Learning

Implement mechanisms where the model can learn from new data continuously without retraining from scratch.

Step 2: Transfer Learning

Leverage pre-trained models for specific tasks and fine-tune them for your application.

Step 3: Experiment with Different Architectures

Test different prompt formats, architectures, and settings to optimize performance.

Step 4: Data Augmentation

Generate synthetic data to improve the model’s ability to generalize across tasks.
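In a prompting workflow, one lightweight reading of "learning from new data without retraining" is to keep a growing pool of labeled examples and pick the most relevant ones for each query. The sketch below illustrates that idea under stated assumptions: `ExamplePool` is a hypothetical class, and word overlap is a crude stand-in for the embedding-based similarity a production system would more likely use.

```python
import re
from typing import List, Tuple


def _tokens(text: str) -> set:
    # Lowercased word set; punctuation is dropped so "battery?" matches "battery".
    return set(re.findall(r"[a-z0-9']+", text.lower()))


class ExamplePool:
    """Growing store of labeled examples; nothing is retrained, only the prompt changes."""

    def __init__(self) -> None:
        self._examples: List[Tuple[str, str]] = []

    def add(self, text: str, label: str) -> None:
        self._examples.append((text, label))

    def select(self, query: str, k: int = 2) -> List[Tuple[str, str]]:
        """Return the k stored examples sharing the most words with the query."""
        q = _tokens(query)
        ranked = sorted(self._examples, key=lambda ex: len(q & _tokens(ex[0])), reverse=True)
        return ranked[:k]


pool = ExamplePool()
pool.add("The battery life is great", "positive")
pool.add("Shipping was slow", "negative")
pool.add("The battery died quickly", "negative")

if __name__ == "__main__":
    # New labeled data can be added at any time and is used by the next prompt.
    print(pool.select("How is the battery?"))
```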

Leveraging Novita AI for Llama 3 and Few-Shot Learning

Llama API Integration and Enhancing Performance through Prompt Engineering

Novita AI offers seamless access to Llama 3, simplifying integration and enabling developers to tap into the full potential of this powerful language model. By utilizing the Llama API provided by Novita AI, developers can create highly optimized prompts that significantly enhance model performance. Prompt engineering plays a crucial role in guiding Llama 3 to produce more accurate, context-aware responses. With techniques like few-shot prompting, Llama 3 can effectively handle diverse tasks, even when minimal training data is provided. This enables developers to scale AI-driven applications without requiring extensive datasets, making it an ideal solution for a variety of real-world use cases.

Key Benefits of Novita AI’s Llama API Integration:

Effortless Integration with Novita AI

Novita AI is fully compatible with the OpenAI API standard, making it exceptionally easy to integrate into existing LangChain applications. This compatibility allows developers to seamlessly adapt their projects and leverage Novita AI’s powerful language models without needing significant changes to workflows. For those new to integration, the Get Started Guide on Novita AI’s website provides a step-by-step walkthrough, ensuring a smooth onboarding experience.

Access to Advanced Models

With Novita AI’s API key, developers gain access to a diverse range of language models, including Llama, Mistral, Qwen, Gemma, and Mythomax. This selection lets developers pick the model best suited to their specific tasks. To test these models in real time and refine your use cases, you can explore Novita AI’s interactive LLM Playground, which allows for hands-on experimentation with various models and prompt setups.

Cost-Effective AI Solutions

Novita AI stands out as a more affordable alternative to other API providers. It offers developers and businesses the opportunity to reduce costs while maintaining high-quality AI-driven outputs. This cost-efficiency makes Novita AI an ideal solution for those looking to optimize their AI development budget without compromising on performance.

Scalability and Reliability

Designed with scalability in mind, Novita AI’s infrastructure efficiently handles high-volume requests, ensuring that LangChain applications built with its models can scale effortlessly as demand increases. Whether you’re developing a chatbot, a classification tool, or a document processing application, Novita AI ensures reliable performance even as your application grows.

For developers aiming to maximize the potential of their applications, Novita AI’s resources, such as the LLM Playground and Get Started Guide, provide everything needed to build, test, and scale with confidence.

Novita AI and LangChain: Enabling Few-Shot Learning

LangChain, when integrated with Novita AI, offers developers a powerful framework to implement few-shot learning with Llama 3. LangChain’s capabilities allow for flexible prompt definition, query set construction, and management of the learning process — all while leveraging the immense capabilities of Llama 3 via Novita AI. This integration is particularly beneficial for developers looking to experiment with various task formats, optimize model behavior, and scale AI applications efficiently.

Follow these steps to use Novita AI’s API key with LangChain:

Step 1: Register and Log in to Novita AI

1. Visit Novita.ai and create an account.

2. You can log in using your Google or GitHub account for convenience.

3. Upon registration, Novita AI provides a $0.5 credit to get you started.

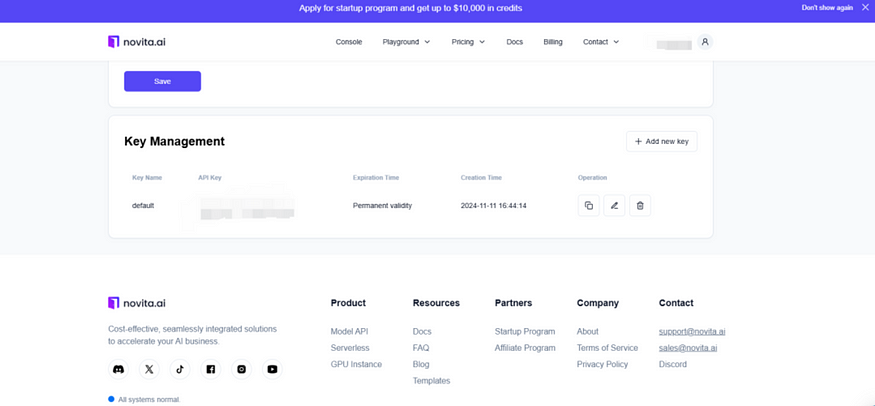

Step 2: Obtain the API Key

1. Navigate to Novita AI’s key management page.

2. Create a new API key and copy it for use in your LangChain project.

Step 3: Set Up Your LangChain Project

1. Install the necessary LangChain packages (`@langchain/core` is a peer dependency of the community package):

npm install @langchain/community @langchain/core

2. Initialize the Novita AI model in your JavaScript code:

const { ChatNovitaAI } = require("@langchain/community/chat_models/novita");

const llm = new ChatNovitaAI({

model: "meta-llama/llama-3.1-8b-instruct",

apiKey: process.env.NOVITA_API_KEY

});

3. Use the model in your application:

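// Top-level await is not available in CommonJS modules, so wrap the call in an async function

async function main() {

const aiMsg = await llm.invoke([

[

"system",

"You are a helpful assistant that translates English to French. Translate the user sentence.",

],

["human", "I love programming."],

]);

console.log(aiMsg);

}

main().catch(console.error);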

Step 4: Customize and Expand

With the basic integration in place, you can now leverage LangChain’s full capabilities to build more complex applications, such as chatbots, question-answering systems, or document analysis tools.
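With a chat model, few-shot examples are commonly supplied as earlier user/assistant turns rather than as one long string. The sketch below builds such a message list in the OpenAI-style chat format, which the article says Novita AI is compatible with. It only constructs and prints the payload: no request is sent, and the endpoint URL is left out because it should be taken from Novita AI's documentation. The helper name `few_shot_messages` is hypothetical.

```python
import json
from typing import Dict, List, Tuple


def few_shot_messages(system: str, shots: List[Tuple[str, str]], query: str) -> List[Dict[str, str]]:
    """Encode each example as a user/assistant turn, then append the real query."""
    messages = [{"role": "system", "content": system}]
    for user_text, assistant_text in shots:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": query})
    return messages


SYSTEM = "You are a helpful assistant that translates English to French. Translate the user sentence."
SHOTS = [("Hello", "Bonjour"), ("How are you?", "Comment ça va?")]

payload = {
    # Model id copied from the JavaScript initialization step in this guide.
    "model": "meta-llama/llama-3.1-8b-instruct",
    "messages": few_shot_messages(SYSTEM, SHOTS, "I love programming."),
}

if __name__ == "__main__":
    print(json.dumps(payload, ensure_ascii=False, indent=2))
```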

How LangChain and Novita AI Power Few-Shot Learning:

Simple Integration: LangChain’s integration with Novita AI simplifies the setup process, allowing developers to quickly start building few-shot learning applications.

Customizable Prompts: LangChain provides developers with the tools to define and manage dynamic prompts tailored to specific tasks, ensuring Llama 3 generates the most relevant outputs.

Streamlined Query Handling: LangChain’s structure for organizing support and query sets ensures that developers can experiment with and refine few-shot learning techniques without complex setup processes.

Scalable AI Solutions: By using LangChain alongside Novita AI’s Llama API, developers can scale their applications, enhancing model responses with minimal data input while ensuring flexibility for future tasks.

Conclusion

In this guide, we’ve explored how to implement few-shot prompting using Llama 3 with tools like LangChain and Novita AI. By understanding the key concepts of prompt engineering and few-shot learning, and utilizing the right tools and frameworks, developers can build highly effective NLP applications with minimal data. As the technology continues to evolve, integrating platforms like Novita AI will provide developers with even more powerful ways to optimize their models and push the boundaries of AI performance.

Frequently Asked Questions

When to use few-shot prompting?

Use few-shot prompting when you have limited labeled data or when you need the model to perform tasks it has not been specifically trained for.

How to write a prompt for Llama 3?

Write clear, task-specific instructions. Include examples of inputs and desired outputs if using few-shot prompting.

What is chain-of-thought prompting in Llama 3?

Chain of thought prompting involves structuring the prompt to guide the model through reasoning steps before providing an answer, which improves accuracy in tasks requiring logical reasoning.
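As a rough illustration of chain-of-thought prompting, the sketch below pairs a worked example that shows its reasoning with a helper that pulls the final answer out of the reply. The prompt wording, the "Answer:" convention, and the `extract_answer` helper are assumptions chosen for this example, not a format required by Llama 3.

```python
import re
from typing import Optional

# One worked example demonstrates the reasoning style; {question} is filled in per query.
COT_PROMPT = """Answer the question. Think step by step, then give the result on a final line starting with "Answer:".

Q: A shop sells pens at 3 for $2. How much do 12 pens cost?
Reasoning: 12 pens is 4 groups of 3. Each group costs $2, so 4 * 2 = 8.
Answer: 8

Q: {question}
Reasoning:"""


def extract_answer(reply: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if the marker is absent."""
    matches = re.findall(r"^Answer:\s*(.+)$", reply, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


if __name__ == "__main__":
    print(COT_PROMPT.format(question="A train travels 60 km in 1 hour. How far does it go in 3 hours?"))
    print(extract_answer("Reasoning: 60 km per hour for 3 hours is 60 * 3 = 180.\nAnswer: 180"))
```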

originally from Novita AI
