Key Highlights

Tokens are the building blocks of language models, representing words, fragments of words, or punctuation.

Token count directly impacts AI model costs and the length of text you can process.

Python offers robust tools like the Tiktoken library for efficient tokenization and counting.

Optimizing your input text and prompts can help reduce token usage and lower costs.

Novita AI stands out for its transparent pricing and efficient token handling.

In AI and natural language processing (NLP), it is important to understand "tokens." Tokens are the basic pieces of text that AI models handle. This blog post shows you how to get the token count of a text in Python. Learning this skill can help you save money and make your AI applications run better.

Understanding Tokenization

Have you ever wondered how AI models understand human language? The answer starts with tokenization. Tokenization means breaking text into smaller parts called tokens. A token can be a single character, part of a word, or a full word. Python is a popular language for AI, and it offers many tools and libraries for easy tokenization.

The Basics of Tokenization

Tokenization is about breaking down a text string into smaller parts called tokens. These tokens are the basic pieces that help language models understand and create text.

Keep in mind that tokenization does more than split words apart at spaces. It also treats punctuation marks, special symbols, and even parts of words as individual tokens. How text is tokenized depends on the language model and the tokenizer used.
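To see why splitting on spaces is not enough, compare a naive whitespace split with a split that also separates punctuation. This is a minimal pure-Python sketch; the function names are illustrative, and the regular expression mirrors the pattern used by NLTK's wordpunct_tokenize. Model tokenizers such as BPE go one step further and also split words into subword pieces.

```python
import re

def whitespace_tokens(text: str) -> list[str]:
    # Naive approach: split only where there is whitespace
    return text.split()

def word_punct_tokens(text: str) -> list[str]:
    # Runs of word characters, or runs of punctuation/symbols, become separate tokens
    return re.findall(r"\w+|[^\w\s]+", text)

sentence = "Natural-language processing is key."
print(whitespace_tokens(sentence))  # ['Natural-language', 'processing', 'is', 'key.']
print(word_punct_tokens(sentence))  # ['Natural', '-', 'language', 'processing', 'is', 'key', '.']
```

The whitespace split glues the hyphen and the final period onto words, while the punctuation-aware split counts them as their own tokens.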

Tokenization Example

To visualize how text is tokenized, you can use the Tokenizer tool. For example, the sentence "Natural-language processing is key." might be tokenized as: ['Natural', '-', 'language', ' processing', ' is', ' key', '.'] (the exact split depends on the tokenizer). This breakdown is crucial for understanding how your input translates into a token count.
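Two properties of the breakdown above are worth checking: the token count is simply the length of the list, and because the leading spaces are kept inside the tokens, joining them restores the original sentence exactly. This sketch works on the example list from the text as-is rather than calling a real tokenizer.

```python
# Token list copied from the example above (the exact split varies by tokenizer)
tokens = ['Natural', '-', 'language', ' processing', ' is', ' key', '.']

token_count = len(tokens)        # each list element is one token
reconstructed = "".join(tokens)  # leading spaces live inside the tokens

print(token_count)    # 7
print(reconstructed)  # Natural-language processing is key.
```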

Using the Tiktoken library in Python, you can quickly break text strings into tokens. For example, its encode method turns any text string into a list of token IDs. This is useful for many tasks in generative AI and NLP. By understanding how tokens work and using libraries like Tiktoken or NLTK, you can manage tokenization well, which helps you keep costs down.

Importance of Token Count for Cost Optimization

Direct Impact on API Costs: The number of tokens in the input and output directly determines the cost of using AI models. More tokens mean higher costs, so managing token usage is crucial for optimizing expenses.

Model Limitations: Each model has a token limit (e.g., GPT-3.5 might have a limit of 4,096 tokens), and this limit covers both your input and the output you request. Keeping track of token count ensures that requests don't exceed this limit, avoiding errors and unnecessary costs for longer responses.

Efficient Use of Resources: By understanding tokenization, you can write more concise prompts and manage how much text is processed, making sure you don’t waste tokens on irrelevant information. This leads to more efficient use of resources.

Controlling Output Length: By controlling the number of tokens requested in the model's output (e.g., via the max_tokens parameter), you can limit the response size, keeping costs predictable and under control.

Batch Processing: Token count helps when splitting large datasets into smaller, manageable batches. This way, you can avoid token limits in individual API calls, optimizing both cost and performance.
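The model-limit, output-length, and batching points above can be sketched in plain Python. This is a minimal sketch under stated assumptions: the 4,096-token limit is only the example figure from the text, the function names are hypothetical, and the demo uses a whitespace word count as a stand-in for a real tokenizer. With Tiktoken you would pass a counter such as lambda s: len(encoding.encode(s)), where encoding comes from tiktoken.encoding_for_model.

```python
from typing import Callable, Iterable

def fits_context(prompt_tokens: int, max_tokens: int, context_limit: int = 4096) -> bool:
    # The prompt plus the requested completion budget (max_tokens) must fit in the window
    return prompt_tokens + max_tokens <= context_limit

def batch_by_tokens(
    texts: Iterable[str], count_tokens: Callable[[str], int], budget: int
) -> list[list[str]]:
    # Greedily pack texts into batches whose total token count stays within budget
    batches: list[list[str]] = []
    current: list[str] = []
    used = 0
    for text in texts:
        n = count_tokens(text)
        if n > budget:
            raise ValueError(f"a single text has {n} tokens, over the budget of {budget}")
        if current and used + n > budget:
            batches.append(current)  # close the full batch and start a new one
            current, used = [], 0
        current.append(text)
        used += n
    if current:
        batches.append(current)
    return batches

def rough_count(text: str) -> int:
    # Stand-in counter for the demo: whitespace-separated words, NOT real model tokens
    return len(text.split())

docs = ["one two three", "four five", "six seven eight nine", "ten"]
print(batch_by_tokens(docs, rough_count, budget=5))
# [['one two three', 'four five'], ['six seven eight nine', 'ten']]
print(fits_context(prompt_tokens=3500, max_tokens=500))  # True
print(fits_context(prompt_tokens=3700, max_tokens=500))  # False
```

Greedy packing keeps the original order of the texts, which is usually what you want when the batches are later stitched back together.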

When your token count is higher, your costs go up too. So, finding ways to use tokens more efficiently is not just a technical issue; it's also about saving money.

Step-by-Step Guide on How to Get Token Count in Python

Counting tokens in Python is simple.

Step 1: Tools and Libraries for Tokenization

Python has many tools and libraries that make tokenization easier for natural language processing tasks. A well-known option is the Natural Language Toolkit (NLTK). This library has several tokenizers to fit different needs, like NLTK's word tokenizer, which breaks text into individual words and punctuation marks.

Another great choice is the Tiktoken library from OpenAI. Tiktoken is a fast tokenizer built for OpenAI's models, including GPT-3 and GPT-4. It implements Byte Pair Encoding (BPE), the scheme these models use, so its token counts match how these models split text.
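To give a feel for how Byte Pair Encoding builds its vocabulary, here is a minimal, character-level sketch of the classic BPE training loop: it repeatedly counts adjacent symbol pairs and merges the most frequent one. This is an illustration only, with hypothetical function names; Tiktoken works on bytes, pre-splits text with a regular expression, and ships pre-trained merge ranks, so its internals differ.

```python
from collections import Counter

Word = tuple[str, ...]

def pair_counts(words: dict[Word, int]) -> Counter:
    # Count each adjacent symbol pair, weighted by how often the word occurs
    pairs: Counter = Counter()
    for symbols, freq in words.items():
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs

def merge_pair(words: dict[Word, int], pair: tuple[str, str]) -> dict[Word, int]:
    # Replace every occurrence of `pair` with a single merged symbol
    merged: dict[Word, int] = {}
    for symbols, freq in words.items():
        out: list[str] = []
        i = 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == pair:
                out.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                out.append(symbols[i])
                i += 1
        key = tuple(out)
        merged[key] = merged.get(key, 0) + freq
    return merged

def learn_bpe(corpus: list[str], num_merges: int) -> tuple[list[tuple[str, str]], dict[Word, int]]:
    # Start with every word split into single characters
    words: dict[Word, int] = dict(Counter(tuple(w) for w in corpus))
    merges: list[tuple[str, str]] = []
    for _ in range(num_merges):
        pairs = pair_counts(words)
        if not pairs:
            break  # every word is already a single symbol
        best = max(pairs, key=pairs.get)  # ties go to the pair seen first
        merges.append(best)
        words = merge_pair(words, best)
    return merges, words

merges, words = learn_bpe(["low", "low", "lower", "lowest"], num_merges=3)
print(merges)  # [('l', 'o'), ('lo', 'w'), ('low', 'e')]
print(words)   # {('low',): 2, ('lowe', 'r'): 1, ('lowe', 's', 't'): 1}
```

After three merges, the frequent word "low" has become a single token, while rarer words are still made of several pieces; this is why common words cost fewer tokens than unusual ones.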

Installing these libraries is simple with pip, which is Python's package manager. For example, to install Tiktoken, just type the command pip install tiktoken.

Step 2: Implementing Token Count in Your Python Code

Incorporating token counting into your Python projects is easy. First, import a library such as Tiktoken: import tiktoken. Then, load the encoding that matches the model you use. For example, if you are using GPT-3.5-turbo, write: encoding = tiktoken.encoding_for_model("gpt-3.5-turbo").

To count the tokens in your text, use the encode method from the encoding object. For example: tokens = encoding.encode("Your text string"). After that, find the number of tokens by checking the length of the tokens list: token_count = len(tokens).

Make sure to replace "Your text string" with your actual text. By adding this simple code, you can easily check and improve token usage in your Python AI applications.

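For convenience, you can copy the following code directly:

```python
import tiktoken

# Load the encoding used by the target model (here "gpt-3.5-turbo").
# Note: get_encoding() expects an encoding name such as "cl100k_base";
# encoding_for_model() is the call that accepts a model name.
encoding = tiktoken.encoding_for_model("gpt-3.5-turbo")

# Input text
text = "This is a sample sentence to count tokens."

# Encode the text into a list of token IDs, then count them
tokens = encoding.encode(text)
token_count = len(tokens)

print(f"Token count: {token_count}")
```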

Token Optimization Strategies to Reduce AI Costs

Optimizing your token usage is very important for saving costs in AI development. You can take the following approaches:

Simplify Input Text: One great way to optimize tokens is to simplify your input text. Text often contains extra details, repetition, or convoluted phrasing that increases the token count without adding value. Also, check whether you can convey the same information with less metadata or shorter descriptions. Shorter, clearer text not only lowers the token count but usually communicates better with the AI model, which can give you more accurate and useful outputs.

Optimize Prompt Design: The way you write prompts for generative AI models matters. Good prompts lead to better responses and use fewer tokens. Use simple language and make sure your prompts are unambiguous. You can also try different ways of structuring your prompts to find the clearest way to express your ideas. A well-made prompt helps the model work better and reduces unnecessarily long responses that inflate the token count.

Use More Efficient Tokenization: Different tokenizers split the same text into different numbers of tokens, and each model counts tokens with its own tokenizer. It’s worth comparing tokenizer options and measuring your text with the one your model actually uses. For instance, if you mainly use OpenAI's GPT models, use their dedicated tokenizer, Tiktoken. Tiktoken uses Byte Pair Encoding (BPE), the scheme these models are built on, so its counts are more accurate for them than those from general-purpose options.
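The first strategy, simplifying input text, can be partly automated. This sketch collapses runs of whitespace and drops exact duplicate lines, then compares sizes before and after. The function names are hypothetical, and rough_count is a whitespace word count used only as a stand-in; for real numbers, measure with your model's tokenizer (for example, len(encoding.encode(text)) with Tiktoken).

```python
import re

def simplify_text(text: str) -> str:
    """Collapse repeated whitespace and drop exact duplicate lines (a rough cleanup pass)."""
    seen: set[str] = set()
    kept: list[str] = []
    for line in text.splitlines():
        cleaned = re.sub(r"\s+", " ", line).strip()
        if cleaned and cleaned not in seen:
            seen.add(cleaned)
            kept.append(cleaned)
    return "\n".join(kept)

def rough_count(text: str) -> int:
    # Stand-in counter: whitespace-separated words, NOT real model tokens
    return len(text.split())

verbose = """Please   summarize the following review.
Please   summarize the following review.
The product arrived late but works well."""

concise = simplify_text(verbose)
print(concise)
print(rough_count(verbose), "->", rough_count(concise))  # 17 -> 12
```

Mechanical cleanup like this only removes obvious waste; rewording long descriptions still has to be done by hand.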

If Token Usage Can't Be Reduced, Choose a Cheaper API

When you have tried all optimization methods and your AI application still needs too many tokens, it’s time to look for a more budget-friendly API option.

How to Find a More Affordable API

Finding a low-cost API for your AI needs takes careful research.

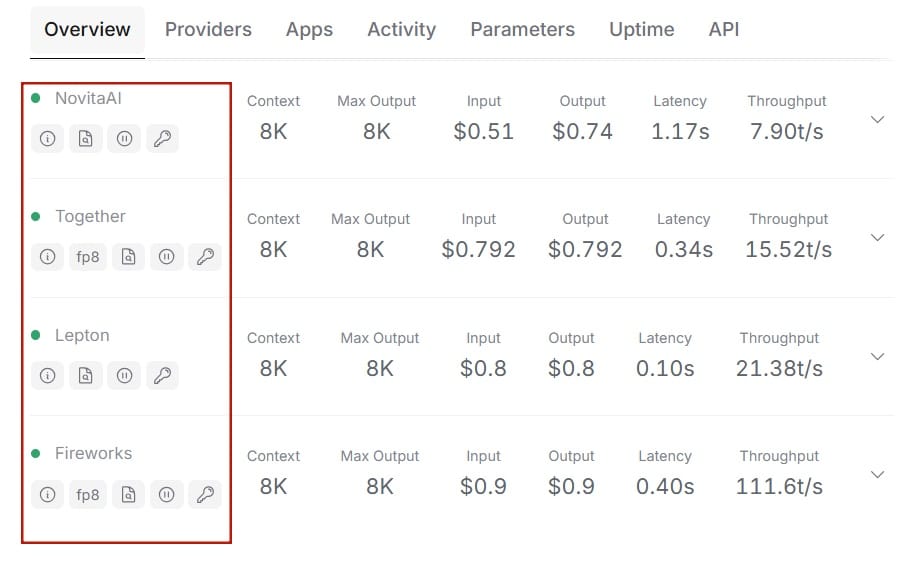

Step 1: Look at the API Documentation from Different Providers:

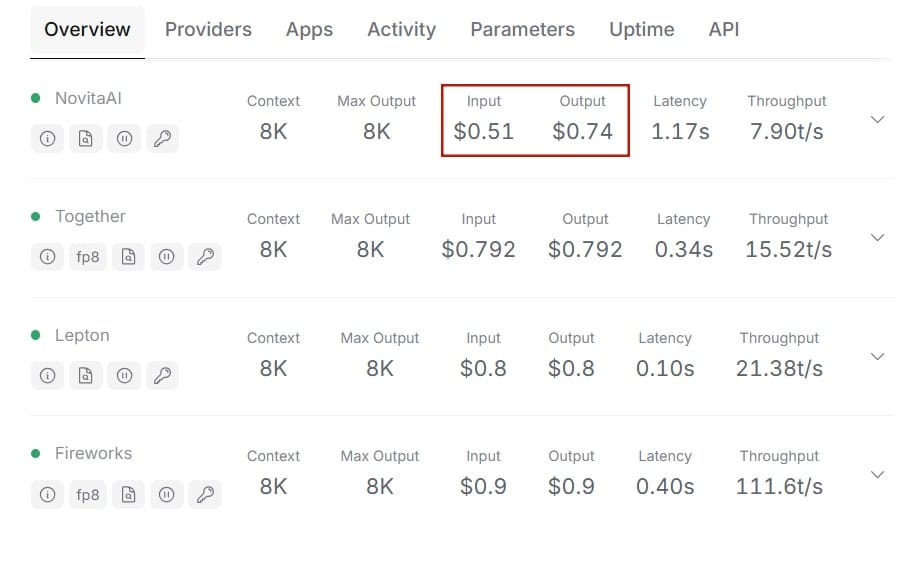

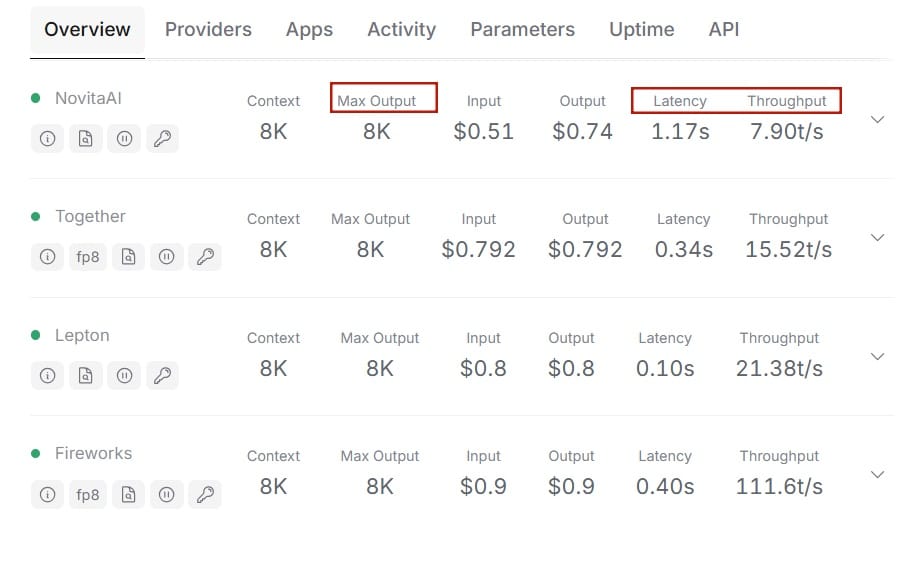

Check their pricing and see how token usage affects your overall costs. By comparing input and output token prices, you can evaluate the cost-effectiveness of an API.

Input costs: Cost per million tokens for the prompt.

Output costs: Cost per million tokens for the completion.
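Putting the two prices together, the cost of a request is input tokens times the input price plus output tokens times the output price, divided by one million. The sketch below compares two providers for a monthly workload; the provider names, prices, token counts, and request volume are hypothetical placeholders, not quotes from any provider.

```python
from dataclasses import dataclass

@dataclass
class Pricing:
    input_per_million: float   # USD per 1M prompt tokens
    output_per_million: float  # USD per 1M completion tokens

def request_cost(p: Pricing, input_tokens: int, output_tokens: int) -> float:
    # Both prices are quoted per million tokens, so divide the weighted sum by 1,000,000
    return (input_tokens * p.input_per_million + output_tokens * p.output_per_million) / 1_000_000

# Hypothetical prices and workload, for illustration only
providers = {
    "provider_a": Pricing(input_per_million=0.50, output_per_million=1.50),
    "provider_b": Pricing(input_per_million=0.30, output_per_million=0.60),
}
requests_per_month = 10_000
avg_input, avg_output = 1_200, 300

for name, pricing in providers.items():
    monthly = request_cost(pricing, avg_input, avg_output) * requests_per_month
    print(f"{name}: ${monthly:.2f} per month")
# provider_a: $10.50 per month
# provider_b: $5.40 per month
```

Keeping input and output prices separate matters because output tokens are often priced higher, so a workload with long responses can rank providers differently than one with long prompts.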

Step 2: Compare Other Details Alongside Pricing to Find the Best Deal:

You can assess a model's performance by evaluating its Max Output, Latency, and Throughput.

Max Output: The maximum number of tokens the endpoint can generate in a single response (for example, 4,096 tokens).

Latency: The average time for the provider to send the first token.

Throughput: The average number of tokens transmitted per second for streaming requests.
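If you want to measure latency and throughput yourself during a trial, you can time a streaming response. This sketch works on any iterable of streamed chunks; fake_stream is a hypothetical stand-in for a real streaming API response, and the sketch counts chunks rather than tokens, which is only an approximation because a provider may send several tokens per chunk.

```python
import time
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional

@dataclass
class StreamStats:
    chunks: int
    latency_s: Optional[float]     # time until the first chunk arrived
    chunks_per_s: Optional[float]  # streaming rate after the first chunk

def measure_stream(stream: Iterable[str]) -> StreamStats:
    start = time.perf_counter()
    first_at: Optional[float] = None
    chunks = 0
    for _ in stream:
        if first_at is None:
            first_at = time.perf_counter()  # latency: time to first chunk
        chunks += 1
    end = time.perf_counter()
    latency = None if first_at is None else first_at - start
    rate = None
    if first_at is not None and chunks > 1 and end > first_at:
        # Chunks after the first one, divided by the time spent streaming them
        rate = (chunks - 1) / (end - first_at)
    return StreamStats(chunks, latency, rate)

def fake_stream(n: int = 5, delay: float = 0.01) -> Iterator[str]:
    # Hypothetical stand-in for a provider's streaming response
    for i in range(n):
        time.sleep(delay)
        yield f"tok{i}"

print(measure_stream(fake_stream()))
```

Measuring the rate only after the first chunk keeps throughput separate from latency, which matches how the two metrics are described above.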

Step 3: Don't Be Afraid to Try Free Trials from Some Providers:

This way, you can test how well the API works and if it meets your project's needs before choosing a paid plan.

A Great Choice: Novita AI

Novita AI is a high-performance LLM API provider that stands out for its strong throughput, cost-effectiveness, and reliability. It exceeds the market average in throughput, ensures 99.9% API stability, and offers competitive pricing ($0.25-$0.35).

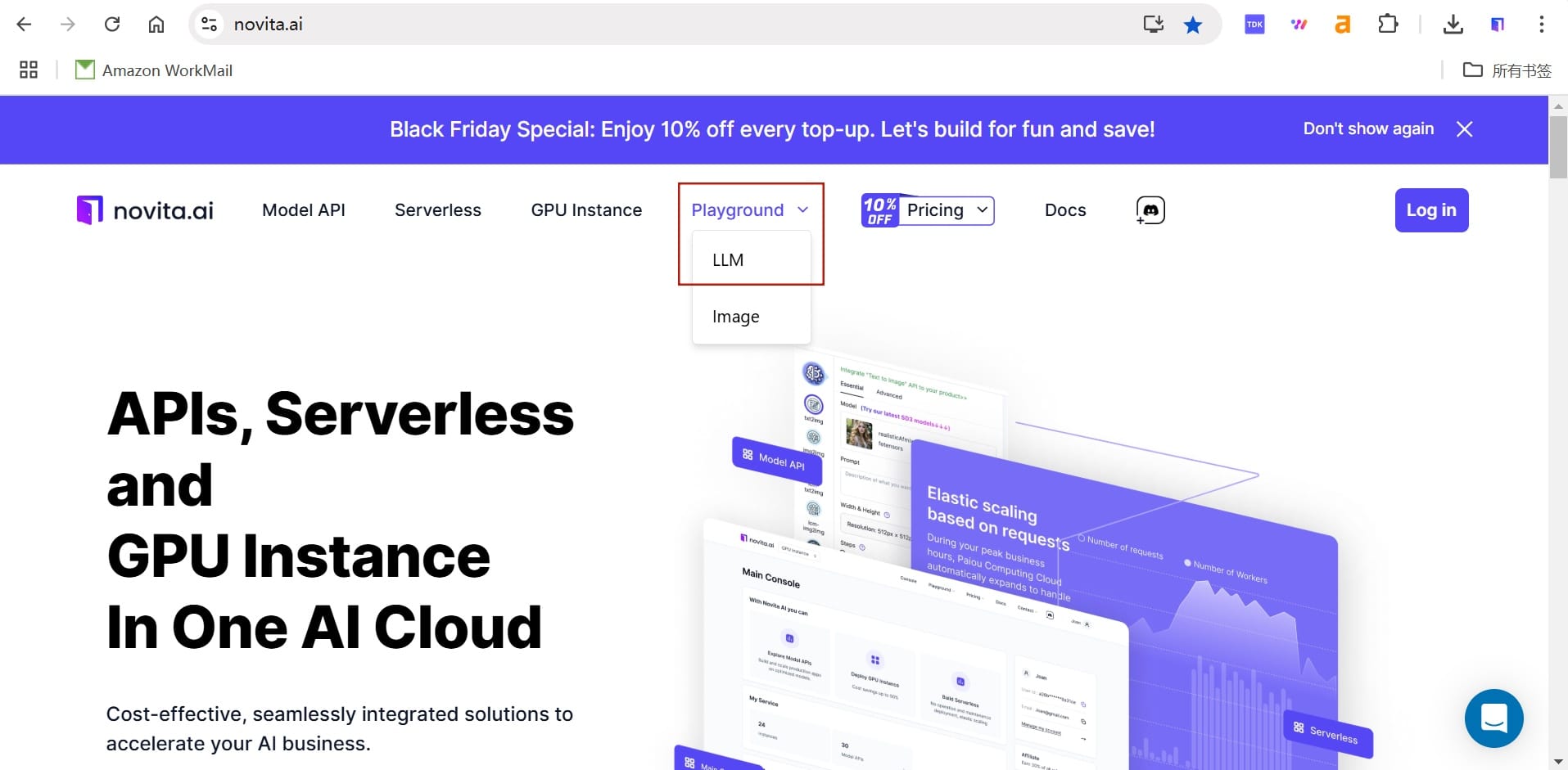

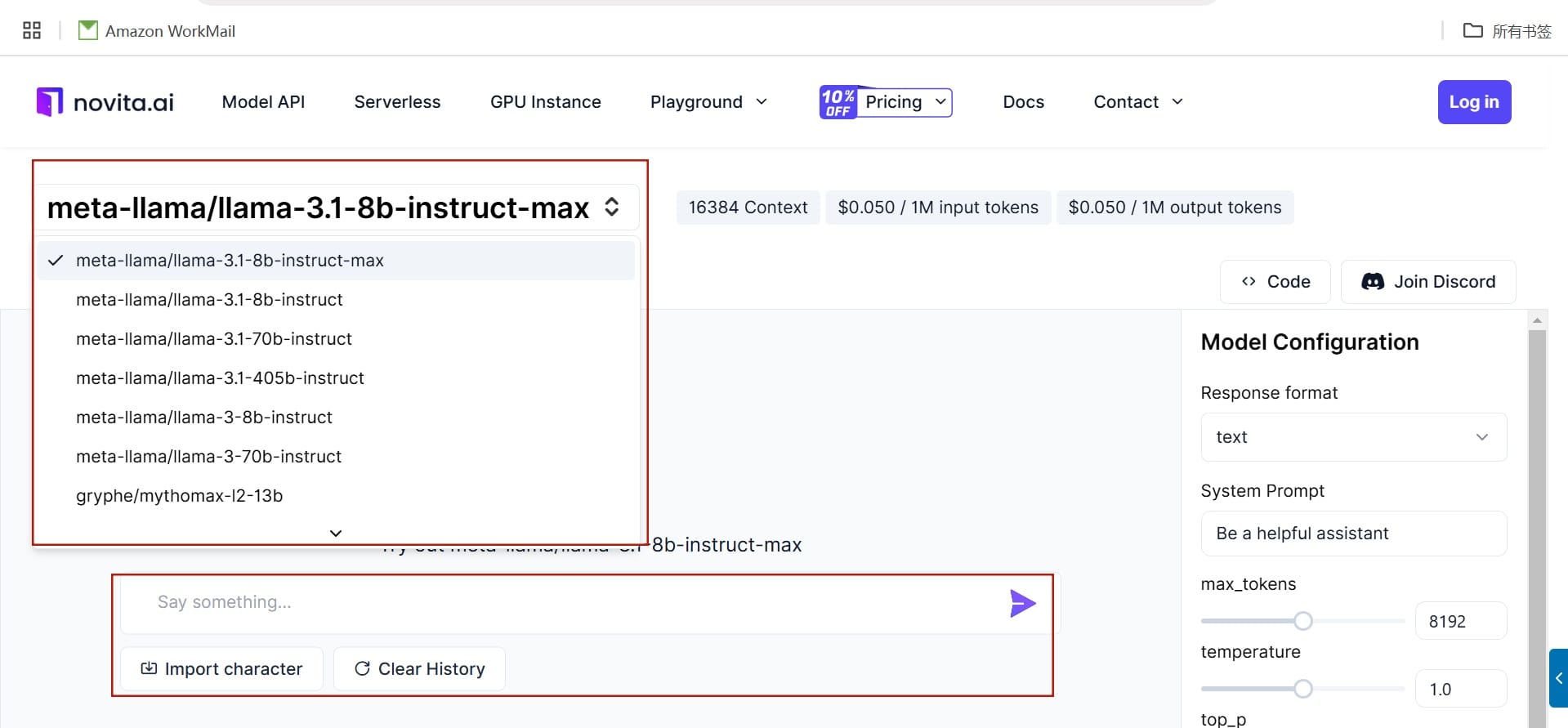

You can also try Novita AI for free on its LLM Playground page, a test page we provide specifically for developers!

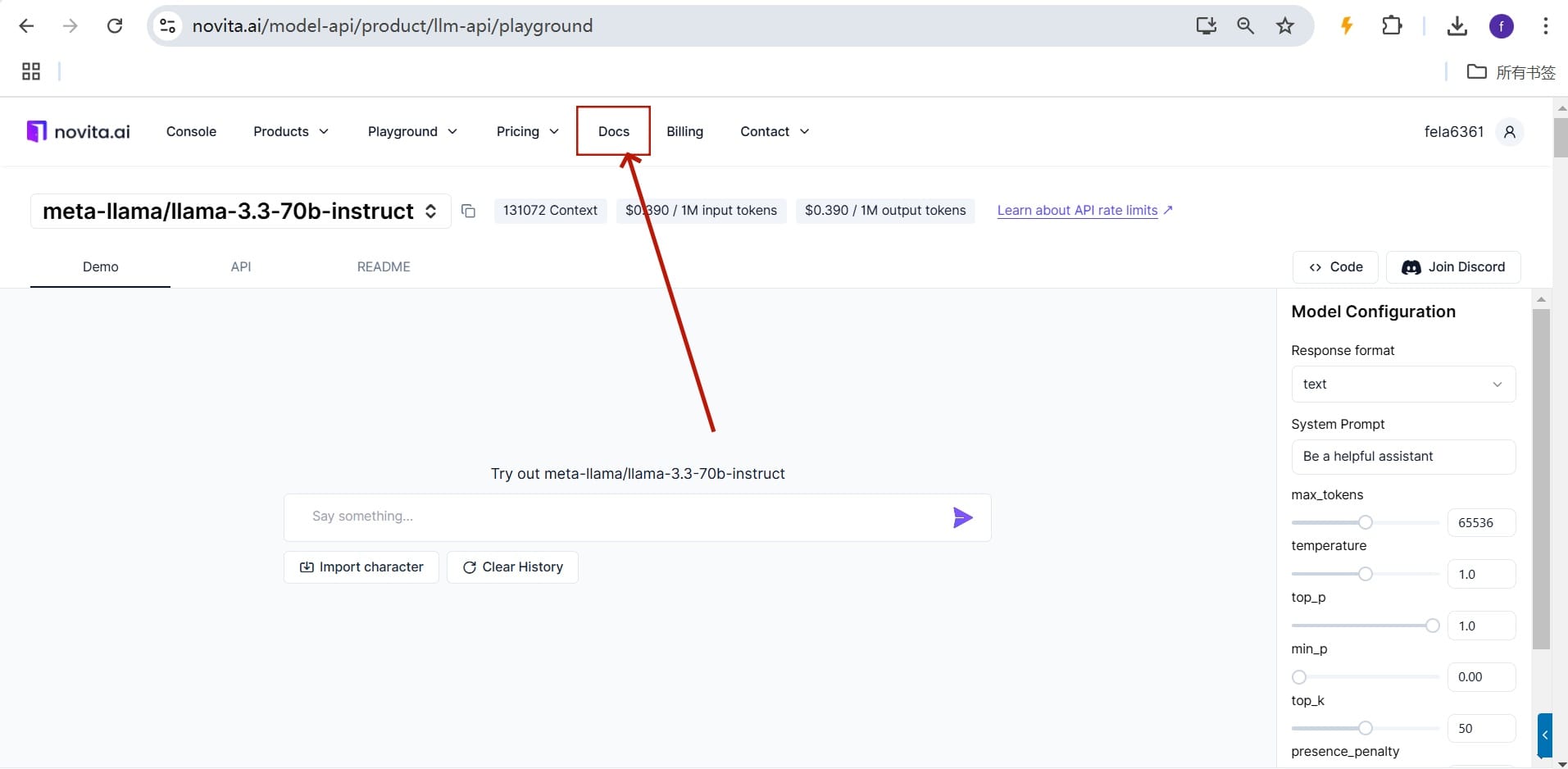

If you think the test results meet your needs, you can go to the Docs page to make an API call!

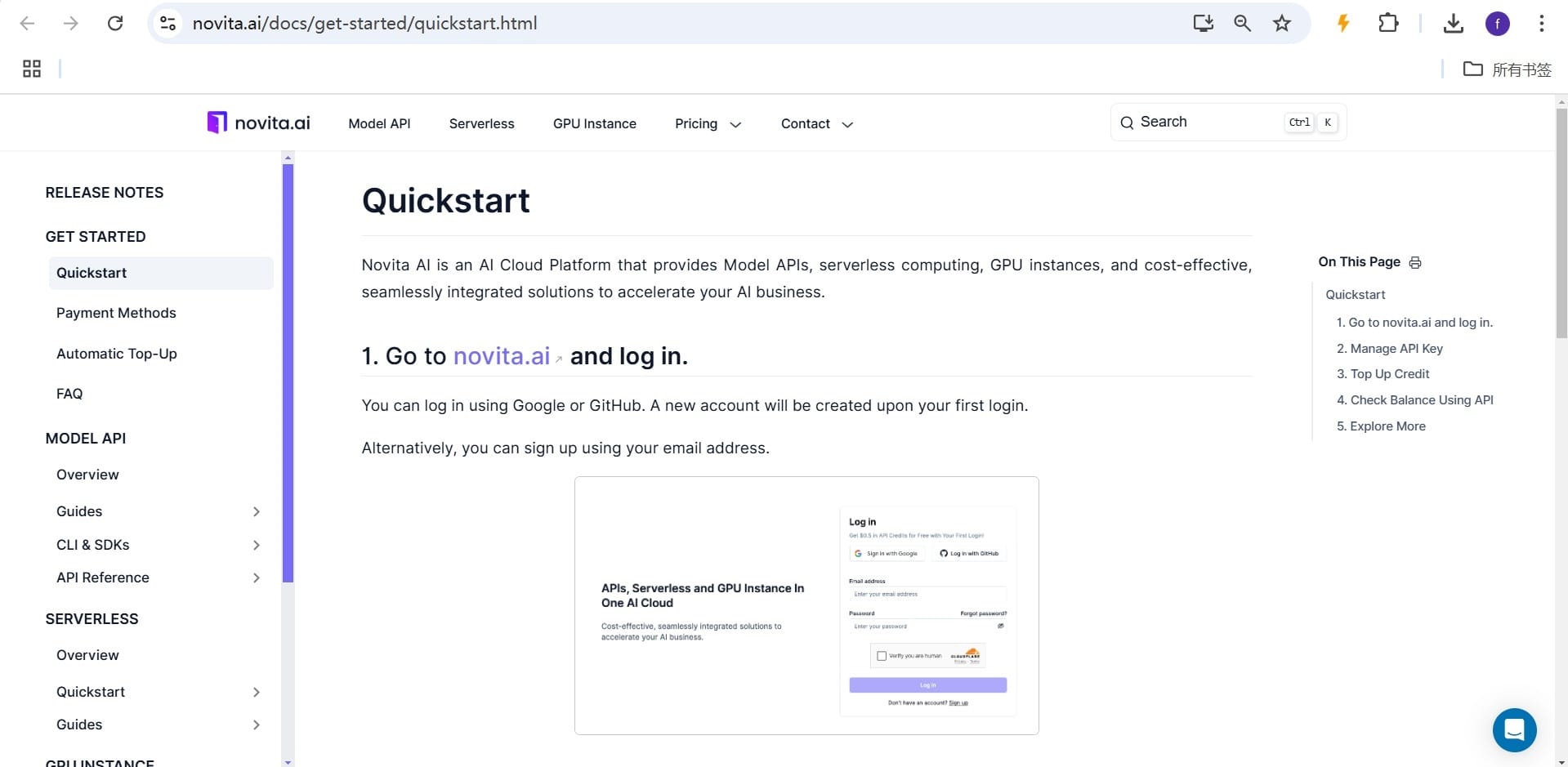

Fortunately, Novita AI gives every new user free credits: upon registration, you receive a $0.5 credit to get started. Once the free credits are used up, you can pay to continue using the service.

Conclusion

Understanding token count in Python is important for saving money on AI projects. By managing how you use tokens, you can lower costs and improve how your applications perform. Methods like simplifying input text, designing better prompts, and choosing affordable APIs like Novita AI can help your project's budget a lot. Stay updated on tokenization tools and libraries, and follow the guide above to count tokens accurately in Python. With these tips, you can make your AI work easier and save money on your projects.

Frequently Asked Questions

How many tokens are in Python?

In AI and NLP, you do not count tokens within Python directly. Instead, you count tokens in the text strings that your Python code works with. The number of tokens in a text string depends on how long the text is and the type of tokenizer you use.
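When a tokenizer is not at hand, a common rule of thumb from OpenAI's documentation is that one token corresponds to roughly four characters of English text. Here is a hedged sketch of that estimate; estimate_tokens is a hypothetical helper, the heuristic is only an approximation for English, and exact counts still require the tokenizer your model actually uses (for example, Tiktoken).

```python
import math

def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    # Rough heuristic for English text only: about 4 characters per token
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)

sample = "This is a sample sentence to count tokens."
print(len(sample), estimate_tokens(sample))  # 42 11
```

Rounding up errs on the side of overestimating, which is the safer direction when checking a request against a token limit or budget.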

How Can Token Counting Lead to Cost Optimization?

Many AI APIs charge based on how many tokens they handle. By keeping track of the tokens you use and trying to use them wisely, you can manage and lower your AI costs.

How do you count tokens?

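You can count tokens with Python libraries such as Tiktoken or NLTK. These libraries split text into tokens, and counting them takes only a line of code. Keep in mind that only the model's own tokenizer (for example, Tiktoken for OpenAI models) gives counts that match what the model sees; NLTK's word tokenizers give an approximation.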

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
