Llama 3.2 90B vs Qwen 2.5 72B: A Comparative Analysis of Coding and Image Reasoning Capabilities

Key Highlights

Llama 3.2 90B Strengths:

A multimodal large language model (LLM) that excels at image reasoning and understanding while also performing well on text-based tasks.

Qwen 2.5 72B Strengths:

A text-based LLM focused on strong performance in coding, mathematics, instruction following, and long-text handling.

Supports over 29 languages

If you’re looking to evaluate Qwen 2.5 72B on your own use cases, Novita AI provides a $0.5 credit upon registration to get you started!

In the rapidly evolving landscape of large language models (LLMs), two notable contenders have emerged: Meta’s Llama 3.2 90B and Alibaba Cloud’s Qwen 2.5 72B. While both models represent significant advancements in AI, they cater to different needs and use cases. This article offers a practical, technical comparison of the two, examining their key features, speed, cost, benchmark performance, and typical applications, to help developers and researchers decide which model best suits their projects.

Basic Introduction to the Models

To begin our comparison, let’s first look at the fundamental characteristics of each model.

Llama 3.2 90B

Release Date: September 25, 2024

Other Models:

Llama 3.2 1B and 3B (text-only)

Llama 3.2 11B (vision)

Key Features:

Multimodal model, supports both text and image inputs

Supports English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai

Qwen 2.5 72B

Release Date: September 19, 2024 (Qwen 2.5 series)

Model Scale:

72 billion parameters (the open-weight Qwen 2.5 lineup ranges from 0.5B to 72B)

Key Features:

Improved performance in coding and mathematics

Enhanced instruction following

Long-text generation of up to 8K tokens, with context lengths of up to 128K tokens

Strong multilingual support for over 29 languages

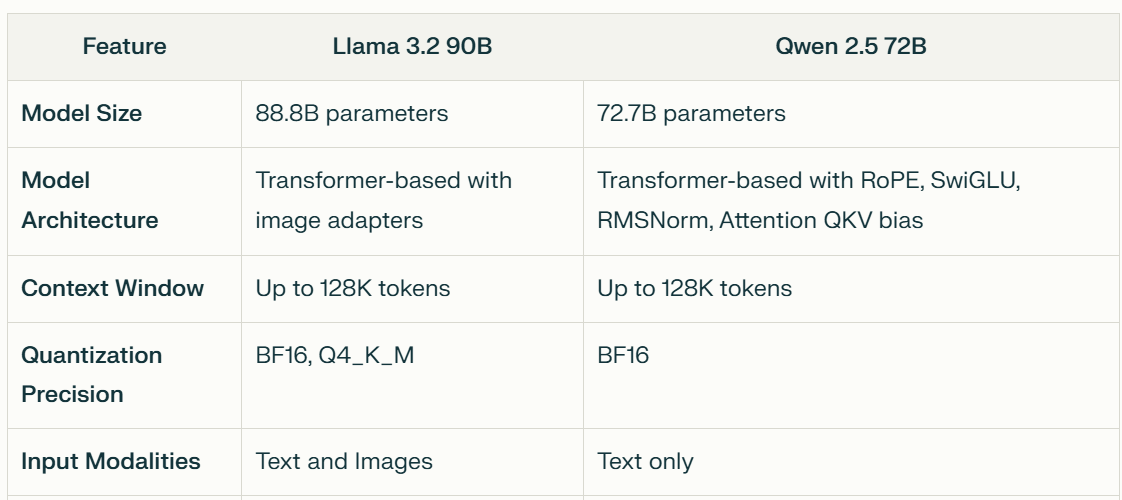

Model Comparison

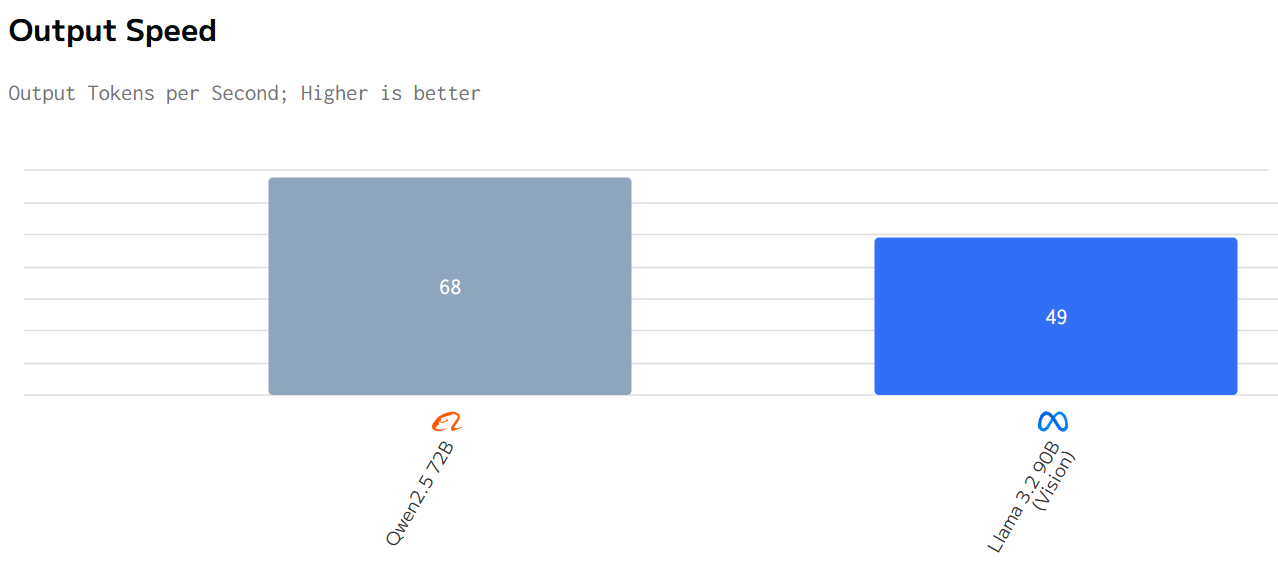

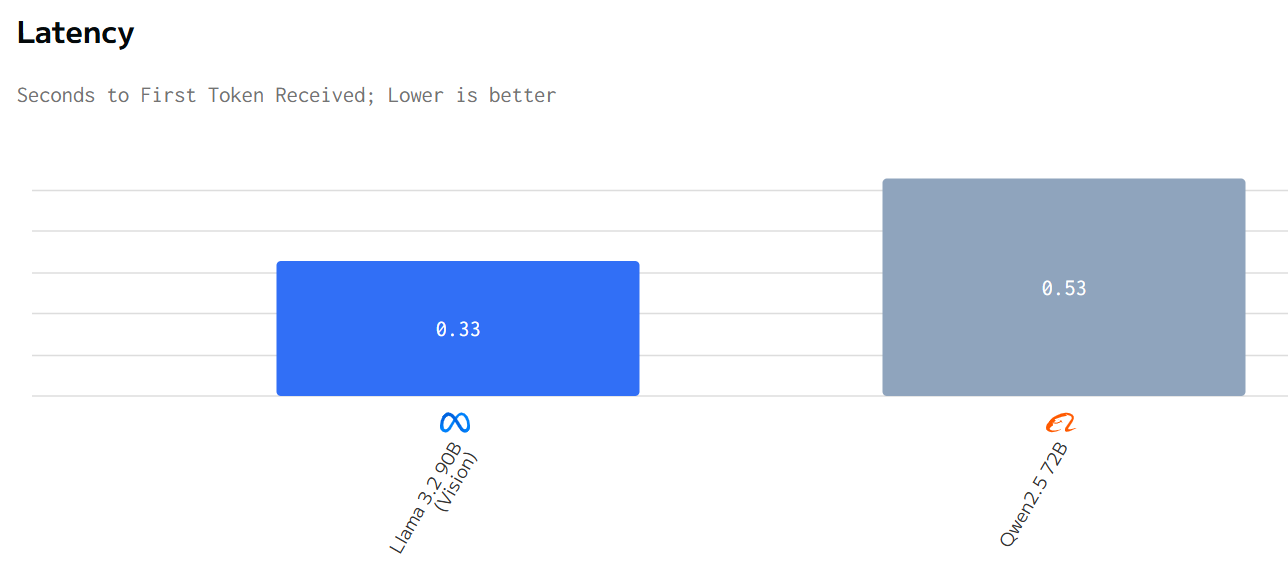

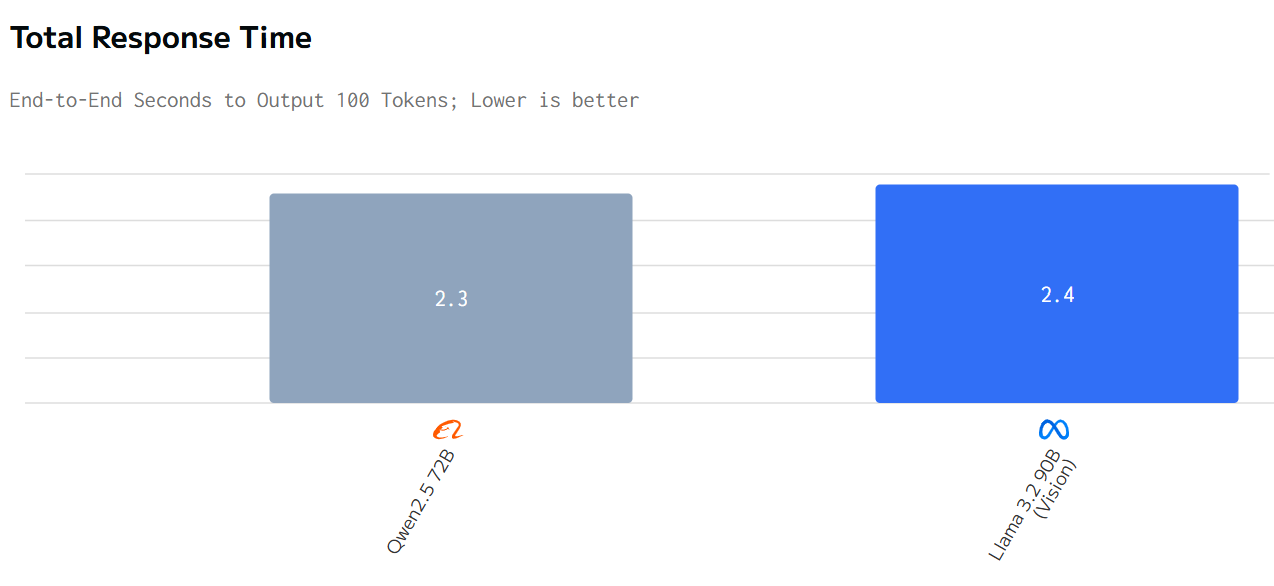

Speed Comparison

If you want to test it yourself, you can start a free trial on the Novita AI website.


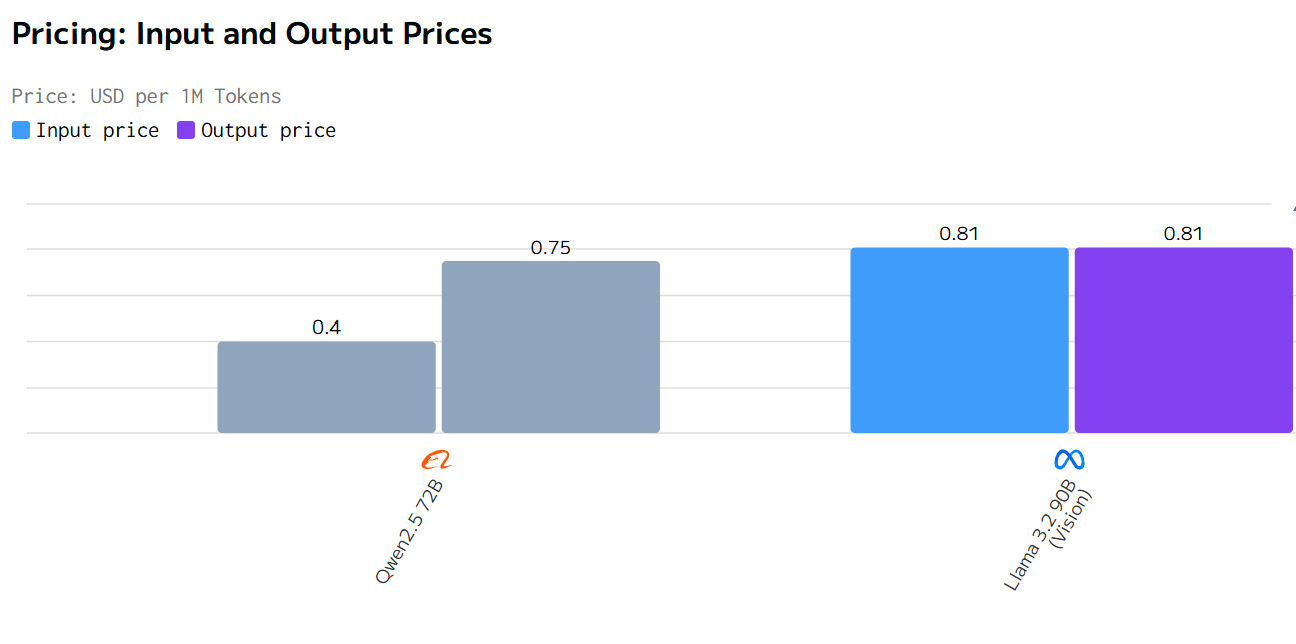
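The speed chart reports latency, output speed, and total response time, which are three different measurements of a single streamed response. As a rough sketch, assuming an OpenAI-compatible streaming response like the one produced by the Novita AI Python example in this article with stream=True, you could measure them yourself as follows. `measure_stream` is a hypothetical helper, and it counts content-bearing chunks as a stand-in for tokens, so its throughput figure only approximates tokens per second.

```python
import time
from typing import Any, Callable, Dict, Iterable, Optional


def measure_stream(
    start_request: Callable[[], Iterable[Any]],
    clock: Callable[[], float] = time.perf_counter,
) -> Dict[str, float]:
    """Time one streamed chat completion (hypothetical helper).

    start_request should send the request and return the stream of chunks,
    so the clock is already running while the request is in flight.
    Content-bearing chunks are counted as a rough proxy for output tokens.
    """
    start = clock()
    first_content_at: Optional[float] = None
    content_chunks = 0
    for chunk in start_request():
        # Some providers send chunks with no choices (e.g. a final usage chunk).
        if not chunk.choices:
            continue
        if chunk.choices[0].delta.content:
            if first_content_at is None:
                first_content_at = clock()  # time to first token ("latency")
            content_chunks += 1
    end = clock()

    total = end - start
    latency = (first_content_at - start) if first_content_at is not None else total
    generation_time = (end - first_content_at) if first_content_at is not None else 0.0
    return {
        "latency_s": latency,
        "total_response_time_s": total,
        "output_chunks": float(content_chunks),
        "output_chunks_per_s": content_chunks / generation_time if generation_time > 0 else 0.0,
    }
```

Passing a callable such as `lambda: client.chat.completions.create(model=model, messages=messages, stream=True)` matters: the OpenAI SDK sends the request as soon as `create` is called, so starting the clock first keeps the latency figure honest. Averaging several runs gives a fairer comparison than a single call.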

Cost Comparison

In summary, Qwen 2.5 72B performs better on total response time, price, and output speed, while Llama 3.2 90B has lower latency.
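To see what a price gap means for your own workload, you can turn per-token prices into a per-request cost. This is a minimal sketch assuming prices are quoted per million input tokens and per million output tokens; the helper names are hypothetical, and no actual prices are hard-coded, so fill in the current figures from the Novita AI pricing page.

```python
from typing import Dict, List, Tuple


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request, given prices per million input/output tokens."""
    return (input_tokens * input_price_per_m + output_tokens * output_price_per_m) / 1_000_000


def compare_costs(input_tokens: int, output_tokens: int,
                  prices: Dict[str, Tuple[float, float]]) -> List[Tuple[str, float]]:
    """Rank models from cheapest to most expensive for the same workload.

    prices maps a model name to (input_price_per_m, output_price_per_m);
    the values are supplied by the caller, not hard-coded here.
    """
    costs = {
        name: request_cost(input_tokens, output_tokens, p_in, p_out)
        for name, (p_in, p_out) in prices.items()
    }
    return sorted(costs.items(), key=lambda item: item[1])
```

Assuming the endpoint fills in the usage field, a non-streaming response from the OpenAI SDK exposes the token counts as `response.usage.prompt_tokens` and `response.usage.completion_tokens`, which can be fed straight into `request_cost`.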

Benchmark Comparison

Now that we’ve established the basic characteristics of each model, let’s delve into their performance across various benchmarks. This comparison will help illustrate their strengths in different areas.

| Benchmark | Llama 3.2 90B (vision) | Qwen 2.5 72B |
|---|---|---|
| MMLU | 84 | 86.8 |
| HumanEval | 80 | 59.1 |
| MATH | 65 | 83.1 |

In summary, Qwen 2.5 72B scores higher on MMLU and MATH, while Llama 3.2 90B (vision) leads on HumanEval. In addition, the specialized Qwen 2.5 variants, Qwen 2.5-Coder and Qwen 2.5-Math, may offer even stronger performance on programming and mathematics tasks, respectively. Because performance varies significantly from task to task, choose a model based on the specific requirements of your workload.
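The per-benchmark winners and margins can be read off the table with a few lines of arithmetic. The scores below are copied from the table; the `leaders` helper is hypothetical. Note that point gaps are only meaningful if both scores were measured under the same evaluation settings, which the table does not state.

```python
# Scores as reported in the benchmark table.
SCORES = {
    "MMLU": {"Llama 3.2 90B (vision)": 84.0, "Qwen 2.5 72B": 86.8},
    "HumanEval": {"Llama 3.2 90B (vision)": 80.0, "Qwen 2.5 72B": 59.1},
    "MATH": {"Llama 3.2 90B (vision)": 65.0, "Qwen 2.5 72B": 83.1},
}


def leaders(scores):
    """Map each benchmark to (leading model, lead in points over the runner-up)."""
    result = {}
    for bench, by_model in scores.items():
        ranked = sorted(by_model.items(), key=lambda kv: kv[1], reverse=True)
        (best, best_score), (_, runner_up) = ranked[0], ranked[1]
        result[bench] = (best, round(best_score - runner_up, 1))
    return result


if __name__ == "__main__":
    for bench, (model, gap) in leaders(SCORES).items():
        print(f"{bench}: {model} leads by {gap} points")
```

Running it prints a 2.8-point Qwen lead on MMLU, a 20.9-point Llama lead on HumanEval, and an 18.1-point Qwen lead on MATH.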

If you want to learn more about Llama 3.3’s benchmarks and how it compares with other models, check out these articles:

Qwen 2.5 72b vs Llama 3.3 70b: Which Model Suits Your Needs?

Llama 3.1 70b vs. Llama 3.3 70b: Better Performance, Higher Price

Applications and Use Cases

Llama 3.2 90B:

Image understanding and reasoning

Image captioning

Document-level understanding including charts and graphs

Visual grounding tasks

Real-time language translation with visual inputs
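For the image-based use cases above, a request to Llama 3.2 90B has to carry the image alongside the text. A minimal sketch, assuming the Novita AI endpoint accepts OpenAI-style `image_url` content parts (including base64 data URLs) for the Llama 3.2 90B vision model; both helper names are hypothetical.

```python
import base64
from pathlib import Path
from typing import Any, Dict, List


def image_file_to_data_url(path: str, mime_type: str = "image/png") -> str:
    """Encode a local image file as a base64 data URL."""
    encoded = base64.b64encode(Path(path).read_bytes()).decode("ascii")
    return f"data:{mime_type};base64,{encoded}"


def build_vision_messages(prompt: str, image_url: str) -> List[Dict[str, Any]]:
    """Pair a text prompt with one image in the OpenAI-style content-part format."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]
```

You would then pass `messages=build_vision_messages("Summarize this chart.", image_file_to_data_url("chart.png"))` (with `chart.png` standing in for your own file) to `client.chat.completions.create`, together with the Llama 3.2 90B model ID shown in the Novita AI Model Library.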

Qwen 2.5 72B:

Multilingual chatbots and assistants

Coding assistance and code generation

Synthetic data generation

Multilingual content creation and localization

Knowledge-based applications like question answering
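Since Qwen 2.5 72B accepts only text while Llama 3.2 90B also accepts images, one simple way to act on these use-case lists is to route each request by whether it contains an image. This is a deliberately crude heuristic sketched under assumptions: the model IDs are hypothetical placeholders, messages follow the OpenAI chat format, and a real router would likely also weigh the task type, since the benchmark table shows neither model winning everywhere.

```python
from typing import Any, Dict, List

# Hypothetical placeholders: copy the exact model IDs from the Novita AI Model Library.
VISION_MODEL = "<Llama 3.2 90B model ID>"
TEXT_MODEL = "<Qwen 2.5 72B model ID>"


def contains_image(messages: List[Dict[str, Any]]) -> bool:
    """True if any message uses an OpenAI-style image_url content part."""
    for message in messages:
        content = message.get("content")
        if isinstance(content, list) and any(
            isinstance(part, dict) and part.get("type") == "image_url" for part in content
        ):
            return True
    return False


def pick_model(messages: List[Dict[str, Any]]) -> str:
    """Send image requests to the multimodal model and text-only requests to Qwen 2.5 72B."""
    return VISION_MODEL if contains_image(messages) else TEXT_MODEL
```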

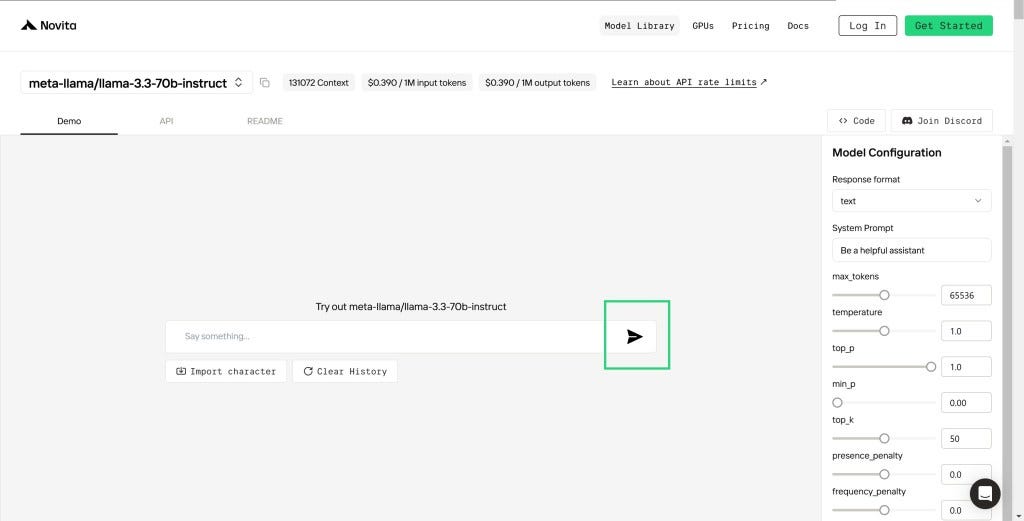

Accessibility and Deployment through Novita AI

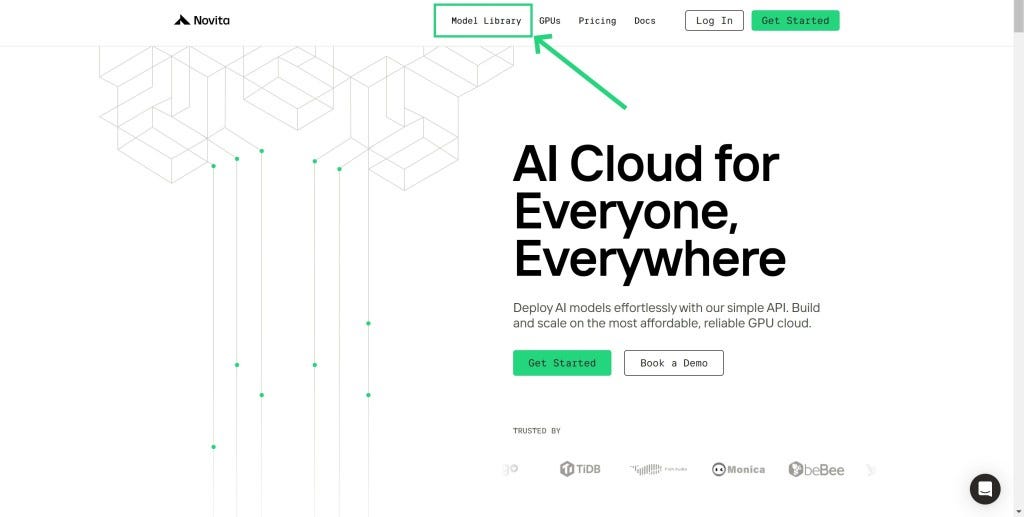

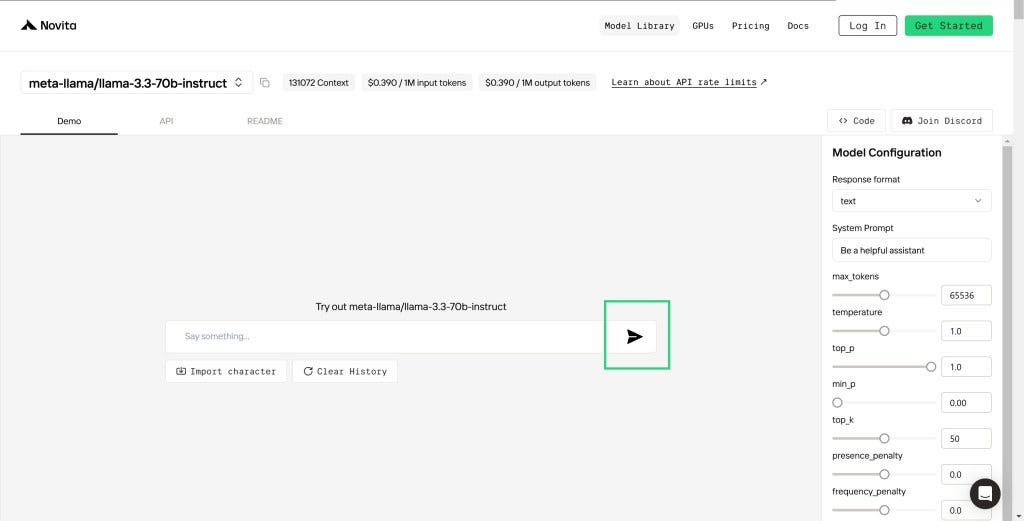

Step 1: Log In and Access the Model Library

Log in to your account and click on the Model Library button.

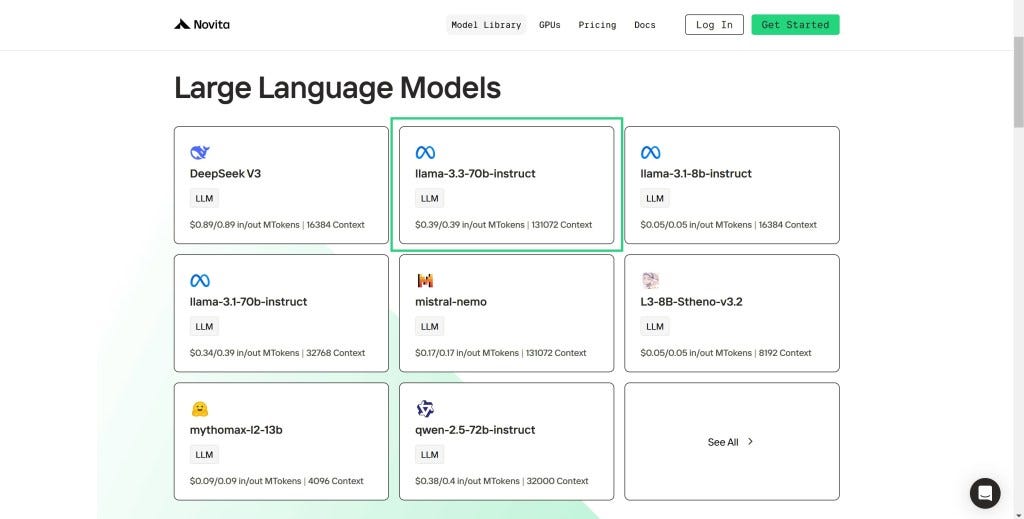

Step 2: Choose Your Model

Browse through the available options and select the model that suits your needs.
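If you prefer to browse from code rather than the web UI, and assuming the Novita AI endpoint supports the OpenAI-compatible model-listing route, you could fetch the available IDs with the OpenAI SDK’s `client.models.list()` and narrow them down with a small helper like this hypothetical one:

```python
from typing import Iterable, List


def filter_model_ids(model_ids: Iterable[str], keyword: str) -> List[str]:
    """Return the model IDs containing keyword (case-insensitive), sorted."""
    needle = keyword.lower()
    return sorted(model_id for model_id in model_ids if needle in model_id.lower())
```

For example, `filter_model_ids((m.id for m in client.models.list()), "qwen")` would list every model whose ID mentions Qwen.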

Step 3: Start Your Free Trial

Begin your free trial to explore the capabilities of the selected model.

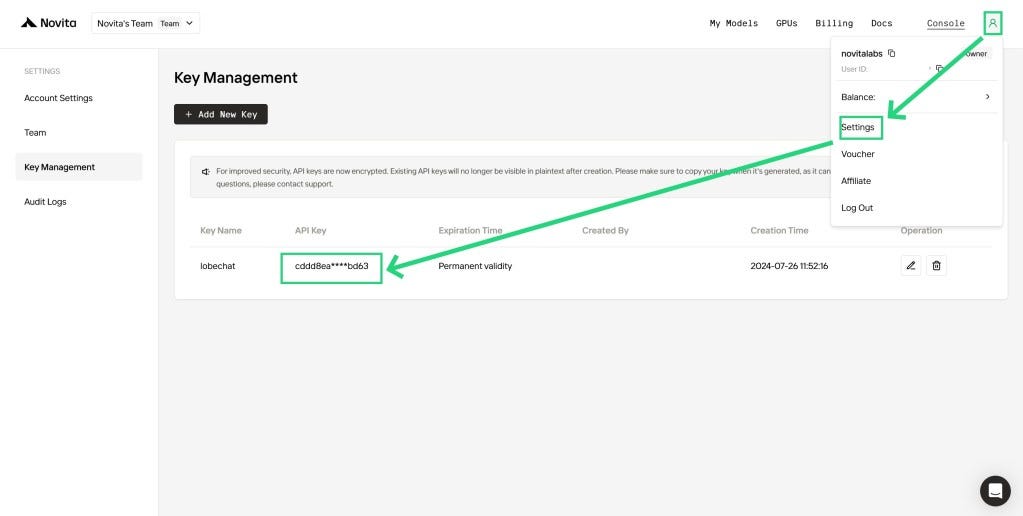

Step 4: Get Your API Key

To authenticate with the API, Novita AI provides you with a new API key. Go to the “Settings” page and copy the API key as indicated in the image.

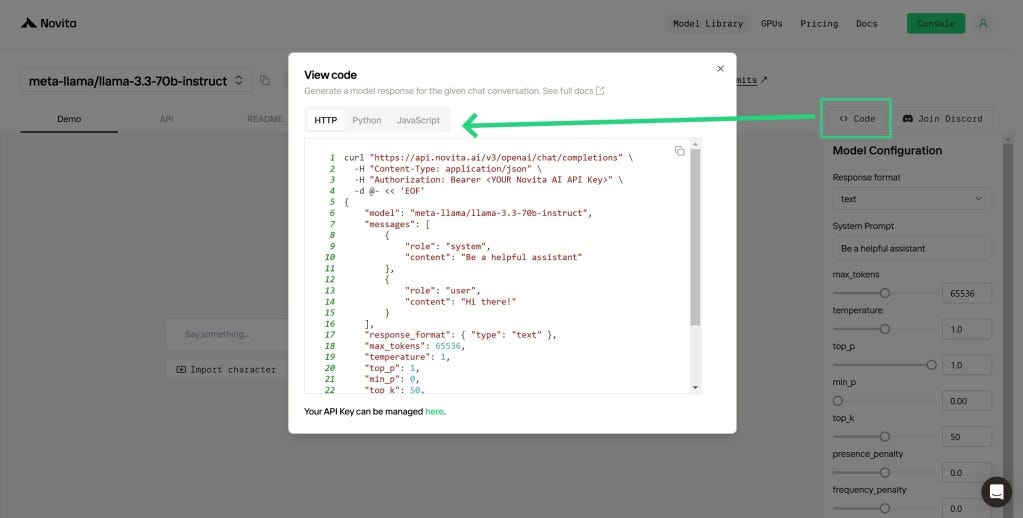
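Rather than pasting the key into source files, you can keep it in an environment variable. A minimal sketch; the variable name `NOVITA_API_KEY` and the `load_api_key` helper are our own choices, not something the platform requires:

```python
import os


def load_api_key(var_name: str = "NOVITA_API_KEY") -> str:
    """Read the API key from an environment variable instead of hard-coding it."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {var_name} environment variable to your Novita AI API key.")
    return key
```

The client can then be created with `OpenAI(base_url="https://api.novita.ai/v3/openai", api_key=load_api_key())`, and failing fast with a clear message beats a confusing authentication error later.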

Step 5: Install the API

Install the API client using the package manager for your programming language. Because Novita AI exposes an OpenAI-compatible endpoint, Python users can install the official OpenAI SDK with `pip install openai`.

After installation, import the necessary libraries into your development environment and initialize the client with your API key to start interacting with Novita AI’s LLM API. Here is an example of using the Chat Completions API in Python.

```python
from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
    api_key="<YOUR Novita AI API Key>",
)

# Model ID from the original example; confirm the exact ID in the Novita AI Model Library.
model = "qwen/qwen-2.5-72B"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Print each streamed fragment as it arrives, without adding newlines between chunks.
    for chunk in chat_completion_res:
        if chunk.choices:
            print(chunk.choices[0].delta.content or "", end="", flush=True)
    print()
else:
    print(chat_completion_res.choices[0].message.content)
```
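The Chat Completions API is stateless: each request must include the earlier turns for the model to see them. For the multilingual chatbot use case, a small wrapper can keep that history for you. A minimal sketch; `ChatSession` is a hypothetical helper, and the `send` function is injected so the class works with any client:

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class ChatSession:
    """Keep conversation history for a stateless chat-completions endpoint (hypothetical helper)."""

    def __init__(self, send: Callable[[List[Message]], str],
                 system_prompt: str = "Act like you are a helpful assistant.") -> None:
        self.send = send
        self.messages: List[Message] = [{"role": "system", "content": system_prompt}]

    def ask(self, user_text: str) -> str:
        # Append the user turn, send the full history, then record the reply.
        self.messages.append({"role": "user", "content": user_text})
        reply = self.send(self.messages)
        self.messages.append({"role": "assistant", "content": reply})
        return reply
```

With the client, model, and max_tokens from the example above, `send` could be `lambda msgs: client.chat.completions.create(model=model, messages=msgs, max_tokens=max_tokens).choices[0].message.content`. Keep in mind that the resent history counts as input tokens on every turn, so long conversations cost more per request.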

Upon registration, Novita AI provides a $0.5 credit to get you started!

If your free credits run out, you can pay to continue using the service.

Both Qwen 2.5 72B and Llama 3.2 90B offer unique advantages tailored to different use cases. Qwen 2.5 72B excels in text-based tasks requiring strong multilingual capabilities, mathematics, and instruction following, with an emphasis on efficiency, while Llama 3.2 90B shines in multimodal applications involving image understanding.

Frequently Asked Questions

How is Llama 3.3 different from Llama 3.2?

Llama 3.3 is optimized for text tasks, excelling in multilingual capabilities, while Llama 3.2 is multimodal, handling both images and text.

Can Llama 3.3 run on standard developer hardware?

Yes, it is designed to run on common GPUs and developer-grade workstations.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. With integrated APIs, serverless deployment, and GPU instances, it provides the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.