Key Highlights

Can Llama 3.3 70B match Llama 3.1 405B? The answer is YES!

Llama 3.3 70B demonstrates performance comparable to the larger Llama 3.1 405B, but with significantly lower computational requirements.

If you're looking to evaluate Llama 3.3 70B on your own use cases, Novita AI provides a $0.5 credit upon registration to get you started!

The world of language models keeps changing and bringing us smarter AI, but that pace can make these tools hard to use. Meta AI's new model, Llama 3.3 70B, is here to help. This strong model performs comparably to the much bigger Llama 3.1 405B while needing far less powerful hardware. In this review, we take a closer look at Llama 3.3 70B and check its abilities through benchmarks to see whether it really is comparable to Llama 3.1 405B.

Basic Introduction of Models

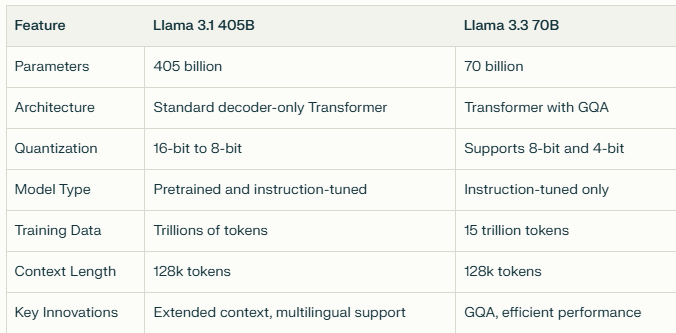

To begin our comparison, let's first look at the fundamental characteristics of each model.

Llama 3.3 70B

Release Date: December 6, 2024

Model Scale: 70 billion parameters

Key Features:

Uses Grouped-Query Attention (GQA) to improve inference efficiency

Uses Reinforcement Learning from Human Feedback (RLHF) as part of its training process

Can run on common developer workstations (typically with quantization), so developers can test and share AI applications on their own machines

Supports 8 languages

128K token context window
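To show why GQA helps efficiency, here is a minimal sketch: several query heads share one key/value (KV) head, which shrinks the KV cache that must stay in GPU memory during generation. The layer and head counts below are assumptions based on the commonly published Llama 3 70B configuration (80 layers, 64 query heads, 8 KV heads, head dimension 128) and should be checked against the model's own config file; the 16-bit cache precision and the 8,192-token sequence length are also illustrative assumptions.

```python
def kv_head_for_query_head(q_head: int, num_q_heads: int, num_kv_heads: int) -> int:
    """Index of the shared KV head that a given query head uses under GQA."""
    group_size = num_q_heads // num_kv_heads  # query heads per KV head
    return q_head // group_size


def kv_cache_bytes(num_layers: int, num_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_value: int = 2) -> int:
    """Memory to cache keys and values for one sequence (the leading 2 = K and V)."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Assumed Llama 3 70B-style shape; verify against the model's config.json.
NUM_LAYERS = 80
NUM_Q_HEADS = 64
NUM_KV_HEADS = 8
HEAD_DIM = 128
SEQ_LEN = 8192  # example sequence length, well under the 128K limit

gqa_bytes = kv_cache_bytes(NUM_LAYERS, NUM_KV_HEADS, HEAD_DIM, SEQ_LEN)
# Hypothetical baseline: plain multi-head attention, one KV head per query head.
mha_bytes = kv_cache_bytes(NUM_LAYERS, NUM_Q_HEADS, HEAD_DIM, SEQ_LEN)

print(f"GQA KV cache: {gqa_bytes / 2**30:.1f} GiB")
print(f"MHA KV cache: {mha_bytes / 2**30:.1f} GiB")
print(f"Query head 7 uses KV head {kv_head_for_query_head(7, NUM_Q_HEADS, NUM_KV_HEADS)}")
```

Under these assumptions the cache for one 8,192-token sequence is 2.5 GiB with 8 KV heads, versus 20 GiB if each of the 64 query heads had its own KV head: an 8x reduction.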

Llama 3.1 405B

Release Date: July 23, 2024

Other Llama 3.1 Models: 8B and 70B

Key Features:

Supports 8 languages

128K token context window

Model Comparison

In summary:

Advantages of Llama 3.3 70B: It is efficient and strong at instruction following, so for many tasks it can deliver similar or better results with far fewer computational resources.

Advantages of Llama 3.1 405B: With a much larger parameter count, it may have an edge in handling more complex tasks and providing broader knowledge, though it requires considerably more computational resources.
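A back-of-the-envelope calculation shows how strongly parameter count drives hardware needs. This sketch counts only the memory required to hold the weights (parameters × bytes per parameter); it ignores the KV cache, activations, and runtime overhead, and the precisions listed are common inference choices, not requirements of either model.

```python
# Bytes used to store one parameter at common inference precisions.
BYTES_PER_PARAM = {"fp16/bf16": 2.0, "int8": 1.0, "int4": 0.5}

MODELS = {"Llama 3.3 70B": 70e9, "Llama 3.1 405B": 405e9}


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


for name, params in MODELS.items():
    sizes = ", ".join(
        f"{precision}: {weight_memory_gb(params, b):.1f} GB"
        for precision, b in BYTES_PER_PARAM.items()
    )
    print(f"{name}: {sizes}")
```

For example, 70 billion parameters at 2 bytes each need about 140 GB, while 405 billion need about 810 GB at the same precision, which is why the smaller model is far easier to host.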

Benchmark Comparison

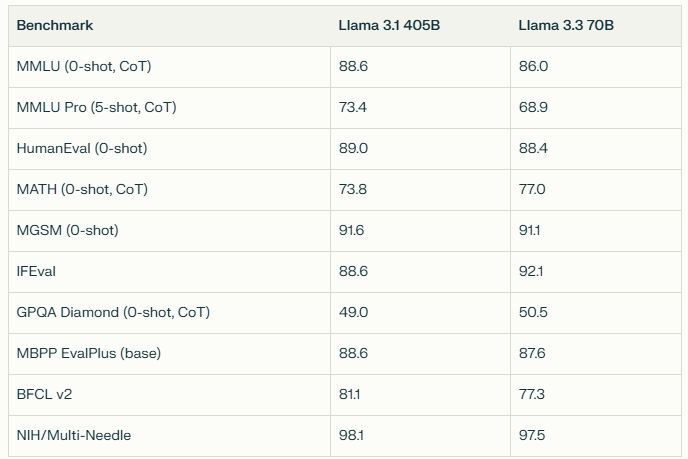

Now that we’ve established the basic characteristics of each model, let’s delve into their performance across various benchmarks. This comparison will help illustrate their strengths in different areas.

Summary:

Llama 3.3 70B achieves comparable or superior performance in specific areas despite having fewer parameters (70B vs 405B).

Llama 3.3 70B shows notable gains in mathematical reasoning and instruction following, where it outperforms Llama 3.1 405B.

Llama 3.1 405B maintains a slight edge in general knowledge and coding tasks.

The performance gap between the two models is relatively small, indicating that Llama 3.3 70B offers a more efficient alternative for many tasks.

If you would like to learn more about Llama 3.3's benchmark results, you can read this article:

If you want to see more comparisons between Llama 3.3 and other models, check out these articles:

Qwen 2.5 72b vs Llama 3.3 70b: Which Model Suits Your Needs?

Llama 3.1 70b vs. Llama 3.3 70b: Better Performance, Higher Price

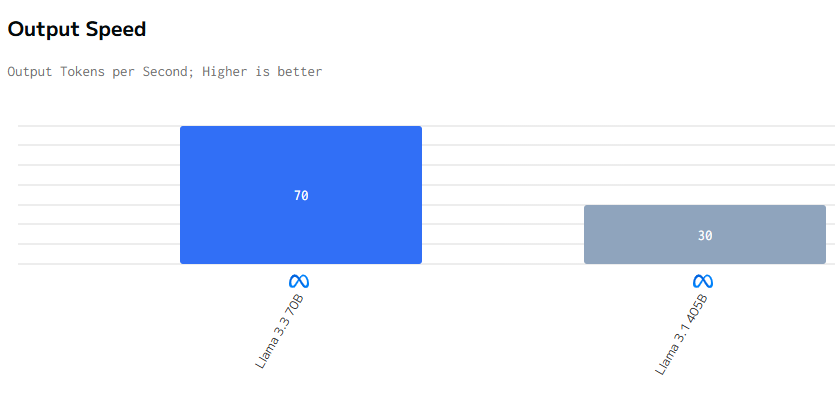

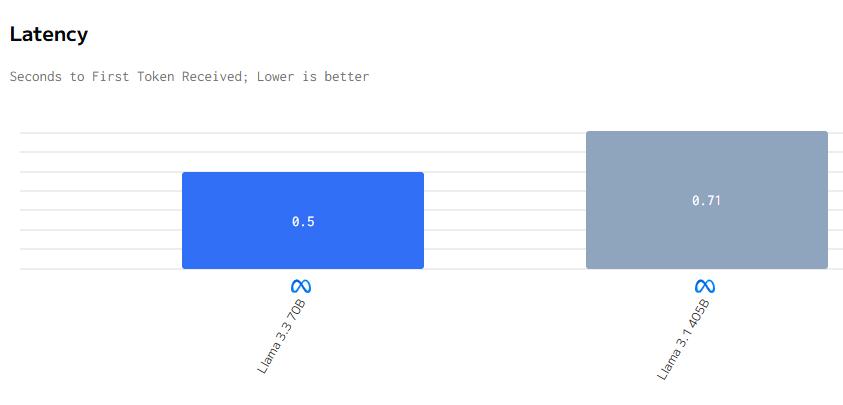

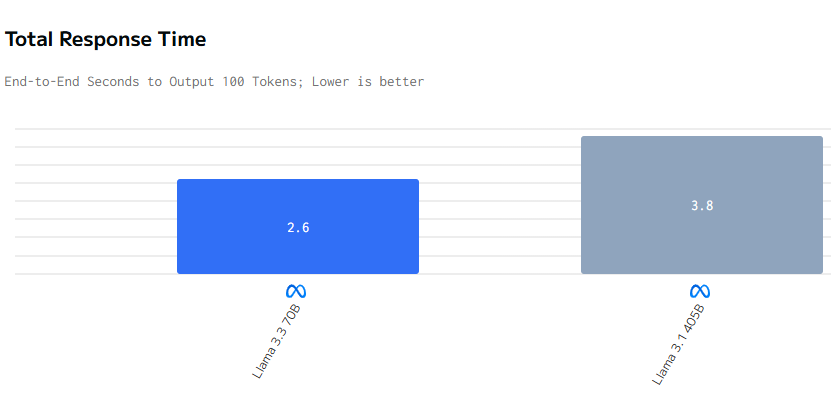

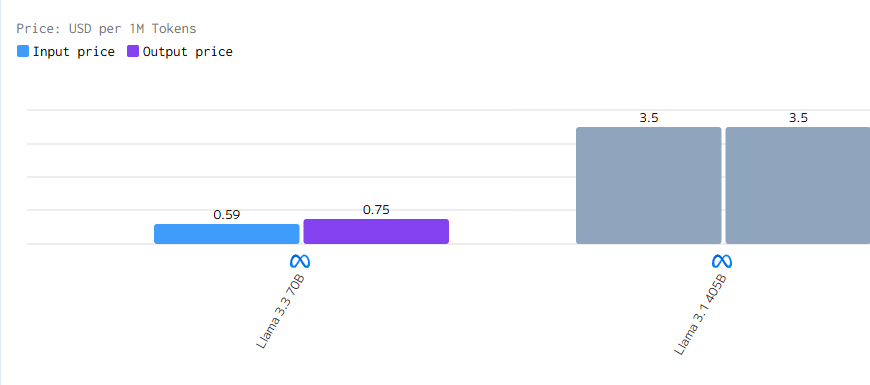

Speed and Cost Comparison

If you want to test the speed of Llama 3.3 yourself, you can start a free trial on Novita AI!
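For a quick speed test of your own, a small timing helper is enough. The sketch below measures time to the first piece of text and the overall rate of a streamed reply. It accepts any iterable of strings, so it runs offline here with a fake clock; to time a real response, such as the streamed one from the Python example later in this article, pass a generator like `(c.choices[0].delta.content or "" for c in response if c.choices)`. Counting chunks instead of tokens is an assumption: providers often send about one token per chunk, but this is not guaranteed.

```python
import time
from typing import Callable, Iterable, Optional


def measure_stream(pieces: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Time a streamed reply: seconds to the first non-empty piece and pieces per second.

    Streamed chunks are a rough stand-in for tokens (an assumption:
    a chunk often, but not always, carries a single token).
    """
    start = clock()
    ttft: Optional[float] = None
    count = 0
    for text in pieces:
        if not text:
            continue  # skip empty chunks (e.g. role-only or keep-alive pieces)
        if ttft is None:
            ttft = clock() - start  # time to first non-empty piece
        count += 1
    total = clock() - start
    rate = count / total if total > 0 else float("inf")
    return {"ttft_s": ttft, "chunks": count, "total_s": total, "chunks_per_s": rate}


# Offline demo with a fake clock so the numbers are reproducible.
fake_times = iter([0.0, 0.25, 2.0])
stats = measure_stream(["", "Hello", " there", "!", " How can I help?"],
                       clock=lambda: next(fake_times))
print(stats)
```

Injecting the clock keeps the helper testable; in real use the default `time.perf_counter` is a monotonic, high-resolution timer suited to measuring intervals.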

Speed Comparison

Cost Comparison

These improvements make Llama 3.3 70B a more cost-effective and efficient option for many applications, especially text-based tasks such as multilingual chat, coding, and synthetic data generation.

Applications and Use Cases

Llama 3.3 70B:

Multilingual chatbots and assistants

Coding support

Synthetic data generation

Multilingual content creation and localization

Research and experimentation

Knowledge-based applications

Flexible deployment

Llama 3.1 405B:

Large-scale synthetic data generation

Model distillation

Advanced research and experimentation

Industry-specific solutions

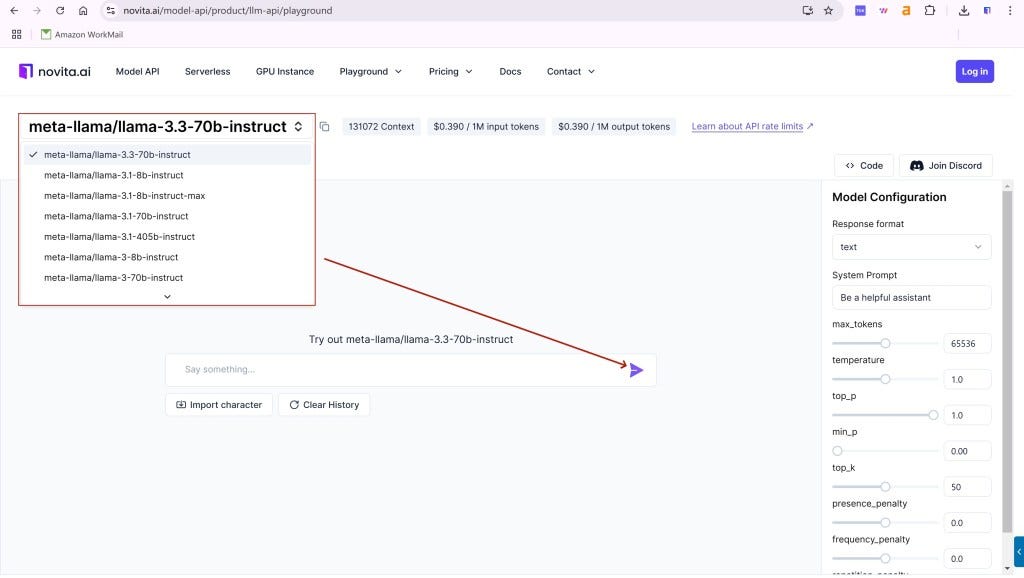

Accessibility and Deployment through Novita AI

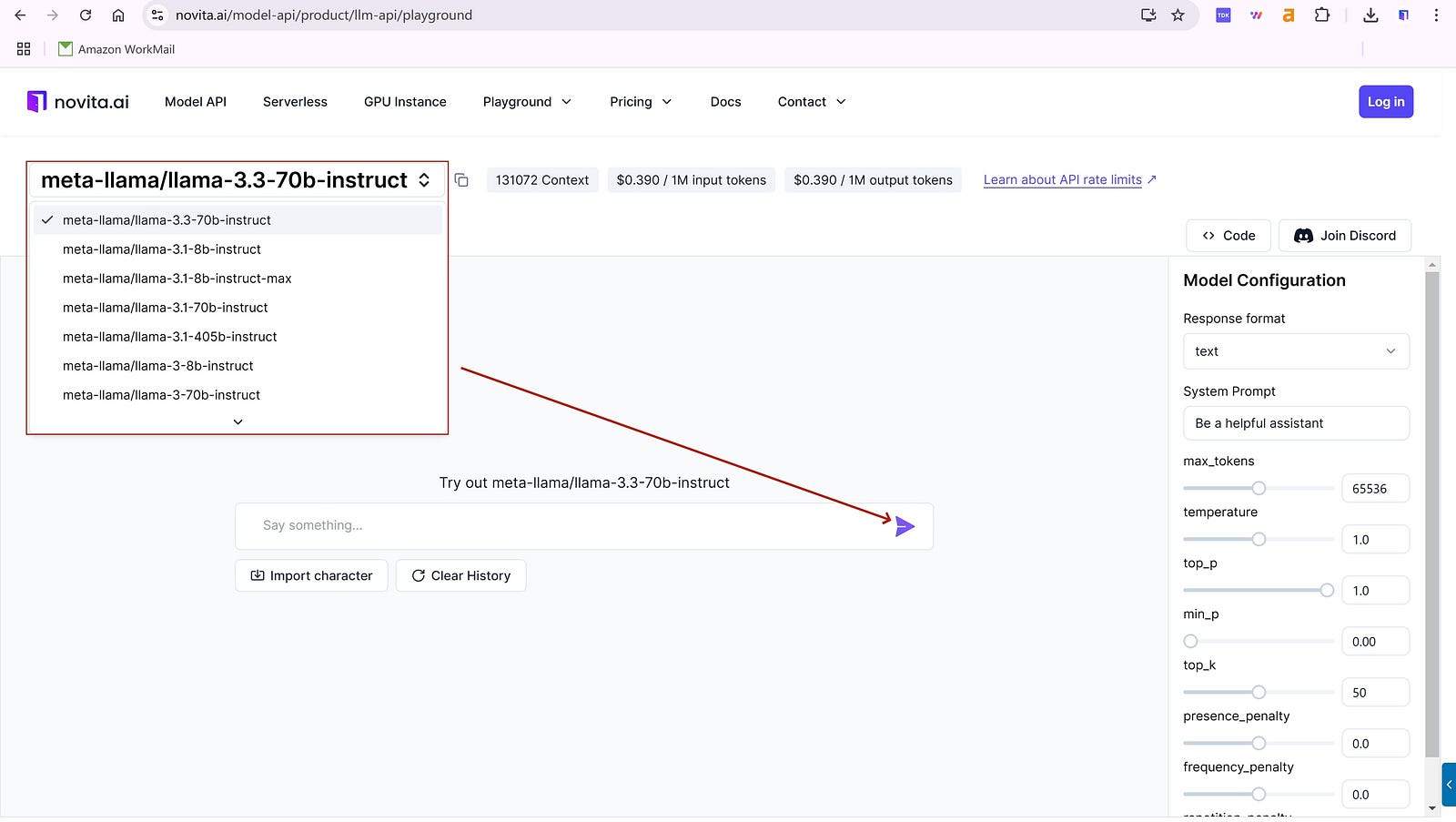

Novita AI offers an affordable, reliable, and simple inference platform with a scalable Llama 3.3 70B API, empowering developers to build AI applications.

Step 1: Log in and start a free trial!

Go to Novita AI's LLM Playground page for a free trial. This test page is provided specifically for developers. Select the model you want from the list; here, choose Llama 3.3 70B.

Step 2: If the trial goes well, you can start calling the API!

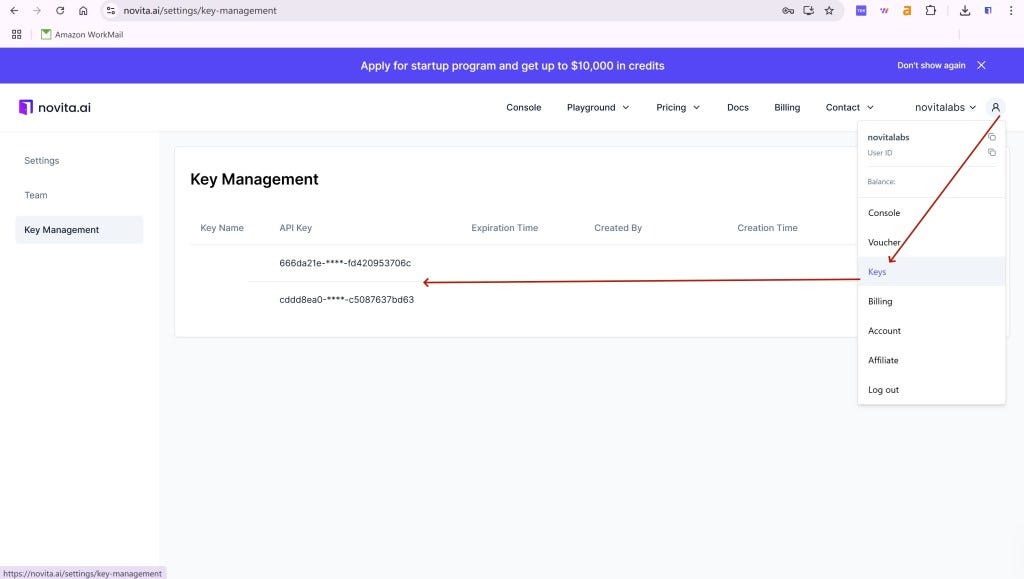

Click "API Key" in the menu. To authenticate with the API, Novita AI provides you with a new API key. On the "Keys" page, you can copy the API key as indicated in the image.
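Rather than pasting the key into source files, a common practice is to keep it in an environment variable and read it at startup. A minimal sketch follows; the variable name `NOVITA_API_KEY` is a hypothetical choice, not something Novita AI requires.

```python
import os


def load_api_key(var_name: str = "NOVITA_API_KEY") -> str:
    """Read the API key from an environment variable (the default name is hypothetical)."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Novita AI API key."
        )
    return key


if __name__ == "__main__":
    # Example: run `export NOVITA_API_KEY="..."` in your shell first.
    try:
        print("API key loaded:", load_api_key()[:4] + "...")
    except RuntimeError as err:
        print(err)
```

The returned string can then be passed as `api_key=load_api_key()` when creating the client, so the key never appears in your code or version control.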

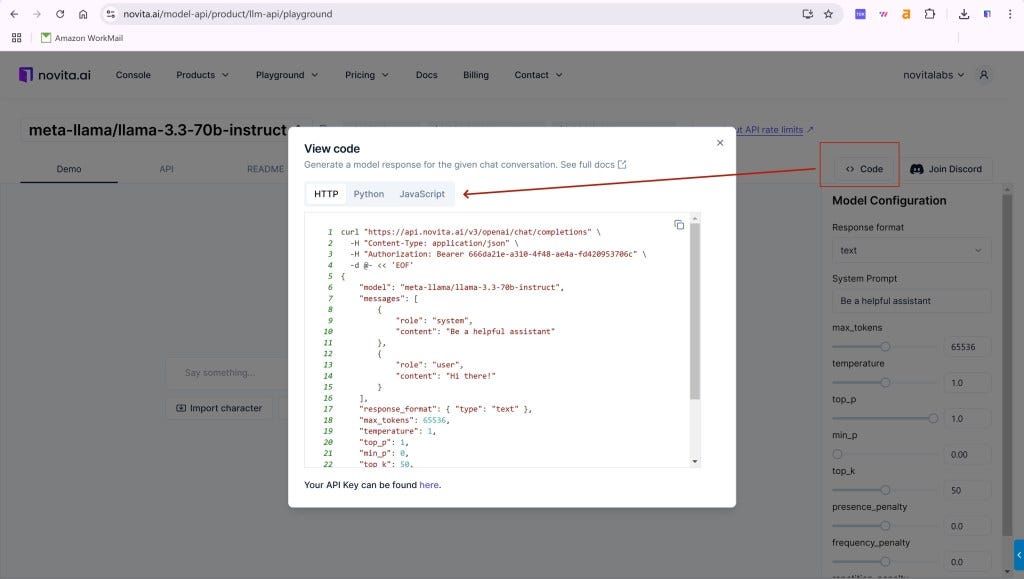

Navigate to "API" and find "LLM" under the "Playground" tab. Install a client library using the package manager for your programming language; the Python example in Step 3 uses the OpenAI-compatible openai package (for example, pip install openai).

Step 3: Begin interacting with the model!

After installation, import the necessary libraries into your development environment and initialize the client with your API key to start interacting with Novita AI's LLMs. Here is an example using the Chat Completions API.

```python
from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
    api_key="<YOUR Novita AI API Key>",
)

model = "meta-llama/llama-3.3-70b-instruct"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Each streamed chunk carries a small piece of the reply; print the pieces
    # on one line as they arrive (skipping any chunk without choices).
    for chunk in chat_completion_res:
        if chunk.choices:
            print(chunk.choices[0].delta.content or "", end="", flush=True)
    print()
else:
    print(chat_completion_res.choices[0].message.content)
```
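The messages list is what gives the model its conversation context: OpenAI-style Chat Completions endpoints are typically stateless, so a multi-turn chatbot resends earlier turns with every request. The sketch below keeps that history in a small class. The class name and the trimming rule (keep the system prompt plus the most recent turns, starting from a user turn) are illustrative assumptions, not part of the Novita AI or OpenAI SDKs; the network call appears only in a comment so the example runs offline.

```python
from typing import Dict, List


class ChatHistory:
    """Hypothetical helper that accumulates turns for an OpenAI-style messages list."""

    def __init__(self, system_prompt: str, max_turns: int = 10) -> None:
        self.system = {"role": "system", "content": system_prompt}
        self.turns: List[Dict[str, str]] = []
        self.max_turns = max_turns  # user/assistant pairs to keep (illustrative limit)

    def add_user(self, text: str) -> None:
        self.turns.append({"role": "user", "content": text})

    def add_assistant(self, text: str) -> None:
        self.turns.append({"role": "assistant", "content": text})

    def messages(self) -> List[Dict[str, str]]:
        """System prompt followed by the most recent turns, oldest first."""
        recent = self.turns[-2 * self.max_turns:]
        # Start the kept window on a user turn so roles still alternate cleanly.
        while recent and recent[0]["role"] != "user":
            recent = recent[1:]
        return [self.system] + recent


history = ChatHistory("Act like you are a helpful assistant.", max_turns=2)
history.add_user("Hi there!")
history.add_assistant("Hello! How can I help?")
history.add_user("What languages do you support?")
# With a real client you would then call:
#   reply = client.chat.completions.create(model=model, messages=history.messages())
#   history.add_assistant(reply.choices[0].message.content)
print([m["role"] for m in history.messages()])
```

Trimming old turns keeps each request within the context window and limits the number of input tokens you pay for, at the cost of the model forgetting the oldest parts of the conversation.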

Upon registration, Novita AI provides a $0.5 credit to get you started!

Once the free credit is used up, you can pay to continue using the service.

Llama 3.3 70B represents an important step toward making advanced AI more accessible. It achieves performance comparable to Llama 3.1 405B while significantly reducing computing requirements, making it a practical choice for many applications. Whether for multilingual chatbots, coding assistance, or synthetic data generation, Llama 3.3 70B gives developers and researchers a powerful and efficient solution.

Frequently Asked Questions

How is Llama 3.3 different from Llama 3.2?

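Llama 3.3 is a 70B text-only model with improved fine-tuning and safety features. Llama 3.2, by contrast, introduced lightweight 1B and 3B text models and 11B and 90B vision models. Both support a 128K-token context window.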

Can Llama 3.3 run on standard developer hardware?

Yes. It is designed for common GPUs and developer workstations, although a 70B model typically needs quantization or multiple GPUs to fit in memory.

What languages does Llama 3.3 support?

English, French, German, Hindi, Italian, Portuguese, Spanish, and Thai

Originally from Novita AI

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, and GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.