Key Highlights:

Introduction to Text Classification with Llama Models: Understand how Llama models, developed by Meta, excel in text classification tasks such as sentiment analysis, spam detection, and document categorization with their scalable architecture and efficient language processing capabilities.

Optimizing Text Classification with Prompt Engineering: Learn how prompt engineering, especially through few-shot learning, can guide Llama models to deliver precise text classification with minimal data, improving accuracy and reducing the reliance on extensive labeled datasets.

Llama’s Role in Text Classification: Explore how Llama’s advanced architecture allows for nuanced understanding and categorization of text, making it an ideal choice for tasks requiring deep language comprehension.

Effective Tools for Text Classification: Leverage Novita AI’s platform and its interactive LLM Playground to refine and test classification prompts, optimizing the performance of Llama for text classification tasks.

Novita AI and LangChain for Scalable Solutions: Discover how Novita AI’s integration with LangChain simplifies the deployment of few-shot learning, enabling scalable, efficient text classification solutions for a range of NLP applications.

Table of Contents

Understanding Llama for Text Classification

Preparing Your Setup for Effective Prompt Engineering

Advanced Prompt Engineering Techniques

Evaluating Model Performance

Optimizing Text Classification with Novita AI

Conclusion

Text classification plays a pivotal role in natural language processing (NLP) applications, powering tasks such as sentiment analysis, spam detection, and document categorization. With advancements in AI, Llama models, developed by Meta, have emerged as a robust solution for text classification due to their scalable architecture and efficiency in understanding complex language patterns.

Prompt engineering, particularly through few-shot learning, has transformed the way these models handle specific text classification tasks. By crafting task-specific prompts, developers can steer model outputs without updating the model’s weights, enabling high accuracy with minimal training data. This article explores the concepts, strategies, and tools to optimize Llama models for text classification using prompt engineering.

Understanding Llama for Text Classification

The Role of Llama Models in Classification Tasks

Llama models are advanced large language models (LLMs) designed to process and generate human-like text. As outlined in Meta’s official documentation, Llama 3 brings improvements in efficiency and scalability, which makes it a strong choice for text classification tasks. Key features include:

Fine-grained understanding: Llama’s architecture supports nuanced text analysis, allowing it to excel in categorizing and interpreting text.

Customizable outputs: Llama models adapt well to prompt engineering, producing outputs tailored to specific classification requirements.

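Compared to traditional machine learning models, which typically need a large labeled dataset for training, Llama can classify text from instructions and a handful of in-prompt examples. This reduces the need for extensive labeled datasets and enables quicker deployment in NLP applications.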

Challenges in Text Classification with Generalized Models

Generalized language models often struggle with domain-specific text classification tasks due to a lack of contextual understanding. Traditional approaches require large datasets and extensive fine-tuning, which can be resource-intensive.

By leveraging prompt engineering and tools like Novita AI’s platform, developers can mitigate these challenges. For example, few-shot learning techniques allow Llama models to perform well with limited examples, reducing reliance on labeled data.

Preparing Your Setup for Effective Prompt Engineering

Understanding Prompt Engineering

Prompt engineering involves designing structured inputs that guide language models to generate accurate and context-aware responses. Effective prompts provide:

Clarity: Clear instructions reduce ambiguity, improving model understanding.

Context: Task-specific context ensures relevant outputs.

Direction: Well-defined prompts guide models toward desired results.

Key Principles of Effective Prompts

Clarity and Specificity: Use concise, unambiguous instructions. For example, instead of “Classify this text,” specify, “Classify this review as positive, neutral, or negative.”

Example-Driven Design: Provide examples within prompts to illustrate the desired task format.

Prompt Diversity: Experiment with varied phrasings and example sets, and keep the prompt that performs most consistently.
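These principles can be combined in a small prompt template. The following is a minimal sketch rather than a Novita AI or LangChain API. The helpers `buildClassificationPrompt` and `formatLabelList` are hypothetical. The template names the allowed labels explicitly (clarity), embeds labeled examples (example-driven design), and keeps the instruction in one place so that variants are easy to swap in (prompt diversity).

```javascript
// Hypothetical helper: render a label list as "a or b" or "a, b, or c".
function formatLabelList(labels) {
  if (labels.length <= 2) return labels.join(" or ");
  return `${labels.slice(0, -1).join(", ")}, or ${labels[labels.length - 1]}`;
}

// Hypothetical helper: build a single-string classification prompt that
// names the allowed labels and embeds a few labeled examples.
function buildClassificationPrompt(text, labels, examples = []) {
  const lines = [
    `Classify this review as ${formatLabelList(labels)}.`,
    "Answer with the label only.",
  ];
  if (examples.length > 0) {
    lines.push("", "Examples:");
    examples.forEach((ex, i) => {
      lines.push(`${i + 1}. '${ex.text}' -> ${ex.label}`);
    });
  }
  lines.push("", `Review: '${text}'`, "Label:");
  return lines.join("\n");
}

const prompt = buildClassificationPrompt(
  "The experience was decent, but could be better.",
  ["positive", "neutral", "negative"],
  [
    { text: "This product is amazing!", label: "positive" },
    { text: "Terrible service, I won't return.", label: "negative" },
  ]
);
console.log(prompt);
```

The trailing `Label:` line nudges the model to answer with the label alone. You can drop it if your model follows the "Answer with the label only" instruction reliably.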

Tools and Resources for Prompt Design

Platforms such as Novita AI’s LLM Playground provide an interactive interface for testing and refining prompts. For step-by-step guidance on leveraging their API, you can explore the comprehensive instructions available in the Get Started documentation.

Iterative Prompt Refinement

Evaluate prompt effectiveness by analyzing outputs. Iterative refinement — adjusting prompt phrasing, adding examples, or incorporating task-specific keywords — can significantly improve performance.
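Iterative refinement is easier when every prompt variant is scored against the same small labeled set. The sketch below assumes a hypothetical `classify(prompt, text)` function that returns a label. In practice that function would wrap a model call. Here a keyword stub stands in for it so the comparison loop runs offline. The stub is not a model, so its scores only illustrate the mechanics.

```javascript
// Score each prompt variant by accuracy on a small labeled dev set.
// `classify` is injected so the same loop works with a stub or a real model.
function scoreVariants(variants, devSet, classify) {
  return variants
    .map((prompt) => {
      const correct = devSet.filter(
        (ex) => classify(prompt, ex.text) === ex.label
      ).length;
      return { prompt, accuracy: correct / devSet.length };
    })
    .sort((a, b) => b.accuracy - a.accuracy); // best variant first
}

// Hypothetical offline stub standing in for a model call.
function stubClassify(prompt, text) {
  const lower = text.toLowerCase();
  if (/amazing|great|love/.test(lower)) return "positive";
  if (/terrible|awful|won't return/.test(lower)) return "negative";
  // Only prompts that offer a neutral label can get a neutral answer.
  return prompt.includes("neutral") ? "neutral" : "negative";
}

const devSet = [
  { text: "This product is amazing!", label: "positive" },
  { text: "Terrible service, I won't return.", label: "negative" },
  { text: "The experience was decent, but could be better.", label: "neutral" },
];

const ranked = scoreVariants(
  [
    "Classify this text.",
    "Classify this review as positive, neutral, or negative.",
  ],
  devSet,
  stubClassify
);
console.log(ranked);
```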

Advanced Prompt Engineering Techniques

Implementing Few-Shot Learning

Few-shot learning involves giving a model a small number of labeled examples, directly in the prompt, before asking it to perform the task. This technique is particularly effective for text classification when training data is scarce. Steps to implement few-shot learning include:

Define the task: Clearly state the classification objective within the prompt.

Include examples: Provide 2–3 labeled examples within the prompt.

Test and refine: Evaluate the model’s output and adjust examples or phrasing as needed.
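The first two steps can be expressed as a small message builder. The following is a sketch under assumptions. The helper `buildFewShotMessages` is hypothetical. It emits `[role, content]` pairs, which is the tuple format LangChain chat models accept in `invoke`. Each labeled example becomes a human turn followed by an ai turn that holds the label. This is a common alternative to listing all the examples in a single message.

```javascript
// Hypothetical helper: turn a task description plus labeled examples into
// [role, content] tuples for a chat model.
function buildFewShotMessages(task, examples, input) {
  const messages = [["system", task]]; // Step 1: define the task
  for (const { text, label } of examples) {
    // Step 2: each example is a human turn answered by the expected label
    messages.push(["human", `Review: '${text}'`]);
    messages.push(["ai", label]);
  }
  messages.push(["human", `Review: '${input}'`]); // the text to classify
  return messages;
}

const messages = buildFewShotMessages(
  "Classify each review as Positive or Negative. Reply with the label only.",
  [
    { text: "This product is amazing!", label: "Positive" },
    { text: "Terrible service, I won't return.", label: "Negative" },
  ],
  "The experience was decent, but could be better."
);
console.log(messages);
```

Step 3 happens outside the builder. Pass `messages` to a chat model's `invoke`, inspect the reply, and then adjust the examples or the task wording.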

Code Example: Using Novita AI with LangChain for Few-Shot Learning

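```javascript
// Requires: npm install @langchain/community @langchain/core
const { ChatNovitaAI } = require("@langchain/community/chat_models/novita");

async function main() {
  // Llama 3.1 8B Instruct, served through Novita AI's API
  const llm = new ChatNovitaAI({
    model: "meta-llama/llama-3.1-8b-instruct",
    apiKey: process.env.NOVITA_API_KEY,
  });

  const aiResponse = await llm.invoke([
    ["system", "You are an AI that classifies text reviews as positive or negative."],
    ["human", "Here are examples: \n1. 'This product is amazing!' -> Positive\n2. 'Terrible service, I won't return.' -> Negative"],
    ["human", "Review: 'The experience was decent, but could be better.'"],
  ]);

  // The reply is an AIMessage; the generated text is in `content`
  console.log(aiResponse.content);
}

// `await` is not allowed at the top level of a CommonJS module, so run inside main()
main().catch(console.error);
```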
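A chat model replies in free text, such as "Negative." or "The review is positive". The reply therefore usually has to be mapped onto a fixed label set before it can be scored. The helper below is a hypothetical sketch and is not part of LangChain. It returns the allowed label that appears earliest in the reply, or `null` when none appears, so that unparseable answers can be counted separately. It assumes that labels are plain words containing no regex special characters.

```javascript
// Hypothetical helper: map a free-text model reply onto one allowed label.
// Returns the label that appears earliest in the reply (case-insensitive,
// whole-word match), or null if no allowed label appears.
// Assumes labels contain only word characters (no regex escaping is done).
function parseLabel(reply, labels) {
  let best = null;
  let bestIndex = Infinity;
  for (const label of labels) {
    const match = new RegExp(`\\b${label}\\b`, "i").exec(reply);
    if (match && match.index < bestIndex) {
      best = label;
      bestIndex = match.index;
    }
  }
  return best;
}

const labels = ["Positive", "Negative"];
console.log(parseLabel("Negative.", labels));
console.log(parseLabel("The review is positive overall.", labels));
console.log(parseLabel("I am not sure.", labels));
```

With the LangChain example, this would be called as `parseLabel(aiResponse.content, ["Positive", "Negative"])`. The matching is deliberately naive. For example, it does not handle negations such as "not positive".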

Avoiding Common Pitfalls

Ambiguous Prompts: Lack of clarity can lead to inconsistent outputs. Ensure prompts explicitly define the task.

Overloading with Examples: Too many examples can confuse the model. Stick to a few relevant ones.
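One way to keep the example set small and representative is to cap the number of examples per label. This is an illustrative sketch. The helper `selectExamples`, its `perLabel` parameter, and two of the sample reviews are made up. The helper keeps at most `perLabel` examples for each label, in their original order, so that no single class dominates the prompt.

```javascript
// Hypothetical helper: keep at most `perLabel` examples for each label,
// preserving the original order, so the prompt stays short and balanced.
function selectExamples(pool, perLabel) {
  const counts = new Map();
  return pool.filter(({ label }) => {
    const seen = counts.get(label) || 0;
    if (seen >= perLabel) return false;
    counts.set(label, seen + 1);
    return true;
  });
}

// Made-up example pool for illustration.
const pool = [
  { text: "This product is amazing!", label: "Positive" },
  { text: "Love it, works perfectly.", label: "Positive" },
  { text: "Best purchase this year.", label: "Positive" },
  { text: "Terrible service, I won't return.", label: "Negative" },
];
const chosen = selectExamples(pool, 1);
console.log(chosen.map((ex) => ex.label));
```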

Evaluating Model Performance

Essential Metrics for Text Classification

Key metrics for evaluating Llama’s performance include:

Precision: Proportion of positive predictions that are actually correct.

Recall: Proportion of actual positives correctly identified.

F1-Score: Harmonic mean of precision and recall, balancing both metrics.
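These definitions translate directly into code. The standard formulas are:

- precision = TP / (TP + FP)
- recall = TP / (TP + FN)
- F1 = 2 · precision · recall / (precision + recall)

The sketch below computes them for one label treated as the positive class, using parallel arrays of gold labels and predictions. The function name `binaryMetrics` and the sample arrays are illustrative. By convention, a zero denominator yields 0.

```javascript
// Precision, recall, and F1 for one label treated as the positive class.
// `gold` and `predicted` are parallel arrays of labels.
function binaryMetrics(gold, predicted, positive) {
  let tp = 0, fp = 0, fn = 0;
  gold.forEach((g, i) => {
    const p = predicted[i];
    if (p === positive && g === positive) tp++; // true positive
    else if (p === positive) fp++;              // false positive
    else if (g === positive) fn++;              // false negative
  });
  const precision = tp + fp === 0 ? 0 : tp / (tp + fp);
  const recall = tp + fn === 0 ? 0 : tp / (tp + fn);
  const f1 =
    precision + recall === 0 ? 0 : (2 * precision * recall) / (precision + recall);
  return { precision, recall, f1 };
}

// Illustrative labels, not real evaluation data.
const gold = ["Positive", "Negative", "Positive", "Negative", "Positive"];
const predicted = ["Positive", "Positive", "Negative", "Negative", "Positive"];
console.log(binaryMetrics(gold, predicted, "Positive"));
```

In this sample there are 2 true positives, 1 false positive, and 1 false negative, so precision, recall, and F1 all come out to 2/3.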

Comparing Pre- and Post-Optimization Results

Use visualization tools to compare model performance before and after optimization. For example, plotting precision and recall scores can highlight the impact of prompt engineering and few-shot learning.
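Before you plot anything, it can help to put the numbers side by side. This hypothetical sketch compares two prediction runs on the same labeled set, for example a zero-shot prompt versus a few-shot prompt. It computes per-label F1 for each run and prints the change with `console.table`. It uses the equivalent form F1 = 2TP / (2TP + FP + FN). The prediction arrays are made-up placeholders, not measured results.

```javascript
// One-vs-rest F1 for a single label, using F1 = 2TP / (2TP + FP + FN).
function f1ForLabel(gold, predicted, label) {
  let tp = 0, fp = 0, fn = 0;
  gold.forEach((g, i) => {
    if (predicted[i] === label && g === label) tp++;
    else if (predicted[i] === label) fp++;
    else if (g === label) fn++;
  });
  return tp === 0 ? 0 : (2 * tp) / (2 * tp + fp + fn);
}

// Compare two runs label by label.
function compareRuns(gold, before, after, labels) {
  return labels.map((label) => {
    const f1Before = f1ForLabel(gold, before, label);
    const f1After = f1ForLabel(gold, after, label);
    return { label, f1Before, f1After, delta: f1After - f1Before };
  });
}

// Placeholder data for illustration only.
const gold = ["Positive", "Negative", "Neutral", "Positive", "Neutral"];
const before = ["Positive", "Negative", "Negative", "Positive", "Positive"];
const after = ["Positive", "Negative", "Neutral", "Positive", "Neutral"];

const rows = compareRuns(gold, before, after, ["Positive", "Negative", "Neutral"]);
console.table(rows);
```

The same `rows` array can be passed to any charting library to produce the before-and-after plot.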

Optimizing Text Classification with Novita AI

Novita AI offers powerful tools for enhancing text classification processes through seamless integration with cutting-edge models. By tapping into Novita AI’s extensive API capabilities, developers can optimize prompt experimentation and fine-tune workflows, ensuring improved classification performance across various use cases.

Benefits of Novita AI Integration

Novita AI stands out for its user-centric features that foster quick adoption, scalability, and flexibility. Key advantages of incorporating Novita AI into your project include:

Streamlined Integration: Novita AI’s API is compatible with the OpenAI API format, so it can be integrated into existing systems with minimal changes. Whether you’re building from the ground up or optimizing an existing setup, implementation involves little friction.
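Because the API follows the OpenAI chat-completions format, a classification request can be sent with plain `fetch`, which is built into Node.js 18 and later. This is a sketch under assumptions. The base URL `https://api.novita.ai/v3/openai` is assumed to be Novita AI’s OpenAI-compatible endpoint and should be checked against their current documentation. The helper names `buildChatRequest` and `classify` are hypothetical. The request is sent only when `NOVITA_API_KEY` is set.

```javascript
// ASSUMPTION: OpenAI-compatible base URL; verify against Novita AI's docs.
const BASE_URL = "https://api.novita.ai/v3/openai";

// Build an OpenAI-style chat-completions request (pure, no network).
function buildChatRequest(apiKey, model, messages) {
  return {
    url: `${BASE_URL}/chat/completions`,
    init: {
      method: "POST",
      headers: {
        "Content-Type": "application/json",
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ model, messages, temperature: 0 }),
    },
  };
}

// Send the request and return the text of the first choice.
async function classify(apiKey, review) {
  const { url, init } = buildChatRequest(apiKey, "meta-llama/llama-3.1-8b-instruct", [
    { role: "system", content: "Classify the review as Positive or Negative. Reply with the label only." },
    { role: "user", content: `Review: '${review}'` },
  ]);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`HTTP ${res.status}: ${await res.text()}`);
  const data = await res.json();
  return data.choices[0].message.content;
}

// Only call the API when a key is available.
if (process.env.NOVITA_API_KEY) {
  classify(process.env.NOVITA_API_KEY, "This product is amazing!")
    .then(console.log)
    .catch(console.error);
}
```

Setting `temperature: 0` keeps the output as deterministic as the model allows, which usually suits classification.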

Cost-Effectiveness: With affordable pricing plans that scale to suit growing needs, Novita AI allows businesses to experiment with advanced models without incurring hefty operational costs. This makes it an attractive choice for both startups and large enterprises looking for a cost-efficient solution.

Access to Advanced Models: Novita AI gives users access to a broad range of cutting-edge models, including Llama, Mistral, Qwen, Gemma, and more. These models cover a diverse set of applications, from natural language understanding to advanced text generation, making Novita AI a versatile tool for various industries.

Scalability and Flexibility: Novita AI’s API allows for easy scaling, enabling developers to tailor solutions that meet their specific needs without worrying about bottlenecks. Its flexible architecture supports everything from small-scale tests to enterprise-level implementations.

Implementing Few-Shot Learning via Novita AI and LangChain

For those interested in deploying few-shot learning techniques, Novita AI’s integration with LangChain provides an efficient framework to do so. Few-shot learning, which requires minimal training data, is key for scenarios where data availability is limited, and Novita AI enables developers to experiment with this powerful approach.

By combining Novita AI’s API with LangChain, developers can set up few-shot classification pipelines that perform well even with only a handful of examples. LangChain simplifies the process of creating sophisticated pipelines, while Novita AI enhances them with access to advanced LLMs.
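The following is a minimal pipeline sketch. It builds few-shot messages, sends them to a chat model, and maps each reply to a label. To keep the sketch runnable offline, the model call is injected as an `invoke` function. With LangChain, you would pass something like `(msgs) => llm.invoke(msgs)`, using a `ChatNovitaAI` instance like the one in the code example earlier in this article. The names `classifyBatch` and `fakeInvoke` are hypothetical, and the two reviews being classified are made up.

```javascript
// Minimal few-shot classification pipeline with an injected model call.
// `invoke` receives [role, content] tuples and resolves to an object with a
// `content` string (the shape of a LangChain AIMessage).
async function classifyBatch(texts, examples, labels, invoke) {
  const results = [];
  // Sequential on purpose: simpler to reason about and gentler on rate limits.
  for (const text of texts) {
    const messages = [
      ["system", `Classify each review as ${labels.join(" or ")}. Reply with the label only.`],
      ...examples.flatMap((ex) => [["human", `Review: '${ex.text}'`], ["ai", ex.label]]),
      ["human", `Review: '${text}'`],
    ];
    const reply = await invoke(messages);
    // Keep the first label (in `labels` order) the reply mentions, or null.
    const lower = String(reply.content).toLowerCase();
    const label = labels.find((l) => lower.includes(l.toLowerCase())) ?? null;
    results.push({ text, label });
  }
  return results;
}

// Offline stand-in for a real model call, for illustration only.
async function fakeInvoke(messages) {
  const last = messages[messages.length - 1][1];
  return { content: /amazing|great/i.test(last) ? "Positive" : "Negative." };
}

classifyBatch(
  ["Great value for the price.", "Broke after two days."],
  [
    { text: "This product is amazing!", label: "Positive" },
    { text: "Terrible service, I won't return.", label: "Negative" },
  ],
  ["Positive", "Negative"],
  fakeInvoke
).then((results) => console.log(results));
```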

Here’s how to get started with Novita AI and set up your API access step by step:

1.**Log in:**you can create an account on Novita AI.

**2.Obtain an API Key:**navigate to the “Dashboard” tab, where you can create your API key.

**3.Copy Key:**Once you enter the page below, you can directly click “copy” to obtain your key.

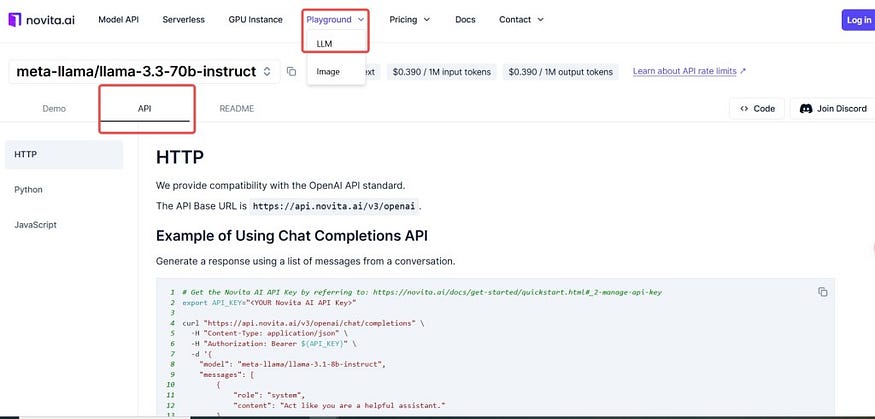

**4.Install:**Go to the API section, locate “LLM” under the “LLMs” tab in the Playground, and install it using the package manager for your programming language(HTTP,Python or JavaScript)

Conclusion

Prompt engineering and few-shot learning are transformative techniques for optimizing Llama models in text classification tasks. By leveraging tools like Novita AI, developers can achieve high accuracy and scalability, even with limited data. As NLP continues to evolve, these strategies will remain at the forefront of AI-driven innovation.

Frequently Asked Questions

1. What is a prompt for text classification?

A structured input that tells the model which text to classify and which labels are allowed. It can optionally include a few labeled examples.

2. Can Llama be used for text classification?

Yes. Llama models are highly effective for text classification due to their advanced language understanding capabilities.

3. What is Llama prompt engineering?

The process of crafting prompts to optimize Llama’s outputs for specific tasks.

4. What is Llama 3.1 8B classification?

It means using the 8-billion-parameter Llama 3.1 model, such as meta-llama/llama-3.1-8b-instruct, to perform classification tasks.

5. Can ChatGPT categorize text?

Yes. ChatGPT can categorize text when given a clear prompt, but Llama models with optimized prompts may provide more task-specific accuracy in certain scenarios.

originally from Novita AI

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
