The world of generative AI has a new and powerful addition: Llama 3. Developed by Meta AI, this large language model (LLM) stands out for its ability to understand and generate human-like text.

Because Meta released its weights openly, Llama 3 is expected to change the game by giving developers easy access to advanced capabilities for many different uses.

But what makes Llama 3 unique, and how can beginners get started with it? Let's look into these questions!

Why Llama 3 is a Game Changer

What is Llama 3

Llama 3 is the latest family of large language models from Meta AI and a powerful tool for natural language processing. As part of the Meta Llama series, it handles a wide range of tasks, such as coding and creative content creation.

It also supports a wide range of languages and handles complex concepts, with a longer context window than its predecessor. Building on the expertise from previous versions, Meta tested Llama 3 with extensive human evaluations, and it stands out as a comprehensive solution for diverse NLP needs.

So, what's the secret to Llama 3's success? Let's dive into its main features.

What are the key features of Llama 3?

Open Weights: Meta released Llama 3 in 8B and 70B parameter sizes, in both pretrained and instruction-tuned versions, so developers can download, run, and fine-tune the model themselves under the Meta Llama 3 Community License.

Efficient Tokenizer: Llama 3 uses a tokenizer with a 128K-token vocabulary (up from 32K in Llama 2), which encodes text more efficiently.

Grouped-Query Attention: Both the 8B and 70B models use grouped-query attention (GQA) to improve inference efficiency.

Longer Context: Llama 3 supports an 8,192-token context window, double Llama 2's 4,096 tokens. The later Llama 3.1 release extends this to 128K tokens.

Safety Tooling: Meta ships Llama 3 alongside safety tools such as Llama Guard 2 and Code Shield, which help developers filter unsafe inputs and outputs.

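What Are Llama 3's Advantages over Llama 2?

Llama 3 has some clear advantages over earlier versions.

One big change is the scale of training. Llama 3 was pretrained on over 15 trillion tokens, about seven times more data than Llama 2, including four times more code. Model sizes are similar (8B and 70B, versus Llama 2's 7B, 13B, and 70B). The deeper understanding of language therefore comes mainly from more and better data rather than from more parameters.

Another key improvement is in post-training. For the instruction-tuned models, Meta combined supervised fine-tuning (SFT), rejection sampling, proximal policy optimization (PPO), and direct preference optimization (DPO). This improves performance on reasoning and coding and lets the model handle a wider range of tasks.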

By now, I bet you're curious about what Llama 3 can do in the real world. Here are the most common use cases for Llama 3.

What Are Llama 3's Main Applications?

Multiple Character AI Chat: Llama 3 can switch between personalities, offering a unique AI experience. Developers can customize its responses for applications like chatbots, virtual assistants, and storytelling in games.

Writing Code: Llama 3 is a valuable coding partner for developers. It helps create code snippets, identify bugs, and suggest ways to improve code quality.

Creative Writing: With its strong grasp of context and ability to generate human-like text in various languages, Llama 3 is a valuable resource for writers worldwide.

Key Points Picker: Llama 3 efficiently extracts and summarizes information from various sources, giving quick access to key points so researchers, analysts, and businesses can focus on decision-making.

Data Analysis Reports: Llama 3 simplifies data analysis by drafting clear reports that highlight key trends, patterns, and insights. By streamlining report creation, it lets users focus on interpreting the data.
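Character switching usually comes down to changing the system message that precedes the user's turn. The sketch below renders a conversation in Llama 3's special-token chat format (`<|begin_of_text|>`, `<|start_header_id|>`, `<|end_header_id|>`, `<|eot_id|>`) by hand so you can see what actually changes between personas. The persona texts and helper names are hypothetical. In practice a tokenizer's chat template (for example `apply_chat_template` in Hugging Face Transformers) produces this string for you.

```python
from typing import Dict, List

# Hypothetical personas for illustration; replace them with your own characters.
PERSONAS: Dict[str, str] = {
    "pirate": "You are Captain Reed, a cheerful pirate. Answer in pirate slang.",
    "tutor": "You are a patient math tutor. Explain every step.",
}


def format_llama3_prompt(system: str, turns: List[Dict[str, str]]) -> str:
    """Render a conversation using Llama 3's special-token chat format."""
    parts = ["<|begin_of_text|>"]
    messages = [("system", system)] + [(t["role"], t["content"]) for t in turns]
    for role, content in messages:
        # Each message: a role header, a blank line, the content, then end-of-turn.
        parts.append(f"<|start_header_id|>{role}<|end_header_id|>\n\n{content}<|eot_id|>")
    # An open assistant header signals that it is now the model's turn to speak.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)


def persona_prompt(persona: str, user_text: str) -> str:
    """Build a single-turn prompt in which only the system persona differs."""
    return format_llama3_prompt(PERSONAS[persona], [{"role": "user", "content": user_text}])
```

For example, `persona_prompt("pirate", "Where is the treasure?")` and `persona_prompt("tutor", "Where is the treasure?")` differ only in the system block. This is why a single deployed model can serve several characters.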

After hearing this, you're probably eager to give it a try. The good news is that there are several ways to use Llama 3! Let’s look at how to do that with programming languages, frameworks, and APIs.

How to Use Llama 3 with Different Programming Languages

Llama 3 can work with many programming languages. Python has the richest tooling for running the model locally, but any language that can send HTTP requests can call Llama 3 through an API.

4 Factors for Choosing a Programming Language

Performance Requirements: Think about how much computing power your project needs and choose a language that matches it.

Developer Expertise: Choosing a language your team already knows well helps you develop faster.

Ecosystem and Support: Select a language with strong community support, libraries, and frameworks suited to your needs.

Deployment and Maintenance: Factor in compatibility with the target platform and the ease of long-term maintenance, ensuring the language fits with your deployment environment and scaling needs.

By thinking about these factors, you can choose a programming language that helps you use Llama 3 well.

How to Use Llama 3 in Python: An Example

To use Llama 3 in Python, you first need to install the necessary libraries for loading and running the model. Then, you load the model and tokenizer, pass in your text, and get the output. Along the way, make sure to handle errors and test everything to ensure it works properly.
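The steps above can be sketched with Hugging Face Transformers. This is a minimal sketch with several assumptions: you have accepted Meta's license for the gated `meta-llama/Meta-Llama-3-8B-Instruct` checkpoint on the Hugging Face Hub, you have installed `torch`, `transformers`, and `accelerate`, and you have a GPU with enough memory. The generation call follows the pattern on that model's Hugging Face card at the time of writing. `build_messages`, `load_model`, and `generate` are illustrative helper names, not library functions. Imports are deferred into `load_model`, so a missing dependency produces a clear error message.

```python
from functools import lru_cache
from typing import Dict, List

# Gated checkpoint on the Hugging Face Hub: accept Meta's license before downloading.
MODEL_ID = "meta-llama/Meta-Llama-3-8B-Instruct"


def build_messages(user_text: str, system_text: str = "You are a helpful assistant.") -> List[Dict[str, str]]:
    """Return a chat in the role/content format that Transformers chat templates expect."""
    if not user_text.strip():
        raise ValueError("user_text must not be empty")
    return [
        {"role": "system", "content": system_text},
        {"role": "user", "content": user_text},
    ]


@lru_cache(maxsize=1)
def load_model():
    """Load the tokenizer and model once, then reuse them on later calls."""
    try:
        import torch
        from transformers import AutoModelForCausalLM, AutoTokenizer
    except ImportError as exc:
        raise RuntimeError("Install dependencies first: pip install torch transformers accelerate") from exc
    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID, torch_dtype=torch.bfloat16, device_map="auto"
    )
    return tokenizer, model


def generate(user_text: str, max_new_tokens: int = 256) -> str:
    """Send one user message to Llama 3 and return the assistant's reply."""
    tokenizer, model = load_model()
    # The chat template inserts Llama 3's special header and end-of-turn tokens.
    input_ids = tokenizer.apply_chat_template(
        build_messages(user_text), add_generation_prompt=True, return_tensors="pt"
    ).to(model.device)
    output_ids = model.generate(
        input_ids,
        max_new_tokens=max_new_tokens,
        # Stop at either the end-of-text token or Llama 3's end-of-turn token.
        eos_token_id=[tokenizer.eos_token_id, tokenizer.convert_tokens_to_ids("<|eot_id|>")],
    )
    # Decode only the newly generated tokens, skipping the prompt.
    return tokenizer.decode(output_ids[0][input_ids.shape[-1]:], skip_special_tokens=True)
```

Usage: `print(generate("Explain what a tokenizer does in one sentence."))`. The first call downloads roughly 16 GB of weights. Later calls reuse the cached model.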

How to Use Llama 3 in Different Frameworks

Various frameworks make it easier to integrate large language models into applications. Using Llama 3 through one of these frameworks gives your code a clearer structure, which is especially helpful for big projects.

4 Factors for Choosing a Framework

Ease of Use: The framework should be easy to use. A simple interface and clear APIs help make development smoother.

Performance: How well the framework handles large-scale tasks or high-concurrency scenarios, including whether it runs efficiently locally and supports distributed computing.

Supported Use Cases: The range of functionality the framework supports and the breadth of scenarios it covers. For example, whether it is limited to text processing or can support complex workflows and multi-step tasks.

Flexibility and Extensibility: How customizable the framework is and how easily it can be extended or modified to fit your project's requirements.

By weighing these factors carefully, you can find a framework that speeds up your Llama 3 development and gives you the tools to build robust AI applications.

How to Use Llama 3 in LangChain: An Example

First, set up a virtual environment and install the necessary libraries, such as LangChain, Transformers, and PyTorch. Then download the Llama 3 model, configure a Transformers pipeline, wrap it for LangChain, and run and test Llama 3 on your local machine for NLP tasks.
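Here is a minimal sketch of that workflow. It assumes the `langchain-core` and `langchain-huggingface` packages (which provide `PromptTemplate` and `HuggingFacePipeline`) are installed along with `transformers` and `torch`, and that you have access to the gated Llama 3 checkpoint. The prompt text and the `build_chain` helper are illustrative. For brevity the prompt is plain text. With the instruction-tuned model, a chat-formatted prompt may give better results.

```python
# Illustrative prompt; {question} is filled in when the chain is invoked.
TEMPLATE = """Answer the question in two sentences.

Question: {question}
Answer:"""


def build_chain(model_id: str = "meta-llama/Meta-Llama-3-8B-Instruct", max_new_tokens: int = 128):
    """Wrap a local Transformers text-generation pipeline as a LangChain runnable."""
    try:
        from langchain_core.prompts import PromptTemplate
        from langchain_huggingface import HuggingFacePipeline
    except ImportError as exc:
        raise RuntimeError(
            "pip install langchain-core langchain-huggingface transformers torch"
        ) from exc

    # Downloads the weights (if needed) and builds a transformers pipeline under the hood.
    llm = HuggingFacePipeline.from_model_id(
        model_id=model_id,
        task="text-generation",
        pipeline_kwargs={"max_new_tokens": max_new_tokens, "return_full_text": False},
    )
    prompt = PromptTemplate.from_template(TEMPLATE)
    # LCEL composition: the formatted prompt is piped into the model.
    return prompt | llm
```

Usage: `chain = build_chain()` followed by `print(chain.invoke({"question": "What is LangChain?"}))`. Keeping the prompt separate from the model lets you swap in a different Llama 3 checkpoint without touching the rest of the chain.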

How to Use Llama 3 Through Different APIs

Accessing the power of Llama 3 doesn’t always mean setting up a complicated environment. With APIs (Application Programming Interfaces), you send prompts to a hosted Llama 3 model and receive its output, without downloading the model weights or doing much local setup.

3 Benefits of Using Llama 3 Through an API

Integrating Llama 3 through APIs has many benefits. It can make the development process easier, especially for teams that want to deploy quickly and efficiently.

Quick Integration: APIs offer standardized interfaces, allowing developers to quickly integrate the Llama model into existing applications or platforms without dealing with the complexities of low-level model and code management.

Cost-Effectiveness: With an API, you don’t need to set up high-performance computing resources (like GPUs). This is ideal for developers or teams with limited hardware, because all computation happens in the cloud.

Simplified Development: Because the model runs on the provider's remote servers, you don't need to deploy and maintain it locally, which makes deployment and maintenance much easier.

By taking advantage of these benefits, you can access the power of Llama 3 and speed up the creation of your AI-driven apps.

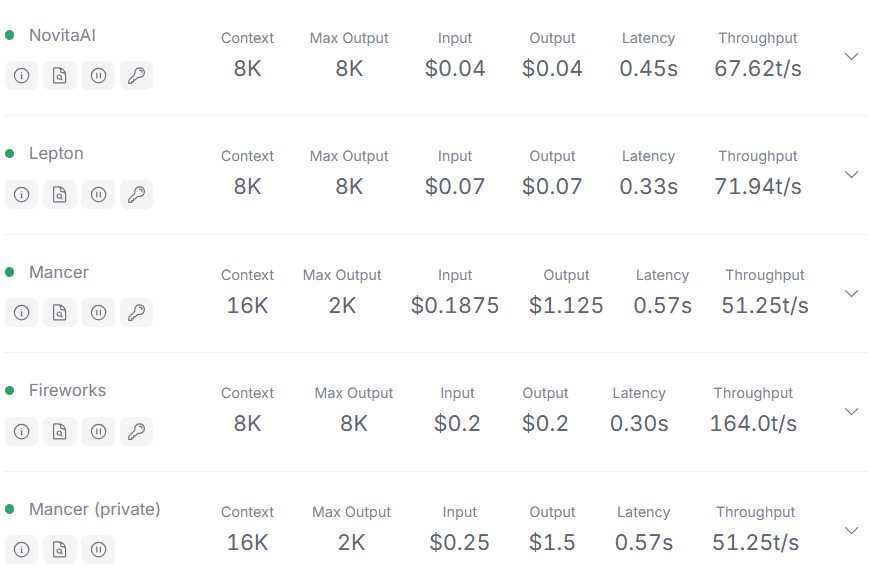

4 Factors for Choosing an API

Max Output (higher is better): The maximum number of tokens the model can generate in a single call. A higher value means the model can produce longer text.

Cost of Input and Output (lower is better): The cost per million input and output tokens. Lower costs are better for users.

Latency (lower is better): The time from sending a request to receiving a response. Lower latency means faster responses, which improves the user experience.

Throughput (higher is better): The number of tokens processed per second. Higher throughput means the model can handle more requests per unit of time, improving efficiency.
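These four factors can be combined to estimate what a single request will cost and how long it will take. The sketch below is illustrative only. It assumes prices are quoted in USD per million tokens and treats latency as the wait before the first token, after which output streams at the listed throughput. `ApiOffer` and `estimate` are hypothetical names, and you would fill in each provider's real published figures.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class ApiOffer:
    """Published figures for one Llama 3 API offering (fill in real values)."""
    name: str
    max_output_tokens: int
    input_price_per_m: float   # USD per million input tokens
    output_price_per_m: float  # USD per million output tokens
    latency_s: float           # assumed: seconds until the first token arrives
    throughput_tps: float      # output tokens generated per second


def estimate(offer: ApiOffer, input_tokens: int, output_tokens: int) -> Tuple[float, float]:
    """Return (cost in USD, approximate seconds) for one request."""
    if output_tokens > offer.max_output_tokens:
        raise ValueError(f"{offer.name} cannot return {output_tokens} tokens in one call")
    # Cost: tokens times price per million tokens, for input and output separately.
    cost = (input_tokens * offer.input_price_per_m
            + output_tokens * offer.output_price_per_m) / 1_000_000
    # Time: initial latency plus the time to stream every output token.
    seconds = offer.latency_s + output_tokens / offer.throughput_tps
    return cost, seconds
```

For example, a hypothetical offer priced at $0.10 (input) and $0.20 (output) per million tokens, with 0.5 s latency and 100 tokens/s throughput, gives `estimate(offer, 1000, 500)` of about $0.0002 and 5.5 seconds. Running the same workload through each candidate provider's numbers makes the trade-offs concrete.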

How to Use Llama 3 in Novita AI: An Example

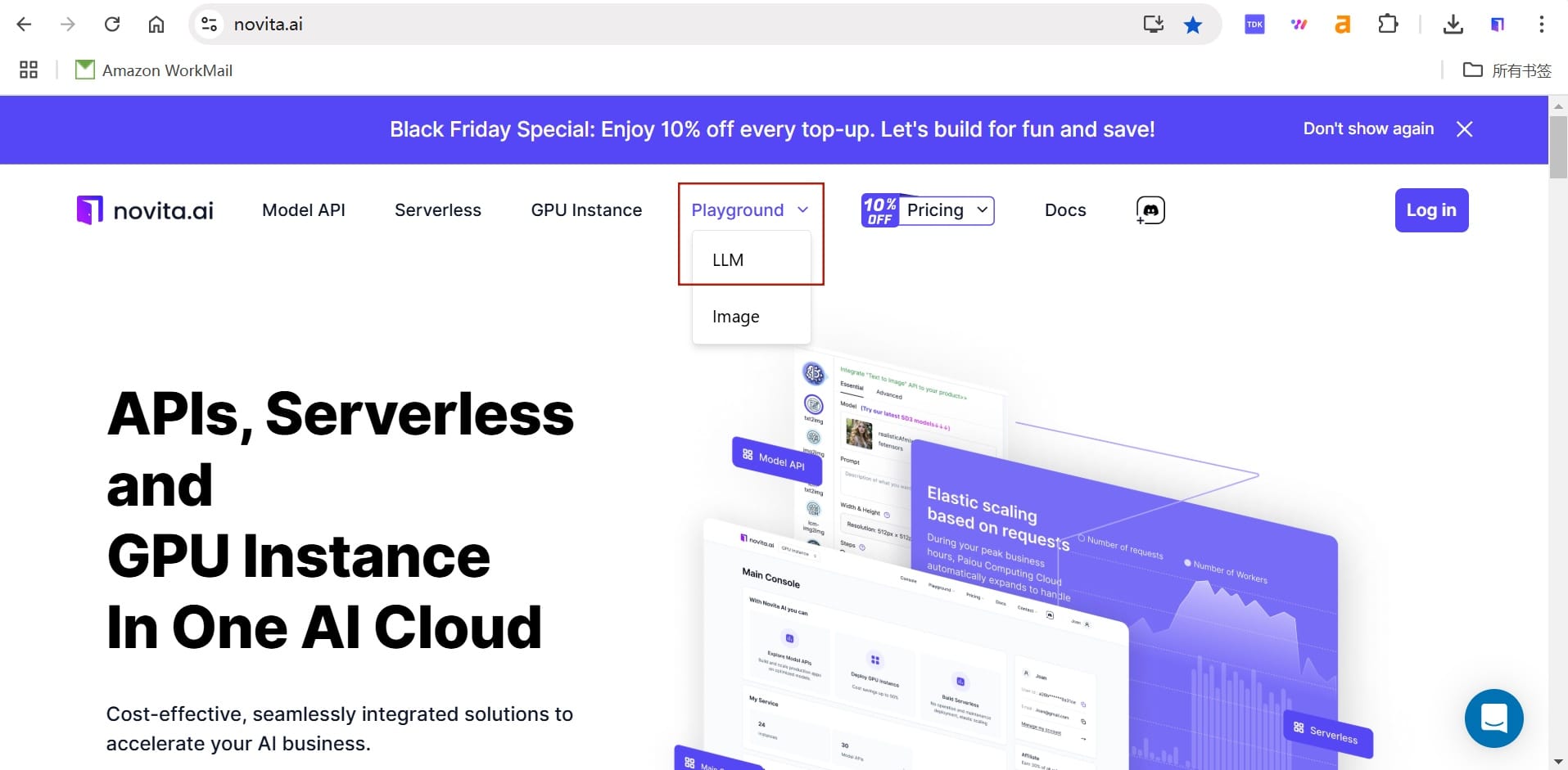

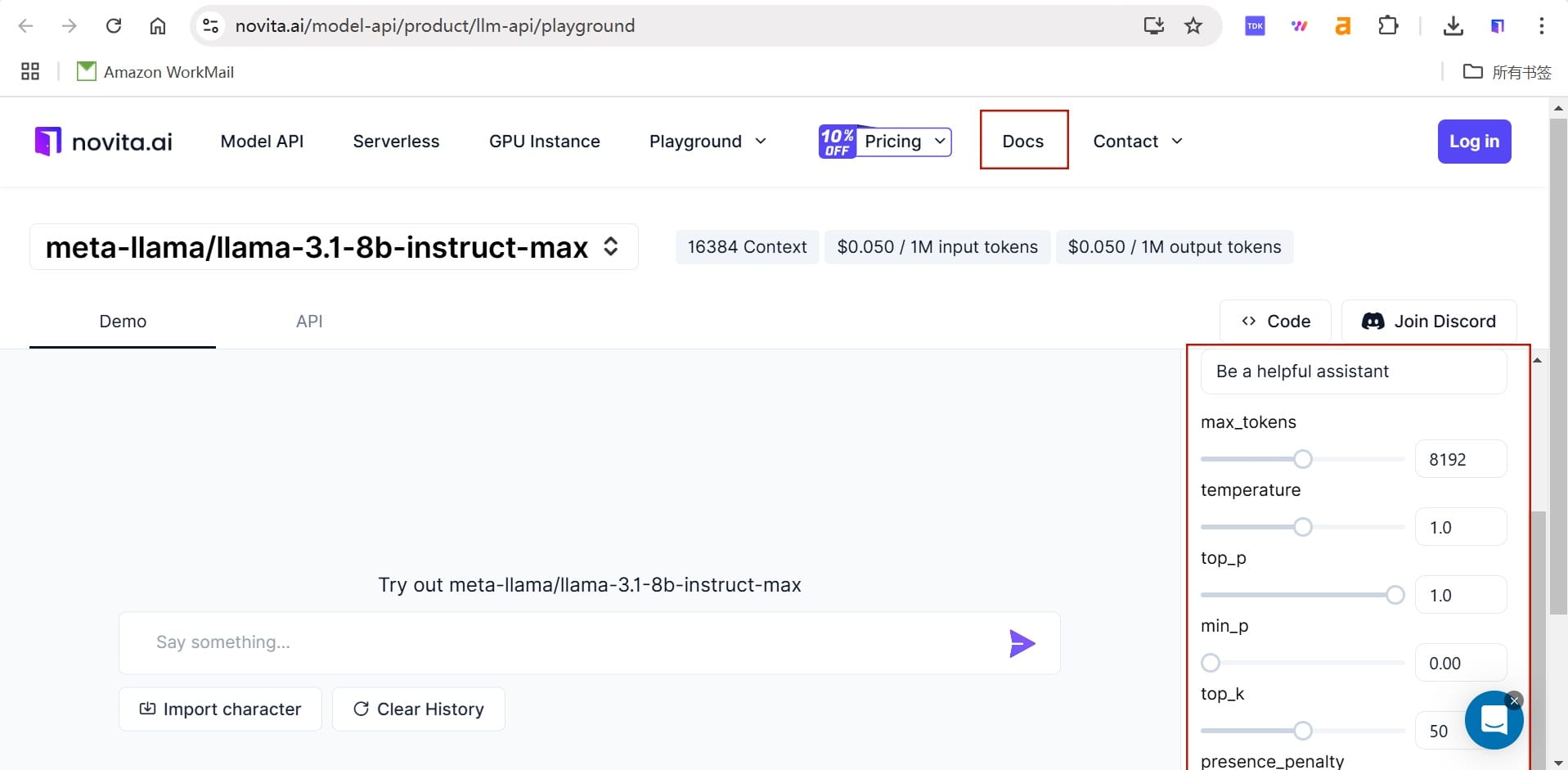

Step 1: Log in and click on the [LLM Playground] button

If you're a new user, please create an account first, then click on the LLM Playground button on our site.

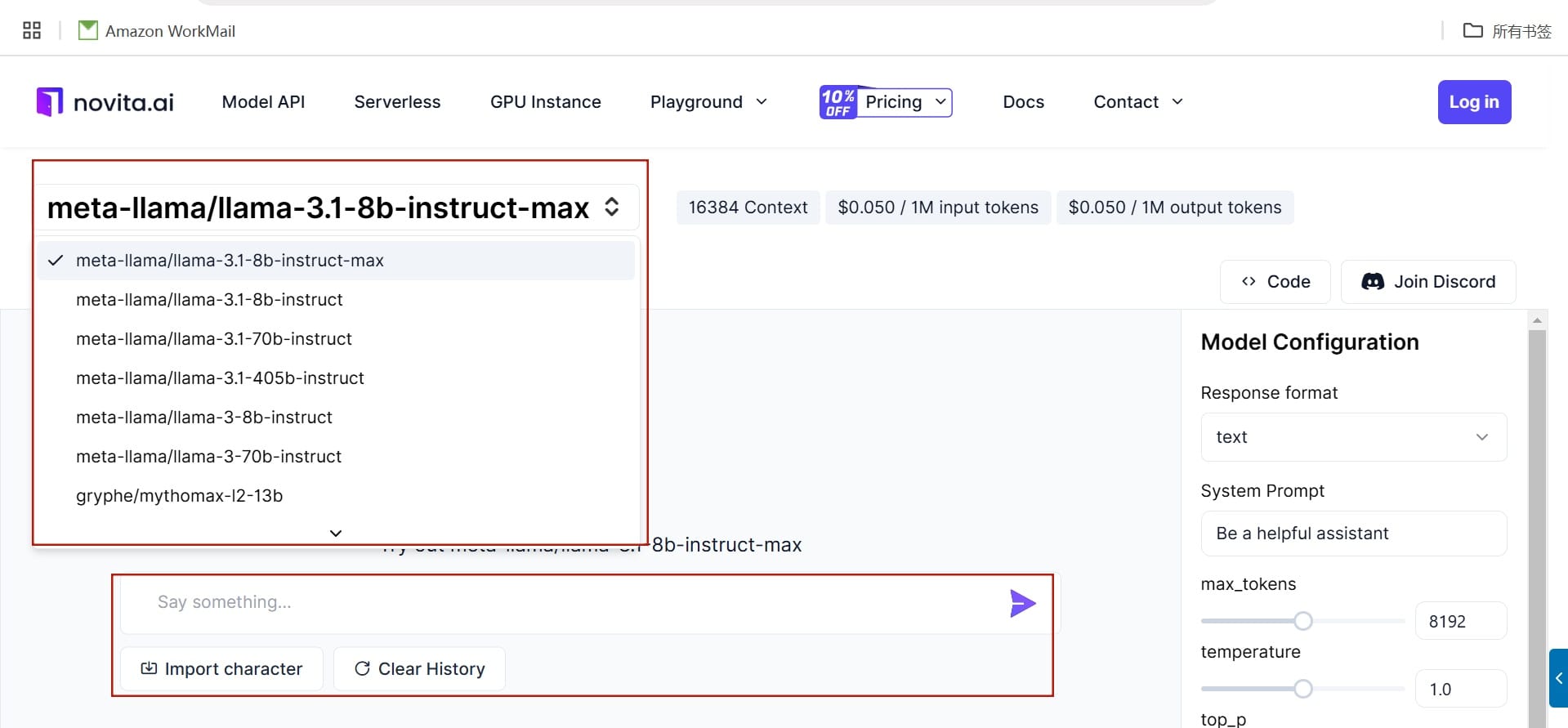

Step 2: Select the LLM model you want to try and start your journey.

Now you can choose the model you'd like to try. We're excited to offer Llama 3 in three different sizes, so you can choose based on your specific requirements:

For prototyping: Visit our Llama 3.2 1B Instruct Demo for initial testing.

For production applications: Experiment with the Llama 3.2 3B Instruct model for enhanced capabilities.

For visual-linguistic tasks: Test multimodal features in our Llama 3.2 11B Vision Instruct Demo.

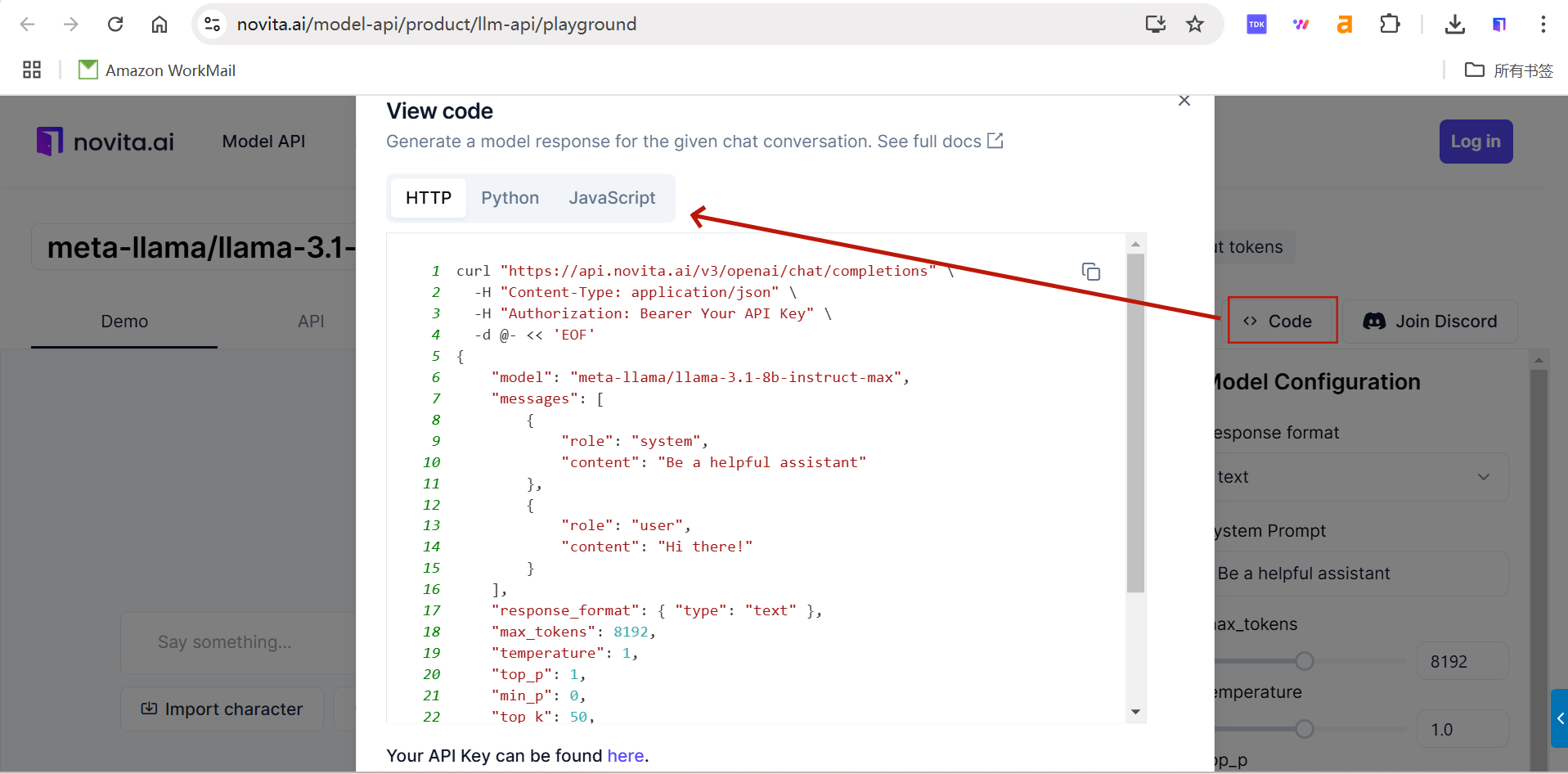

Step 3: Integrate and Deploy with Code

Click the "Code" button to get your API key. Then make the API call with one of the three supported options (HTTP, Python, or JavaScript), and test it thoroughly in your development environment.
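Here is a minimal Python sketch of Step 3 that uses only the standard library. It assumes the endpoint follows the OpenAI-compatible chat completions format. The base URL, model ID, and `NOVITA_API_KEY` environment variable name are assumptions for illustration. Copy the exact values shown under the "Code" button before relying on them.

```python
import json
import os
import urllib.request

# Assumptions: an OpenAI-compatible endpoint at this base URL and this model ID.
# Check both against the snippet shown under the "Code" button.
BASE_URL = "https://api.novita.ai/v3/openai"
MODEL = "meta-llama/llama-3.2-3b-instruct"  # hypothetical model ID


def build_request(prompt: str, api_key: str, max_tokens: int = 256,
                  temperature: float = 0.7) -> urllib.request.Request:
    """Build a POST request for an OpenAI-style /chat/completions endpoint."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    return urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )


def chat(prompt: str) -> str:
    """Send the prompt and return the reply text (requires network access)."""
    api_key = os.environ["NOVITA_API_KEY"]  # hypothetical environment variable name
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=60) as resp:
        body = json.load(resp)
    # OpenAI-compatible responses put the reply in choices[0].message.content.
    return body["choices"][0]["message"]["content"]
```

Usage: set `NOVITA_API_KEY` in your shell, then call `print(chat("Write a haiku about llamas."))`. Keeping request construction separate from sending makes it easy to test the payload without making network calls.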

Step 4: Customize your Model

Finally, you can adjust the parameters on the right to modify the model's output. If you have any questions about the parameters, click the "Docs" tab above to see their details.
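Temperature is a common example of such a parameter. To illustrate what it does conceptually, here is temperature-scaled softmax, the standard way sampling temperature reshapes a model's next-token probabilities. Lower values make the most likely token dominate, and higher values flatten the distribution. A given provider's exact sampling pipeline (for example, temperature combined with top-p) may differ, and the logits below are made-up numbers.

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Turn raw token scores into probabilities, sharpened or flattened by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]
```

With the made-up logits `[2.0, 1.0, 0.0]`, the top token's probability is about 0.67 at temperature 1.0 and about 0.87 at 0.5. This is why lowering the temperature slider makes outputs more focused and repeatable.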

How Does Llama 3 Support Safe Use?

Llama 3 comes with great potential but also a need for safe and ethical use. To help reduce risks, Meta has released several safety tools alongside it.

Code Shield: This tool filters insecure code suggested by Llama at inference time, helping keep unsafe code out of the final product.

Llama Guard 2: It checks text (both prompts and responses) and labels it "safe" or "unsafe" against a taxonomy of harm categories, flagging things like hate speech or violence.

CyberSecEval 2: This benchmark suite measures an LLM's cybersecurity risks, such as susceptibility to prompt injection, willingness to help with cyberattacks, and potential code interpreter misuse.

Torchtune: Meta also offers torchtune, a PyTorch-native library for fine-tuning Llama models memory-efficiently, so teams can adapt the model to their own requirements.
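A classifier like Llama Guard 2 replies with "safe" or "unsafe". When the text is unsafe, it adds a line listing the violated category codes (such as `S1`). Assuming that output format, the sketch below shows how an application might gate a chatbot reply on the classifier's verdict. Running the classifier itself is not shown, and `GuardVerdict`, `parse_guard_output`, and `gate_response` are hypothetical helper names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class GuardVerdict:
    safe: bool
    categories: List[str] = field(default_factory=list)


def parse_guard_output(text: str) -> GuardVerdict:
    """Parse a classifier reply of the assumed form 'safe' or 'unsafe\\nS1,S10'."""
    lines = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty classifier output")
    label = lines[0].lower()
    if label == "safe":
        return GuardVerdict(safe=True)
    if label == "unsafe":
        # The second line, if present, lists the violated category codes.
        cats = lines[1].split(",") if len(lines) > 1 else []
        return GuardVerdict(safe=False, categories=[c.strip() for c in cats if c.strip()])
    raise ValueError(f"unexpected label: {lines[0]!r}")


def gate_response(reply: str, guard_output: str) -> str:
    """Return the model's reply only if the classifier marked it safe."""
    verdict = parse_guard_output(guard_output)
    if verdict.safe:
        return reply
    flagged = ", ".join(verdict.categories) or "unspecified"
    return f"Sorry, I can't help with that. (flagged: {flagged})"
```

Raising an error on an unexpected label, rather than defaulting to "safe", keeps the gate from silently passing content through if the classifier's output format changes.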

Conclusion

Llama 3 is a versatile tool. You can use it for tasks like creative writing, coding, information extraction, and data analysis, following best practices and ensuring data privacy. It works well with different programming languages, frameworks, and APIs. Using Llama 3 through an API is especially convenient: it enables quick integration, reduces costs because you don't need powerful hardware, and removes the need for local deployment and maintenance. Meta also provides safety tools such as Llama Guard 2 and Code Shield to help you deploy it responsibly across platforms. Start using Llama 3 through an API and unlock its full power!

Originally published at Novita AI

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.