Key Highlights

1. Advanced Performance: Llama 3.1 8B pairs a 128K-token context window with strong benchmark scores (69.4 MMLU, 84.5 GSM-8K) and multilingual support in an openly available model.

2. How to access Llama 3.1 8B via API: Novita AI offers an API for Llama 3.1 8B at $0.05 per million tokens for both input and output. Sign up for a free trial and call the API with simple requests.

3. How to access Llama 3.1 8B locally: Running Llama 3.1 8B locally requires at least 16 GB of RAM, an 8-core CPU, and 20 GB of free disk space. A dedicated GPU is recommended but not essential.

4. How to access Llama 3.1 8B online: Try the model on platforms such as HuggingChat, Fireworks AI, Groq, or Cloudflare Playground, which offer free usage after you create an account.

This article provides a practical, technical guide on how to access and utilize Meta’s Llama 3.1 large language model (LLM), with a focus on the 8B parameter model. The Llama 3.1 family includes the 8B, 70B, and 405B parameter versions, with the 8B model being a lightweight, efficient option suitable for various deployment environments.

What is Llama 3.1 8B?

Llama 3.1 8B is a state-of-the-art multilingual large language model developed by Meta. It has 8 billion parameters and is designed for text generation, reasoning, and instruction following, with applications such as long-form summarization and coding assistance.

Key Features

Multilingual capabilities supporting various languages.

Long context window of 128K tokens for processing lengthy texts.

State-of-the-art tool use and strong reasoning capabilities.

Compact design for efficient performance.
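To make the 128K-token context window concrete, here is a minimal sketch of a budget check: the prompt and the requested output must fit in the window together. It assumes "128K" means 131,072 tokens, and the function names are hypothetical. A hosted provider may enforce a lower limit, so treat the constant as an assumption to verify.

```python
# Assumption: "128K" is read as 128 * 1024 = 131,072 tokens.
CONTEXT_WINDOW_TOKENS = 128 * 1024


def fits_in_context(prompt_tokens, max_new_tokens, window=CONTEXT_WINDOW_TOKENS):
    """Return True if the prompt plus the requested output fits in the window."""
    return prompt_tokens + max_new_tokens <= window


def remaining_output_budget(prompt_tokens, window=CONTEXT_WINDOW_TOKENS):
    """Return how many tokens are left for the reply (never negative)."""
    return max(0, window - prompt_tokens)


# A 100,000-token document still leaves room for a 512-token summary.
print(fits_in_context(100_000, 512))
print(remaining_output_budget(100_000))
```

Token counts depend on the tokenizer, so count them with the model's own tokenizer rather than estimating from characters.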

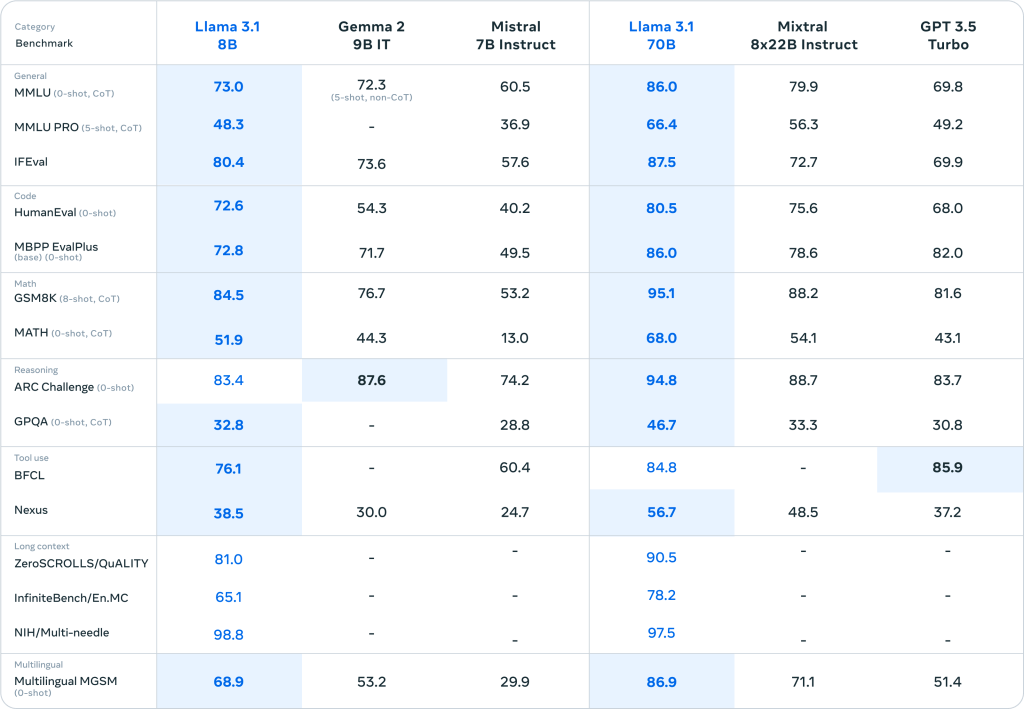

Benchmark

Compared with Other Llama Models

Advantages:

Fast processing speed

Low resource consumption

Lower hardware requirements

Suitable for edge devices and mobile platforms

Disadvantages:

Lower performance compared to 70B and 405B models

Limited functionality

Weaker performance on complex tasks

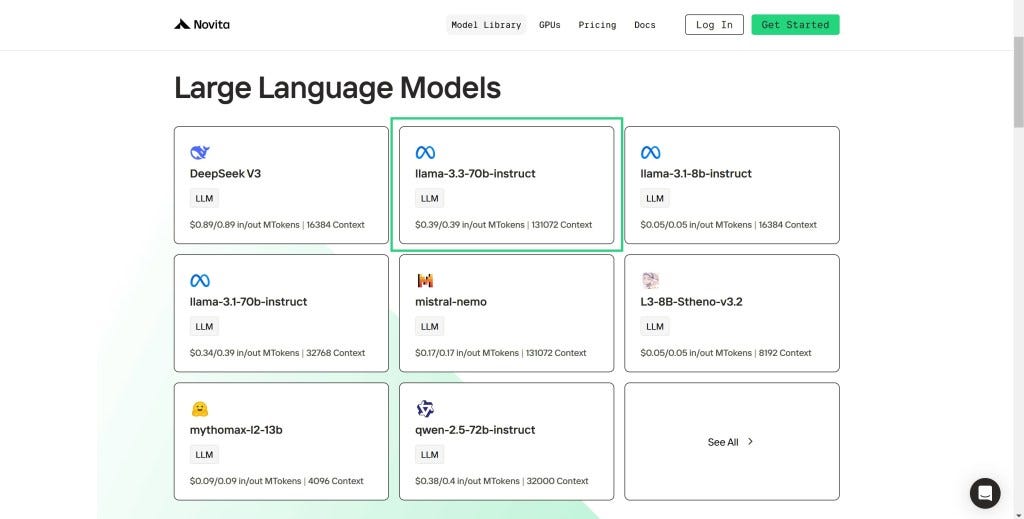

More Llama 3 models are available on Novita AI.

Compared with Other Models

Overall, while Llama 3.1 8B offers strong capabilities and cost advantages, Claude 3.5 Sonnet leads in programming performance and reasoning tasks, making the choice between them dependent on specific user needs and use cases.

If you want to see a more detailed parameter comparison, you can check out this article: Explore Llama 3.1 Paper: An In-Depth Manual

Applications

Ideal for scenarios requiring speed and low resource consumption.

Can be used on edge devices or in environments with limited computational resources.

Effective for various language tasks due to its multilingual capabilities.

How to Access Llama 3.1 8B via API on Cloud Platforms (like Novita AI)

Why choose API?

Easy Access: Developers can tap into Llama 3.1’s features without the need to manage the underlying infrastructure.

Flexibility: The API accommodates a wide range of applications, from chatbots to sentiment analysis.

Performance: A hosted API is designed to keep applications responsive under varying loads, since the provider handles scaling.

By streamlining interactions with Llama 3.1, the LLM API turns it into a versatile tool that any developer can use to integrate advanced language models into their projects.

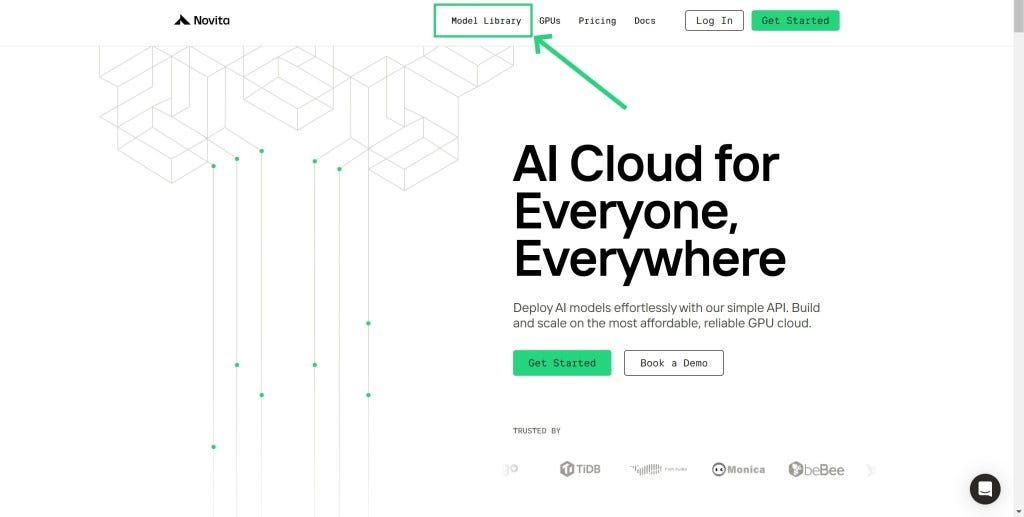

Step-by-Step Guide via Novita AI

Step 1: Log In and Access the Model Library

Log in to your account and click on the Model Library button.

Step 2: Choose Your Model

Browse through the available options and select the model that suits your needs.

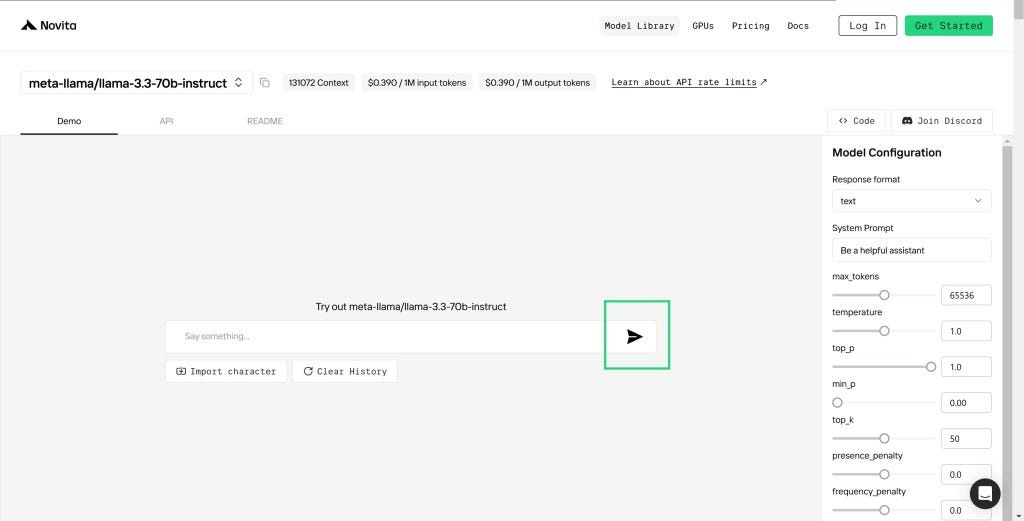

Step 3: Start Your Free Trial

Begin your free trial to explore the capabilities of the selected model.

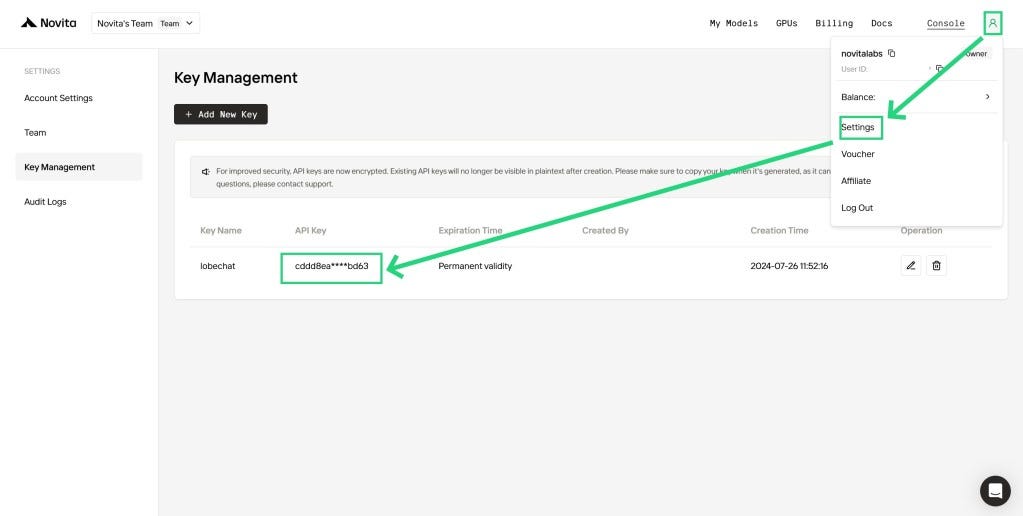

Step 4: Get Your API Key

To authenticate with the API, you need an API key, which Novita AI generates for you. Open the "Settings" page and copy the API key as shown in the image.

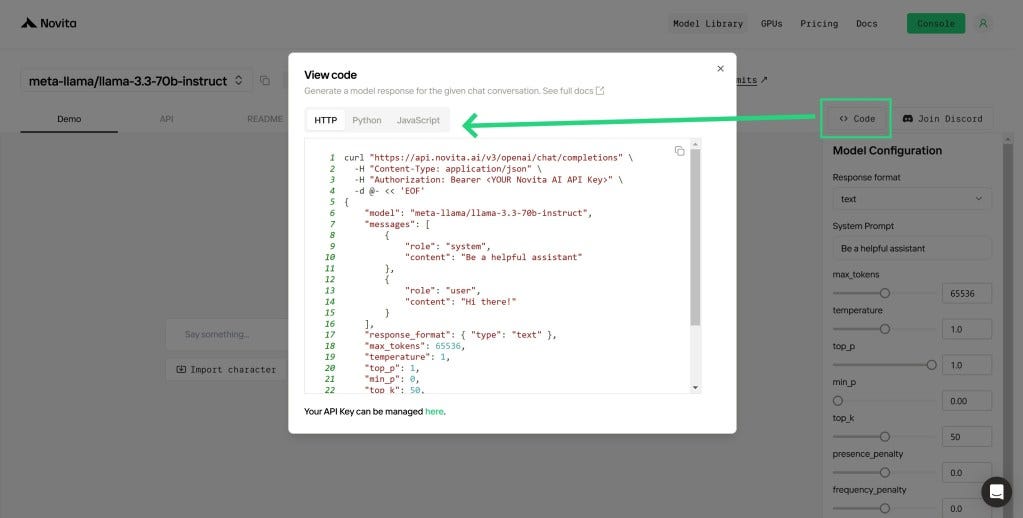

Step 5: Install the API Client

Install the client library using the package manager for your programming language. The Python example below uses the openai package, which you can install with pip install openai.

After installation, import the library in your development environment and initialize the client with your API key to start interacting with the Novita AI LLM API. Here is an example that uses the chat completions API in Python:

    from openai import OpenAI

    # Novita AI exposes an OpenAI-compatible endpoint, so the OpenAI client can be used.
    client = OpenAI(
        base_url="https://api.novita.ai/v3/openai",
        # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
        api_key="<YOUR Novita AI API Key>",
    )

    # Llama 3.1 8B Instruct; confirm the exact model ID in the Model Library.
    model = "meta-llama/llama-3.1-8b-instruct"
    stream = True  # or False
    max_tokens = 512

    chat_completion_res = client.chat.completions.create(
        model=model,
        messages=[
            {
                "role": "system",
                "content": "Act like you are a helpful assistant.",
            },
            {
                "role": "user",
                "content": "Hi there!",
            },
        ],
        stream=stream,
        max_tokens=max_tokens,
    )

    if stream:
        # Print each streamed piece as it arrives, without extra line breaks.
        for chunk in chat_completion_res:
            print(chunk.choices[0].delta.content or "", end="")
    else:
        print(chat_completion_res.choices[0].message.content)
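When streaming, it is often useful to keep the full reply as one string instead of only printing it. The sketch below shows one way to do that. `collect_stream` is a hypothetical helper name, and the fake chunks built with `SimpleNamespace` only imitate the shape of the streamed objects used above (`choices[0].delta.content`) so that the example runs without an API key.

```python
from types import SimpleNamespace


def collect_stream(chunks):
    """Join the text pieces of a streamed chat completion into one string."""
    parts = []
    for chunk in chunks:
        # Some chunks may carry no choices or no text; skip those.
        if not chunk.choices:
            continue
        piece = chunk.choices[0].delta.content
        if piece:
            parts.append(piece)
    return "".join(parts)


def _fake_chunk(text):
    """Build a stand-in object shaped like a streamed chunk (illustration only)."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


demo_chunks = [
    _fake_chunk("Hello"),
    _fake_chunk(", "),
    _fake_chunk(None),  # a chunk without text, as can occur at the end of a stream
    _fake_chunk("world!"),
]
print(collect_stream(demo_chunks))
```

With a real client, you would pass the streamed response object itself, for example `collect_stream(chat_completion_res)` when `stream=True`.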

Upon registration, Novita AI provides a $0.5 credit to get you started!

Once the free credit is used up, you can pay to continue using the API.
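To get a feel for what the pricing means in practice, here is a rough cost sketch. It uses the $0.05 per million tokens rate and the $0.5 sign-up credit quoted in this article. The function name and the request sizes are made up for illustration, and actual billing may differ.

```python
# Figures quoted in this article; check current pricing before relying on them.
PRICE_PER_MILLION_TOKENS_USD = 0.05
SIGNUP_CREDIT_USD = 0.5


def estimate_cost_usd(input_tokens, output_tokens,
                      price_per_million=PRICE_PER_MILLION_TOKENS_USD):
    """Estimate the cost of one request; input and output share the same rate."""
    return (input_tokens + output_tokens) * price_per_million / 1_000_000


# Hypothetical request: a 2,000-token prompt with a 512-token reply.
cost = estimate_cost_usd(2_000, 512)
print(f"Cost per request: ${cost:.7f}")
print(f"Requests covered by the sign-up credit: {int(SIGNUP_CREDIT_USD / cost)}")
```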

How to Access Llama 3.1 8B Locally

Hardware Requirements

16 GB of RAM

8-core CPU

20 GB of free space

A dedicated GPU is not essential but can enhance performance.
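A quick back-of-the-envelope calculation shows where these numbers come from. The sketch below estimates the memory needed for the model weights alone at different precisions, assuming a nominal 8 billion parameters. It ignores activations, the KV cache, and framework overhead, so real usage is higher. The function name is hypothetical.

```python
NOMINAL_PARAMS = 8_000_000_000  # assumption: a round 8 billion parameters


def estimate_weight_memory_gib(num_params, bits_per_param):
    """Return the approximate size of the weights in GiB (weights only)."""
    total_bytes = num_params * bits_per_param / 8
    return total_bytes / (1024 ** 3)


for label, bits in [("bfloat16", 16), ("int8", 8), ("4-bit", 4)]:
    size = estimate_weight_memory_gib(NOMINAL_PARAMS, bits)
    print(f"{label:>8}: ~{size:.1f} GiB")
```

At bfloat16 the weights alone come close to 16 GB, which is why quantized loading (for example with bitsandbytes) is a common choice on machines with limited memory.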

Step-by-Step Installation Guide

Install Python and create a virtual environment.

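Install the required libraries: pip install torch transformers accelerate. The accelerate package is needed for device_map="auto". Optionally, run pip install bitsandbytes for quantized loading on a GPU.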

Install the Hugging Face CLI and log in:

pip install -U "huggingface_hub[cli]"

huggingface-cli login

Request access to Llama 3.1 8B Instruct on its Hugging Face model page. The repository is gated, so downloads work only after access is granted.

Download the model files using the Hugging Face CLI:

huggingface-cli download meta-llama/Llama-3.1-8B-Instruct --include "original/*" --local-dir Llama-3.1-8B-Instruct

Load the model locally using the Hugging Face Transformers library:

    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    model_id = "meta-llama/Llama-3.1-8B-Instruct"

    # device_map="auto" places the weights on the available GPU(s) or CPU;
    # bfloat16 halves memory use compared with float32.
    model = AutoModelForCausalLM.from_pretrained(
        model_id, device_map="auto", torch_dtype=torch.bfloat16
    )
    tokenizer = AutoTokenizer.from_pretrained(model_id)

Run inference using the loaded model and tokenizer.
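The inference step can be sketched as follows. This is a minimal example rather than a tuned setup: `build_chat` and `generate_reply` are hypothetical helper names, and `model` and `tokenizer` are assumed to be the objects loaded in the previous step. It uses the tokenizer's chat template to format the conversation and then decodes only the newly generated tokens.

```python
def build_chat(system_prompt, user_prompt):
    """Return a conversation in the role/content format used by chat templates."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


def generate_reply(model, tokenizer, messages, max_new_tokens=256):
    """Generate one assistant reply for the given messages."""
    # Format the conversation with the model's chat template and tokenize it.
    inputs = tokenizer.apply_chat_template(
        messages,
        add_generation_prompt=True,
        return_tensors="pt",
        return_dict=True,
    ).to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens so that only the new text is decoded.
    prompt_length = inputs["input_ids"].shape[-1]
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)


messages = build_chat("Act like you are a helpful assistant.", "Hi there!")
# With the model and tokenizer loaded as shown earlier:
# print(generate_reply(model, tokenizer, messages))
```

Generation settings such as sampling temperature are left at their defaults here; adjust them to suit your task.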

How to Access Llama 3.1 8B Online

You can access Llama 3.1 8B through several online platforms:

Novita AI LLM Playground: Offers an affordable, reliable, and simple inference platform with scalable LLM APIs.

HuggingChat: Free access after creating an account on Hugging Face.

Fireworks AI: Try out models using an API without cost.

Groq: Offers fast inference speeds with Llama 3.1 models.

Cloudflare Playground: Provides access to various text-generation models.

Which Methods Are Suitable for You?

Conclusion

In conclusion, accessing Llama 3.1 offers various options tailored to different user needs.

API access is ideal for developers seeking cost-effective integration and flexibility for fine-tuning models without heavy hardware investments.

Local access provides researchers and developers with complete control and customization, suitable for those who prioritize privacy and data security.

Online access is best for casual users looking for quick and easy interaction with the model without technical barriers.

Each method has its strengths, allowing users to choose the most appropriate approach based on their specific requirements and resources.

Frequently Asked Questions

What is the main difference between Llama 3.1 8B and 405B?

The 405B model is larger and more powerful but requires significantly more computational resources than the efficient 8B model.

Is Llama 3.1 8B open source?

Its weights are openly available under the Llama 3.1 Community License Agreement, which permits research and commercial use subject to the license terms.

Does Llama 3.1 support multiple languages?

Yes, it supports several languages including English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.
