Explore the top open-source Large Language Model (LLM) APIs for developers, including ChatGPT, LLAMA, PaLM, BERT, BLOOM, and novita.ai. Discover their unique features, applications, and how to choose the right API for your project based on model size, task suitability, and cost. Ideal for AI developers and tech enthusiasts interested in advancing natural language processing capabilities.

Introduction & Background

With the increasing popularity of LLMs, there is a growing demand for open-source APIs that allow developers to easily integrate these models into their projects.

APIs for large language models (LLMs) are transforming our approach to language processing. Utilizing advanced deep learning and machine learning techniques, these APIs offer remarkable natural language understanding. Developers now have the tools to build applications that interpret and interact with text in innovative ways.

This detailed article seeks to shed light on the unique characteristics, installation procedures, and user-friendliness of each open-source API. It examines six of the most popular and extensively used APIs for large language models, highlighting their varied features and their roles in natural language processing (NLP). This analysis serves as an invaluable guide for those exploring the rapidly evolving field of language processing technologies.

6 Open-Source Large Language Model APIs for Developers

1. ChatGPT API

Undoubtedly, ChatGPT (Chat Generative Pre-trained Transformer) is one of the largest and most powerful large language models in the world, drawing millions of users. The ChatGPT API, developed by OpenAI, lets developers call GPT-3.5 and GPT-4 models from their own applications, for example from Python, to build sophisticated conversational interfaces.

ChatGPT is celebrated for its ability to produce text with human-like quality, translate languages, write programming code, craft various forms of creative content, and provide informative answers to questions.

To incorporate ChatGPT into an application, you call the ChatGPT API from your chosen programming language. This brings ChatGPT's text-generation capabilities into your own code.

The exact steps and necessary libraries may differ based on the programming language utilized, but generally, the integration process includes the following steps:

Create an API Key: Start by generating a secret API key, which authenticates your requests to the API. You can create your API key on OpenAI's platform website.

Install the OpenAI Library: Use the package manager for your programming language to install the OpenAI client library (for Python, pip install openai). This library contains the classes and functions needed to call the API.

Import and Configure the Client: After installing the library, import the client class into your application and configure it with your secret API key, ideally read from an environment variable rather than hard-coded. You can then start sending requests from your application.
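As a minimal sketch of these steps in Python, assuming the official `openai` package (1.x client interface) is installed and your key is stored in an `OPENAI_API_KEY` environment variable, a request looks roughly like this. The helper names `build_messages` and `ask_chatgpt` and the default model choice are illustrative, not part of OpenAI's library.

```python
import os
from typing import Optional


def build_messages(user_prompt: str, system_prompt: Optional[str] = None) -> list:
    """Assemble the role-tagged message list that the Chat Completions endpoint expects."""
    messages = []
    if system_prompt:
        # An optional system message steers the assistant's overall behavior
        messages.append({"role": "system", "content": system_prompt})
    messages.append({"role": "user", "content": user_prompt})
    return messages


def ask_chatgpt(user_prompt: str, system_prompt: Optional[str] = None,
                model: str = "gpt-3.5-turbo") -> str:
    """Send one chat request and return the reply text (hypothetical helper)."""
    # Imported here so build_messages works even without the openai package installed
    from openai import OpenAI

    # Read the secret key from the environment instead of hard-coding it
    client = OpenAI(api_key=os.environ["OPENAI_API_KEY"])
    response = client.chat.completions.create(
        model=model,
        messages=build_messages(user_prompt, system_prompt),
    )
    return response.choices[0].message.content
```

Calling `ask_chatgpt("Summarize what an API key is in one sentence.")` would then return the model's reply as a string. Keeping message assembly separate from the network call makes the request easy to inspect and test.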

Key features of ChatGPT include:

Large and Powerful Model: ChatGPT stands out as one of the largest LLMs available, equipping it to handle a broad spectrum of tasks and produce high-quality results.

Versatility: Suitable for various NLP tasks such as text generation, translation, question answering, and code generation, ChatGPT is a versatile tool applicable across diverse fields.

Reinforcement Learning from Human Feedback (RLHF): ChatGPT is fine-tuned with human feedback, in which human reviewers rank model responses and the model is optimized toward the preferred ones. This makes its answers more helpful and better aligned with what users actually ask for.

Chain-of-Thought Reasoning: When prompted to work step by step, ChatGPT can break complex problems into simpler, manageable parts. This chain-of-thought behavior improves its handling of open-ended questions, lets it explain its reasoning, and helps it generate coherent and relevant text.

Decoder-Only Transformer Architecture: The GPT models behind ChatGPT use a decoder-only transformer architecture, which generates text one token at a time while attending to all preceding tokens. This attention mechanism captures long-range dependencies in language, enabling more natural and fluent text.

2. LLAMA

LLAMA (Large Language Model Meta AI) is a family of large language models developed by Meta AI. Rather than a hosted API, LLAMA is distributed as model weights, originally in 7B, 13B, 33B, and 65B parameter configurations. The first release was licensed for research use only, while LLAMA 2 also permits commercial use under Meta's license terms. It's designed to democratize access to large language models by reducing the computing power and resources needed for training and deployment.

LLAMA is adept at performing a range of tasks such as text generation, translation, question answering, and code generation. The latest iteration, LLAMA 2, stands out even more, having been trained on 40% more data than its predecessor and boasting twice the context length, enhancing its capabilities further.

Because its weights are openly available, LLAMA can be integrated from various programming languages through community libraries. Pre-trained models are available once you accept Meta's license, so users can start experimenting without training a model from scratch.

Here is how to use it:

Install a Library: Install a library that can load LLAMA models, such as Hugging Face Transformers (pip install transformers), using the package manager for your programming language. This is often the simplest way to get started.

Download the Model: Request access on Meta's official website and download the LLAMA weights you need. This lets you pick a specific model size or variant (for example, the chat-tuned version).

Import Classes and Specify Model Path: Once the LLAMA model is installed or loaded, import the necessary classes into your development environment and specify the path to the model. This sets up the model for use in your application.

Process Input Text: LLAMA accepts text prompts, so plain text, HTML, or JSON content can all be passed to it as text. Choose the format that best suits your data and use case.

Generate Output Text: Finally, use the model to generate output text. The output is text as well, and you can prompt the model to produce plain text, HTML, or JSON so the generated content fits seamlessly into different parts of your application or workflow.
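The steps above can be sketched in Python as follows, assuming Hugging Face `transformers` and `torch` are installed and you have been granted access to Meta's gated Llama 2 chat weights (the default path `meta-llama/Llama-2-7b-chat-hf` can be replaced with a local directory). The prompt helper reproduces the `[INST]`/`<<SYS>>` template used by the Llama 2 chat models; the function names are illustrative only.

```python
from typing import Optional

# Markers from Meta's Llama 2 chat template
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def format_llama2_chat(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Wrap a single user turn in the Llama 2 chat template.

    The BOS token (<s>) is omitted because the tokenizer normally adds it.
    """
    content = user_message.strip()
    if system_prompt:
        content = B_SYS + system_prompt.strip() + E_SYS + content
    return f"{B_INST} {content} {E_INST}"


def generate(prompt: str, model_path: str = "meta-llama/Llama-2-7b-chat-hf",
             max_new_tokens: int = 128) -> str:
    """Generate a reply with a locally loaded LLAMA model (hypothetical helper)."""
    # Lazy import: requires transformers + torch and access to the weights
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_path)
    model = AutoModelForCausalLM.from_pretrained(model_path)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the echoed prompt
    new_tokens = output_ids[0][inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

For example, `generate(format_llama2_chat("Reply only with JSON: list three colors.", "You are concise."))` asks the chat model for JSON output, matching the output-format step above.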

Key features of LLAMA include:

Large and Powerful: As one of the largest open-source LLMs available, LLAMA has the capacity to handle a broad spectrum of tasks, consistently delivering high-quality output. Its substantial size and sophistication enable it to perform complex language processing tasks effectively.

Versatile: LLAMA is equipped to manage a diverse array of NLP tasks. These include text generation, translation, question answering, code generation, creative writing, and code completion. Such versatility makes it an indispensable resource for various applications, appealing to users from different technological fields.

Open-Source: LLAMA's weights are free to download, subject to Meta's license terms. This openness is particularly beneficial for developers and researchers who wish to explore and innovate within the realm of large language models without paying per-request fees. It encourages widespread experimentation and adoption across the tech community.

3. PaLM API

PaLM (Pathways Language Model) is a revolutionary large language model introduced by Google AI in early 2022. The PaLM API, along with MakerSuite, provides developers with a streamlined and efficient way to harness Google’s advanced language models for crafting innovative AI applications.

Boasting an impressive 540 billion parameters, PaLM is among the largest and most formidable language models ever developed. This vast capacity empowers PaLM to excel at various natural language processing (NLP) tasks. Its ability to quickly process complex prompts makes it particularly adept at handling intricate and multifaceted language challenges, setting a new standard in the field of AI-driven language processing.

Below is a step-by-step guide tailored for Python users:

1. Obtain an API Key: First, you need to secure an API key, which is required to authenticate with the API and access its capabilities. You can generate this key in MakerSuite.

2. Installation via pip: Google's Python client for the PaLM API is the google-generativeai package. Install it by running the following command in your terminal or command prompt:

```bash
pip install google-generativeai
```

Note that Google has since deprecated the PaLM API in favor of the Gemini API, so newer releases of this package may no longer support the PaLM calls shown here.

3. Import Libraries and Configure API Key: After installation, import the library into your Python script and configure it with your API key. This step is crucial for setting up your environment to interact with PaLM. Here's an example of how you might do it:

```python
import google.generativeai as palm

palm.configure(api_key='your_api_key_here')
```

4. Using PaLM to Generate Content: With the setup complete, you can now use PaLM to generate text for various applications. For instance, if you want to create a short story for your blog, you can prompt PaLM with a specific scenario or theme and let it generate a creative piece. Example usage might look like this:

```python
completion = palm.generate_text(
    model="models/text-bison-001",  # PaLM 2 text model
    prompt="Write a short story about a lost young dragon trying to find its way home.",
    max_output_tokens=500,
)

print(completion.result)  # text of the top candidate
```

Key features of PaLM API include:

Multilingual Translation: PaLM excels in translating text between a broad spectrum of languages while preserving the original text’s nuances and context. It can adapt its translation style to accommodate the cultural and linguistic differences of various languages, ensuring more natural and accurate translations.

Domain-Specific Translation: The model can be fine-tuned to cater to specific domains, such as legal or medical translation. This fine-tuning enhances the accuracy and relevance of translations in these specialized areas, making PaLM particularly valuable for professional applications where precision is critical.

Reasoning and Inference: PaLM is capable of chain-of-thought reasoning and inference. This ability allows it to understand the context and implications of questions better, leading to more accurate and relevant responses. This feature is particularly useful in scenarios that require a deep understanding of content and context, such as academic research or complex problem solving.

4. BERT API

BERT (Bidirectional Encoder Representations from Transformers) is a groundbreaking Natural Language Processing (NLP) model developed by Google. As an encoder-only model, it is designed for language-understanding tasks such as text classification, named entity recognition, and question answering, rather than for generating text.

To increase BERT’s accessibility to developers, Google has released pre-trained models integrated with the TensorFlow library. These pre-trained models are available in various sizes, allowing developers to select the model that best suits their specific needs and available computational resources.

How to install and use BERT API in Python:

1. Install the Transformers Library: Use pip to install the Transformers library. This library provides access to BERT and other NLP models and tools:

```bash
pip install transformers
```

2. Import Necessary Classes: Import the classes from the Transformers library that will allow you to tokenize input text and perform sequence classification:

```python
from transformers import AutoTokenizer, AutoModelForSequenceClassification
```

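3. Load the Pre-trained BERT Model: Choose and load the BERT model you wish to use. For example, use the "bert-base-uncased" model, which is a commonly used version. Note that this base checkpoint has no trained classification head, so its predictions are only meaningful after fine-tuning, or if you load a checkpoint that has already been fine-tuned for classification.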

4. Prepare Input Text: Encode your input text into tokens using the tokenizer. This conversion is necessary as BERT models process numerical token representations.

5. Perform a BERT Task (e.g., Text Classification): Feed the encoded text to the model to perform a specific task like text classification. Capture the predictions from the model:

```python
predictions = model(**encoded_text)
# The model returns logits; argmax gives the index of the predicted class
predicted_label = predictions.logits[0].argmax()
```

6. Output the Prediction: Print the predicted label. This label represents the class to which the input text has been assigned by the model.
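Putting the six steps together, here is a minimal end-to-end sketch, assuming `transformers` and `torch` are installed. The helper names `top_label` and `classify` are illustrative; the argmax step is written in plain Python so it can be checked without downloading a model. With plain `bert-base-uncased`, the returned label (`LABEL_0` or `LABEL_1`) comes from an untrained head, so substitute a fine-tuned classification checkpoint for real use.

```python
def top_label(logits, id2label):
    """Return the label whose logit is largest (the argmax step)."""
    best_index = max(range(len(logits)), key=lambda i: logits[i])
    return id2label[best_index]


def classify(text: str, model_name: str = "bert-base-uncased") -> str:
    """Tokenize, run the model, and map the top logit to a label (hypothetical helper)."""
    # Lazy imports: requires transformers and torch
    import torch
    from transformers import AutoModelForSequenceClassification, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSequenceClassification.from_pretrained(model_name)
    model.eval()  # disable dropout for inference

    # Step 4: convert text into token IDs and an attention mask
    encoded_text = tokenizer(text, return_tensors="pt", truncation=True)
    # Step 5: forward pass without tracking gradients
    with torch.no_grad():
        predictions = model(**encoded_text)
    logits = predictions.logits[0].tolist()
    # Step 6: the model config maps class indices to label names
    return top_label(logits, model.config.id2label)
```

Calling `print(classify("This library is easy to use."))` prints the predicted label name.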

Key features of BERT include:

1. Pre-training Techniques:

Masked Language Model (MLM): During its pre-training, BERT uses a technique where some of the words in a sentence are randomly masked, and the model learns to predict the original value of the masked words based only on their context.

Next Sentence Prediction (NSP): BERT also learns to predict whether two segments of text appear consecutively in the original document. This helps the model understand the relationship and flow between consecutive sentences.

2. Achievements in NLP: BERT has set new standards in NLP, achieving state-of-the-art results on numerous benchmarks and competitions. Its ability to grasp complex contextual nuances in text has made it a top choice for a variety of NLP tasks.

3. Multilingual Support: BERT has been extended to support multiple languages (for example, multilingual BERT), broadening its usability beyond English to tasks like cross-lingual text classification and information retrieval. This makes BERT effective in a global context, catering to a diverse range of linguistic needs.

4. Fine-Tuning for Specific Tasks: Designed for adaptability, BERT can be fine-tuned on a relatively small amount of data for various specific tasks. These tasks include text classification, named entity recognition, and question answering. This transfer learning approach enables BERT to apply its pre-trained knowledge effectively across different domains and challenges.
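To make the Masked Language Model objective described above concrete, here is a minimal pure-Python sketch of BERT-style input masking. It follows the recipe from the original BERT paper (select about 15% of tokens as prediction targets; replace 80% of those with [MASK], 10% with a random token, and leave 10% unchanged), but it simplifies by working on whitespace-split words instead of WordPiece tokens. The function `mask_tokens` and its parameters are illustrative, not part of any library.

```python
import random

MASK = "[MASK]"


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=None):
    """Apply BERT-style masking to a list of tokens.

    Returns the corrupted token list and a dict {position: original token}
    holding the targets the model would be trained to predict.
    """
    rng = random.Random(seed)
    corrupted = list(tokens)
    targets = {}
    for i, token in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # this position is not a prediction target
        targets[i] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK  # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, targets
```

Keeping some selected tokens unchanged or randomly replaced means the model cannot rely on seeing [MASK] to know where to predict, which matters because [MASK] never appears during fine-tuning.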

5. BLOOM API

BLOOM (BigScience Large Open-science Open-access Multilingual Language Model) is a significant advancement in the field of natural language processing (NLP). Developed by BigScience and built on the transformer architecture, BLOOM is a robust, 176-billion-parameter language model designed for a wide range of linguistic tasks.

Here's a step-by-step guide to setting up BLOOM with the Hugging Face Transformers library (install it first with pip install transformers):

- Import the necessary classes from the Transformers library in your Python script. Because BLOOM is a text-generation model, use the causal language modeling classes:

```python
from transformers import AutoTokenizer, AutoModelForCausalLM
```

- Specify the pre-trained BLOOM checkpoint you want to use. BigScience publishes several sizes, from bigscience/bloom-560m up to the full 176B-parameter bigscience/bloom; the smaller checkpoints are far easier to run on a single machine.

```python
# Smallest checkpoint; "bigscience/bloom" is the full 176B-parameter model
model_name = "bigscience/bloom-560m"
```

- Download the pre-trained BLOOM model from the Hugging Face model hub. This will download the model's weights and configuration files.

```python
tokenizer = AutoTokenizer.from_pretrained(model_name)
model = AutoModelForCausalLM.from_pretrained(model_name)
```
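A minimal generation sketch follows, assuming `transformers` and `torch` are installed. Because BLOOM is a causal (text-generation) model, it loads through `AutoModelForCausalLM`. The helper `weight_memory_gb` is a hypothetical back-of-the-envelope estimate of the memory needed for the weights alone (parameters × bytes per parameter), which shows why checkpoint size matters.

```python
def weight_memory_gb(num_params: float, bytes_per_param: int = 2) -> float:
    """Rough size of the weights alone in GB (fp16/bf16 uses 2 bytes per parameter)."""
    return num_params * bytes_per_param / 1e9


def generate(prompt: str, model_name: str = "bigscience/bloom-560m",
             max_new_tokens: int = 40) -> str:
    """Continue a prompt with a BLOOM checkpoint (hypothetical helper; downloads on first use)."""
    # Lazy import so weight_memory_gb works without transformers installed
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForCausalLM.from_pretrained(model_name)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

By this estimate, the full 176B model needs about 352 GB just for 16-bit weights, versus roughly 1.1 GB for bloom-560m, before counting activations. That is why the smaller checkpoints are the practical choice for local experiments.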

Key Features of Bloom LLM API

Summarization Capability: The Bloom LLM efficiently condenses extensive texts, preserving key details while ensuring clarity and coherence.

Code Completion Proficiency: Bloom LLM enhances coding productivity by offering pertinent code suggestions in response to incomplete snippets, thereby minimizing errors.

Debugging Support: Bloom LLM aids developers in pinpointing and fixing coding mistakes, streamlining the debugging process and enhancing code quality.

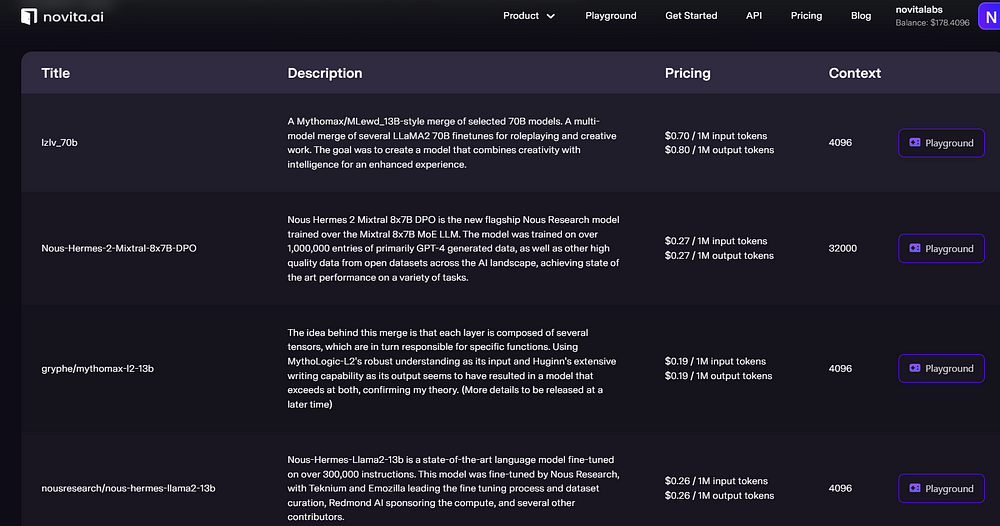

6. novita.ai LLM API

The novita.ai large language model API, known as Chat-Completion, is another groundbreaking advancement in the field of NLP. The Novita AI LLM inference engine offers uncensored, unrestricted conversations.

Here is a step-by-step guide to installing the Novita AI LLM API:

1. API Key Acquisition: Begin by obtaining an API key from Novita AI. This key is essential for authentication and will allow you to access the API's features.

2. Installation via Package Manager: Install the Novita AI API using the package manager specific to your programming language. For Python users, this might involve a simple command like:

```bash
pip install novitaai
```

3. Importing Libraries and Configuring the API Key: After installation, import the necessary libraries into your development environment and initialize the API with your API key to start interacting with Novita AI LLM (check Novita AI's documentation for the exact package and class names):

```python
from novitaai import NovitaLLM

novita = NovitaLLM(api_key='your_api_key_here')
```

4. Utilizing the API: You can now use the Novita AI LLM API to perform various NLP tasks.
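As a dependency-free sketch of calling a chat-completion endpoint, the code below builds an OpenAI-style request with Python's standard library. It assumes Novita AI exposes an OpenAI-compatible endpoint at `https://api.novita.ai/v3/openai`; that base URL, the payload shape, and the helper names are assumptions to verify against Novita AI's current documentation, and `model` must be an ID from their model catalog.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible base URL; confirm in Novita AI's docs before use
NOVITA_BASE_URL = "https://api.novita.ai/v3/openai"


def build_chat_request(api_key: str, model: str, prompt: str,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{NOVITA_BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text."""
    with urllib.request.urlopen(request, timeout=60) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]
```

Separating request construction from sending lets you inspect or log the exact payload before spending tokens; `send(build_chat_request(key, model_id, "Hello"))` performs the actual call.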

Key Features of novita.ai LLM API

NSFW-Friendly: Novita AI LLM Chat APIs empower your conversations on any topic of your choice, whether they are NSFW, uncensored or rule-free.

Cost-Effective: Transparent, low-cost pricing: $0.20 per million tokens, with no hidden fees or escalating costs.

Auto Scaling: Fast-scaling infrastructure; model updates and data scaling can be performed offline.

Stable and Reliable: Low latency of under 2 seconds and a fast network that keeps private information protected.

How to choose your own LLM API

When choosing an LLM API, there are several key factors to consider, including:

Model Size: The size of an LLM is crucial as it influences the model's capability and efficiency. Larger models tend to be more powerful, offering a broader range of functionalities, but they require more resources to operate and can be slower and costlier.

Task Suitability: When selecting an LLM API, it’s essential to ensure it supports the specific tasks you intend to use it for. Some APIs are tailored for particular applications like text generation or translation, while others may be more versatile.

Cost Considerations: The pricing of LLM APIs can vary significantly. Some may be freely available, whereas others might charge per use or on a subscription basis. Choosing an API that aligns with your financial constraints is vital.

Documentation and Support: Opting for an LLM that comes with comprehensive documentation and robust support is beneficial, as these resources are invaluable for troubleshooting and guidance when issues arise.
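To compare pricing concretely, a small cost estimate helps. This is a minimal sketch under simple per-token pricing; the function name is illustrative, and it allows separate input and output prices because some providers charge them differently.

```python
from typing import Optional


def estimate_cost_usd(input_tokens: int, output_tokens: int,
                      input_price_per_m: float,
                      output_price_per_m: Optional[float] = None) -> float:
    """Estimate cost in USD given prices per million tokens.

    If no separate output price is given, output tokens are billed
    at the input price (flat per-token pricing).
    """
    if output_price_per_m is None:
        output_price_per_m = input_price_per_m
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000
```

For example, at the flat $0.20 per million tokens quoted for novita.ai above, a workload of 4 million input tokens and 1 million output tokens comes to `estimate_cost_usd(4_000_000, 1_000_000, 0.20)`, or about $1.00.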

Conclusion

As the landscape of natural language processing rapidly advances, the diverse array of open-source large language model (LLM) APIs, such as ChatGPT, LLAMA, PaLM, BERT, BLOOM, and novita.ai, provides developers with powerful, adaptable tools tailored for a variety of NLP tasks. Each of these APIs offers unique capabilities, including multilingual translation, domain-specific tuning, and innovative integration features, making them invaluable for both complex AI development and experimental applications. When selecting an LLM API, developers should carefully consider model size, specific task support, cost, and the availability of comprehensive documentation and support to ensure the tool meets their project requirements and budget constraints.

Originally published at novita.ai

novita.ai, the one-stop platform for limitless creativity, gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while building your own products. Try it for free.