Key Highlights

Llama 3.3 70B is Meta’s advanced multilingual language model with 70 billion parameters, offering robust performance in text generation, reasoning, and translation tasks across eight officially supported languages.

API access offers developers a scalable and cost-effective way to integrate Llama 3.3 70B, eliminating the need for expensive local infrastructure while providing standardized interfaces for easy implementation and maintenance.

Novita AI offers an API for Llama 3.3 70B at just $0.04 per million tokens for both input and output. Sign up for a free trial and start calling the API with simple requests.

Meta’s Llama 3.3 70B model represents a significant advancement in large language models (LLMs), offering enhanced capabilities for various natural language processing tasks. This article explores the Llama 3.3 70B model, its features, performance, and how to access it effectively through an Application Programming Interface (API). Understanding the nuances of API access, particularly concerning cost and technical requirements, is essential for developers and businesses looking to leverage this powerful technology.

What is Llama 3.3 70B?

The Llama 3.3 70B is a multilingual large language model with 70 billion parameters. It is a generative model that has been pre-trained and instruction-tuned, designed to handle text-in and text-out tasks. Meta has optimized the model for multilingual dialogue, demonstrating strong performance against both open-source and closed-source models.

Key Features

Model Architecture: Llama 3.3 utilizes an optimized transformer architecture and operates as an auto-regressive language model.

Context Window Size: The model has a context window of 131,072 tokens, which lets it handle longer conversations and larger documents in a single request.

Supported Languages: It officially supports English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai. Although trained on a broader range of languages, using unsupported languages may necessitate fine-tuning.
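The sketch below makes "auto-regressive" concrete. The model produces one token at a time, and each prediction is conditioned on every token generated so far. As a result, the prompt plus the generated tokens must fit inside the 131,072-token context window. This is a toy illustration under stated assumptions: token IDs are plain integers, selection is greedy, and `toy_next_token` is a hypothetical stand-in for the real network. It is not Meta's implementation.

```python
from typing import Callable, List

# Context window reported for Llama 3.3 70B.
CONTEXT_WINDOW = 131_072


def generate(
    next_token: Callable[[List[int]], int],
    prompt: List[int],
    max_new_tokens: int,
    eos_id: int,
    context_window: int = CONTEXT_WINDOW,
) -> List[int]:
    """Greedy auto-regressive decoding: predict one token from the whole
    sequence so far, append it, and repeat."""
    if len(prompt) > context_window:
        raise ValueError("prompt is longer than the context window")
    tokens = list(prompt)
    generated: List[int] = []
    for _ in range(max_new_tokens):
        if len(tokens) >= context_window:
            break  # no room left: prompt + output must share the window
        tok = next_token(tokens)  # the model sees every token so far
        if tok == eos_id:
            break  # end-of-sequence token stops generation
        tokens.append(tok)  # the new token becomes input for the next step
        generated.append(tok)
    return generated


# Hypothetical toy "model": returns previous token + 1, then EOS (0) after 5.
def toy_next_token(tokens: List[int]) -> int:
    return 0 if tokens[-1] >= 5 else tokens[-1] + 1


print(generate(toy_next_token, [1, 2], max_new_tokens=10, eos_id=0))
```

Setting `context_window` to a small value shows generation stopping early once prompt and output fill the window, which is the same limit a real request runs into.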

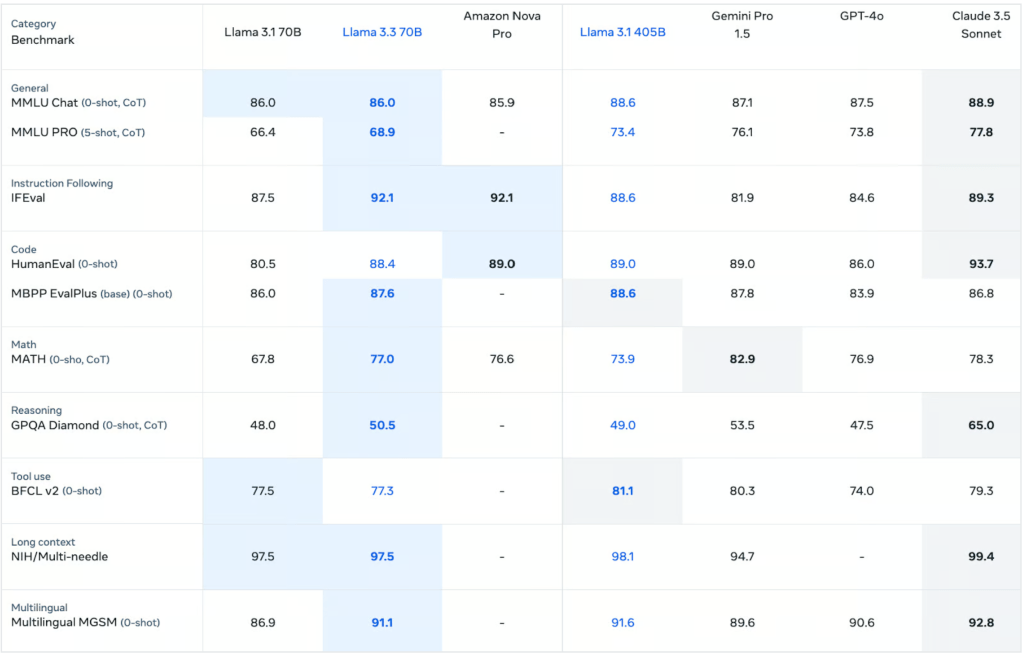

Benchmark


Compared with Other Llama Models

Llama 3.2 3B: This smaller model with only 3 billion parameters is less capable of handling complex tasks but may be more efficient for simpler applications where resource constraints are a consideration.

Llama 3.1 405B: The Llama 3.3 70B provides similar performance to the Llama 3.1 405B model while being smaller in size and incurring reduced computational costs.

Llama 3.1 70B: The Llama 3.3 70B exhibits performance improvements in benchmarks such as MMLU (CoT), MATH (CoT), and HumanEval compared to the Llama 3.1 70B.

Llama 3 70B: The same size as Llama 3.3 70B and still a strong performer, but it lacks the newer model's optimizations, including its much longer context window (Llama 3 70B supports 8,192 tokens).

Compared with Other Models

- Llama 3.3 70B stands out in instruction following (IFEval) and performs well in coding (HumanEval and MBPP EvalPlus). GPT-4o does well on general knowledge (MMLU Chat and MMLU PRO) and tool use (BFCL v2), but trails on some reasoning and coding tasks. Claude 3.5 Sonnet leads in most categories, especially coding (HumanEval), reasoning (GPQA Diamond), and multilingual math (Multilingual MGSM).

Applications

Use Cases: Llama 3.3 is suitable for various applications including:

Multilingual dialogue systems

Assistant-like chat interfaces

Natural language generation tasks

Code generation

Content creation

Sentiment analysis

Industry Applications:

Customer service automation

Content creation for marketing and media

Educational tools

AI-powered research assistance

Limitations: Output quality may be less reliable in languages beyond the eight officially supported ones, and using those languages may require fine-tuning. The model is also subject to the Llama 3.3 Acceptable Use Policy, which prohibits illegal or harmful uses.

Understanding API Access

What is an API?

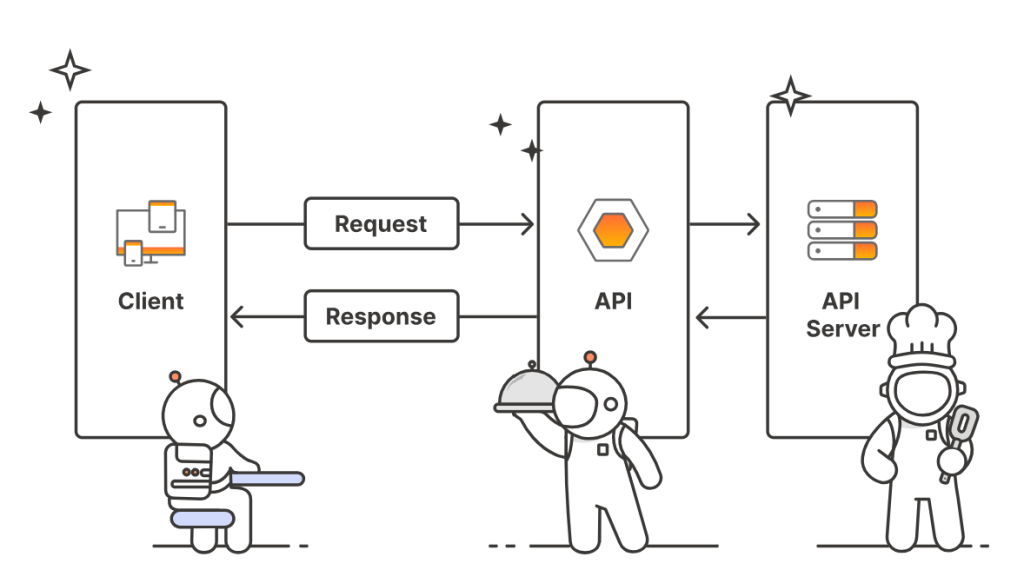

An API (Application Programming Interface) is a set of rules, protocols, and tools that facilitate communication between different software applications, acting as a bridge to enable data sharing and functionality.

APIs operate through a request-response cycle where the client sends a request to the server via the API, and the server responds accordingly.

Utilizing an API allows users to leverage application functionality without needing to understand its underlying implementation.
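Here is a minimal sketch of the request-response cycle using only Python's standard library. It assumes that Novita AI's OpenAI-compatible endpoint (the base URL used in the Python example later in this article) accepts a POST to `/chat/completions` with a Bearer token, which is the shape of request the OpenAI SDK sends. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible base URL, so chat completions live at
# BASE_URL + "/chat/completions".
BASE_URL = "https://api.novita.ai/v3/openai"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "meta-llama/llama-3.3-70b-instruct",
                       max_tokens: int = 512) -> urllib.request.Request:
    """Client side of the cycle: package the request for the server."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",  # identifies the caller
            "Content-Type": "application/json",
        },
        method="POST",
    )


def send(req: urllib.request.Request) -> str:
    """Send the request and return the text from the server's JSON reply."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

With a real key, `send(build_chat_request(key, "Hi there!"))` would perform one full round trip. The caller only sees the request and the response, never the model's implementation.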

Benefits of Using an API

Scalability: APIs allow applications to scale by accessing remote resources as needed.

Cost Efficiency: They reduce the need for expensive local infrastructure.

Maintenance: Maintenance responsibilities lie with the API provider rather than the user.

Security: APIs offer secure access without exposing underlying systems.

Integration Ease: They provide standardized interfaces for easy integration into existing applications.

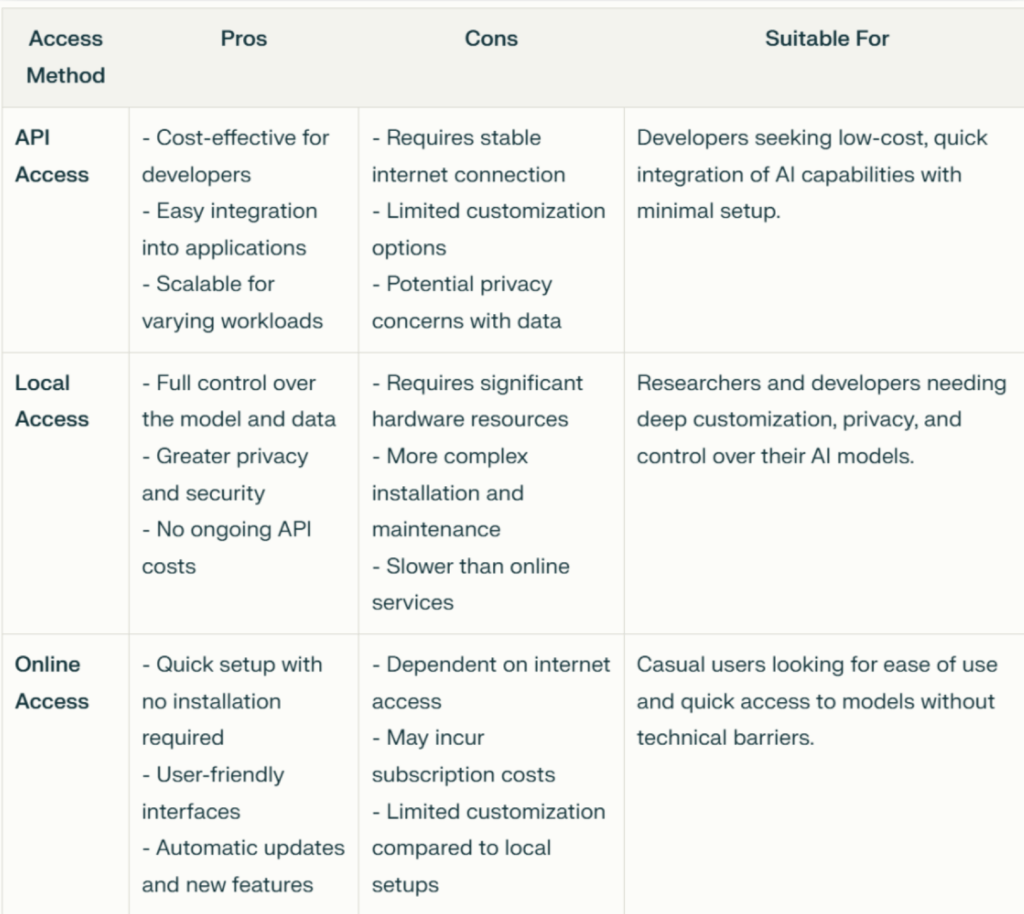

Comparison of Access Methods

There are several ways to access Llama 3.3, each suited to different needs.

API access is ideal for developers who want cost-effective integration and flexibility (including fine-tuning, where the provider supports it) without heavy hardware investment.

Local access provides researchers and developers with complete control and customization, suitable for those who prioritize privacy and data security.

Online access is best for casual users looking for quick and easy interaction with the model without technical barriers.

Each method has its strengths, allowing users to choose the most appropriate approach based on their specific requirements and resources.

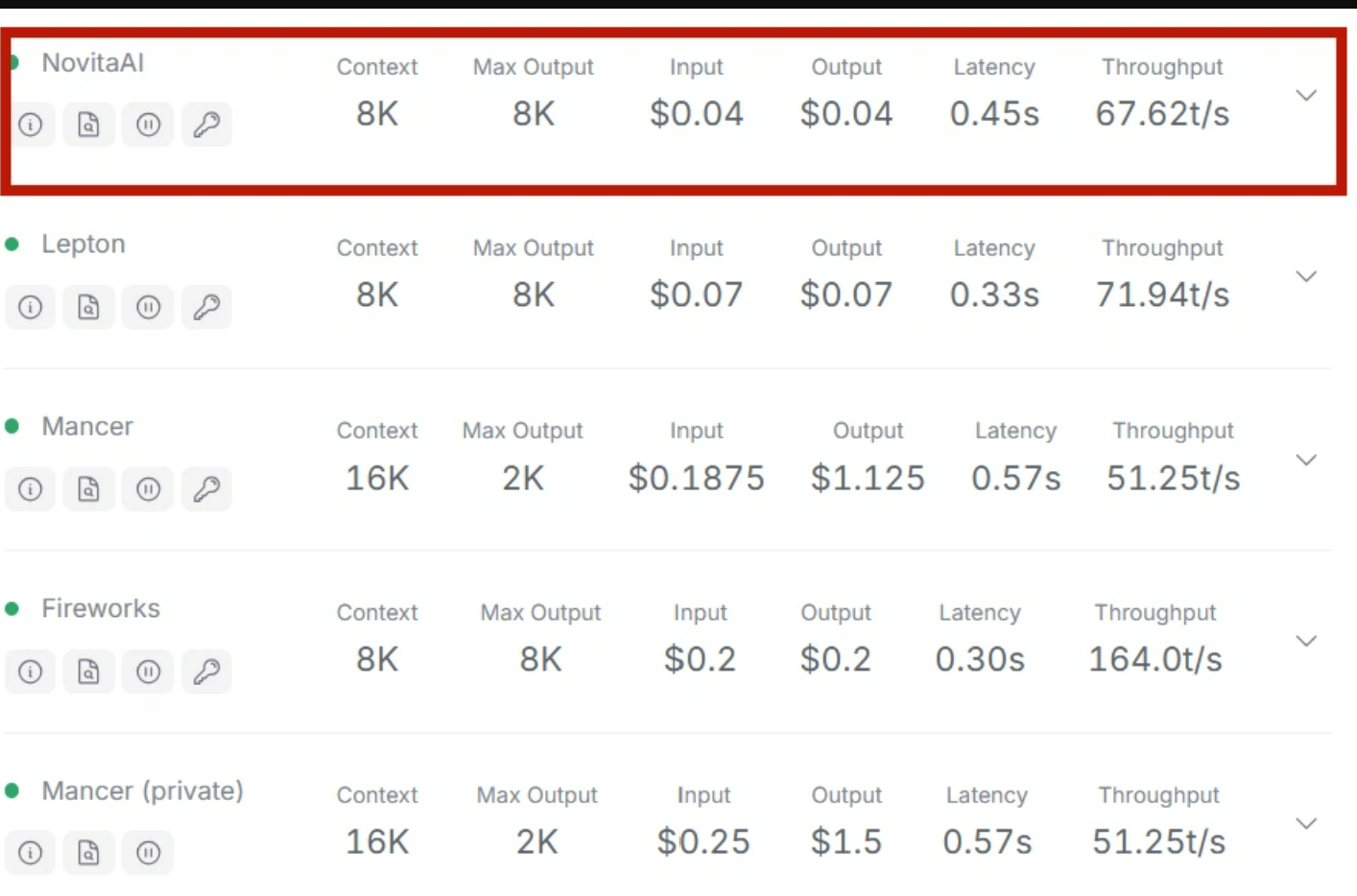

How to Choose a Suitable Llama 3.3 70B API

You can consider the following four factors when choosing an API.

Max Output (higher is better): The maximum number of tokens the model can generate in a single call. A higher value lets the model produce longer responses.

Input and Output Cost (lower is better): The price per million input and output tokens.

Latency (lower is better): The time from sending a request to receiving a response. Lower latency means faster responses and a better user experience.

Throughput (higher is better): The number of tokens generated per second. Higher throughput means long outputs finish sooner and the service can do more work per unit of time.
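These factors are easy to quantify when you compare providers. The sketch below computes per-request cost from prices quoted per million tokens, throughput from a measured generation time, and latency by timing a call. The helper names and the 10-second timing in the example are hypothetical. The $0.04 price is the one quoted at the start of this article.

```python
import time
from typing import Any, Callable, Tuple


def request_cost(input_tokens: int, output_tokens: int,
                 input_price_per_m: float, output_price_per_m: float) -> float:
    """Dollar cost of one request, given prices in dollars per million tokens."""
    return (input_tokens * input_price_per_m
            + output_tokens * output_price_per_m) / 1_000_000


def tokens_per_second(output_tokens: int, elapsed_seconds: float) -> float:
    """Throughput: generated tokens divided by wall-clock generation time."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return output_tokens / elapsed_seconds


def timed(call: Callable[[], Any]) -> Tuple[Any, float]:
    """Latency: run a call and return (result, seconds elapsed)."""
    start = time.perf_counter()
    result = call()
    return result, time.perf_counter() - start


# Example: 2,000 prompt tokens + 500 completion tokens at $0.04 per million
# for both input and output; 10.0 s is a hypothetical measured duration.
cost = request_cost(2_000, 500, 0.04, 0.04)
print(f"cost per request: ${cost:.6f}")
print(f"throughput: {tokens_per_second(500, 10.0):.1f} tokens/s")
```

In practice you would wrap a real API call in `timed` and read the token counts from the response's usage data before comparing providers.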

Recommended provider

Novita AI offers an affordable, reliable, and simple inference platform with scalable Llama 3.3 API, empowering developers to build AI applications. Try the Novita AI Llama 3.3 API Demo today!

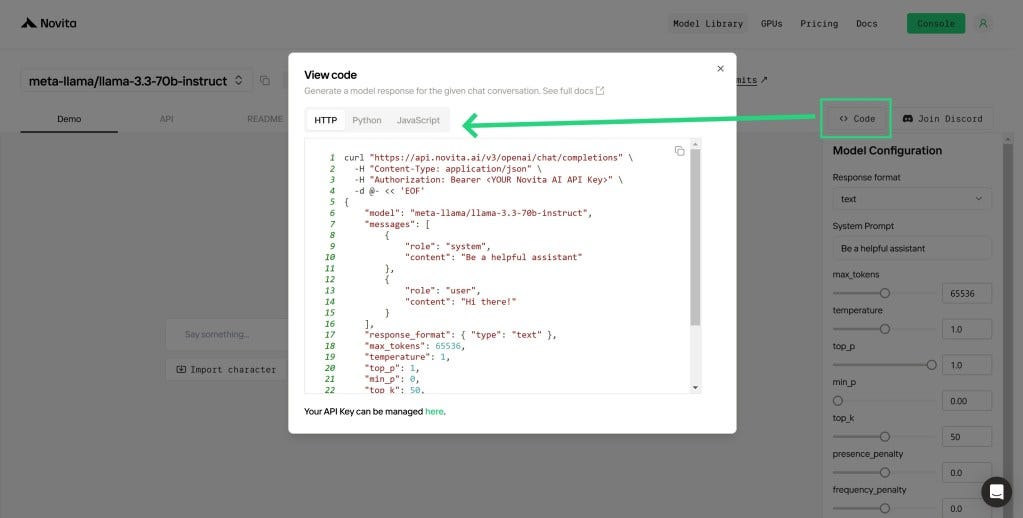

How to Access Llama 3.3 70B via API

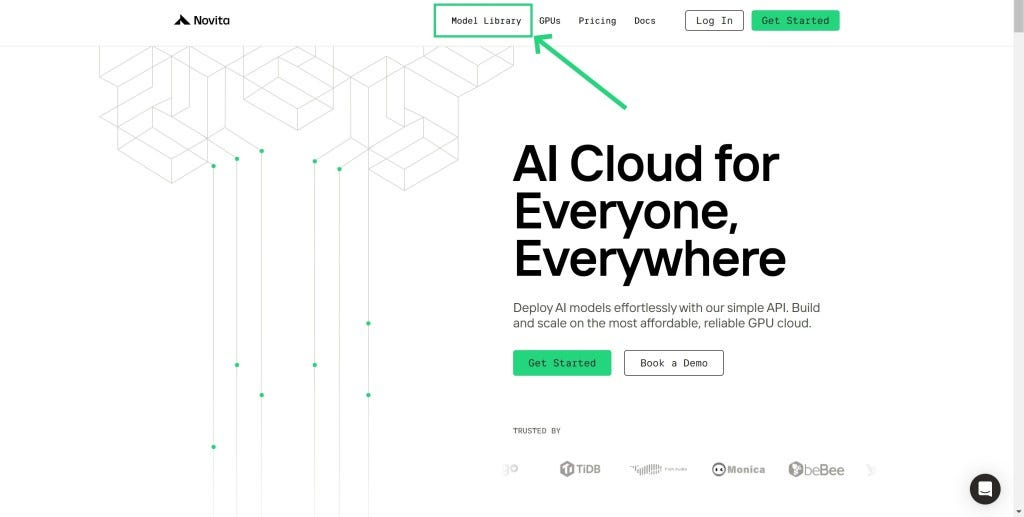

Step 1: Log In and Access the Model Library

Log in to your account and click on the Model Library button.

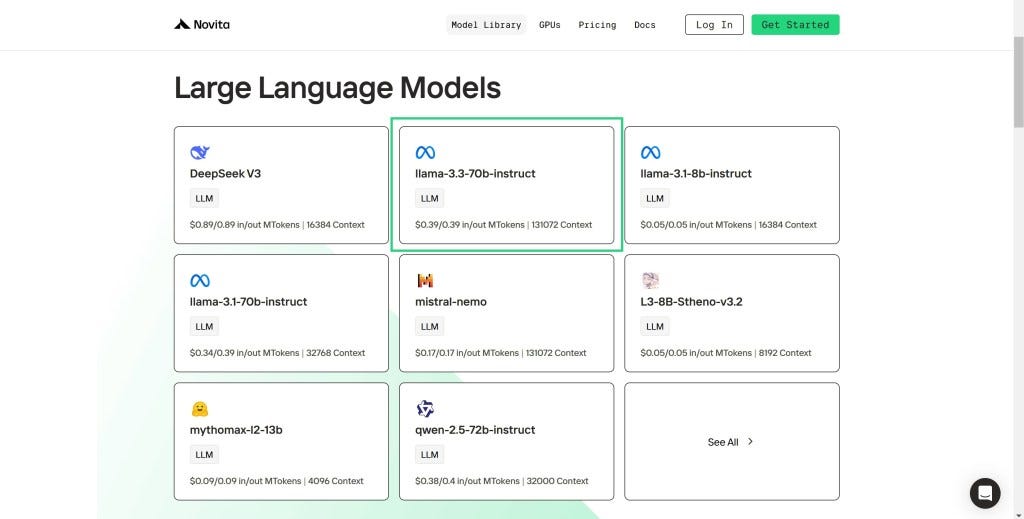

Step 2: Choose Your Model

Browse through the available options and select the model that suits your needs.

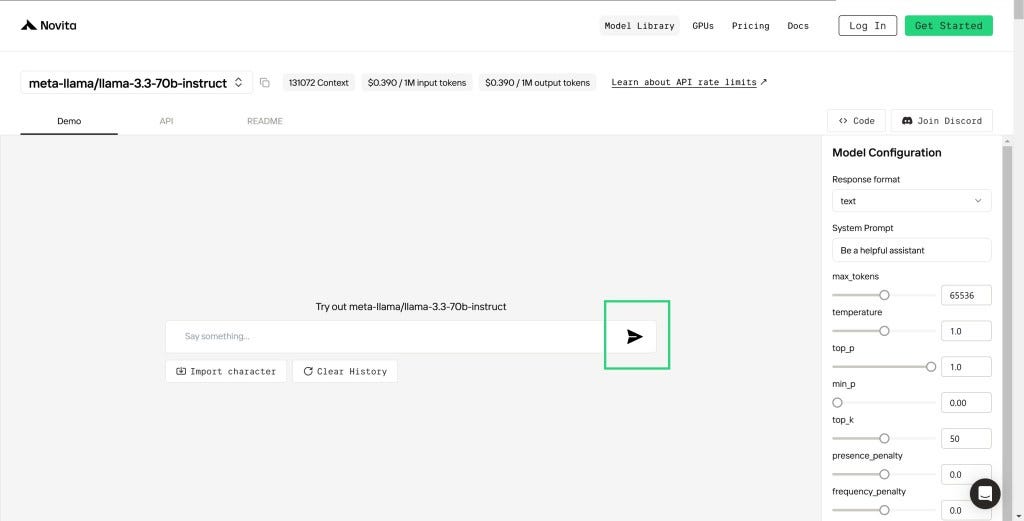

Step 3: Start Your Free Trial

Begin your free trial to explore the capabilities of the selected model.

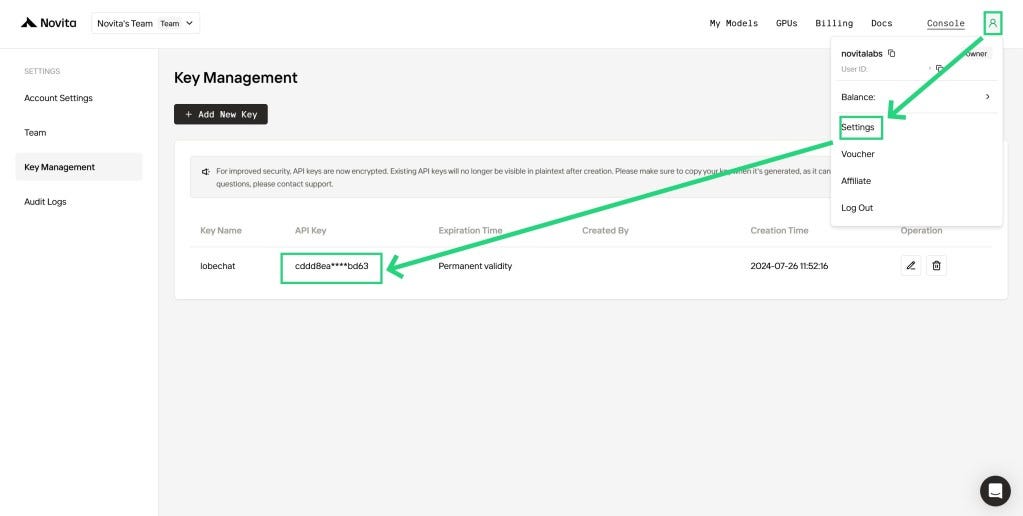

Step 4: Get Your API Key

To authenticate with the API, you need an API key. Go to the “Settings” page and copy your API key as shown in the image.
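The Python example in Step 5 uses a placeholder key. Rather than pasting a real key into source code, you could keep it in an environment variable and read it at startup. This is a minimal sketch: the variable name `NOVITA_API_KEY` and the `load_api_key` helper are hypothetical choices, not something Novita AI requires.

```python
import os


def load_api_key(var_name: str = "NOVITA_API_KEY") -> str:
    """Read the API key from an environment variable, failing loudly if unset."""
    key = os.environ.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable to your Novita AI API key."
        )
    return key


# Usage with the OpenAI client from Step 5 (not executed here):
# client = OpenAI(base_url="https://api.novita.ai/v3/openai", api_key=load_api_key())
```

Keeping the key out of the code also keeps it out of version control.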

Step 5: Install the SDK and Call the API

Install the client library using your programming language's package manager. For Python, the example below uses the OpenAI SDK (pip install openai), since Novita AI exposes an OpenAI-compatible endpoint.

After installation, import the library and initialize the client with your API key to start interacting with the Novita AI LLM API. Here is an example of the chat completions API for Python users.

from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
    api_key="<YOUR Novita AI API Key>",
)

model = "meta-llama/llama-3.3-70b-instruct"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Print each streamed fragment as it arrives, on one line.
    for chunk in chat_completion_res:
        if chunk.choices:  # skip chunks that carry no choices
            print(chunk.choices[0].delta.content or "", end="", flush=True)
    print()  # final newline after the streamed reply
else:
    print(chat_completion_res.choices[0].message.content)

Upon registration, Novita AI provides a $0.50 credit to get you started!

Once the free credit runs out, you can add funds to keep using the API.
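As a rough worked example, assume the $0.04 per million tokens price quoted at the start of this article applies to both input and output tokens. Under that assumption, the $0.50 starter credit would cover about 12.5 million tokens in total:

```latex
\frac{\$0.50}{\$0.04 / 10^{6}\ \text{tokens}}
  = \frac{0.50}{0.04} \times 10^{6}\ \text{tokens}
  = 12.5 \times 10^{6}\ \text{tokens}
```

Actual coverage depends on the provider's current pricing.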

Conclusion

The Llama 3.3 70B model is a powerful tool, accessible through an API, for applications including code generation, translation, and content creation. Novita AI offers developers and businesses an affordable way to use this model. Once you understand the model's capabilities, how the API works, and how pricing is structured, integrating it efficiently becomes straightforward.

Frequently Asked Questions

What languages does Llama 3.3 70B support?

English, German, French, Italian, Portuguese, Hindi, Spanish, and Thai.

What is the context window size of Llama 3.3 70B?

It has a context window size of 131,072 tokens.

Is it better to use an API or local deployment?

Generally speaking, using an API is more cost-effective and simpler for most use cases; however, local deployment may offer more control if resources are available.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, and GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.