Key Highlights

Does it support function calling? Yes. The Llama 3.2 3B model is a lightweight yet capable language model that supports function calling.

What can it do? Function calling lets the model interact with external tools and APIs, extending its functionality beyond basic text generation.

How to implement it? Get an API key from Novita AI (you can try the models in the “LLM Playground”), then implement function calling with the LangChain framework.

Whether the Llama 3.2 3B model can reliably handle function calling is an active topic of discussion. Developers have been sharing their experiences on forums and social media: some report success rates of around 80% on tool calls, while others note that the model sometimes misreads the context, producing inaccurate or failed function calls. In this article, we take a closer look at the function calling capabilities of Llama 3.2.

Does Llama 3.2 Support Function Calling?

Yes!

Llama 3.2 models, including the 3B variant, support function calling: the model can detect when a request requires a function and respond with JSON containing the arguments for that function. The Llama 3.2 models were fine-tuned for this capability, and the 3B variant’s lightweight design makes it particularly well suited to on-device applications that still need features like function calling.

What is Function Calling?

Function calling is a technique that enables LLMs to interact with external systems, APIs, and tools. When an LLM is given a set of functions (tools) along with descriptions of how to use them, it can choose the appropriate function for a task and supply its arguments; the application then executes the function and returns the result to the model. This extends LLMs beyond basic text generation, letting them perform actions, control devices, and query databases.
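To make “details on how to use them” concrete, here is a minimal sketch of how a single tool is commonly described to a model: a name, a natural-language description, and a JSON Schema for the parameters. The layout follows the `tools` format used by OpenAI-compatible chat APIs; the `set_temperature` function and its parameters are hypothetical, borrowed from the smart home use case listed later in this article.

```python
# A hypothetical smart-home tool described in the OpenAI-style "tools" format:
# a name, a description the model reads, and a JSON Schema for the arguments.
set_temperature_tool = {
    "type": "function",
    "function": {
        "name": "set_temperature",  # hypothetical function name
        "description": "Set the thermostat in a given room to a target temperature.",
        "parameters": {
            "type": "object",
            "properties": {
                "room": {"type": "string", "description": "Room name, e.g. 'living room'"},
                "celsius": {"type": "number", "description": "Target temperature in Celsius"},
            },
            "required": ["room", "celsius"],
        },
    },
}


def required_params(tool: dict) -> list[str]:
    """Return the argument names the model must supply for this tool."""
    return tool["function"]["parameters"].get("required", [])


print(required_params(set_temperature_tool))  # ['room', 'celsius']
```

Given a request such as “make the living room 21 degrees”, a model with function calling support would be expected to reply with the name `set_temperature` and arguments like `{"room": "living room", "celsius": 21}` instead of plain text; your application then performs the actual call.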

Supported Models for Function Calling

Many LLMs and platforms now support function calling. You can access these models through Novita AI’s API (the “LLM Playground” page lets you try them first) and implement function calling with LangChain.

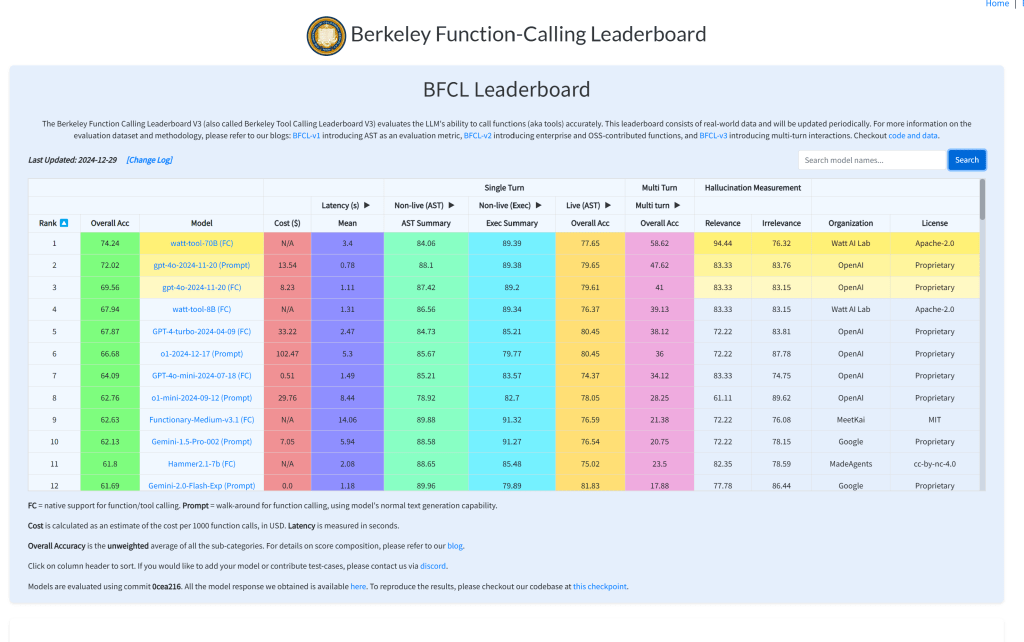

Llama 3.3: The 70-billion-parameter version has shown strong performance in function calling tests, correctly identifying when to call a function and which one to call based on user requests.

Mistral: Models like Mistral-Large-2 demonstrate success in function calling within environments like watsonx.ai.

Gemini: Google’s Gemini models also support function calling with various usage examples available.


How Does Function Calling Work?

Function calling typically involves a two-step process:

1. Mapping the user prompt to the correct function and input parameters. The LLM assesses whether any of the available tools are relevant to the user’s query and, if so, constructs a formatted request (for example, a JSON object) naming the tool and its arguments.

2. Processing the function’s output to generate a final, coherent response. The application executes the requested tool, and the tool’s output is passed back to the LLM, which integrates it into the final response.
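The two steps can be sketched in a few lines of Python. This is a minimal, self-contained illustration, not the implementation used later in this article: `fake_llm` is a hypothetical stand-in that returns canned replies instead of calling a real model, and the message roles are simplified (real chat APIs attach extra fields, such as a tool-call ID, to tool results).

```python
import json
from typing import Callable


def add(x: int, y: int) -> int:
    """Add two numbers."""
    return x + y


# Registry of functions the application is willing to execute.
TOOLS: dict[str, Callable] = {"add": add}


def fake_llm(messages: list[dict]) -> str:
    """Hypothetical stand-in for a model call with canned replies.

    If the last message is from the user, it returns a tool call as JSON;
    once a tool result is present, it writes the final answer from it.
    """
    last = messages[-1]
    if last["role"] == "user":
        # Step 1: map the prompt to a function and its arguments.
        return json.dumps({"name": "add", "arguments": {"x": 3, "y": 1132}})
    # Step 2: turn the tool output into a final answer.
    return f"3 plus 1132 is {last['content']}."


def run(query: str) -> str:
    messages = [{"role": "user", "content": query}]
    call = json.loads(fake_llm(messages))              # step 1: model picks a tool
    result = TOOLS[call["name"]](**call["arguments"])  # the application executes it
    messages.append({"role": "tool", "content": str(result)})
    return fake_llm(messages)                          # step 2: model writes the reply


print(run("what's 3 plus 1132"))  # 3 plus 1132 is 1135.
```

Replacing `fake_llm` with a real chat-completion call leaves the structure unchanged: the model proposes a call, your code executes it, and the result goes back to the model for the final answer.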

Practical Applications of Function Calling

Function calling with the Llama 3.2 3B model has numerous real-world applications:

Customer Support Chatbots: Automate responses that require calculations or information lookup.

Data Processing: Interact with backend systems to fetch or update data.

Virtual Assistants: Improve user interactions by enabling the assistant to perform operations like scheduling or calculations.

API Interactions: Convert natural language to API calls.

Database Queries: Create applications that translate natural language into valid database queries.

Smart Home Control: Control smart home devices by setting temperature or toggling lights using natural language commands.

Conversational Knowledge Retrieval Engines: Interact with knowledge bases.

E-commerce Platforms: Provide real-time product suggestions based on inventory databases, track orders, or manage customer support tickets.

Healthcare: Schedule appointments or retrieve patient information.

Travel: Retrieve flight information, book reservations, or manage bookings.

Finance: Provide up-to-date account balances and process transactions.

How to Use Llama 3.2 3B Function Calling via Novita AI

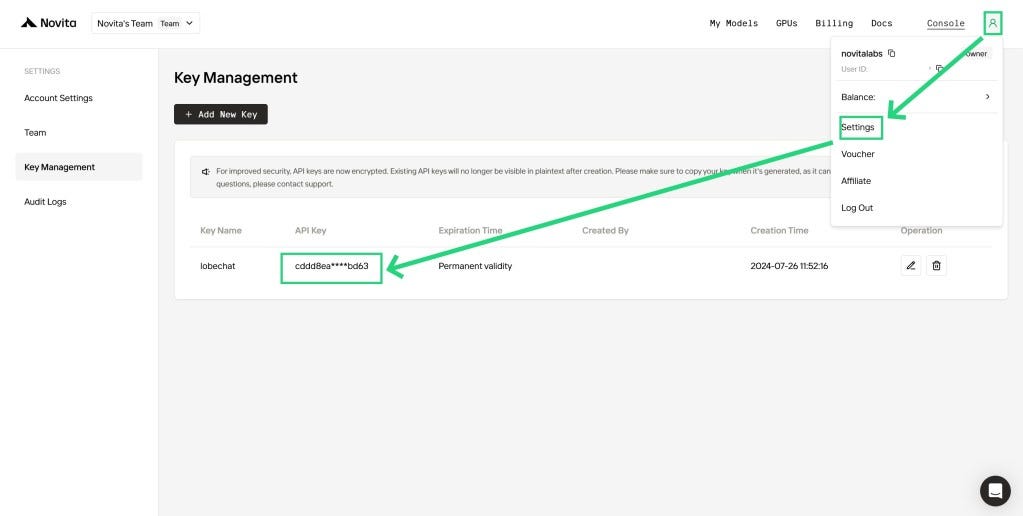

Step 1: Get an API Key

Go to the “Keys” page and copy your API key, as shown in the image.

Upon registration, Novita AI provides a $0.5 credit to get you started!

Once the free credit is used up, you can pay to continue using the service.

Step 2: Use LangChain to Implement Function Calling

We’ll create a simple math application that can perform addition and multiplication operations.

💡 While this guide uses LangChain for convenience, implementing function calling doesn’t require any specific framework. The key is in designing the right prompts to make the model understand and correctly invoke functions. LangChain is used here simply to streamline the implementation.
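To illustrate that point, here is a minimal framework-free sketch: it renders each Python function’s signature and docstring into a system prompt, then parses a JSON reply and runs the named function. The helper names (`render_tools`, `build_system_prompt`, `parse_reply`) are hypothetical, the prompt wording mirrors the one used in the LangChain example in this guide, and the actual request to the model is left out.

```python
import inspect
import json
from typing import Callable


def multiply(x: float, y: float) -> float:
    """Multiply two numbers together."""
    return x * y


def add(x: int, y: int) -> int:
    """Add two numbers."""
    return x + y


def render_tools(funcs: list[Callable]) -> str:
    """One line per tool: name, signature, and docstring."""
    return "\n".join(
        f"{f.__name__}{inspect.signature(f)} - {inspect.getdoc(f)}" for f in funcs
    )


def build_system_prompt(funcs: list[Callable]) -> str:
    """Assemble the instructions that tell the model how to request a tool."""
    return (
        "You are an assistant that has access to the following set of tools.\n"
        "Here are the names and descriptions for each tool:\n\n"
        f"{render_tools(funcs)}\n\n"
        "Given the user input, return the name and input of the tool to use.\n"
        "Return your response as a JSON blob with 'name' and 'arguments' keys."
    )


def parse_reply(reply: str, funcs: list[Callable]):
    """Parse the model's JSON reply and run the named function."""
    call = json.loads(reply)
    registry = {f.__name__: f for f in funcs}
    return registry[call["name"]](**call["arguments"])


funcs = [multiply, add]
print(build_system_prompt(funcs))
# A reply the model might send back (hand-written here, not real model output):
print(parse_reply('{"name": "multiply", "arguments": {"x": 2, "y": 3.5}}', funcs))  # 7.0
```

Note that the application, not the model, executes the function; the model only produces the JSON that names it.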

Prerequisites

First, install the required packages:

```bash
pip install langchain-openai python-dotenv
```

Setting Up the Environment

Create a .env file in your project root and add your Novita AI API key:

```
NOVITA_API_KEY=your_api_key_here
```
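Because `os.getenv` silently returns `None` when a variable is missing, it can help to fail fast before building any client. A minimal sketch, assuming the variable name above; `get_novita_api_key` is a hypothetical helper, and with python-dotenv you would call `load_dotenv()` before it so the `.env` file is read into the environment.

```python
import os


def get_novita_api_key() -> str:
    """Return the Novita AI key from the environment, or fail with a clear message.

    Assumes load_dotenv() (from python-dotenv) has already been called if the
    key lives in a .env file; this hypothetical helper only reads os.environ.
    """
    key = os.getenv("NOVITA_API_KEY", "").strip()
    if not key:
        raise RuntimeError(
            "NOVITA_API_KEY is not set. Add it to your .env file and call load_dotenv() first."
        )
    return key
```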

Implementation Steps

1. Define the Tools

First, let’s create two simple mathematical tools using LangChain’s @tool decorator:

```python
from langchain_core.tools import tool


@tool
def multiply(x: float, y: float) -> float:
    """Multiply two numbers together."""
    return x * y


@tool
def add(x: int, y: int) -> int:
    """Add two numbers."""
    return x + y


tools = [multiply, add]
```

2. Create the Tool Execution Function

Next, implement a function to execute the tools:

```python
from typing import Any, Dict, Optional, TypedDict

from langchain_core.runnables import RunnableConfig


# Shape of the JSON blob the model is asked to return.
class ToolCallRequest(TypedDict):
    name: str
    arguments: Dict[str, Any]


def invoke_tool(
    tool_call_request: ToolCallRequest,
    config: Optional[RunnableConfig] = None,
):
    """Execute the specified tool with given arguments."""
    # Map each tool's name to the tool object defined in step 1.
    tool_name_to_tool = {tool.name: tool for tool in tools}
    name = tool_call_request["name"]
    requested_tool = tool_name_to_tool[name]
    # LangChain tools accept a dict of arguments via .invoke().
    return requested_tool.invoke(tool_call_request["arguments"], config=config)
```

3. Set Up the LangChain Pipeline

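Import the LangChain components used by the chain in the next step, and load your API key from the .env file:

```python
import os

from dotenv import load_dotenv
from langchain_openai import ChatOpenAI
from langchain_core.output_parsers import JsonOutputParser
from langchain_core.prompts import ChatPromptTemplate
from langchain_core.tools import render_text_description

# Read NOVITA_API_KEY from .env into the environment so os.getenv() can find it.
load_dotenv()
```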

4. Create the Main Processing Function

Implement the main function that processes mathematical queries:

```python
def process_math_query(model_name: str, query: str):
    """Process a mathematical query by using an LLM to select the appropriate tool and execute it."""
    chain = create_chain(model_name)
    # The chain is expected to return a dict such as
    # {"name": "add", "arguments": {"x": 3, "y": 1132}}.
    message = chain.invoke({"input": query})
    result = invoke_tool(message, config=None)
    return message, result


def create_chain(model_name: str):
    """Create a chain that uses the specified LLM model to select and prepare tool calls."""
    model = ChatOpenAI(
        model=model_name,  # e.g. "meta-llama/llama-3.2-3b-instruct"
        api_key=os.getenv("NOVITA_API_KEY"),
        base_url="https://api.novita.ai/v3/openai",  # Novita AI's OpenAI-compatible endpoint
    )
    rendered_tools = render_text_description(tools)
    system_prompt = f"""\
You are an assistant that has access to the following set of tools.
Here are the names and descriptions for each tool:

{rendered_tools}

Given the user input, return the name and input of the tool to use.
Return your response as a JSON blob with 'name' and 'arguments' keys.

The `arguments` should be a dictionary, with keys corresponding
to the argument names and the values corresponding to the requested values.
"""
    prompt = ChatPromptTemplate.from_messages(
        [("system", system_prompt), ("user", "{input}")]
    )
    # Prompt -> model -> parse the model's text reply as JSON.
    return prompt | model | JsonOutputParser()
```

5. Usage Example

Here’s how to use the implementation:

```python
if __name__ == "__main__":
    message, result = process_math_query(
        "meta-llama/llama-3.2-3b-instruct",
        "what's 3 plus 1132",
    )
    print(result)  # Output: 1135
```

Common Issues and Troubleshooting

Common issues encountered during function calling include:

Incorrect Function Invocation: The model may misunderstand the context, leading to the invocation of the wrong function.

High Latency: Slow responses can occur, potentially affecting user experience.

Unrecognized Functions: The model may fail to recognize valid function names or parameters, resulting in runtime errors.

Model Hallucinations: The model might generate incorrect outputs or parameters, which can lead to unexpected behavior.

Inconsistent Parameter Passing: Parameters may be passed in unexpected formats, causing errors during function execution.

Network Issues: External API dependencies can introduce latency or failures if network connectivity is unstable.

Parsing Failures: The model’s output might not conform to expected formats (e.g., invalid JSON), leading to parsing errors.

Best Practices for Optimizing Function Calling

To optimize function calling:

To address Incorrect Function Invocation: Fine-tune the model on function calling examples relevant to your domain to improve its understanding of context and reduce errors in function invocation.

To mitigate High Latency: Optimize response times by reducing the token count in prompts or implementing asynchronous function calls for improved performance.

To resolve Unrecognized Functions: Prior to invoking functions, validate function names and parameters to ensure they are correctly recognized, thus preventing runtime errors.

To manage Model Hallucinations: Implement careful error handling strategies to deal with incorrect outputs or parameters generated by the model, ensuring fallback mechanisms are in place for unexpected results.

To ensure Consistent Parameter Passing: Establish clear guidelines for parameter formats and enforce strict validation checks to achieve consistency in how parameters are passed during function execution.

To handle Network Issues: Develop a robust error-handling strategy for network-related problems when interacting with external APIs, including retry mechanisms for transient failures.

To prevent Parsing Failures: Utilize output validation techniques to ensure that model outputs conform to expected formats (e.g., valid JSON), and implement error handling for parsing errors to maintain system stability.
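Several of these practices can be combined into one small guard around the model’s reply. The sketch below is an illustration under stated assumptions, not part of the LangChain example: `ToolCallError`, `parse_tool_call`, and `with_retries` are hypothetical names, the registry holds plain Python functions, and argument checking only verifies that the argument names fit the function signature (types are not checked).

```python
import inspect
import json
import re
import time
from typing import Any, Callable


class ToolCallError(ValueError):
    """Raised when the model's reply cannot be turned into a valid tool call."""


def parse_tool_call(reply: str, registry: dict[str, Callable]) -> tuple[Callable, dict[str, Any]]:
    # Parsing failures: strip an optional Markdown code fence, then require valid JSON.
    text = re.sub(r"^`{3}(?:json)?\s*|\s*`{3}$", "", reply.strip())
    try:
        call = json.loads(text)
    except json.JSONDecodeError as exc:
        raise ToolCallError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(call, dict) or not isinstance(call.get("arguments"), dict):
        raise ToolCallError("expected an object with 'name' and 'arguments' keys")
    # Unrecognized functions: only names present in the registry may be called.
    name = call.get("name")
    if not isinstance(name, str) or name not in registry:
        raise ToolCallError(f"unknown tool: {name!r}")
    func = registry[name]
    # Inconsistent parameters: argument names must bind to the function signature.
    try:
        inspect.signature(func).bind(**call["arguments"])
    except TypeError as exc:
        raise ToolCallError(f"bad arguments for {name}: {exc}") from exc
    return func, call["arguments"]


def with_retries(fn: Callable[[], Any], attempts: int = 3, base_delay: float = 0.5) -> Any:
    # Network issues: retry transient failures with exponential backoff.
    for attempt in range(attempts):
        try:
            return fn()
        except (ConnectionError, TimeoutError):
            if attempt == attempts - 1:
                raise
            time.sleep(base_delay * 2 ** attempt)
```

A caller would catch `ToolCallError` and, for example, re-prompt the model with the error message, which is one form of the fallback mechanism described above.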

In summary, function calling is a significant advancement in the functionality of large language models, allowing them to interact effectively with external tools and APIs. While there are common challenges such as incorrect function invocation, high latency, unrecognized functions, and model hallucinations, following the best practices above and adding robust error handling can improve its reliability and performance. As developers continue to explore and refine this feature, its applications across domains will likely expand, making Llama 3.2 a versatile tool for real-world tasks.

Frequently Asked Questions

Why is function calling crucial for AI agents?

It enables AI agents to autonomously perform tasks requiring external data or actions, enhancing efficiency in dynamic environments.

How does function calling improve LLM performance?

It enhances accuracy by allowing real-time data retrieval, task execution, and informed decision-making through external tools.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, and GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.