Introduction

Are you worried about low VRAM when running Stable Diffusion? Are you looking for ways to optimize your SD performance? Stable Diffusion has great potential for users who want to get the most out of systems with limited resources. It shows how innovation and adaptation can make a big impact. This article looks at how to run Stable Diffusion on systems with low VRAM.

Understanding Stable Diffusion and VRAM Requirements

How Stable Diffusion uses memory determines whether image generation runs smoothly or fails with out-of-memory errors. Low VRAM affects performance, including inference time and the output resolution you can reach. VRAM also limits the batch size and which models you can run.

The basics of Stable Diffusion technology

Stable Diffusion generates an image by starting from noise and refining it over a series of denoising steps. Keeping memory use under control during this process reduces the risk of out-of-memory errors, which is important for high-quality results. You can adjust the batch size to use VRAM more efficiently without losing quality. Knowing how the diffusion model and its checkpoint (.ckpt) files work is important for success. NVIDIA GPUs are widely supported for this workload, which makes them a common choice for various applications.

Why VRAM matters for performance

VRAM is important for using Stable Diffusion well. If you don't have enough VRAM, you might get out-of-memory errors, which interrupt image generation and model training. VRAM also limits how much data you can process at once. With less VRAM, you might have to wait longer for results, or you might not be able to run multiple AI tools at once. To keep everything running smoothly, either move to a GPU with more VRAM or change your settings so that each generation needs less memory.
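The link between VRAM and "how much data you can process at once" can be sketched with simple arithmetic. The function below is a minimal illustration, not a measurement tool: the model size and per-image cost are hypothetical placeholder numbers, and real usage depends on the model, resolution, and settings.

```python
import math


def max_batch_size(total_vram_gib: float, model_gib: float, per_image_gib: float) -> int:
    """Estimate the largest batch that fits once the model weights are loaded.

    All three inputs are rough figures you would measure on your own system.
    """
    if per_image_gib <= 0:
        raise ValueError("per_image_gib must be positive")
    free_gib = total_vram_gib - model_gib
    if free_gib <= 0:
        return 0  # the model alone does not fit
    return math.floor(free_gib / per_image_gib)


# Hypothetical numbers for illustration only: 2.5 GiB of weights,
# 1.5 GiB of working memory per image in the batch.
for vram in (4, 8, 24):
    batch = max_batch_size(vram, model_gib=2.5, per_image_gib=1.5)
    print(f"{vram} GiB card -> batch size up to {batch}")
```

Under these assumed figures, a small card is limited to one image at a time while a larger card can batch many, which is why batch size is usually the first setting to lower.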

Optimizing Stable Diffusion for Low VRAM Systems

To improve performance on low-VRAM systems, reduce how much VRAM each generation needs. Adjust settings for efficient image generation. Techniques like lowering the batch size and using half precision can help you avoid out-of-memory errors.

Techniques to reduce VRAM usage

You can reduce VRAM usage by adjusting the batch size or using half-precision.

Smaller batch sizes during inference can relieve memory stress. Reducing the image width and height also helps conserve VRAM, while lowering the number of sampling steps mainly shortens generation time. These changes are important for smooth, error-free performance on systems with limited VRAM.
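To see why half precision helps, here is a minimal sketch of the memory needed just to hold model weights. It assumes a round, hypothetical parameter count of one billion rather than the size of any particular checkpoint; the only firm facts are that a 32-bit float takes 4 bytes and a 16-bit float takes 2.

```python
def weights_gib(num_params: int, bytes_per_param: int) -> float:
    """Memory needed to store the weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


# Assumed round number for illustration, not a measured model size.
NUM_PARAMS = 1_000_000_000

fp32_gib = weights_gib(NUM_PARAMS, 4)  # full precision: 4 bytes per weight
fp16_gib = weights_gib(NUM_PARAMS, 2)  # half precision: 2 bytes per weight

print(f"fp32 weights: {fp32_gib:.2f} GiB")
print(f"fp16 weights: {fp16_gib:.2f} GiB")
```

Halving the bytes per weight halves the storage for weights. Activations shrink in a similar way, although the exact savings depend on which parts of the pipeline stay in full precision.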

Adjusting Stable Diffusion settings for better efficiency

In the Stable Diffusion folder there is a .bat file called webui-user.bat (the one you double-click to launch the AUTOMATIC1111 web UI).

Edit it with Notepad or any other text editor and add the following flags after COMMANDLINE_ARGS=

--xformers --listen --api --no-half-vae --medvram --opt-split-attention --always-batch-cond-uncond

The last one, --always-batch-cond-uncond, gave an amazing speed upgrade: generation went from 2–3 minutes per image to around 25 seconds.
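If you would rather script the edit than make it by hand, the following is a minimal sketch. The flag names come from the text (written with their leading double dashes); the helper name `set_commandline_args` and the sample file contents are assumptions for illustration, so check them against your own webui-user.bat before using anything like this.

```python
import re

# Flags taken from the article.
FLAGS = [
    "--xformers", "--listen", "--api", "--no-half-vae",
    "--medvram", "--opt-split-attention", "--always-batch-cond-uncond",
]


def set_commandline_args(bat_text: str, flags: list[str]) -> str:
    """Return bat_text with its 'set COMMANDLINE_ARGS=' line replaced.

    Hypothetical helper: it only rewrites the first matching line and
    leaves line endings and every other line untouched.
    """
    new_line = "set COMMANDLINE_ARGS=" + " ".join(flags)
    pattern = re.compile(r"^set COMMANDLINE_ARGS=[^\r\n]*", re.MULTILINE)
    new_text, count = pattern.subn(lambda m: new_line, bat_text, count=1)
    if count == 0:
        raise ValueError("no 'set COMMANDLINE_ARGS=' line found")
    return new_text


# Assumed sample of what a webui-user.bat might contain.
SAMPLE_BAT = (
    "@echo off\r\n\r\n"
    "set PYTHON=\r\n"
    "set COMMANDLINE_ARGS=\r\n\r\n"
    "call webui.bat\r\n"
)

print(set_commandline_args(SAMPLE_BAT, FLAGS))
```

To apply it to a real file, read the file's text, pass it through the function, and write the result back after keeping a backup copy. Adding flags one at a time makes it easier to tell which one helps on your hardware.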

Hardware Considerations and Solutions

For low-VRAM setups, consider cards from the NVIDIA GeForce GTX or AMD Radeon RX lines. External hardware such as eGPUs can also improve performance. These options help you generate images with fewer memory errors.

Recommended GPUs for low VRAM scenarios

The AMD Radeon RX 5600 XT and NVIDIA GeForce GTX 1660 Super are reasonable choices for low-VRAM scenarios. Both cards carry 6GB of VRAM and offer solid performance without the cost of a high-VRAM GPU.

The AMD Radeon RX series provides a cost-effective solution with efficient memory usage, while the NVIDIA GeForce GTX series excels in stability and performance under low VRAM conditions.

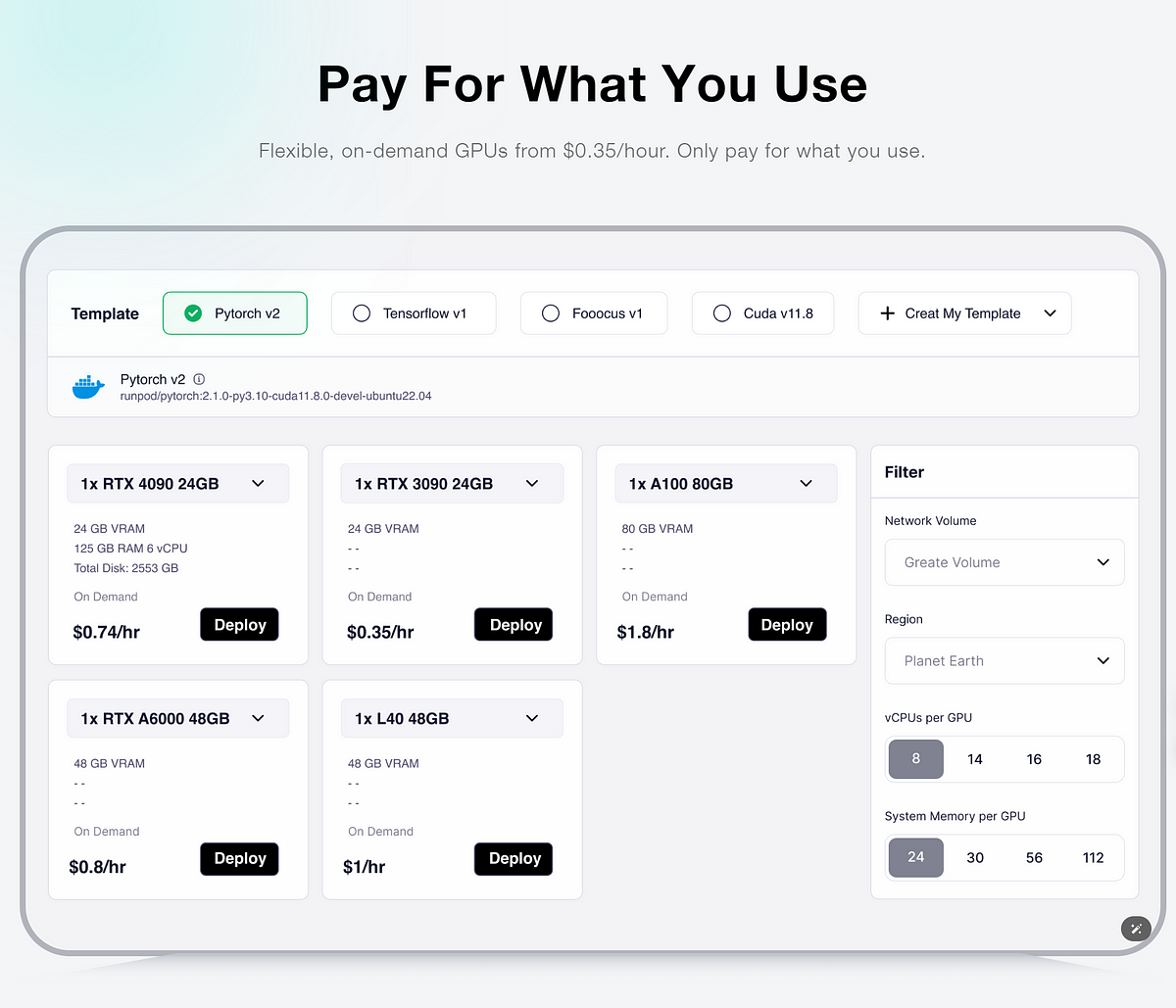

Get Supreme Experience with Novita AI GPU Pods

Don't want to worry about VRAM and GPUs anymore? Novita AI GPU Pods give you cost-effective GPU resources. Novita AI GPU Pods offers a robust pay-as-you-go platform for developers to harness the capabilities of high-performance GPUs with at least 24GB of VRAM.

By choosing Novita AI GPU Pods, developers can efficiently scale their GPU resources and focus on their core development activities without the hassle of managing physical hardware.

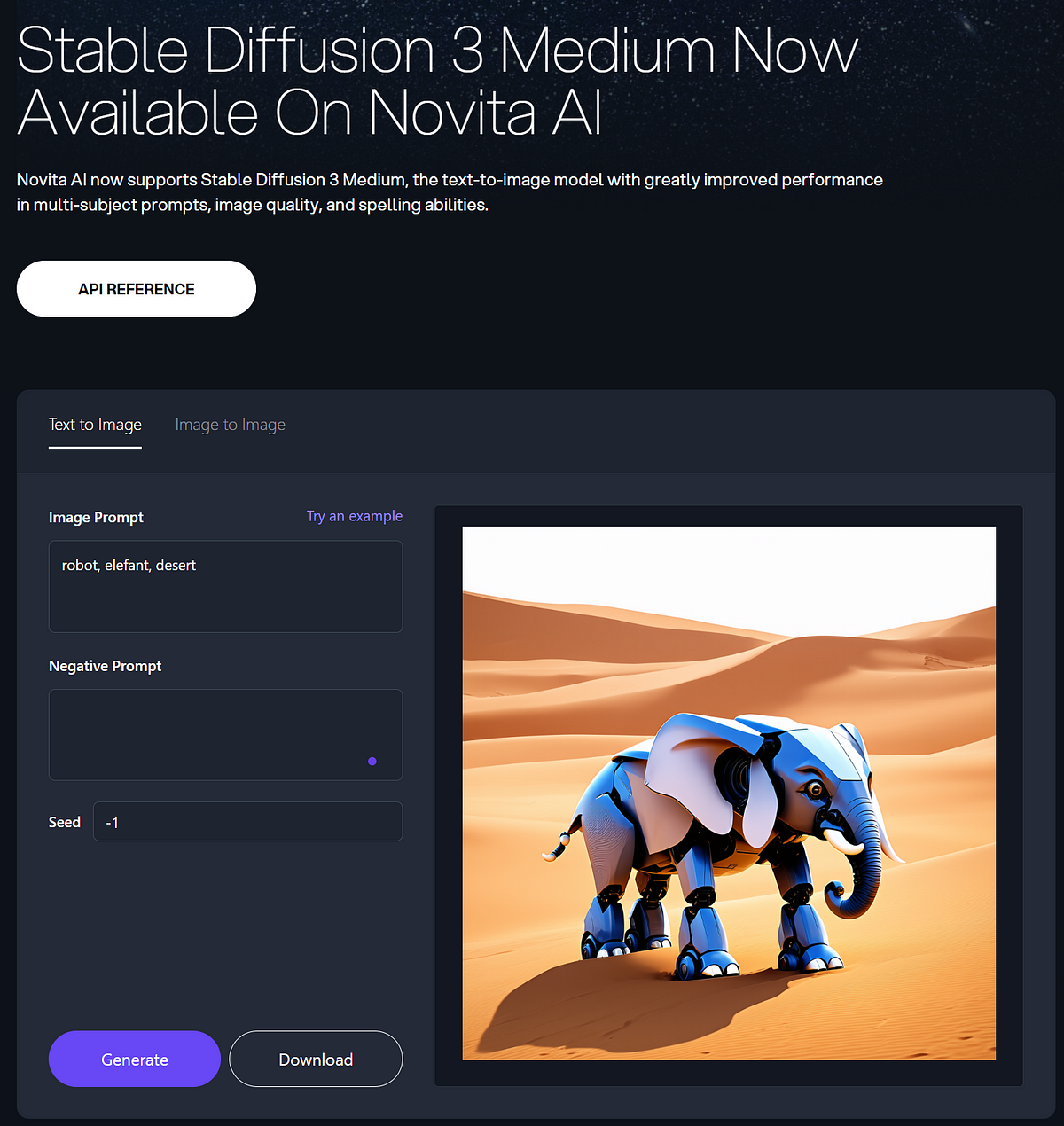

Moreover, with the Stable Diffusion API on the Novita AI Model API, you can integrate SD directly into your product. Go to the playground and join the Novita AI Community to discuss!

Conclusion

Stable Diffusion can run well on low-VRAM systems without compromising quality. By optimizing VRAM usage and adjusting settings, users can run diffusion models on limited hardware. The right GPU or external hardware can make image generation more stable and faster. A systematic approach to memory management makes working with low VRAM smoother and more productive.

Frequently Asked Questions

Can Stable Diffusion run on 4GB of VRAM?

Stable Diffusion can run on 4GB of VRAM, but with limitations. To optimize performance, reduce VRAM usage through the settings adjustments described above. For detailed instructions and recommended GPUs for low-VRAM scenarios, refer to the sections above.

Can I run Stable Diffusion without GPU?

Stable Diffusion, a powerful image generation model, is typically run on NVIDIA GPUs. If you don't have one, you can use a GPU cloud service instead of buying a graphics card.

How does Stable Diffusion impact the overall performance of a system with low VRAM?

Stable Diffusion performance on low-VRAM systems depends on several factors, such as image resolution, batch size, and model size.

Are there specific strategies or techniques that can be implemented to enhance Stable Diffusion in systems with low VRAM?

Several strategies help. (1) Use a smaller version of the model where one is available, since it needs less VRAM to load. (2) Generate images in smaller batches to save VRAM. (3) Generate images at a lower resolution to save VRAM. (4) Experiment with the model's settings to find the best combination for your system. (5) Use half or mixed precision to reduce memory consumption.

How much VRAM does Stable Diffusion need?

The larger you make your images, the more VRAM Stable Diffusion will use. Although it can run on 4GB with limitations, the minimum amount of VRAM you should consider for comfortable use is 8 gigabytes.
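As a rough illustration of how image size drives memory, the sketch below computes the shape of the latent tensor the model works on. It assumes the layout used by Stable Diffusion 1.x style models, with 4 latent channels at one eighth of the image resolution; other model families may differ, and the function names are hypothetical.

```python
import math


def latent_shape(width: int, height: int, batch: int = 1,
                 channels: int = 4, downscale: int = 8) -> tuple[int, int, int, int]:
    """Shape of the latent tensor as (batch, channels, height/8, width/8).

    The defaults are assumptions based on SD 1.x style models.
    """
    if width % downscale or height % downscale:
        raise ValueError("width and height must be multiples of the downscale factor")
    return (batch, channels, height // downscale, width // downscale)


def latent_elements(width: int, height: int, batch: int = 1) -> int:
    """Number of values in the latent tensor."""
    return math.prod(latent_shape(width, height, batch))


base = latent_elements(512, 512)
for w, h in [(512, 512), (768, 768), (1024, 1024)]:
    n = latent_elements(w, h)
    print(f"{w}x{h}: latent {latent_shape(w, h)}, {n / base:.2f}x the 512x512 size")
```

The latent itself is small. What fills VRAM is the network's intermediate activations, which scale with the same spatial dimensions, so doubling both width and height multiplies that working memory by about four or more.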

Originally published at Novita AI

Novita AI is a one-stop platform for limitless creativity that gives you access to 100+ APIs, from image generation and language processing to audio enhancement and video manipulation. Its cheap pay-as-you-go pricing frees you from GPU maintenance hassles while you build your own products. Try it for free.