Key Highlights

Model Comparison: Gemma 2 excels in multi-turn conversations and reasoning skills, while Llama 3 stands out in coding and solving math problems.

Performance Insights: Gemma 2 leads in general knowledge; Llama 3’s larger models handle complex code and math problems with ease.

Deployment Flexibility: Gemma 2 works efficiently on standard setups, whereas Llama 3’s scalability is best for high-power hardware.

Ideal Use Cases: Gemma 2 fits educational tools; Llama 3 is a go-to for software development and complex problem-solving.

Novita AI Integration: Novita AI’s API offers easy access, making it simple to explore, test, and call models like Gemma 2 and Llama 3.

Effortless Comparison: Quickly compare Gemma 2’s general knowledge with Llama 3’s coding and problem-solving strengths.

Future Potential: Both models are set to redefine standards and spark new innovations in AI.

Introduction

Ready to dive into the AI showdown between Google’s Gemma 2 and Meta’s Llama 3? Both models bring unique strengths: Gemma 2’s lightweight efficiency and strong general knowledge versus Llama 3’s power in complex tasks and customization. Let’s explore which of these open-source stars might be the perfect match for your next big project.

Exploring the Gemma 2 and Llama 3 models

Gemma 2 is Google’s family of lightweight, open models, available in 2B, 9B, and 27B parameter sizes. Built from the same research and technology as Google’s Gemini models, they deliver strong performance and versatility for their size.

Then there is Llama 3, Meta’s open-source large language model family. It launched in 8B and 70B parameter sizes, with a 405B model added in Llama 3.1, and was trained on a dataset of over 15 trillion tokens, which helps it handle complex tasks well.

Key Features of Gemma 2

Gemma 2 represents a major advancement in AI language model capabilities, equipped with features that make it versatile, powerful, and accessible across a wide range of applications. Here’s an overview of the standout features:

Multimodal Ecosystem: The core Gemma 2 models are text-only: they take text as input and generate text as output. Image understanding comes from related models built on Gemma, such as PaliGemma, rather than from Gemma 2 itself.

Enhanced Contextual Understanding: With advanced NLP and deep learning techniques, Gemma 2 excels in understanding complex queries and nuanced meanings, allowing it to produce accurate, contextually rich responses for diverse applications.

Scalability and Efficiency: Gemma 2 uses an improved architecture, including interleaved local and global attention layers and grouped-query attention, that keeps inference efficient on complex tasks. This efficiency makes it adaptable for both research and industrial-scale applications.

Enhanced Performance Across Diverse Tasks: Gemma 2 is highly effective across varied tasks, including question-answering, commonsense reasoning, and advanced problem-solving in fields like mathematics, science, and coding.

Optimized for Accessibility: Designed for efficient deployment, the 27B model can run inference at full precision on a single NVIDIA H100 or A100 80GB GPU or a single Google Cloud TPU host, making Gemma 2 accessible to organizations with diverse technical resources.

These key features make Gemma 2 an adaptable, efficient, and powerful AI model for a wide array of uses, from academic research to enterprise deployment. Its efficiency and openly available weights set it apart as a robust tool for AI-driven solutions.

Key Features of Llama 3

Llama 3 introduces innovative advancements that elevate its performance, reasoning capabilities, and usability in natural language processing tasks. Here’s a look at the features that set it apart:

State-of-the-Art Performance: Meta reports that Llama 3 outperforms many comparable open and closed models on major benchmarks covering reasoning, creative tasks, and coding.

Optimized Architecture for Efficiency: Llama 3 uses a tokenizer with a 128,000-token vocabulary, which encodes text more efficiently (fewer tokens for the same input), and supports an 8,192-token context length for longer documents.

Enhanced Reasoning and Instruction Following: Advanced training methods improve Llama 3’s ability to reason, generate code, and follow complex instructions accurately.

Open-Source Accessibility: Llama 3’s weights are freely available, and Meta released it alongside tools such as Llama Guard 2 and torchtune, fostering a strong open-source community.

Extensive Training Data for Broader Understanding: Trained on 15 trillion tokens in over 30 languages, Llama 3 handles diverse linguistic styles with ease.

These key features make Llama 3 a powerful, versatile, and open-access model, well-suited for a wide range of applications in natural language processing.
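To make the 8,192-token context length concrete: the prompt and the generated reply share the same window, so a request fits only if the prompt tokens plus the requested output tokens stay within the limit. The following is a minimal sketch, assuming you already have the prompt’s token count from the model’s tokenizer; `fits_in_context` and `max_output_budget` are hypothetical helpers, not part of any Llama 3 tooling.

```python
# Context length for Llama 3 as stated above (prompt + output share this budget).
LLAMA3_CONTEXT_TOKENS = 8192


def fits_in_context(prompt_tokens: int, max_new_tokens: int,
                    context_tokens: int = LLAMA3_CONTEXT_TOKENS) -> bool:
    """Return True if the prompt plus the requested output fit in the window."""
    if prompt_tokens < 0 or max_new_tokens < 0:
        raise ValueError("token counts must be non-negative")
    return prompt_tokens + max_new_tokens <= context_tokens


def max_output_budget(prompt_tokens: int,
                      context_tokens: int = LLAMA3_CONTEXT_TOKENS) -> int:
    """Largest number of new tokens that can still be generated after the prompt."""
    return max(context_tokens - prompt_tokens, 0)


if __name__ == "__main__":
    print(fits_in_context(7000, 1000))  # True: 8,000 <= 8,192
    print(fits_in_context(7500, 1000))  # False: 8,500 > 8,192
    print(max_output_budget(7500))      # 692
```

Because a larger vocabulary tends to encode the same text in fewer tokens, more of a long document fits inside this fixed budget.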

An in-depth comparison of Gemma 2 vs Llama 3

Choosing between Gemma 2 and Llama 3 depends on what you need and what matters most to you. Both models have their own strengths, and understanding how they differ will help you make a good choice.

Below, we compare Gemma 2 and Llama 3 on three key factors: benchmark results, deployment flexibility, and use cases. This comparison should help you decide which model best fits your AI development needs.

Benchmark Results

Benchmarking helps us objectively assess the strengths of different language models. The table shows that while Gemma 2 27B outperforms Llama 3 8B on general knowledge and reasoning benchmarks such as MMLU and ARC Challenge, Llama 3’s larger models (especially 70B, and the 405B model released with Llama 3.1) excel at specific tasks such as code generation (HumanEval) and math problem-solving (GSM8K).

These results suggest that Gemma 2 is strong in knowledge-intensive tasks, whereas Llama 3’s larger variants may be better suited for complex code and math tasks. The best choice depends on your project’s specific needs.

Deployment Flexibility

Both Gemma 2 and Llama 3 provide flexible deployment options, but they cater to different needs.

Gemma 2 is optimized for efficiency, running well on standard NVIDIA GPUs or even a single TPU host, making it ideal for setups with limited resources or smaller-scale applications.

In contrast, Llama 3 offers greater scalability, particularly in its larger models, but typically requires more robust hardware. Its open-source nature allows for extensive customization, which is ideal for organizations with larger infrastructures or specialized requirements.
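A back-of-the-envelope calculation shows why the hardware needs differ: holding the weights alone takes roughly the parameter count times the bytes per parameter (2 bytes in 16-bit formats such as bfloat16). The sketch below assumes 80 GB GPUs and ignores the KV cache, activations, and framework overhead, so real deployments need more than these lower bounds.

```python
import math

# Approximate bytes per parameter for common weight formats.
BYTES_PER_PARAM = {"bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(params_billion: float, dtype: str = "bf16") -> float:
    """Memory in GB (1e9 bytes) to hold the weights alone.

    Billions of parameters x bytes per parameter = gigabytes.
    Ignores KV cache, activations, and runtime overhead.
    """
    return params_billion * BYTES_PER_PARAM[dtype]


def min_gpus(params_billion: float, gpu_memory_gb: float = 80.0,
             dtype: str = "bf16") -> int:
    """Lower bound on the number of GPUs needed just to fit the weights."""
    return math.ceil(weight_memory_gb(params_billion, dtype) / gpu_memory_gb)


if __name__ == "__main__":
    models = {
        "Gemma 2 27B": 27,
        "Llama 3 8B": 8,
        "Llama 3 70B": 70,
        "Llama 3.1 405B": 405,
    }
    for name, size in models.items():
        gb = weight_memory_gb(size)
        print(f"{name}: ~{gb:.0f} GB in bf16, at least {min_gpus(size)} x 80 GB GPU(s)")
```

Under these assumptions, Gemma 2 27B needs about 54 GB and fits on a single 80 GB GPU, while Llama 3 70B needs about 140 GB and the Llama 3.1 405B model about 810 GB, which is why the larger Llama variants call for multi-GPU setups or quantization.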

Use Cases

When comparing the wide-ranging applications of Gemma 2 and Llama 3, both models stand out for their versatility across different fields. Gemma 2, which is openly available to researchers and developers, is particularly well suited to education, for example in personalized tutoring systems and educational tools that enhance the learning experience.

On the other hand, Llama 3 shines in coding applications, offering strong support for software development and handling complex algorithms. Additionally, both models demonstrate impressive problem-solving abilities in reasoning tasks, making them highly effective for aiding critical decision-making.


Gemma 2 vs Llama 3: Which One is Right for Your Needs?

Choosing between Gemma 2 and Llama 3 largely depends on your specific needs and use cases, as both models excel in different areas.

Gemma 2 27B is better for general knowledge and reasoning tasks. Llama 3’s larger models (70B, and the 405B model from Llama 3.1) excel in code generation and math problem-solving, making them ideal for complex tasks.

Gemma 2 runs efficiently on standard GPUs or a single TPU host, which makes it ideal for smaller-scale setups. Llama 3 requires more powerful hardware but offers better scalability and customization options for larger projects.

Gemma 2 is great for educational tools and personalized tutoring. Llama 3, especially its larger models, excels in software development, coding tasks, and solving advanced algorithms.

In short, if you’re working with general knowledge tasks or need something that runs efficiently on a smaller scale, Gemma 2 is probably your best bet. But if your project involves complex coding, solving math problems, or dealing with larger datasets, you’ll likely get better results with the bigger models of Llama 3.

Whether you need Gemma 2 or Llama 3, you can easily access their APIs on Novita AI. Let’s now explore how to call and use the Gemma 2 and Llama 3 models on Novita AI.

Calling Gemma 2 and Llama 3 on the Novita AI LLM API

With Novita’s easy-to-use API, you can concentrate on making the most of these models. There is no need to worry about setting up and managing your own AI systems.

- Step 1: Create an account or log in to Novita AI

- Step 2: Navigate to the Dashboard tab on Novita AI to access your LLM API key. If necessary, you can generate a new key.

- Step 3: Go to the Manage Keys page and click “Copy” to copy your key.

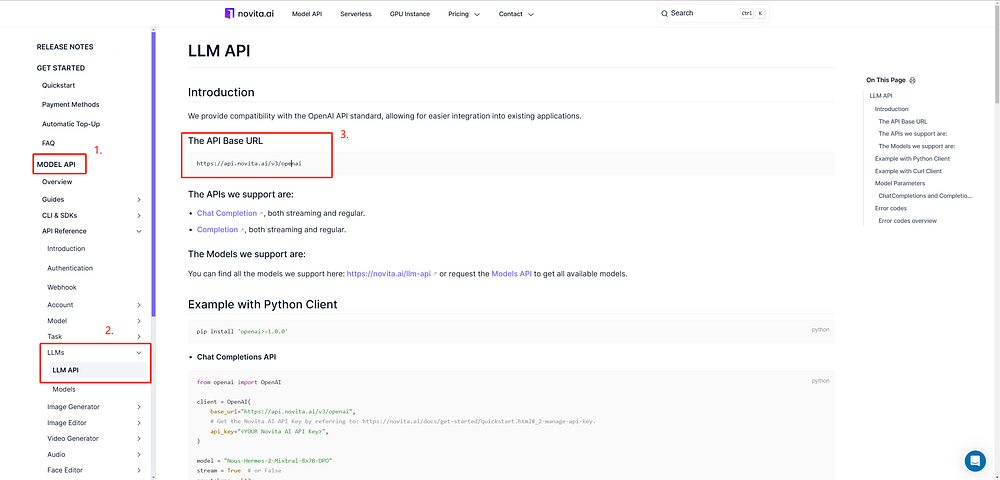

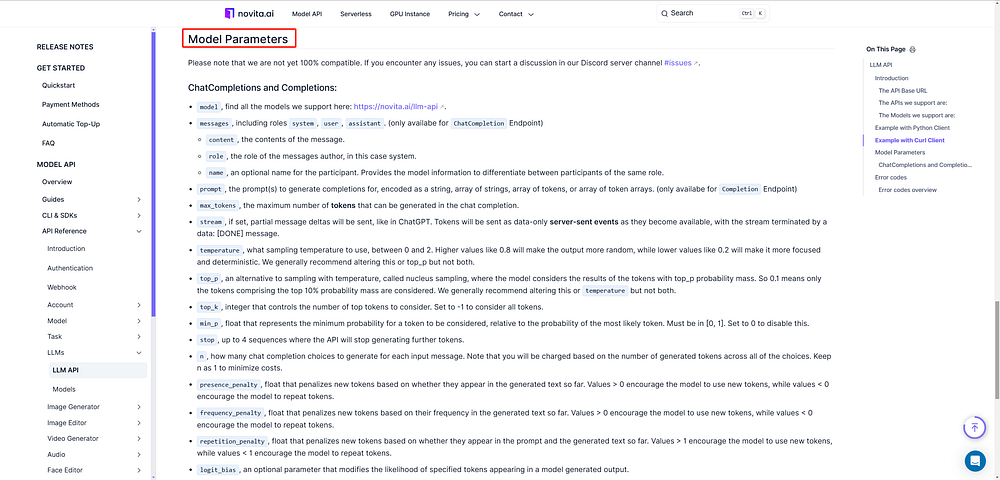

- Step 4: Access the LLM API documentation by clicking “Docs” in the navigation bar. Then, go to the “Model API” section and find the LLM API to view the API Base URL.

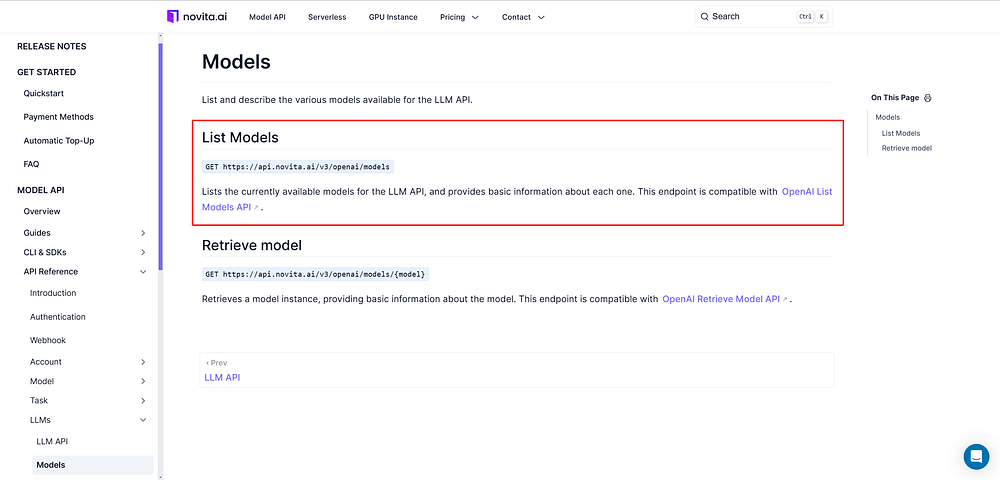

- Step 5: Choose the model that best suits your needs. In addition to Gemma 2 and Llama 3, we offer various other models, such as the Llama 3.1 API.

To view the complete list of available models, check out the Novita AI LLM Models List.

- Step 6: Configure the request parameters: once you’ve selected the model, set options such as the prompt, temperature, and maximum output tokens to suit your task.

- Step 7: Run several tests to verify the API’s reliability.
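The steps above can be sketched in code. This is a minimal sketch that assumes Novita AI’s LLM API follows the OpenAI-compatible chat-completions format; the base URL, the two model IDs, and the `NOVITA_API_KEY` environment variable name are assumptions to verify against the LLM API docs (Step 4) and the models list (Step 5). It uses only the Python standard library, builds the request with the Step 6 parameters, and retries transient failures as a simple form of the Step 7 reliability check.

```python
import json
import os
import time
import urllib.error
import urllib.request

# Assumption: OpenAI-compatible base URL; confirm the exact value in the docs (Step 4).
BASE_URL = "https://api.novita.ai/v3/openai"

# Assumption: example model IDs; check the Novita AI LLM Models List (Step 5).
GEMMA_2 = "google/gemma-2-9b-it"
LLAMA_3 = "meta-llama/llama-3-8b-instruct"


def build_payload(model: str, prompt: str,
                  temperature: float = 0.7, max_tokens: int = 512) -> dict:
    """Assemble an OpenAI-style chat completion request body (Step 6 parameters)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": temperature,
        "max_tokens": max_tokens,
    }


def chat(payload: dict, api_key: str, timeout: float = 60.0) -> str:
    """POST the payload to /chat/completions and return the reply text."""
    req = urllib.request.Request(
        f"{BASE_URL}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


def chat_with_retries(payload: dict, api_key: str, attempts: int = 3) -> str:
    """Step 7: retry transient failures with exponential backoff (1 s, 2 s, ...)."""
    for attempt in range(attempts):
        try:
            return chat(payload, api_key)
        except urllib.error.HTTPError as err:
            # Only rate limits (429) and server errors (5xx) are worth retrying.
            retryable = err.code == 429 or err.code >= 500
            if not retryable or attempt == attempts - 1:
                raise
        except (urllib.error.URLError, TimeoutError):
            # Network-level failure: retry unless this was the last attempt.
            if attempt == attempts - 1:
                raise
        time.sleep(2 ** attempt)
    raise RuntimeError("attempts must be at least 1")


if __name__ == "__main__":
    # Hypothetical variable name holding the key copied in Step 3.
    key = os.environ.get("NOVITA_API_KEY")
    if not key:
        print("Set NOVITA_API_KEY to run this example.")
    else:
        payload = build_payload(LLAMA_3, "Explain recursion in one sentence.")
        print(chat_with_retries(payload, key))
```

Swapping `LLAMA_3` for `GEMMA_2` in `build_payload` is all it takes to send the same prompt to the other model.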

A Tutorial on Using the Gemma 2 and Llama 3 Demo on Novita AI

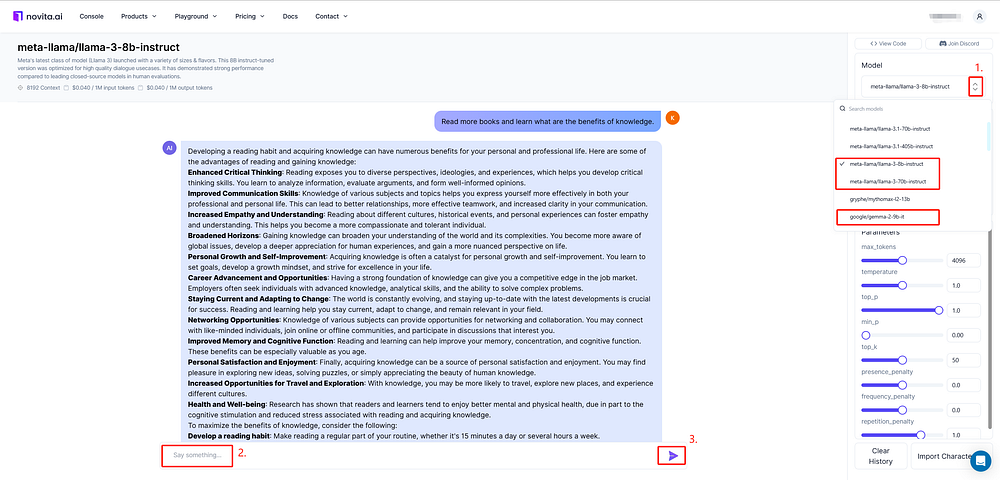

Before making API calls to Llama 3 and Gemma 2, you can test the models using Novita AI’s LLM demo. This will give you a better understanding of the differences between Llama 3 and Gemma 2.

- Step 1: Access the demo by navigating to the “Model API” tab and selecting “LLM API” to start exploring the Llama 3 and Gemma 2 models.

- Step 2: After selecting the model you want to use, enter your prompt in the specified field and receive the results.
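Once you move from the demo to the API, the same side-by-side check can be scripted: send identical prompts to both models and record each reply with its latency. This is a minimal sketch; `generate` stands for whatever function actually calls the API (hypothetical here, with an offline stand-in so the script runs), and the model IDs are placeholders to check against the Novita AI models list.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List

# Backend function: (model_id, prompt) -> reply text. Hypothetical; plug in your API call.
Generate = Callable[[str, str], str]


@dataclass
class Result:
    model: str
    prompt: str
    reply: str
    seconds: float


def compare(models: List[str], prompts: List[str], generate: Generate) -> List[Result]:
    """Run every prompt through every model and time each call."""
    results = []
    for prompt in prompts:
        for model in models:
            start = time.perf_counter()
            reply = generate(model, prompt)
            results.append(Result(model, prompt, reply, time.perf_counter() - start))
    return results


def by_model(results: List[Result]) -> Dict[str, List[str]]:
    """Group replies per model so they can be read side by side."""
    grouped: Dict[str, List[str]] = {}
    for r in results:
        grouped.setdefault(r.model, []).append(r.reply)
    return grouped


if __name__ == "__main__":
    # Offline stand-in backend so the sketch runs; replace with a real API call.
    def fake_generate(model: str, prompt: str) -> str:
        return f"[{model}] reply to: {prompt}"

    models = ["google/gemma-2-9b-it", "meta-llama/llama-3-8b-instruct"]  # assumed IDs
    prompts = ["What is 17 * 23?", "Write a Python one-liner to reverse a list."]
    for r in compare(models, prompts, fake_generate):
        print(f"{r.model} ({r.seconds * 1000:.1f} ms): {r.reply}")
```

Keeping the backend as an injected function means the comparison logic stays the same whether you call Novita AI, another provider, or a local model.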


Ready to unlock the potential of Llama 3 and Gemma 2? Begin using Novita AI LLM APIs today to supercharge your AI projects with powerful, efficient, and customizable language models. Start building now!

Future Prospects

As we look to the future of AI innovation, both Gemma 2 and Llama 3 hold immense potential. Meta’s release of Llama 3 models marks a new era in open-access AI, sparking creativity and driving progress across the industry. Meanwhile, Google’s ongoing advancements in Gemma models suggest future releases that could set new performance benchmarks and expand the horizons of AI capabilities.

Conclusion

Whether you’re looking to power educational tools or dive into complex code, there’s a model here for you. With Novita AI’s API, you’re just a few clicks away from bringing these AI giants into action. The future looks bright — and it’s full of Gemma 2 and Llama 3!

Frequently Asked Questions

Is Llama 3.2 3B better than Gemma 2B?

Comparing Llama 3.2 3B and Gemma 2B is difficult because each has its own strengths and weaknesses. Benchmark results help, but the best choice depends on your specific needs, such as context window size and required capabilities.

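What is the difference between Llama 2 and Llama 3?

They differ in training data, architecture details, and capabilities. Llama 3 was trained on about seven times more data than Llama 2 (over 15 trillion tokens versus 2 trillion), uses a larger 128K-token vocabulary, doubles the context length from 4,096 to 8,192 tokens, and performs better across a wide range of tasks.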

How good is Gemma 2 27B?

Gemma 2 27B delivers high performance despite its relatively compact size. Its design and training lead to strong benchmark scores on tasks such as text generation, summarization, and code generation.

Is Llama 3.1 better than Llama 3?

Llama 3.1 outperforms Llama 3 on key benchmarks such as MMLU, where it scores 86 compared with Llama 3’s 82, reflecting improved performance across STEM and humanities subjects.

Is Llama 3 the best open-source model?

Llama 3’s title as the “best” open-source AI model is subjective and based on usage and metrics. Consider other leading open-source models, each with unique strengths.

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.