Key Highlights

We reviewed the latest benchmarks, compared input and output token costs, assessed latency and throughput, and offer guidance on choosing the right model for your needs. The main findings:

General Knowledge Understanding: Llama 3.3 70b scores higher on MMLU.

Coding: Llama 3.3 70b scores higher on HumanEval.

Math Problems: Llama 3.3 70b scores higher on MATH.

Multilingual Support: Llama 3.3 70b performs better with more supported languages.

Price & Speed: Llama 3.1 70b has lower API costs and lighter hardware requirements.

If you want to evaluate Llama 3.3 70b or Llama 3.1 70b on your own use cases, Novita AI offers a free trial.

Llama 3.3 70b and Llama 3.1 70b, developed by Meta, are large language models with significant differences. Let’s compare their performance, resource efficiency, applications, and how to choose and access them.

Basic Introduction of the Model Families

To begin our comparison, let's first look at the fundamental characteristics of each model family.

Llama 3.1 Model Family Characteristics

Release Date: July 2024

Model Scale:

meta-llama/llama-3.1-8b-instruct

meta-llama/llama-3.1-70b-instruct

Key Features:

Context Window Expansion to 128k tokens.

Multilingual Capability Enhancement

Resource Efficiency

Llama 3.3 Model Family Characteristics

Release Date: December 2024

Model Scale:

meta-llama/llama-3.3-70b-instruct

Key Innovations:

Optimized transformer architecture

Trained using supervised fine-tuning (SFT) and reinforcement learning with human feedback (RLHF)

Incorporates 15 trillion tokens of publicly available data in its training.

Uses grouped-query attention (GQA) to improve inference scalability.

Supports eight core languages with a focus on quality over quantity.
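To get a feel for why grouped-query attention helps inference scale, the rough arithmetic below estimates the key/value cache size for a single 128k-token sequence. The layer and head counts are assumptions based on the publicly released 70B configuration (80 layers, 64 query heads, 8 key/value heads, head dimension 128), and the function name is illustrative. Treat the result as a back-of-the-envelope figure, not a measurement.

```python
def kv_cache_bytes(seq_len, n_layers, n_kv_heads, head_dim, bytes_per_value=2):
    """Approximate KV-cache size in bytes for one sequence.

    Each layer stores one key and one value vector per KV head per token,
    hence the leading factor of 2. bytes_per_value=2 assumes fp16/bf16.
    """
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value


GIB = 1024 ** 3
SEQ_LEN = 128 * 1024  # 128k-token context

# Assumed 70B configuration: 80 layers, head dimension 128, 8 KV heads.
gqa = kv_cache_bytes(SEQ_LEN, n_layers=80, n_kv_heads=8, head_dim=128)

# Hypothetical baseline in which each of the 64 query heads keeps its own K/V.
mha = kv_cache_bytes(SEQ_LEN, n_layers=80, n_kv_heads=64, head_dim=128)

print(f"GQA (8 KV heads):  {gqa / GIB:.0f} GiB")   # 40 GiB
print(f"MHA (64 KV heads): {mha / GIB:.0f} GiB")   # 320 GiB
```

Under these assumptions the cache shrinks by the ratio of query heads to KV heads (8x here), which is what leaves room for longer contexts or more concurrent requests on the same hardware.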

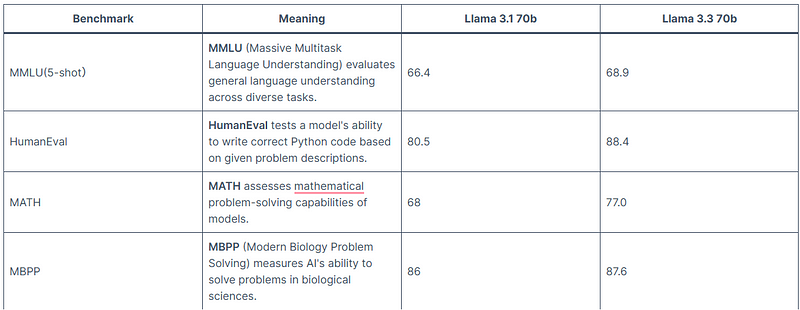

Performance Comparison

Now that we’ve established the basic characteristics of each model, let’s delve into their performance across various benchmarks. This comparison will help illustrate their strengths in different areas.

As the benchmark comparison shows, Llama 3.3 70b leads across all of these dimensions.

To learn more about Llama 3.3 benchmarks, see this article: Llama 3.3 Benchmark: Key Advantages and Application Insights.

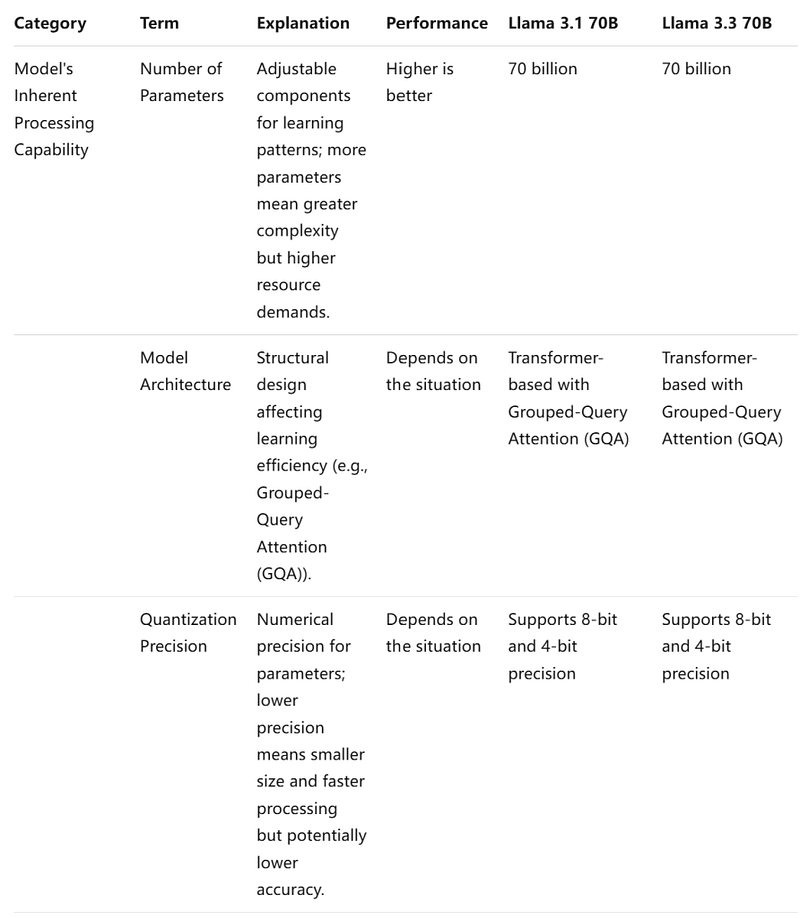

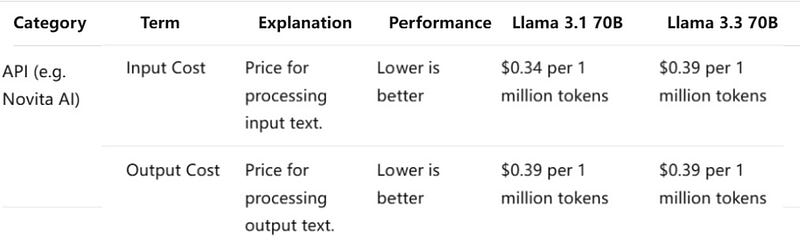

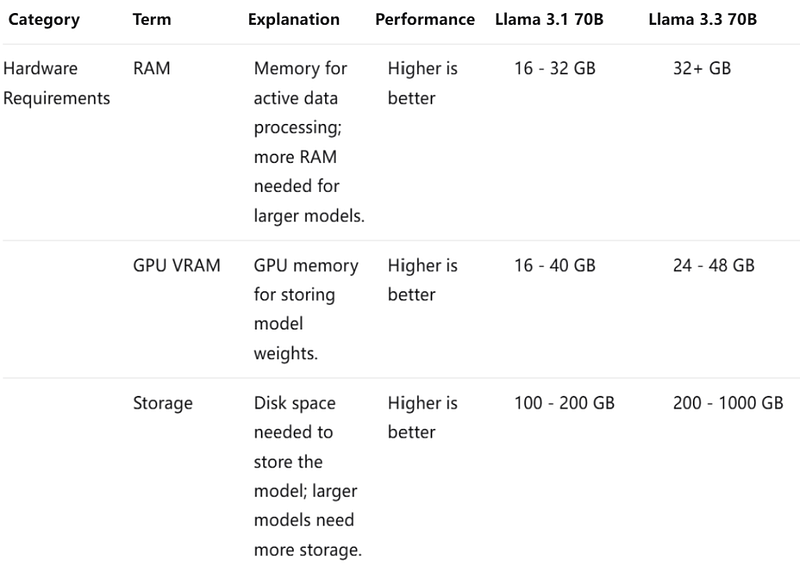

Resource Efficiency

When evaluating the efficiency of a Large Language Model (LLM), it’s crucial to consider three key categories: the model’s inherent processing capabilities, API performance, and hardware requirements.

Llama 3.1 70b has the edge in API performance and costs less, making it more efficient for quick, high-volume text generation. If you want to try either model, Novita AI provides a $0.5 credit to get you started!
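Cost and throughput comparisons of this kind come down to simple arithmetic once you have per-token prices and timing data. The sketch below shows that arithmetic; the function names are illustrative and the prices in the usage lines are placeholders, not Novita AI's actual rates, so substitute the figures from the current pricing page.

```python
def estimate_cost_usd(input_tokens, output_tokens,
                      input_price_per_m, output_price_per_m):
    """Cost of a workload, given prices in USD per million tokens."""
    total = input_tokens * input_price_per_m + output_tokens * output_price_per_m
    return total / 1_000_000


def tokens_per_second(output_tokens, elapsed_seconds):
    """Output throughput for a single request."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return output_tokens / elapsed_seconds


# Placeholder prices (USD per million tokens), NOT real pricing.
PLACEHOLDER_INPUT_PRICE = 0.50
PLACEHOLDER_OUTPUT_PRICE = 1.00

cost = estimate_cost_usd(1_000_000, 500_000,
                         PLACEHOLDER_INPUT_PRICE, PLACEHOLDER_OUTPUT_PRICE)
speed = tokens_per_second(output_tokens=512, elapsed_seconds=4.0)

print(f"Estimated cost: ${cost:.2f}")
print(f"Throughput: {speed:.0f} tokens/s")
```

Running the same calculation with each model's real prices and your own measured timings gives a like-for-like comparison for your workload.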

Applications and Use Cases

Both models are suitable for similar applications, including:

Multilingual chat

Coding assistance

Synthetic data generation

Text summarization

Content creation

Localization

Knowledge-based tasks

Tool use

Llama 3.3 70b may perform better in these applications, especially in multilingual dialogue scenarios, due to its optimizations.

Accessibility and Deployment through Novita AI

Novita AI offers an affordable, reliable, and simple inference platform with scalable Llama 3.3 70b and Llama 3.1 70b APIs, empowering developers to build AI applications.

Step 1: Log in and start your free trial!

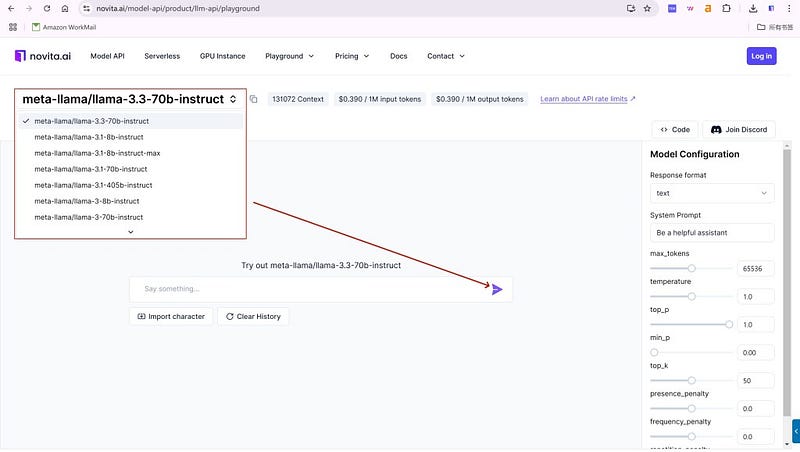

Open the LLM Playground page on Novita AI to start a free trial. This test page is provided specifically for developers. Select the model you want from the list; both Llama 3.3 70b and Llama 3.1 70b are available.

Step 2: If the trial goes well, you can start calling the API!

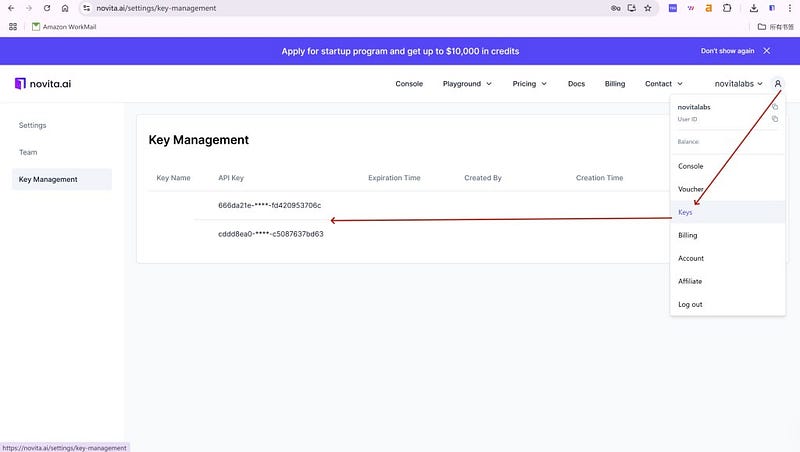

Click “API Key” in the menu. To authenticate with the API, you need an API key, which Novita AI provides. On the “Keys” page, copy the API key as shown in the image.

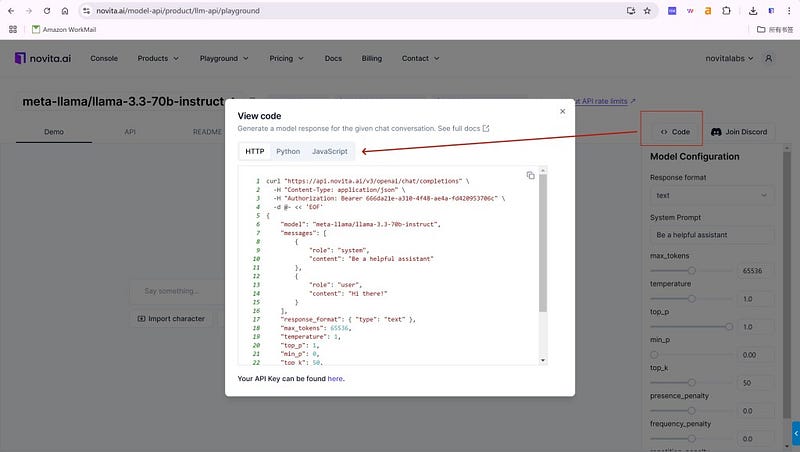

Navigate to the API section and find “LLM” under the “Playground” tab. Install the Novita AI API client using the package manager for your programming language.
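The Python example in this guide uses the `openai` package, which is typically installed with `pip install openai`. Rather than pasting the key into source code, one common approach is to read it from an environment variable. The sketch below assumes a variable named `NOVITA_API_KEY`; that name and the helper function are illustrative choices, not something the platform requires.

```python
import os


def load_api_key(env_var="NOVITA_API_KEY"):
    """Return the API key stored in an environment variable.

    The variable name is an assumption made for this example.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Novita AI API key."
        )
    return key


# Usage, once the variable is set in your shell:
#   client = OpenAI(base_url="https://api.novita.ai/v3/openai",
#                   api_key=load_api_key())
```

Keeping the key out of the code makes it harder to commit it to version control by accident.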

Step 3: Begin interacting with the model!

After installation, import the necessary libraries into your development environment and initialize the client with your API key to start interacting with the Novita AI LLM API. The following example uses the chat completions API.

from openai import OpenAI

# Point the OpenAI client at Novita AI's OpenAI-compatible endpoint.
client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key
    api_key="<YOUR Novita AI API Key>",
)

model = "meta-llama/llama-3.3-70b-instruct"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Print each streamed fragment as it arrives, without inserting newlines.
    for chunk in chat_completion_res:
        print(chunk.choices[0].delta.content or "", end="")
else:
    print(chat_completion_res.choices[0].message.content)

Upon registration, Novita AI provides a $0.5 credit to get you started!

If the free credit is used up, you can pay to continue using the service.

Conclusion

In conclusion, the choice between Llama 3.1 70B and Llama 3.3 70B depends on the specific requirements of your application and the available hardware resources. Llama 3.1 70B excels in terms of cost and latency, making it well-suited for applications that demand quick responses and cost efficiency. On the other hand, Llama 3.3 70B shines in maximum output and throughput, making it ideal for applications that require the generation of long texts and high throughput, albeit with higher hardware demands. Therefore, it is crucial to weigh these factors carefully to select the model that best fits your needs.

Frequently Asked Questions

Is Llama 3.1 restricted?

Using Llama outputs to train or improve other models is allowed under the Llama 3.1, Llama 3.2, and Llama 3.3 licenses, provided you include the correct attribution to Llama. See the license for more information.

Is Llama 3.1 better than GPT-4?

Chatbots: Since Llama 3 has a deep language understanding, you can use it to automate customer service.

Even for problem-solving tasks, Llama 3's responses and corrected output were accurate compared to GPT-4. Llama 3 and GPT-4 are both powerful tools for coding and problem-solving, but they cater to different needs. If you prioritize accuracy and efficiency in coding tasks, Llama 3 might be the better choice.

How is Llama 3.1 different from Llama 3?

Model Recommendations: Llama 3.1 70B is ideal for long-form content and complex document analysis, while Llama 3 70B is better for real-time interactions. LLM API Flexibility: The LLM API allows developers to seamlessly switch between models, facilitating direct comparisons and maximizing each model’s strengths.
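Switching models for a direct comparison can be as small as changing the `model` string in an otherwise identical request. The sketch below illustrates this with the two 70B models discussed in this article. `build_request` and `compare_models` are hypothetical helpers, the Llama 3.1 model ID is assumed to follow the same pattern as the Llama 3.3 ID used earlier, and the completion function is passed in so the demo runs with a stub rather than a live API call.

```python
MODELS = [
    "meta-llama/llama-3.1-70b-instruct",  # assumed ID, mirrors the 3.3 pattern
    "meta-llama/llama-3.3-70b-instruct",
]


def build_request(model, prompt, max_tokens=512):
    """Build identical chat-completion arguments, varying only the model."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }


def compare_models(prompt, complete, models=MODELS):
    """Send the same prompt to each model and return {model: reply}."""
    return {model: complete(**build_request(model, prompt)) for model in models}


def stub_complete(**kwargs):
    # Stand-in for a real API call so the example runs offline.
    return f"(reply from {kwargs['model']})"


for name, reply in compare_models("Summarize this paragraph.", stub_complete).items():
    print(name, "->", reply)

# With a real client, the completion function could be:
#   lambda **kw: client.chat.completions.create(**kw).choices[0].message.content
```

Because only the model name varies, any difference in the replies can be attributed to the model rather than to the prompt or settings.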

originally from Novita AI

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, and GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.