Key Highlights

Llama 3.1 8B is a more general-purpose model with enhanced reasoning and general knowledge capabilities, suitable for a broader range of applications.

Llama 3.2 3B is optimized for on-device applications, excelling in tasks like summarization, instruction following, and rewriting while prioritizing privacy through local data processing.

To evaluate these models on your own use cases, you can register with Novita AI, which provides a $0.5 credit to get you started.

The Llama series of language models, developed by Meta, has introduced several notable iterations. This article provides a detailed comparison of two significant models: Llama 3.2 3B and Llama 3.1 8B. We’ll explore their technical specifications, performance benchmarks, and practical applications to help developers and researchers make informed decisions based on their specific needs.

Basic Introduction of the Models

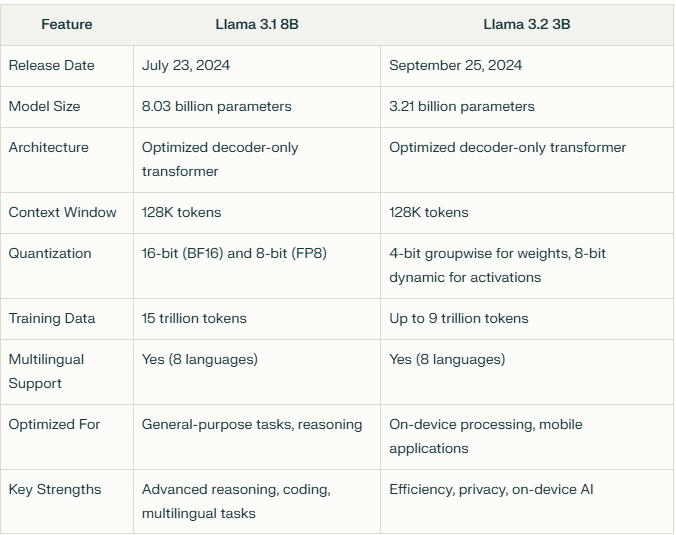

To begin our comparison, we first review the fundamental characteristics of each model.

Llama 3.1 8B

Release Date: July 23, 2024

Key Features:

8.03 billion parameters

General-purpose text-only, auto-regressive language model

Supports 16-bit (BF16) and 8-bit (FP8) quantization

Multilingual support for 8 languages

Excels in advanced reasoning, coding, and general knowledge tasks

Llama 3.2 3B

Release Date: September 25, 2024

Key Features:

3.21 billion parameters

Lightweight text-only model optimized for on-device processing

Designed for mobile devices and edge computing

Multilingual support for 8 languages

Excels in tasks like summarization, instruction following, and rewriting
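The parameter counts and precisions listed above give a rough sense of how much memory the weights alone require. The sketch below is back-of-the-envelope arithmetic, not a measurement. It assumes 2 bytes per parameter for BF16 and 1 byte for FP8, and it assumes Llama 3.2 3B can be stored in the same formats (the lists above mention BF16 and FP8 only for Llama 3.1 8B). It ignores activations, the KV cache, and runtime overhead, so real memory use will be higher.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Parameter counts come from the feature lists above; the byte widths are
# the standard sizes of BF16 (2 bytes) and FP8 (1 byte).
PARAMS = {
    "Llama 3.1 8B": 8.03e9,
    "Llama 3.2 3B": 3.21e9,
}
BYTES_PER_PARAM = {"BF16": 2, "FP8": 1}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return the approximate size of the weights in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


for name, n in PARAMS.items():
    for precision in BYTES_PER_PARAM:
        print(f"{name} @ {precision}: ~{weight_memory_gb(n, precision):.2f} GB")
```

Under these assumptions the 8B weights need roughly 16 GB in BF16, compared with roughly 6.4 GB for the 3B model, which illustrates why the smaller model is the one aimed at mobile and edge devices.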

Model Comparison

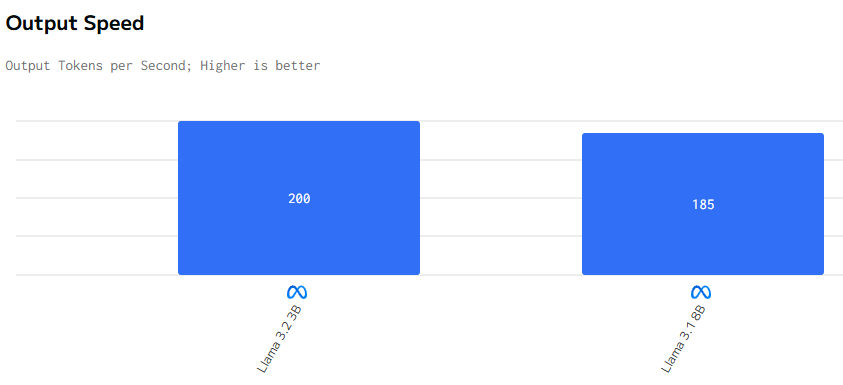

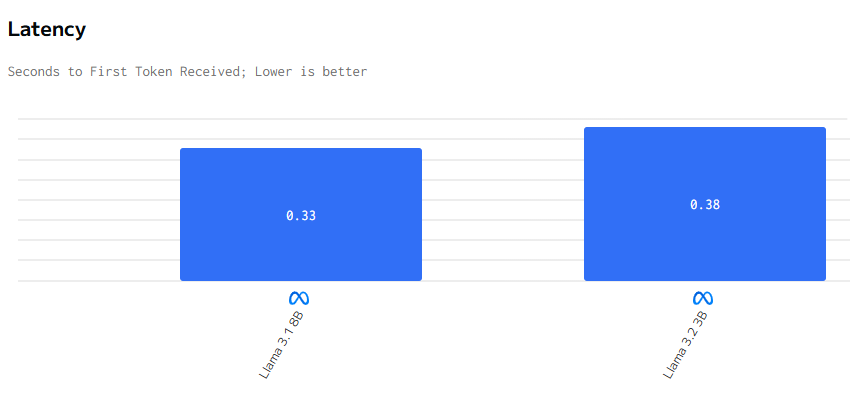

Speed Comparison

If you want to test it yourself, you can start a free trial on the Novita AI website.

Llama 3.2 3B is faster than Llama 3.1 8B on all three measures: it has a lower total response time, lower latency, and a higher output speed.

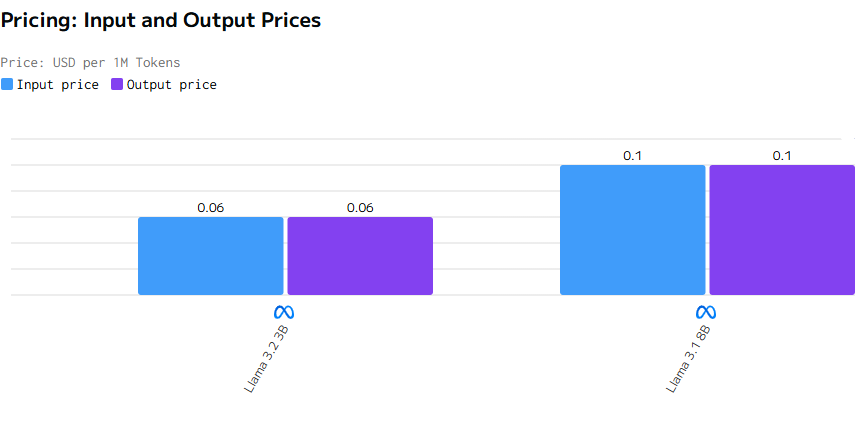
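The three metrics named above can be measured on any streamed response. The sketch below is a minimal, provider-agnostic timing helper. It assumes that latency means the time until the first chunk arrives and that output speed means chunks per second after the first chunk, which may differ from the definitions behind the chart above. `measure_stream` and `fake_stream` are hypothetical names used only for illustration.

```python
import time
from typing import Iterable, Iterator


def measure_stream(chunks: Iterable[str]) -> dict:
    """Time a stream of text chunks.

    Returns the latency (seconds until the first chunk), the total response
    time, the chunk count, and the output speed (chunks per second after
    the first chunk arrived).
    """
    start = time.perf_counter()
    first = None
    count = 0
    for _ in chunks:
        if first is None:
            first = time.perf_counter()
        count += 1
    end = time.perf_counter()
    if first is None:
        raise ValueError("stream produced no chunks")
    generation_time = end - first
    if count > 1 and generation_time > 0:
        speed = (count - 1) / generation_time
    else:
        speed = 0.0
    return {
        "latency_s": first - start,
        "total_s": end - start,
        "chunks": count,
        "chunks_per_s": speed,
    }


def fake_stream(n: int, delay: float) -> Iterator[str]:
    """Stand-in for a model stream: yields n chunks, sleeping before each."""
    for i in range(n):
        time.sleep(delay)
        yield f"tok{i} "


stats = measure_stream(fake_stream(5, 0.01))
print(stats)
```

To time a real model, pass a generator that yields the text of each streamed chunk instead of `fake_stream`. Note that a chunk is not necessarily one token, so the reported rate is only a proxy for tokens per second.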

Cost Comparison

Llama 3.2 3B is significantly cheaper than Llama 3.1 8B: its input and output prices per 1M tokens are half those of Llama 3.1 8B.

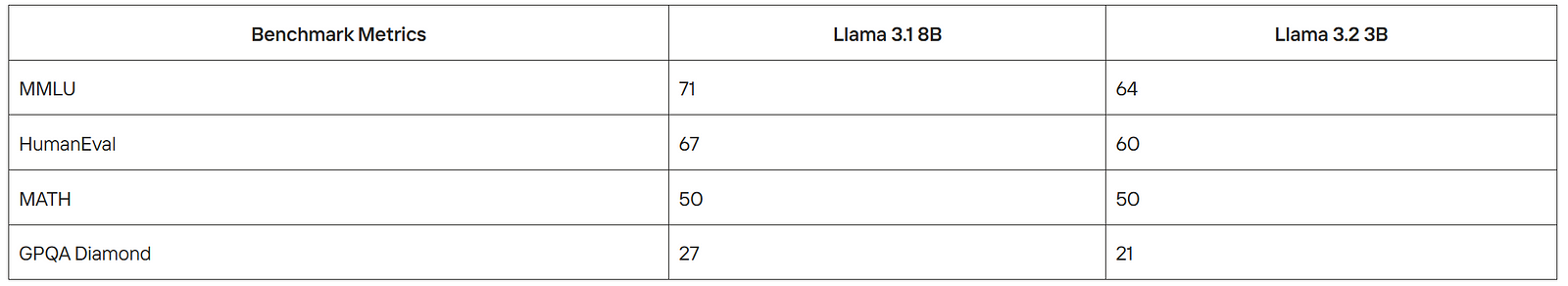
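The price ratio carries over directly to per-request cost. The sketch below shows the arithmetic only. The dollar figures are hypothetical placeholders, chosen so that the 3B price is half the 8B price as stated above; the actual rates are those in the pricing chart and on the Novita AI website. The dictionary keys are labels for this example, not API model identifiers.

```python
# Cost of a request = tokens / 1,000,000 x price per 1M tokens,
# summed over input and output tokens.
# PLACEHOLDER prices in USD per 1M tokens -- NOT real Novita AI rates.
PLACEHOLDER_PRICES = {
    "llama-3.1-8b": {"input": 1.00, "output": 1.00},
    "llama-3.2-3b": {"input": 0.50, "output": 0.50},  # half of the 8B price
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the cost in USD of one request under the placeholder prices."""
    price = PLACEHOLDER_PRICES[model]
    total = input_tokens * price["input"] + output_tokens * price["output"]
    return total / 1_000_000


# Example workload: 2,000 input tokens and 500 output tokens per request.
for model in PLACEHOLDER_PRICES:
    print(f"{model}: ${request_cost(model, 2_000, 500):.6f} per request")
```

Because both the input and output prices are halved, the cost of any given workload is halved as well, regardless of its mix of input and output tokens.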

Benchmark Comparison

Now that we’ve established the basic characteristics of each model, let’s delve into their performance across various benchmarks. This comparison will help illustrate their strengths in different areas.

Llama 3.1 8B outperforms Llama 3.2 3B on the MMLU, HumanEval, and GPQA Diamond benchmarks. Both models perform equally on the MATH benchmark. If better performance on these specific benchmarks is required, Llama 3.1 8B is the preferable choice. However, other factors such as cost or specific task requirements should also be considered for a comprehensive decision.

Applications and Use Cases

Llama 3.1 8B:

Multilingual conversational agents

Coding assistants

General-purpose text-based tasks

Long-form summarization

Llama 3.2 3B:

On-device AI for mobile applications

Edge computing with low-latency, privacy-preserving AI

Summarization and tool use within devices

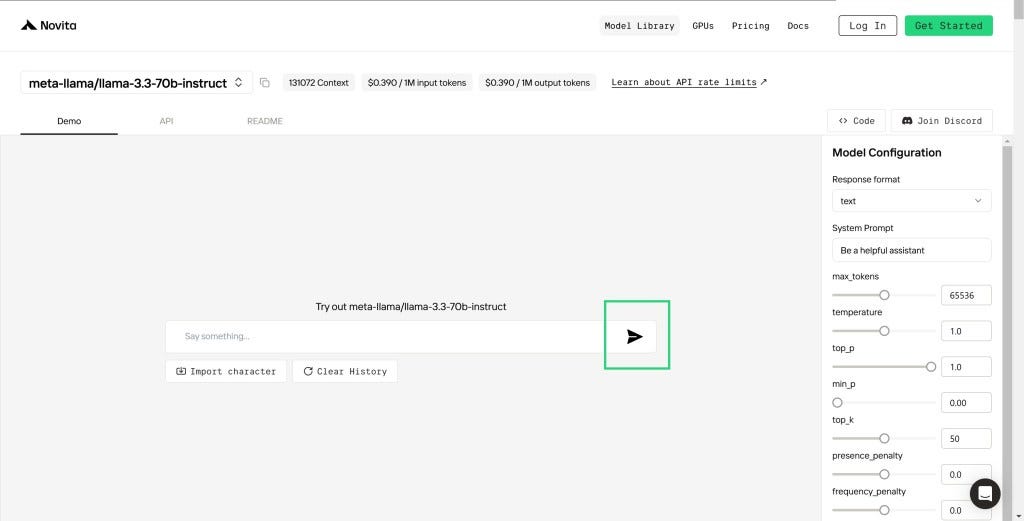

Accessibility and Deployment through Novita AI

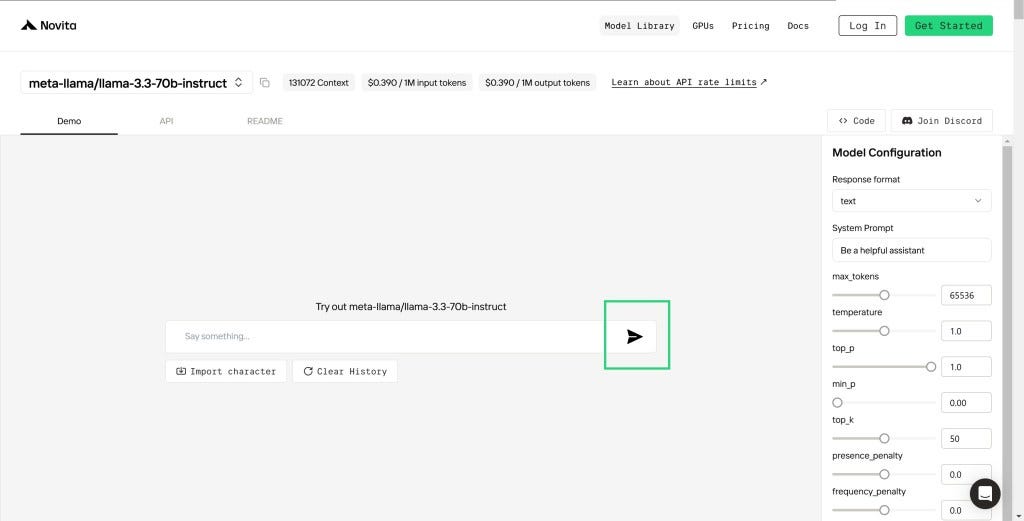

Step 1: Log In and Access the Model Library

Log in to your account and click on the Model Library button.

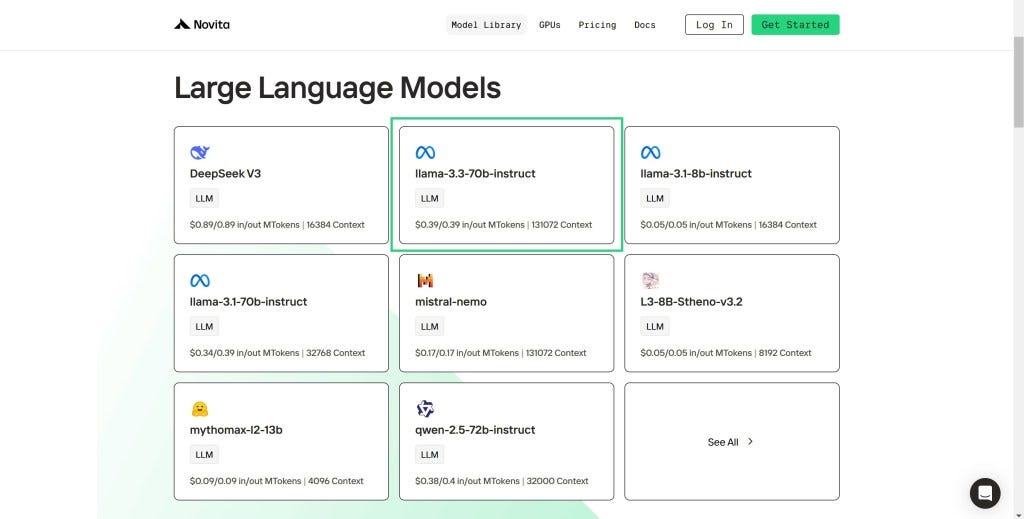

Step 2: Choose Your Model

Browse through the available options and select the model that suits your needs.

Step 3: Start Your Free Trial

Begin your free trial to explore the capabilities of the selected model.

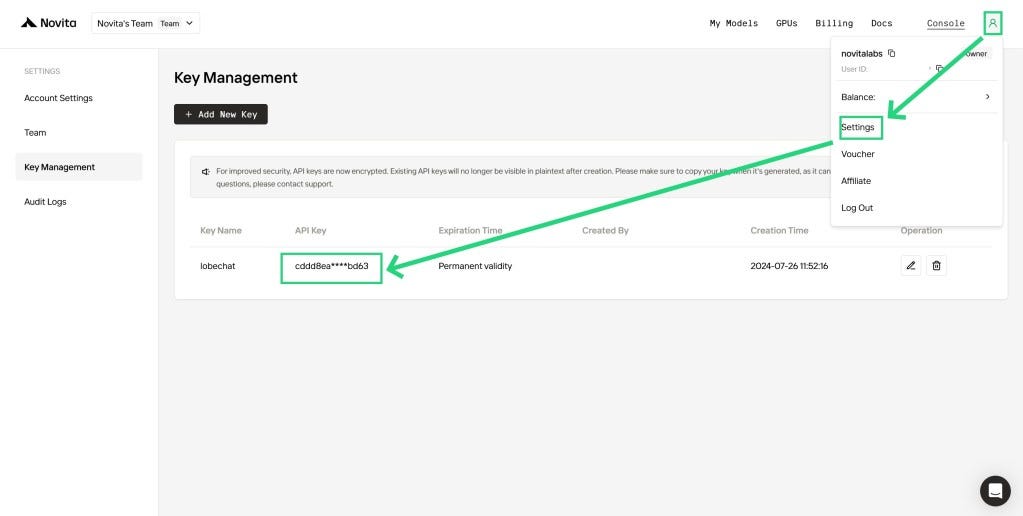

Step 4: Get Your API Key

To authenticate with the API, Novita AI provides you with a new API key. Open the “Settings” page and copy the API key as shown in the image.

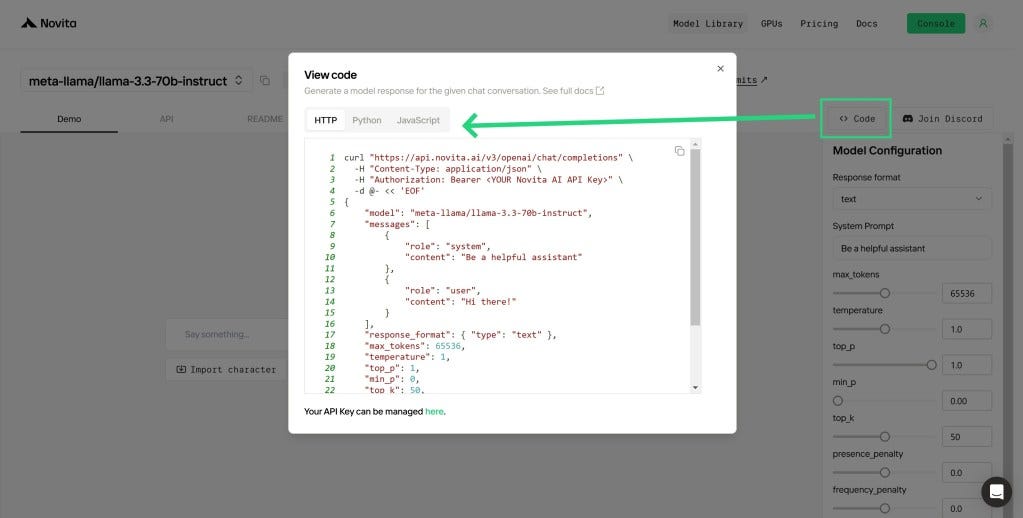
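Rather than pasting the key into source files, a common practice is to read it from an environment variable. The sketch below assumes a variable named `NOVITA_API_KEY`. That name and the `load_api_key` helper are illustrative choices, not something Novita AI prescribes.

```python
import os
from typing import Mapping, Optional


def load_api_key(
    env: Optional[Mapping[str, str]] = None,
    name: str = "NOVITA_API_KEY",  # assumed variable name for this sketch
) -> str:
    """Return the API key stored in the given environment mapping.

    Falls back to os.environ when no mapping is passed. Raises RuntimeError
    if the variable is missing or blank.
    """
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {name} environment variable to your Novita AI API key"
        )
    return key


# Demonstration with an explicit mapping so the snippet runs without a real key.
print(load_api_key({"NOVITA_API_KEY": "sk-example"}))
```

In an application, calling `load_api_key()` with no arguments reads from the process environment, and the result can be passed as the `api_key` argument when the client is created.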

Step 5: Install the API

Install the API client library using the package manager for your programming language.

After installation, import the necessary libraries into your development environment and initialize the client with your API key to start interacting with Novita AI LLMs. The following is an example of using the chat completions API for Python users.

```python
# Requires the OpenAI Python client: pip install openai
from openai import OpenAI

client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
    api_key="<YOUR Novita AI API Key>",
)

model = "meta-llama/llama-3.2-3b-instruct"
stream = True  # or False
max_tokens = 512

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {
            "role": "system",
            "content": "Act like you are a helpful assistant.",
        },
        {
            "role": "user",
            "content": "Hi there!",
        },
    ],
    stream=stream,
    max_tokens=max_tokens,
)

if stream:
    # Streaming: print each chunk as it arrives, without extra newlines.
    for chunk in chat_completion_res:
        print(chunk.choices[0].delta.content or "", end="")
else:
    # Non-streaming: the full reply is available at once.
    print(chat_completion_res.choices[0].message.content)
```
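To compare the two models on your own prompts, the same request can be sent to each in turn. The sketch below reuses the endpoint and the Llama 3.2 3B model name from the example above. The Llama 3.1 8B identifier `meta-llama/llama-3.1-8b-instruct` is an assumption inferred from the naming pattern and should be checked against the Model Library. The helper names and the `NOVITA_API_KEY` environment variable are hypothetical.

```python
import os

BASE_URL = "https://api.novita.ai/v3/openai"  # endpoint from the example above
MODELS = [
    "meta-llama/llama-3.2-3b-instruct",  # from the example above
    "meta-llama/llama-3.1-8b-instruct",  # ASSUMED identifier; verify in the Model Library
]


def build_request(model: str, prompt: str, max_tokens: int = 256) -> dict:
    """Build the keyword arguments for client.chat.completions.create()."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "stream": False,
    }


def compare(prompt: str, api_key: str) -> dict:
    """Send the same prompt to every model and return {model: reply text}."""
    from openai import OpenAI  # imported lazily so the helpers work without the package

    client = OpenAI(base_url=BASE_URL, api_key=api_key)
    replies = {}
    for model in MODELS:
        res = client.chat.completions.create(**build_request(model, prompt))
        replies[model] = res.choices[0].message.content
    return replies


if __name__ == "__main__":
    key = os.environ.get("NOVITA_API_KEY")  # hypothetical variable name
    if key:
        prompt = "Summarize the benefits of on-device AI in two sentences."
        for model, reply in compare(prompt, key).items():
            print(f"--- {model} ---\n{reply}\n")
    else:
        print("Set NOVITA_API_KEY to run the comparison.")
```

Keeping the request construction in `build_request` means both models receive identical parameters, so any difference in the replies comes from the models rather than from the call.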

Upon registration, Novita AI provides a $0.5 credit to get you started!

If the free credit is used up, you can pay to continue using the service.

Both Llama 3.2 3B and Llama 3.1 8B are powerful models, but they cater to different use cases. Llama 3.2 3B is an excellent choice for developers building on-device AI applications, where resource constraints and privacy are major concerns. Its smaller size and optimizations for mobile devices make it a practical option for tasks such as summarization, instruction following, and rewriting. Llama 3.1 8B is the more capable option for applications that require advanced reasoning and general knowledge, and it suits general-purpose applications as well as coding and multilingual interactions.

Frequently Asked Questions

What is Meta Llama 3.1, and what makes it significant?

Meta Llama 3.1 is a family of large language models with up to 405 billion parameters. Its largest model is notable as the first openly available model with capabilities comparable to leading closed-source models like GPT-4 and Claude 3.5 Sonnet.

How do Meta’s Llama models compare to other open-source and closed-source models?

Llama 3.1 models are designed to compete with top foundation models like GPT-4 and Claude 3.5 Sonnet, showing comparable performance in larger versions, while Llama 3.2’s smaller models excel within their size category, even outperforming similar models like Gemma.
