Key Highlights

Model Overview

Llama 3.2 3B: A lightweight, text-only model designed for low-latency applications, optimized for edge devices with 3.21 billion parameters.

DeepSeek V3: A powerful Mixture-of-Experts (MoE) model featuring 671 billion parameters, designed for high-performance tasks in coding and reasoning.

Model Differences

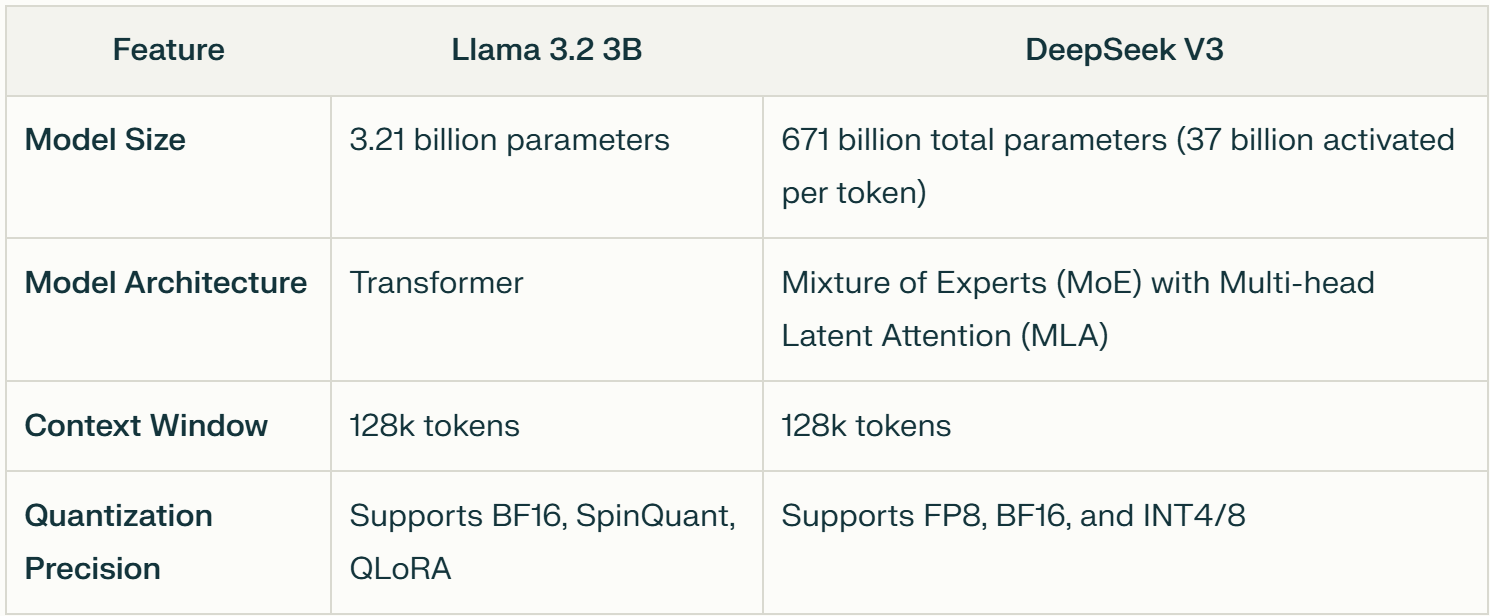

Architecture: Llama 3.2 3B uses a standard transformer architecture, while DeepSeek V3 employs a Mixture-of-Experts architecture with advanced features like Multi-Head Latent Attention.

Context Length: Both models support a context length of up to 128K tokens. Separately, DeepSeek V3 activates only 37 billion of its 671 billion parameters per token, which reduces the compute needed for each token.
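To put the activation figure in perspective, here is a minimal sketch that computes the share of DeepSeek V3's parameters used for each token. It relies only on the two parameter counts quoted in this article; the function and constant names are illustrative.

```python
# Parameter counts quoted in this article for DeepSeek V3.
TOTAL_PARAMS = 671e9   # total parameters
ACTIVE_PARAMS = 37e9   # parameters activated per token


def active_fraction(active: float, total: float) -> float:
    """Return the share of parameters used for each token."""
    return active / total


fraction = active_fraction(ACTIVE_PARAMS, TOTAL_PARAMS)
print(f"Active per token: {fraction:.1%}")  # about 5.5%
```

In other words, only a small slice of the network does work for any given token, even though the full model is far larger.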

Performance

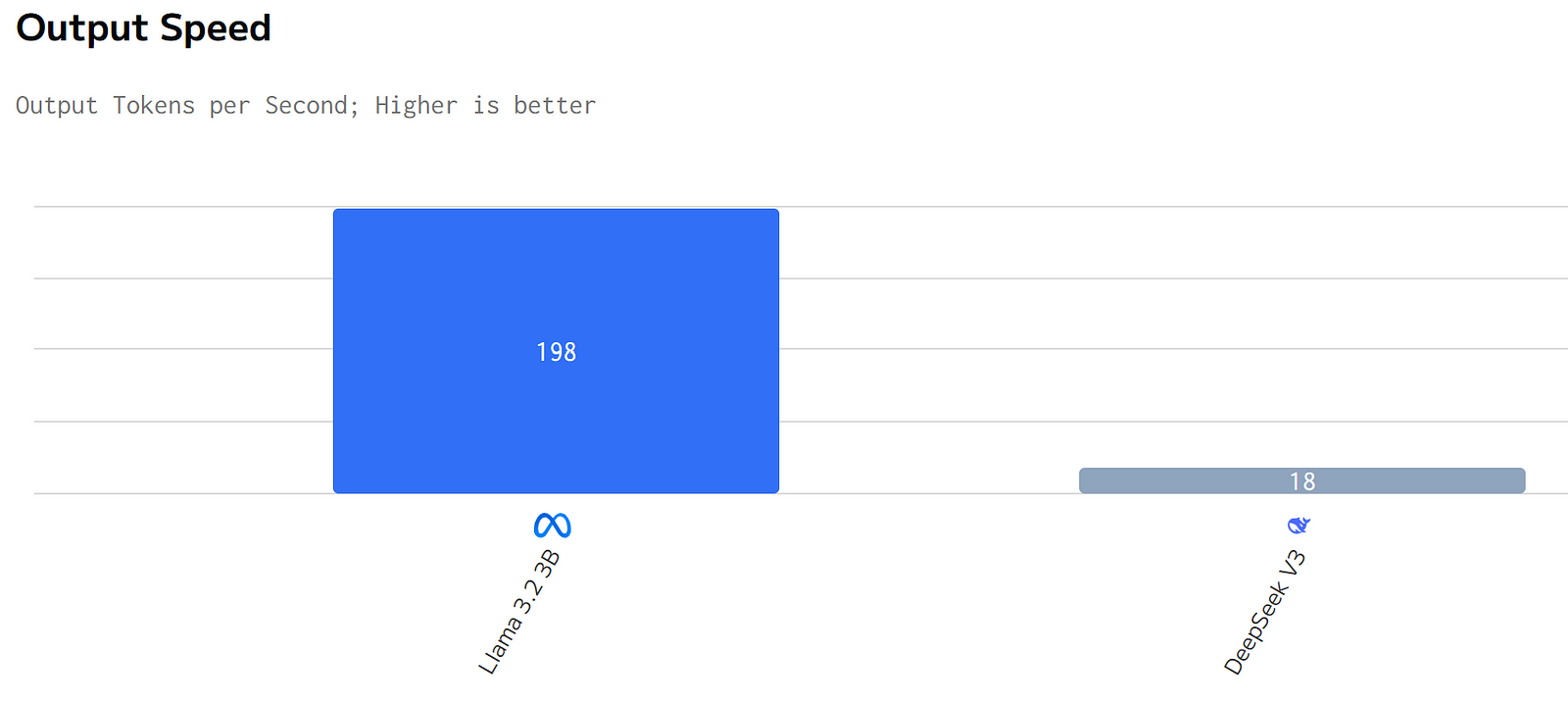

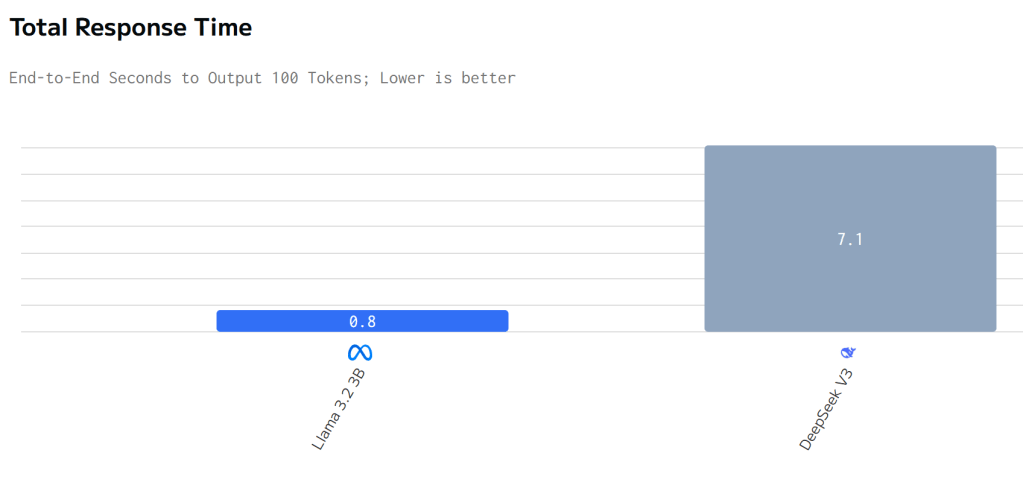

Llama 3.2 3B excels in tasks like summarization and translation, achieving an output speed of approximately 203.5 tokens per second.

DeepSeek V3 outperforms in complex reasoning and coding benchmarks, achieving high scores on MMLU and HumanEval tests.

Hardware Requirements

Llama 3.2 3B can run on devices with lower VRAM requirements (around 6GB recommended), making it suitable for mobile applications.

DeepSeek V3, due to its size and complexity, requires substantial GPU resources, typically needing high-end GPUs with significant VRAM.

Use Cases

Llama 3.2 3B is ideal for mobile AI applications, customer service bots, and personal writing assistants.

DeepSeek V3 is well-suited for educational tools, coding platforms, and complex data analysis tasks in enterprise settings.

If you want to evaluate Llama 3.2 3B and DeepSeek V3 on your own use cases, Novita AI provides a $0.5 credit upon registration to get you started!

This article provides a detailed technical comparison of Meta’s Llama 3.2 3B and DeepSeek-V3 models. The goal is to offer a practical guide for developers and researchers on the specific characteristics and use cases for each model. We will explore model architecture, benchmark performance, hardware requirements, and suitable applications to help you make informed decisions when choosing between them.

Basic Introduction of the Models

To begin our comparison, we first outline the fundamental characteristics of each model.

Llama 3.2 3B

Release Date: September 25, 2024

Other Models:

Key Features:

Model Architecture: an auto-regressive language model that utilizes an optimized transformer architecture.

Technical Features: 128K context window length

Performance Metrics: Excels in tasks like summarization, instruction following, and rewriting

Training Scale: 9 trillion tokens of data sourced from publicly available online content.

Language Support: supports eight languages

Designed for mobile devices and edge computing

DeepSeek V3

Release Date: December 26, 2024

Model Scale: 671 billion total parameters, with 37 billion activated per token

Key Features:

Model Architecture: Mixture-of-Experts (MoE) model

Technical Features: 128K context window length

Performance Metrics: Excellence in code-related and math tasks

Training Scale: Trained on 14.8 trillion tokens

Language Support: no specific information
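To make the Mixture-of-Experts idea more concrete, the toy sketch below shows generic top-k routing: a gate scores every expert, only the k best experts run, and their outputs are mixed by the renormalized gate weights. This is a simplified illustration, not DeepSeek V3's actual router. The experts, the gate scores, and all function names are made up for the example.

```python
import math


def softmax(scores):
    """Convert raw gate scores into probabilities."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def route_top_k(gate_scores, k):
    """Pick the k highest-scoring experts and renormalize their weights."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return {i: probs[i] / norm for i in top}


def moe_forward(x, experts, gate_scores, k=2):
    """Run only the selected experts and mix their outputs."""
    weights = route_top_k(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())


# Four toy "experts", each a simple scalar function (hypothetical).
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_scores = [0.1, 2.0, 2.0, -1.0]  # made-up router scores for one token

selected = route_top_k(gate_scores, k=2)
print(sorted(selected))  # indices of the experts that actually run
print(moe_forward(3.0, experts, gate_scores))
```

Only two of the four experts are evaluated for this input, which is the same principle that lets a large MoE model activate a fraction of its parameters per token.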

Model Comparison

Speed and Cost Comparison

If you want to test the models yourself, you can start a free trial on the Novita AI website.

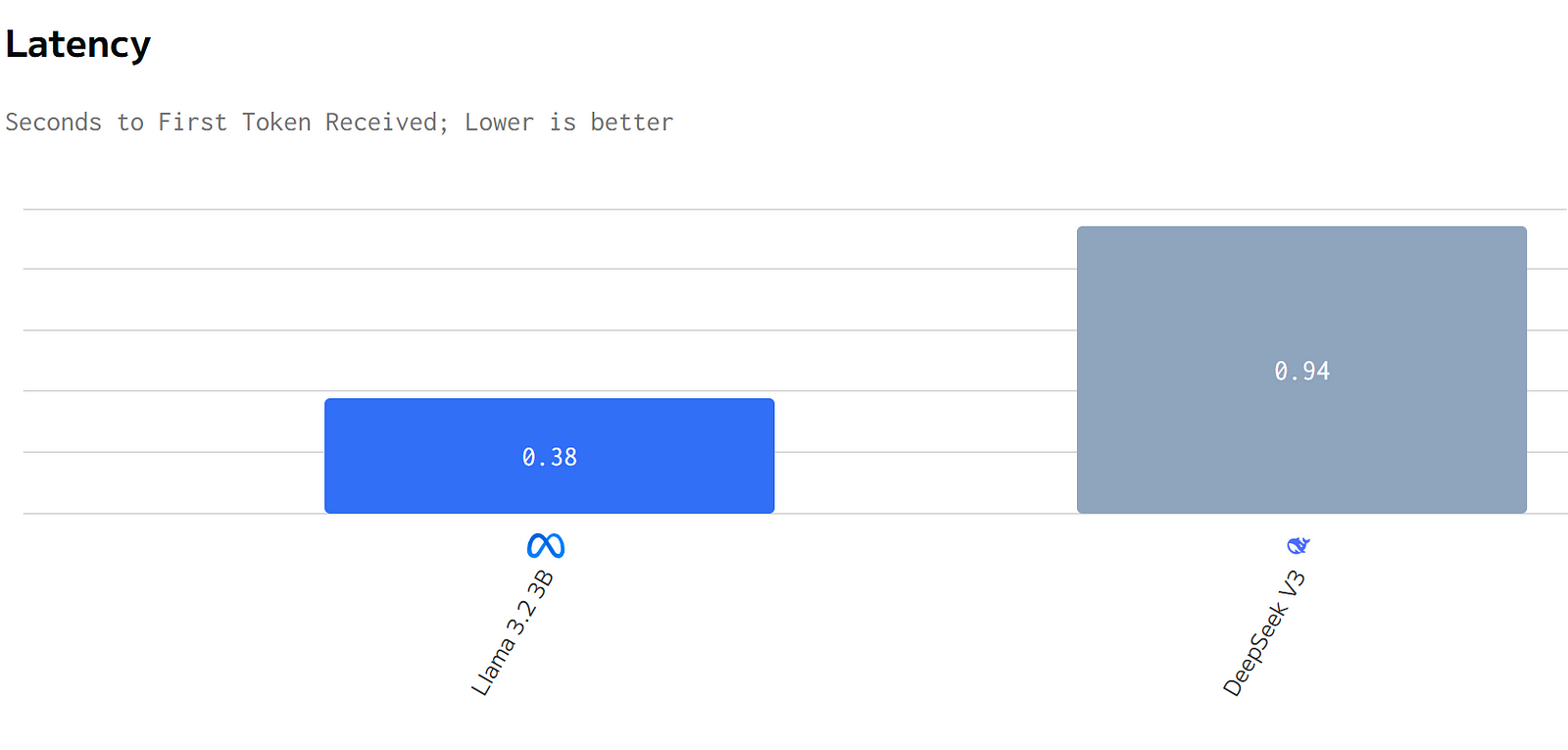

Speed Comparison

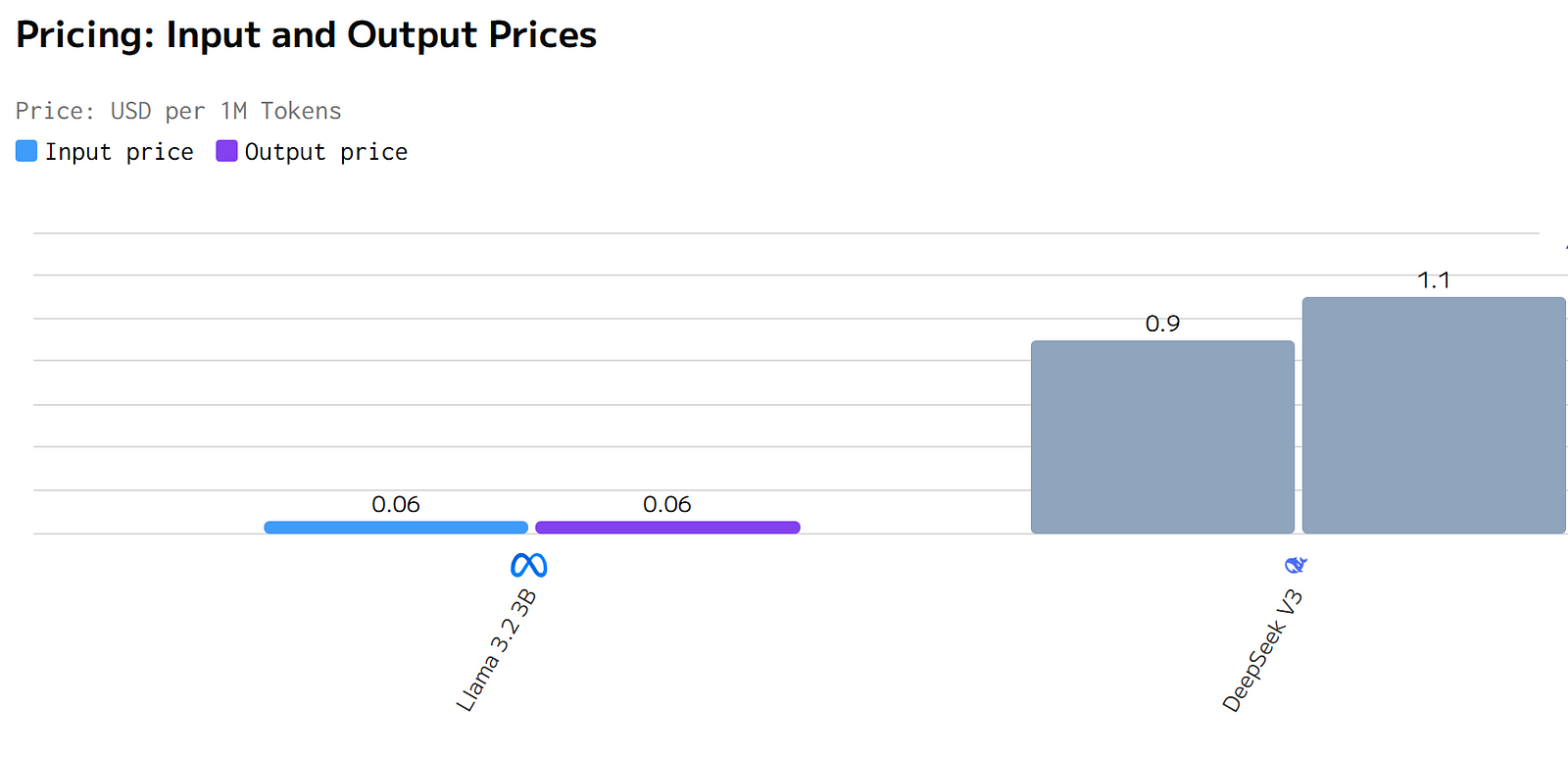

Cost Comparison

In summary, Llama 3.2 3B's advantage in both speed and cost makes it the more attractive option for developers and businesses that prioritize efficient, low-cost inference.
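As a rough way to read the output speed quoted earlier for Llama 3.2 3B (approximately 203.5 tokens per second), the sketch below converts a response length into generation time. It assumes a steady output rate and ignores time to first token and network latency, so treat the results as a lower bound. The helper name is illustrative.

```python
LLAMA_3B_SPEED = 203.5  # tokens per second, the figure quoted in this article


def generation_seconds(num_tokens: int, tokens_per_second: float) -> float:
    """Estimate how long it takes to generate num_tokens at a steady rate."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    return num_tokens / tokens_per_second


for n in (128, 512, 2048):
    seconds = generation_seconds(n, LLAMA_3B_SPEED)
    print(f"{n} tokens: ~{seconds:.2f} s")
```

At that rate, a 512-token response takes roughly two and a half seconds of pure generation time.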

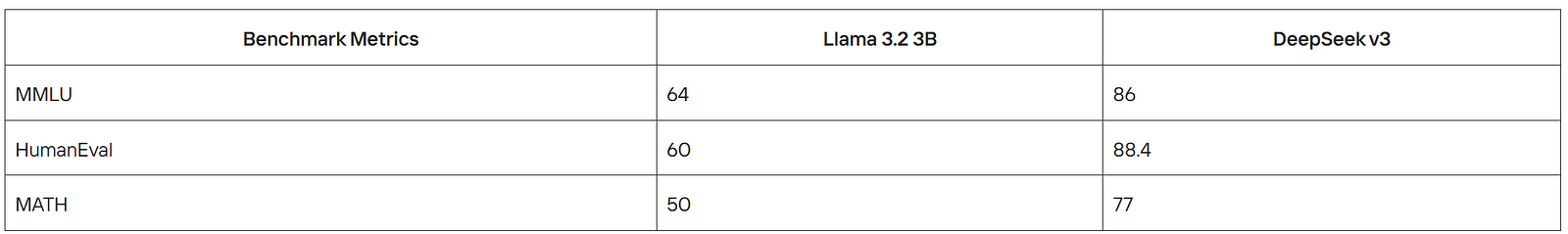

Benchmark Comparison

Now that we’ve established the basic characteristics of each model, let’s delve into their performance across various benchmarks. This comparison will help illustrate their strengths in different areas.

The table shows that DeepSeek V3 outperforms Llama 3.2 3B on three key benchmarks: MMLU, HumanEval, and MATH. DeepSeek V3 excels at complex tasks that demand strong reasoning.

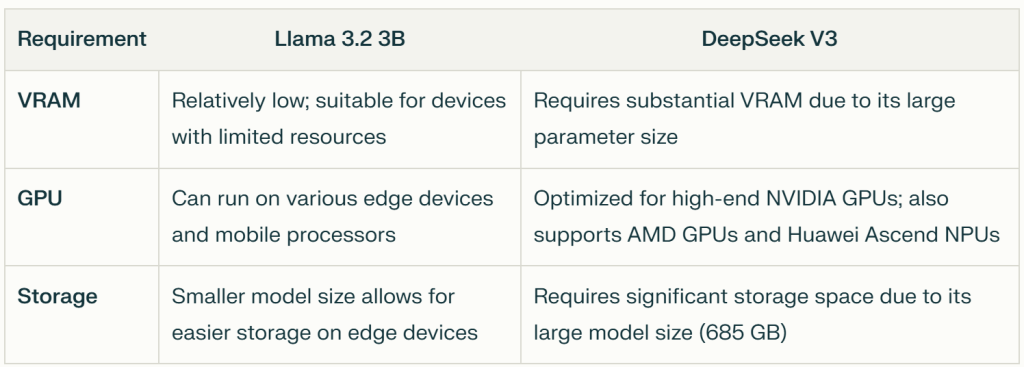

Hardware Requirements

In summary, Llama 3.2 3B is more resource-efficient and suitable for a wider range of devices, including those with limited resources, while DeepSeek V3 is more resource-intensive, requiring substantial VRAM and storage, and is optimized for high-performance GPUs.
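A back-of-the-envelope calculation helps explain the gap. The sketch below estimates the memory needed just to hold each model's weights, using the parameter counts quoted in this article. The precisions (2 bytes per parameter for 16-bit weights, 1 byte for 8-bit weights) are assumptions chosen for illustration, and the estimate leaves out the KV cache, activations, and runtime overhead.

```python
LLAMA_3B_PARAMS = 3.21e9     # parameter count quoted in this article
DEEPSEEK_V3_PARAMS = 671e9   # parameter count quoted in this article


def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory for the weights alone, in gigabytes (1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


# Assumed precisions: 2 bytes (16-bit) and 1 byte (8-bit) per parameter.
print(f"Llama 3.2 3B, 16-bit: {weight_memory_gb(LLAMA_3B_PARAMS, 2):.2f} GB")
print(f"DeepSeek V3, 16-bit:  {weight_memory_gb(DEEPSEEK_V3_PARAMS, 2):.0f} GB")
print(f"DeepSeek V3, 8-bit:   {weight_memory_gb(DEEPSEEK_V3_PARAMS, 1):.0f} GB")
```

The 16-bit estimate for Llama 3.2 3B lands close to the 6GB recommendation mentioned earlier. For DeepSeek V3, all experts typically need to be loaded even though only a subset is active per token, which is why the total parameter count drives the memory footprint.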

Applications and Use Cases

Llama 3.2 3B:

On-Device AI: Ideal for local processing on mobile and edge devices, offering fast and private AI applications.

Personal Information Management: Suitable for applications requiring summarization, rewriting, and knowledge retrieval.

Multilingual Support: Provides strong multilingual text generation.

DeepSeek V3:

Complex Reasoning: Excels in tasks involving math, coding, and complex logical reasoning.

High-Performance AI: Suitable for cloud-based applications requiring high performance and reliability.

Synthetic Data Generation: Cost-effective for generating synthetic data at large scales.

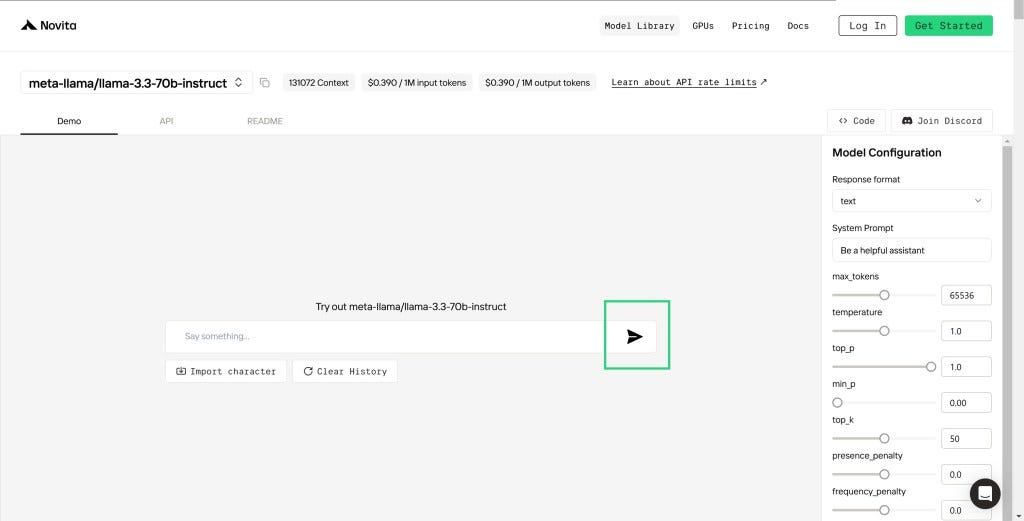

Accessibility and Deployment through Novita AI

Step 1: Log In and Access the Model Library

Log in to your account and click on the Model Library button.

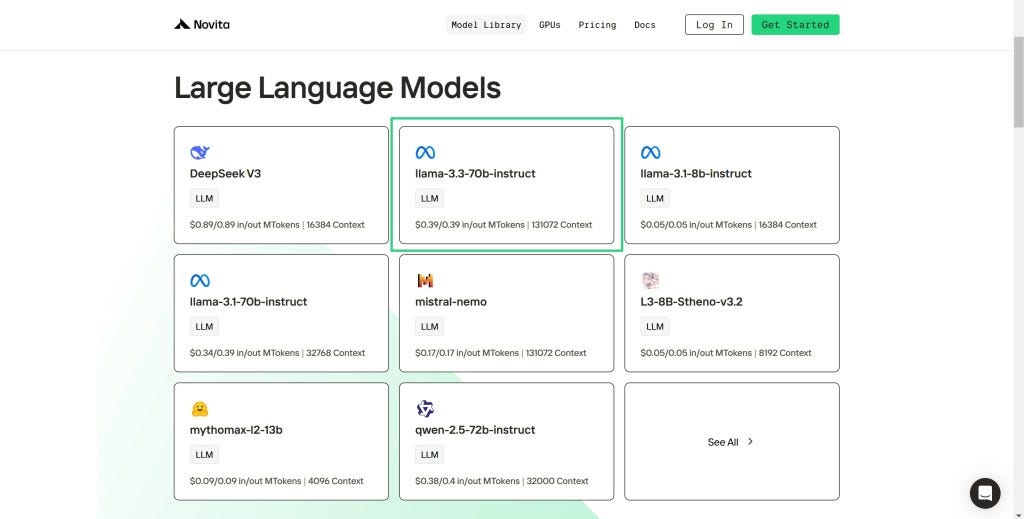

Step 2: Choose Your Model

Browse through the available options and select the model that suits your needs.

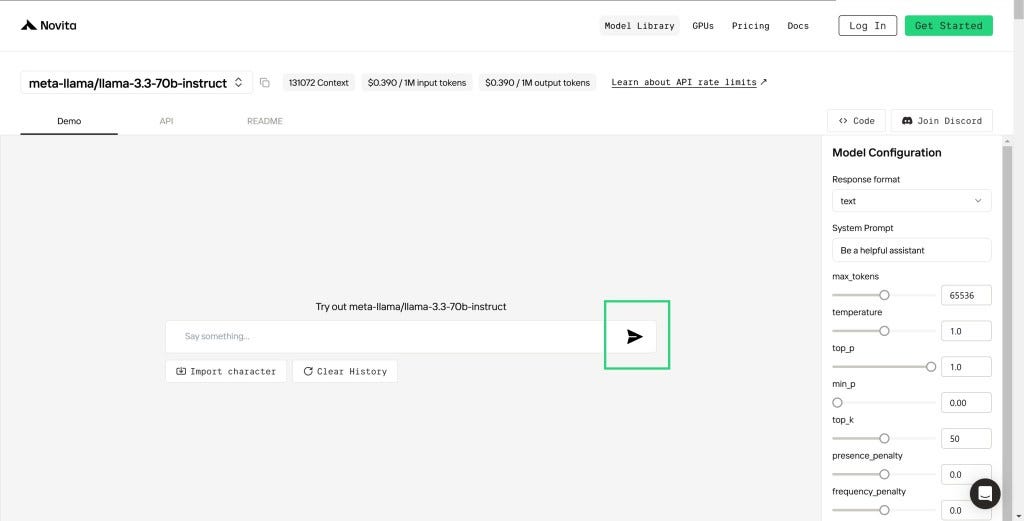

Step 3: Start Your Free Trial

Begin your free trial to explore the capabilities of the selected model.

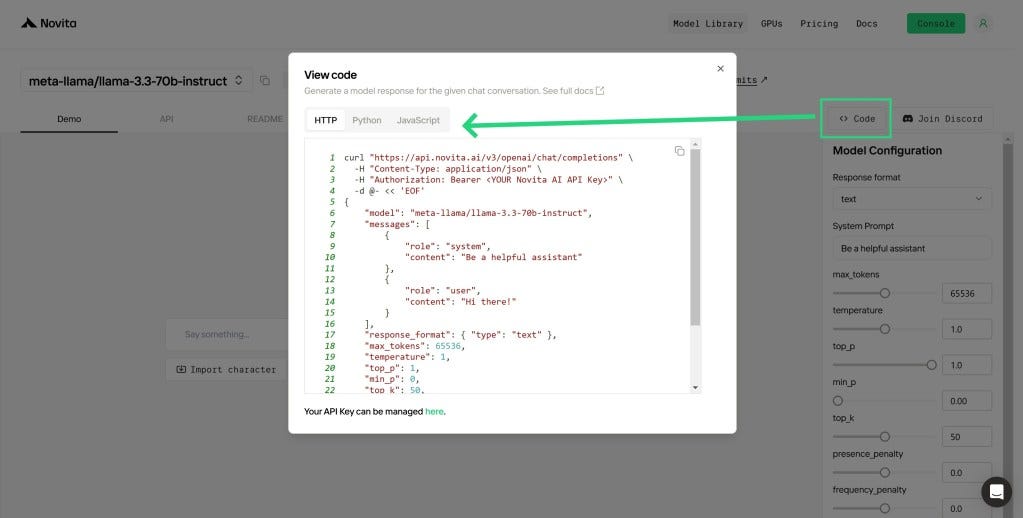

Step 4: Get Your API Key

To authenticate with the API, you need an API key. Open the “Settings” page and copy the API key as indicated in the image.
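Once you have copied the key, it is generally safer to keep it out of your source code. Here is a minimal sketch that reads it from an environment variable. The variable name NOVITA_API_KEY and the helper load_api_key are assumptions made for this example, not names defined by Novita AI.

```python
import os


def load_api_key(env_var: str = "NOVITA_API_KEY") -> str:
    """Read the API key from an environment variable (the name is an assumption)."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable to your API key.")
    return key


try:
    api_key = load_api_key()
    print("API key loaded.")
except RuntimeError as err:
    # The key is missing: report it instead of sending an unauthenticated request.
    print(err)
```

The returned value can then be passed as the api_key argument when you create the client.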

Step 5: Install the API

Install the API client using the package manager for your programming language. For Python, the example below uses the openai package, which can be installed with pip install openai.

After installation, import the necessary libraries into your development environment and initialize the client with your API key to start interacting with the Novita AI LLM API. The following is an example of using the chat completions API for Python users.

    from openai import OpenAI

    # Point the OpenAI-compatible client at the Novita AI endpoint.
    client = OpenAI(
        base_url="https://api.novita.ai/v3/openai",
        # Get the Novita AI API Key by referring to: https://novita.ai/docs/get-started/quickstart.html#_2-manage-api-key.
        api_key="<YOUR Novita AI API Key>",
    )

    model = "deepseek/deepseek-v3"
    stream = True  # or False
    max_tokens = 512

    chat_completion_res = client.chat.completions.create(
        model=model,
        messages=[
            {
                "role": "system",
                "content": "Act like you are a helpful assistant.",
            },
            {
                "role": "user",
                "content": "Hi there!",
            },
        ],
        stream=stream,
        max_tokens=max_tokens,
    )

    if stream:
        # Print each streamed fragment as it arrives, without extra newlines.
        for chunk in chat_completion_res:
            print(chunk.choices[0].delta.content or "", end="")
    else:
        # Non-streaming responses contain the full message in one object.
        print(chat_completion_res.choices[0].message.content)
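If you would rather keep the full streamed reply as a single string instead of printing it piece by piece, a small helper can join the fragments. The sketch below is one possible approach. The collect_stream and fake_chunk names are hypothetical, and the fake chunks only imitate the shape of the streamed objects so that the example runs without a network call.

```python
from types import SimpleNamespace


def collect_stream(chunks) -> str:
    """Join the text deltas from a streamed chat completion into one string."""
    parts = []
    for chunk in chunks:
        if not chunk.choices:  # skip chunks that carry no choices
            continue
        delta = chunk.choices[0].delta.content
        if delta:  # the delta can be None or empty
            parts.append(delta)
    return "".join(parts)


def fake_chunk(text):
    """Build a stand-in object shaped like a streamed chunk (for the demo only)."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


demo_chunks = [
    fake_chunk("Hello"),
    fake_chunk(None),
    fake_chunk(", world"),
    SimpleNamespace(choices=[]),
]
reply = collect_stream(demo_chunks)
print(reply)  # Hello, world
```

With the real client, you would pass chat_completion_res to collect_stream when stream is set to True.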

Upon registration, Novita AI provides a $0.5 credit to get you started!

If the free credit is used up, you can pay to continue using the service.

Conclusion

Llama 3.2 3B is a strong choice for applications requiring efficient on-device processing, while DeepSeek V3 excels in computationally intensive tasks that benefit from its massive scale and advanced architecture. The choice between the two depends on specific application requirements, resource constraints, and performance demands.

Frequently Asked Questions

Which model is better for mobile applications?

Llama 3.2 3B is better suited for mobile applications due to its small size, low hardware requirements, and focus on on-device processing.

Which model is better for complex coding tasks?

DeepSeek V3 is more suitable for complex coding tasks due to its superior performance in code generation and logical reasoning.

Can DeepSeek V3 be run locally?

Yes, DeepSeek V3 can be run locally using various open-source frameworks like vLLM, SGLang, and LMDeploy; however, this requires high-end hardware resources.

Novita AI is the all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, and GPU instances: the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.