Experience the next generation of AI development with Novita AI's latest integration of Meta's Llama 3.2 models. Our platform now offers a comprehensive suite of models designed to meet diverse development needs while maintaining cost-effectiveness and superior performance.

What's New with Llama 3.2

| | Llama 2.0 (7B, 13B, 70B) | Llama 3.0 (8B, 70B) | Llama 3.1 (8B, 70B, 405B) | Llama 3.2 Multimodal (11B & 90B) | Llama 3.2 Lightweight Text Only (1B & 3B) |
| --- | --- | --- | --- | --- | --- |
| Release Date | July 18, 2023 | April 18, 2024 | July 23, 2024 | Sep 25, 2024 | Sep 25, 2024 |
| Context Window | 4K | 8K | 128K | 128K | 128K |
| Vocabulary Size | 32K | 128K | 128K | 128K | 128K |
| Official Multilingual | English Only | English Only | 8 Languages | 8 Languages | 8 Languages |
| Tool Calling | No | No | Yes | Yes | Yes |
| Knowledge Cutoff | Sep 2022 | Mar 2023 (8B), Dec 2023 (70B) | Dec 2023 | Dec 2023 | Dec 2023 |

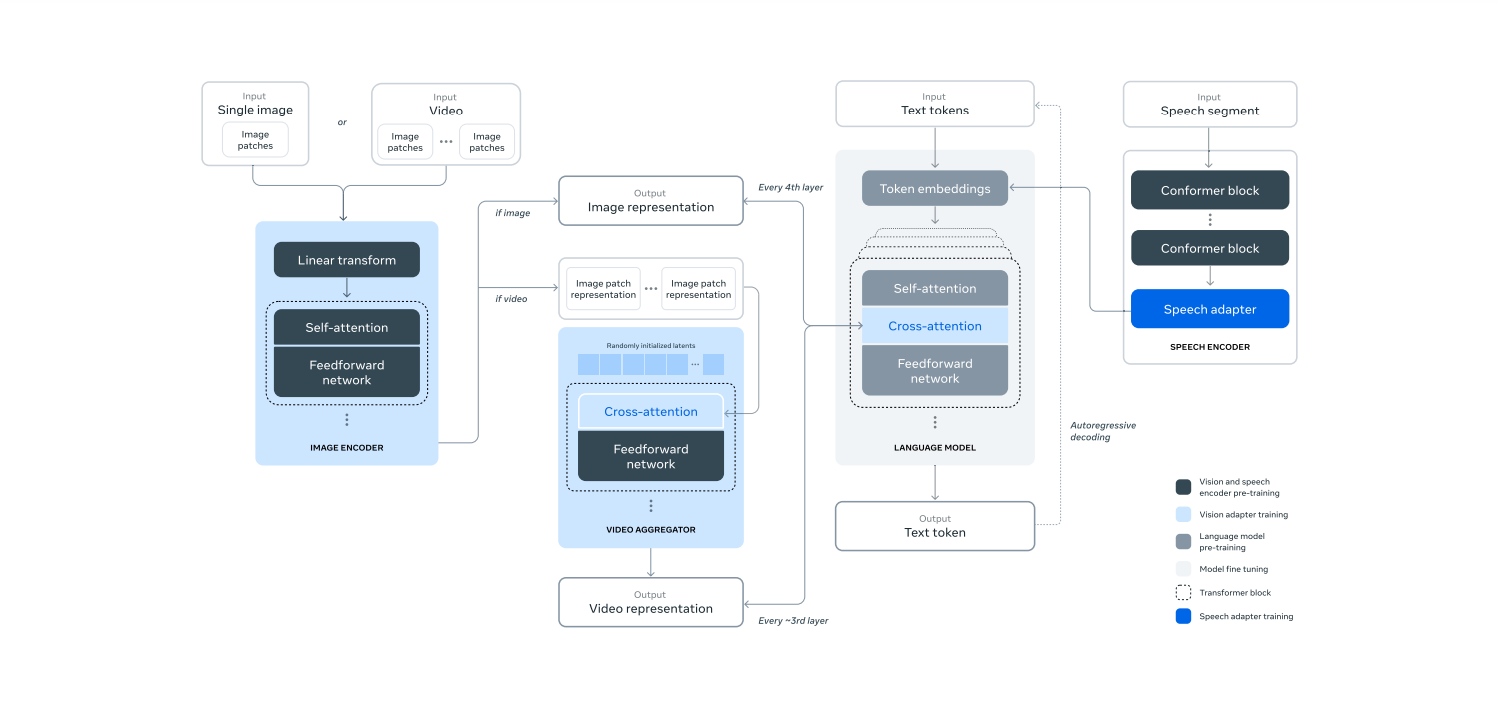

1) Multimodal Input in 11B and 90B Models

Source: Meta

Image Understanding: Recognizes objects, scenes, and drawings, along with OCR capabilities.

Captioning and QA: Generates captions and answers questions based on visual content.

Visual Reasoning: Analyzes equations, charts, and documents for enhanced visual reasoning.

2) Smaller Sizes in 1B and 3B Text-Only Models

New SLM (Small Language Model) Use Cases:

On-device summarization

Writing and translation

QA in multiple languages

Available Llama 3.2 Models on Novita AI

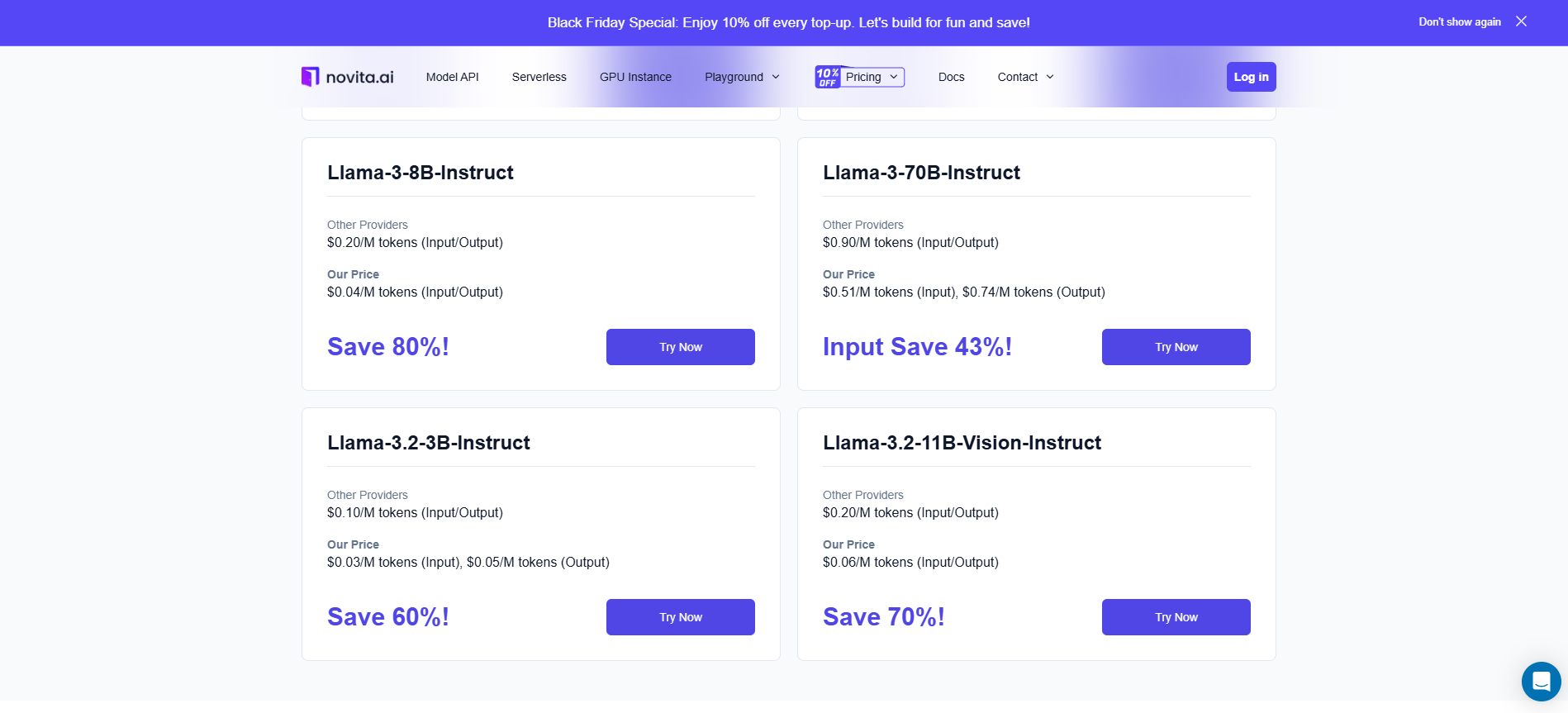

Novita AI proudly offers three powerful variants of Llama 3.2, each optimized for different use cases:

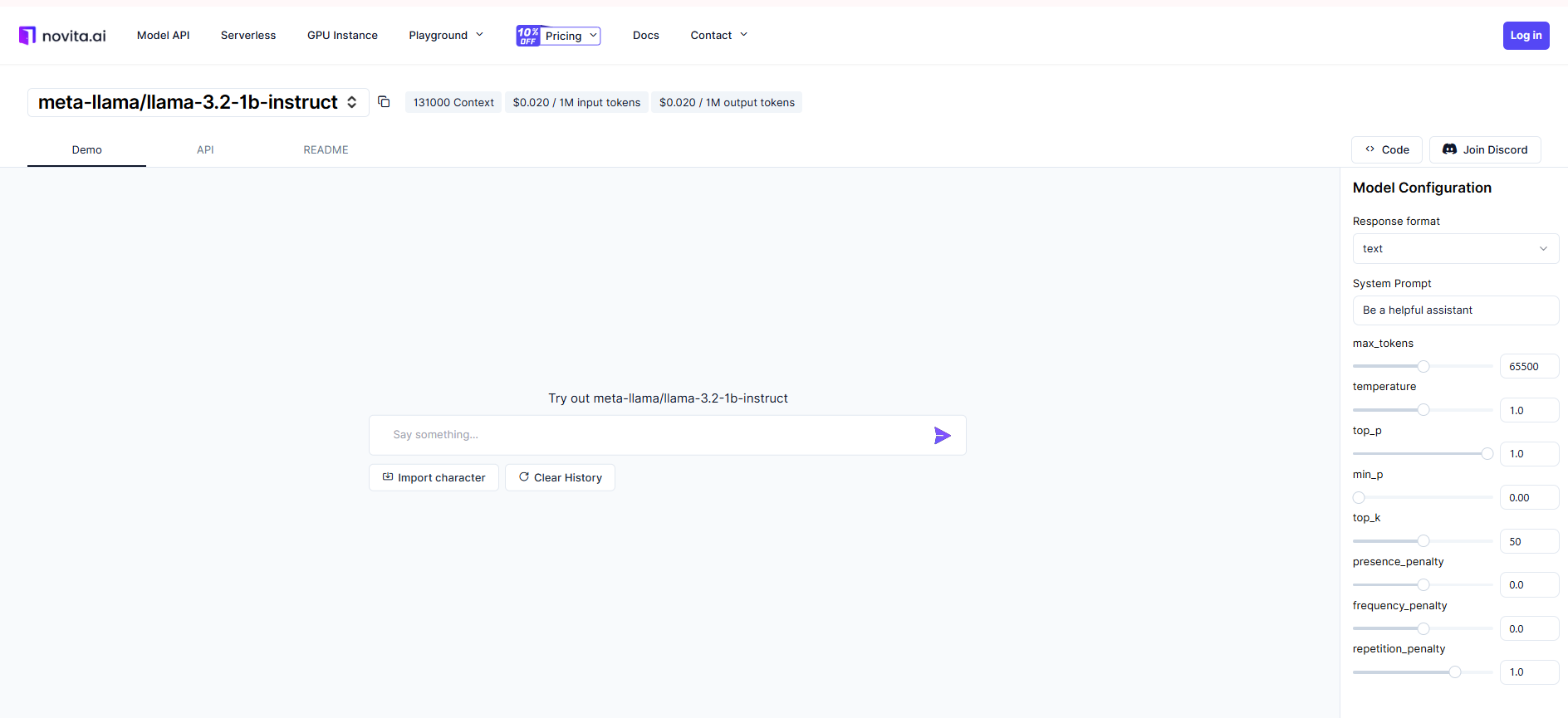

Llama 3.2 1B Instruct: Your Gateway to Efficient AI

Transform your development workflow with our most accessible model, featuring an impressive 131,000 token context window. At just $0.02/M tokens, this model delivers exceptional value for rapid prototyping and lightweight applications. Try Llama 3.2 1B Instruct Now

Llama 3.2 3B Instruct: Power Meets Performance

Unlock enhanced reasoning capabilities with our mid-tier model, offering a 32,768 token context length. With competitive pricing at $0.03/M input tokens and $0.05/M output tokens, it's perfectly positioned for medium-scale applications requiring robust performance. Try Llama 3.2 3B Instruct Now

Llama 3.2 11B Vision Instruct: Multimodal Excellence

Experience state-of-the-art multimodal processing with our advanced vision model. Supporting a 131,000 token context length at $0.06/M tokens, it excels in complex visual-linguistic tasks. Try Llama 3.2 11B Vision Instruct Now
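To make the pricing above concrete, here is a minimal cost-estimation sketch. The dictionary keys and the `estimate_cost` helper are hypothetical names chosen for illustration, not part of any Novita AI SDK. Because the 1B and 11B prices are quoted as a single per-token rate, the sketch assumes that rate applies to both input and output tokens.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """USD price per one million tokens."""
    input_per_m: float
    output_per_m: float


# Prices as quoted in this article. The keys are illustrative labels.
# Assumption: models quoted with one rate charge it for input and output alike.
PRICING = {
    "llama-3.2-1b-instruct": Pricing(0.02, 0.02),
    "llama-3.2-3b-instruct": Pricing(0.03, 0.05),
    "llama-3.2-11b-vision-instruct": Pricing(0.06, 0.06),
}


def estimate_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the estimated USD cost for the given token counts."""
    price = PRICING[model]
    total = input_tokens * price.input_per_m + output_tokens * price.output_per_m
    return total / 1_000_000


# 2M input tokens and 500K output tokens on the 3B model.
cost = estimate_cost("llama-3.2-3b-instruct", 2_000_000, 500_000)
print(f"${cost:.4f}")  # prints $0.0850
```

Treat the result as a rough planning figure and check current rates on the pricing page before budgeting.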

Advancing Multimodal AI with an Open Source Foundation

Applications of Llama 3.2 Models

The Llama 3.2 vision models, with 11 billion and 90 billion parameters, process both images and text. Served through the Novita AI platform, they support real-world applications such as:

Multimodal Use Cases

Interactive Agents: Develop AI agents capable of responding to both text and image inputs, offering an enhanced user experience.

Image Captioning: Create high-quality image descriptions for use in e-commerce, content creation, and digital accessibility.

Visual Search: Enable users to perform searches using images, improving search efficiency in e-commerce and retail settings.

Document Intelligence: Analyze documents containing both text and visuals, such as legal contracts and financial reports.
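As a rough illustration of how an image-plus-text request for these use cases might be assembled, the sketch below builds a chat message that pairs a question with an inline image. It assumes the endpoint accepts OpenAI-style `image_url` content parts with base64 data URLs, which this article does not confirm. `build_vision_messages` is a hypothetical helper, and the image bytes are a placeholder rather than a real PNG.

```python
import base64


def build_vision_messages(image_bytes: bytes, question: str,
                          mime_type: str = "image/png") -> list:
    """Build a single user message containing a text part and an image part."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                # Assumption: OpenAI-style image_url parts are supported.
                {"type": "image_url", "image_url": {"url": data_url}},
            ],
        }
    ]


# Placeholder bytes; in practice, read them from an image file.
placeholder = b"\x89PNG placeholder bytes"
messages = build_vision_messages(
    placeholder, "Write a one-sentence caption for this image."
)
print(messages[0]["content"][0]["text"])
```

The resulting list could be passed as the `messages` argument of a chat completion request to the vision model, if the endpoint supports this content format.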

Industry-Specific Applications

The Llama 3.2 endpoints from Novita AI open up new possibilities across various industries:

Healthcare: Enhance medical image analysis to improve diagnostic accuracy and patient care.

Retail & E-Commerce: Transform shopping experiences with image and text-based searches and personalized recommendations.

Finance & Legal: Streamline workflows by analyzing graphical and textual content, optimizing contract reviews and audits.

Education & Training: Develop interactive educational tools that process both text and visuals to boost engagement.

Getting Started: Your Journey with Novita AI

Step 1: Select Your Model

Choose based on your specific requirements:

For prototyping: Visit our Llama 3.2 1B Instruct Demo for initial testing.

For production applications: Experiment with the Llama 3.2 3B Instruct model for enhanced capabilities.

For visual-linguistic tasks: Test multimodal features in our Llama 3.2 11B Vision Instruct Demo.

Alternatively, integrate Llama models directly into your applications with the OpenAI-compatible Python client shown in Step 2.
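The selection guidance in Step 1 can be captured as a small lookup, sketched below. The use-case labels and the `select_model` helper are hypothetical. The 3B and 11B model IDs are the ones used in this article's client examples, while the 1B ID is an assumption that follows the same naming pattern.

```python
# Maps a coarse use case to a model ID.
MODEL_BY_USE_CASE = {
    # Assumed ID: the article does not show the 1B identifier.
    "prototyping": "meta-llama/llama-3.2-1b-instruct",
    "production": "meta-llama/llama-3.2-3b-instruct",
    "vision": "meta-llama/llama-3.2-11b-vision-instruct",
}


def select_model(use_case: str) -> str:
    """Return the model ID for a use case, or raise ValueError if unknown."""
    try:
        return MODEL_BY_USE_CASE[use_case]
    except KeyError:
        known = ", ".join(sorted(MODEL_BY_USE_CASE))
        raise ValueError(
            f"Unknown use case {use_case!r}; expected one of: {known}"
        ) from None


print(select_model("vision"))  # prints meta-llama/llama-3.2-11b-vision-instruct
```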

Step 2: Integrate and Deploy

Follow our straightforward integration process:

Sign up for a Novita AI account.

Access our comprehensive LLM API documentation.

Implement the API calls in your preferred programming language.

Test thoroughly in your development environment.

Example with Python Client

from openai import OpenAI

# The OpenAI client works here because the endpoint is OpenAI-compatible.
client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    api_key="Your API Key",
)

model = "meta-llama/llama-3.2-11b-vision-instruct"
stream = True  # or False
max_tokens = 65500
system_content = "Be a helpful assistant"
temperature = 1
top_p = 1
min_p = 0
top_k = 50
presence_penalty = 0
frequency_penalty = 0
repetition_penalty = 1
response_format = {"type": "text"}

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "system", "content": system_content},
        {"role": "user", "content": "Hi there!"},
    ],
    stream=stream,
    max_tokens=max_tokens,
    temperature=temperature,
    top_p=top_p,
    presence_penalty=presence_penalty,
    frequency_penalty=frequency_penalty,
    response_format=response_format,
    # Sampling parameters outside the standard OpenAI signature go in extra_body.
    extra_body={
        "top_k": top_k,
        "repetition_penalty": repetition_penalty,
        "min_p": min_p,
    },
)

if stream:
    # Print each streamed fragment as it arrives.
    for chunk in chat_completion_res:
        print(chunk.choices[0].delta.content or "", end="")
else:
    print(chat_completion_res.choices[0].message.content)
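When streaming, it is often handy to keep the full reply rather than only printing fragments. The sketch below shows one way to accumulate streamed deltas into a single string. `collect_stream` and `fake_chunk` are hypothetical helpers, and the fake chunks only mimic the `choices[0].delta.content` shape used in the example above so the snippet runs without a network call.

```python
from types import SimpleNamespace


def collect_stream(chunks) -> str:
    """Concatenate the text fragments of a streamed chat completion."""
    parts = []
    for chunk in chunks:
        # Some OpenAI-compatible servers send chunks with no choices
        # (for example, a trailing usage-only chunk); skip those.
        if not chunk.choices:
            continue
        piece = chunk.choices[0].delta.content
        if piece:  # content may be None on role-only or final chunks
            parts.append(piece)
    return "".join(parts)


def fake_chunk(text):
    """Build a stand-in object shaped like a streamed chunk (testing only)."""
    delta = SimpleNamespace(content=text)
    return SimpleNamespace(choices=[SimpleNamespace(delta=delta)])


reply = collect_stream([fake_chunk("Hel"), fake_chunk(None), fake_chunk("lo!")])
print(reply)  # prints Hello!
```

With a real client, the same function could be called on the object returned by `client.chat.completions.create(..., stream=True)`.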

Example with JavaScript Client

import OpenAI from "openai";

const openai = new OpenAI({
  baseURL: "https://api.novita.ai/v3/openai",
  apiKey: "Your API Key",
});

const stream = true; // or false

async function run() {
  const completion = await openai.chat.completions.create({
    messages: [
      {
        role: "system",
        content: "Be a helpful assistant",
      },
      {
        role: "user",
        content: "Hi there!",
      },
    ],
    model: "meta-llama/llama-3.2-3b-instruct",
    stream,
    response_format: { type: "text" },
    max_tokens: 16384,
    temperature: 1,
    top_p: 1,
    min_p: 0,
    top_k: 50,
    presence_penalty: 0,
    frequency_penalty: 0,
    repetition_penalty: 1
  });

  if (stream) {
    // Log each streamed fragment, then the finish reason on the last chunk.
    for await (const chunk of completion) {
      if (chunk.choices[0].finish_reason) {
        console.log(chunk.choices[0].finish_reason);
      } else {
        console.log(chunk.choices[0].delta.content);
      }
    }
  } else {
    console.log(JSON.stringify(completion));
  }
}

run();

Example with Curl Client

curl "https://api.novita.ai/v3/openai/chat/completions" \
  -H "Content-Type: application/json" \
  -H "Authorization: Bearer Your API Key" \
  -d @- << 'EOF'
{
  "model": "meta-llama/llama-3.2-3b-instruct",
  "messages": [
    {
      "role": "system",
      "content": "Be a helpful assistant"
    },
    {
      "role": "user",
      "content": "Hi there!"
    }
  ],
  "response_format": { "type": "text" },
  "max_tokens": 16384,
  "temperature": 1,
  "top_p": 1,
  "min_p": 0,
  "top_k": 50,
  "presence_penalty": 0,
  "frequency_penalty": 0,
  "repetition_penalty": 1
}
EOF

Step 3: Optimize and Scale

Maximize your implementation:

Monitor token usage and costs.

Refine your prompts for better efficiency.

Scale your application based on performance needs.

Utilize the extensive context length capabilities.
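One practical way to work within the context lengths is to cap `max_tokens` so that prompt and completion together stay inside the window. The sketch below uses the context lengths quoted earlier in this article and assumes the window covers prompt plus completion tokens. `clamp_max_tokens` is a hypothetical helper, the 1B model ID is assumed from the naming pattern, and the prompt token count must come from a tokenizer or from the `usage` field of an earlier response.

```python
# Context lengths as listed in this article, in tokens.
CONTEXT_WINDOW = {
    "meta-llama/llama-3.2-1b-instruct": 131_000,  # assumed model ID
    "meta-llama/llama-3.2-3b-instruct": 32_768,
    "meta-llama/llama-3.2-11b-vision-instruct": 131_000,
}


def clamp_max_tokens(model: str, prompt_tokens: int,
                     requested_max_tokens: int) -> int:
    """Return the largest max_tokens that still fits the context window."""
    remaining = CONTEXT_WINDOW[model] - prompt_tokens
    if remaining <= 0:
        raise ValueError(
            f"Prompt of {prompt_tokens} tokens does not fit the "
            f"{CONTEXT_WINDOW[model]}-token window of {model}"
        )
    return min(requested_max_tokens, remaining)


# A 30,000-token prompt leaves 2,768 tokens on the 3B model.
print(clamp_max_tokens("meta-llama/llama-3.2-3b-instruct", 30_000, 16_384))  # prints 2768
```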

Ready to Transform Your AI Development?

Visit Novita AI today to begin building with Llama 3.2. Our team is ready to support your journey from experimentation to production deployment, ensuring you get the most out of these powerful models.

Originally published at Novita AI

Novita AI is an all-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless deployment, and GPU instances: the cost-effective tools you need. Eliminate infrastructure overhead, start free, and make your AI vision a reality.
