The field of artificial intelligence (AI) is changing quickly. New natural language processing (NLP) models are coming out all the time. This makes it important for developers and researchers to ask a key question: which AI model is the best fit for their projects?

In this detailed comparison, we will look at two top options in the NLP space: Anthropic’s Claude 3.5 and Meta's Llama 3.2. By reviewing their features, we aim to help you make smart choices when using these advanced AI models in real-world settings. Developers looking to simplify the process of building AI tools can explore seamlessly integrated solutions provided by Novita AI.

Key Features and Pricing

Choosing the best AI model means balancing capabilities against your budget. Knowing what Llama 3.2 and Claude 3.5 can do will help you pick the right tool for your project.

Key Features of Llama 3.2

Llama 3.2 stands out because its vision models (11B and 90B) can combine visual and text information. They understand both images and language, so they can handle tasks such as writing captions for images and answering questions about pictures.

Llama 3.2 comes in different sizes to meet various needs. The smaller 1B and 3B text-only models are designed to run well on mobile phones and other devices with limited resources.

The larger 11B and 90B vision models, by contrast, handle more complex multimodal tasks, which makes them a better fit for demanding projects with more computing power available. This range of sizes and capabilities shows how versatile the Llama 3.2 family is.
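To make the size trade-off concrete, here is a rough back-of-the-envelope sketch of how much memory a model's weights alone occupy at different numeric precisions. It assumes nominal parameter counts (1B, 3B, 11B, 90B) and ignores the KV cache, activations, and runtime overhead, so real requirements are higher. The function name `weight_memory_gb` is illustrative, not part of any library.

```python
# Rough, weights-only memory estimate for a model of a given size.
# Assumptions: nominal parameter counts; KV cache, activations, and runtime
# overhead are ignored, so actual memory use will be higher.


def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    bytes_per_param = bits_per_param / 8
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for name, params in [("1B", 1e9), ("3B", 3e9), ("11B", 11e9), ("90B", 90e9)]:
        fp16 = weight_memory_gb(params, 16)  # 16-bit weights (e.g. bf16/fp16)
        int4 = weight_memory_gb(params, 4)   # 4-bit quantized weights
        print(f"{name}: ~{fp16:.1f} GB at 16-bit, ~{int4:.1f} GB at 4-bit")
```

Under these assumptions, 4-bit weights for the 1B and 3B models come to roughly 0.5 GB and 1.5 GB, which fits on a phone, while the 90B model needs about 180 GB at 16-bit and therefore server-class hardware.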

Key Features of Claude 3.5

| Feature | Claude 3.5 Sonnet | Llama 3.2 90B Vision Instruct |
| --- | --- | --- |
| Input Context Window | 200K tokens | 128K tokens |
| Maximum Output Tokens | 8,192 tokens | Not specified |
| Open Weights | No | Yes (Llama 3.2 Community License) |
| Release Date | June 20, 2024 (upgraded version: October 22, 2024) | September 25, 2024 |
| Knowledge Cut-off Date | April 2024 | December 2023 |

Anthropic’s Claude 3.5 is a powerful language model known for its excellent language understanding and generation.

One standout feature of Claude 3.5 is its large 200K-token context window, which lets the model take in and keep track of information from long texts. This leads to clearer and more relevant answers, especially in long back-and-forth conversations.

Whether used in chatbots that need to remember a lot or in tasks like summarizing complex documents, Claude 3.5 can maintain context over long conversations, which makes it a strong choice for natural language processing.
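Even a 200K-token window has a limit, so long-running chatbots usually trim old turns before each request. The following is a minimal sketch that drops the oldest messages until an estimated budget fits. It assumes a crude heuristic of about 4 characters per token (real counts come from the provider's tokenizer), and `trim_history` and `estimate_tokens` are hypothetical helper names, not part of any SDK.

```python
# Keep a chat history within a token budget by dropping the oldest turns.
# Assumption: ~4 characters per token, a crude heuristic; use the provider's
# tokenizer or token-counting endpoint for real budgets.

CHARS_PER_TOKEN = 4  # heuristic, not an exact tokenizer


def estimate_tokens(text: str) -> int:
    # Ceiling division so any non-empty string counts as at least one token.
    return -(-len(text) // CHARS_PER_TOKEN)


def trim_history(messages: list[dict], budget_tokens: int) -> list[dict]:
    """Return the most recent contiguous messages that fit the estimated budget.

    The newest message is always kept, even if it alone exceeds the budget.
    """
    kept: list[dict] = []
    total = 0
    for msg in reversed(messages):  # walk from newest to oldest
        cost = estimate_tokens(msg["content"])
        if kept and total + cost > budget_tokens:
            break
        kept.append(msg)
        total += cost
    kept.reverse()  # restore chronological order
    # Many chat APIs expect the history to start with a user turn.
    while len(kept) > 1 and kept[0]["role"] != "user":
        kept.pop(0)
    return kept


if __name__ == "__main__":
    history = [
        {"role": "user", "content": "Summarize chapter one."},
        {"role": "assistant", "content": "Chapter one introduces the main characters."},
        {"role": "user", "content": "Now compare it with chapter two."},
    ]
    print(trim_history(history, budget_tokens=190_000))
```

For Claude 3.5 you would pass a budget somewhat below 200,000 tokens to leave room for the reply. Summarizing the dropped turns instead of discarding them is a common refinement of this approach.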

Pricing Comparison

Pricing emerges as a pivotal factor in choosing between Llama 3.2 and Claude 3.5, as both models present distinct cost structures and deployment options.

| Model | Provider | Price per 1M Input Tokens | Price per 1M Output Tokens |
| --- | --- | --- | --- |
| Claude 3 Haiku | Anthropic | $0.250 | $1.250 |
| Claude 3 Sonnet | Anthropic | $3.000 | $15.000 |
| Claude 3 Opus | Anthropic | $15.000 | $75.000 |
| Claude 3.5 Sonnet | Anthropic | $3.000 | $15.000 |
| meta-llama/llama-3.2-3b-instruct | Novita AI | $0.030 | $0.050 |
| meta-llama/llama-3.2-11b-vision-instruct | Novita AI | $0.060 | $0.060 |
| meta-llama/llama-3.2-1b-instruct | Novita AI | $0.020 | $0.050 |
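Per-million-token prices are easier to compare once they are turned into the cost of a realistic workload. The sketch below copies a few rows from the pricing table (prices change over time, so check each provider's current rates) and assumes a hypothetical workload of 100,000 requests per month with 2,000 input and 500 output tokens each. The dictionary keys are illustrative labels, not official model IDs.

```python
# Prices in USD per 1M tokens, copied from the pricing table in this article.
# Dictionary keys are illustrative labels, not official model IDs.
PRICES_PER_MILLION = {
    "claude-3.5-sonnet": (3.00, 15.00),  # (input, output)
    "claude-3-haiku": (0.25, 1.25),
    "llama-3.2-11b-vision (Novita AI)": (0.06, 0.06),
    "llama-3.2-3b (Novita AI)": (0.03, 0.05),
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of one request with the given token counts."""
    input_price, output_price = PRICES_PER_MILLION[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


if __name__ == "__main__":
    # Hypothetical workload: 100,000 requests/month, 2,000 input + 500 output tokens each.
    requests_per_month = 100_000
    for model in PRICES_PER_MILLION:
        monthly = request_cost(model, 2_000, 500) * requests_per_month
        print(f"{model}: ${monthly:,.2f} per month")
```

With this hypothetical workload, Claude 3.5 Sonnet works out to about $1,350 per month versus about $15 for Llama 3.2 11B Vision on Novita AI, so the price gap matters most at high volume.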

Comparing Benchmarks and Performance

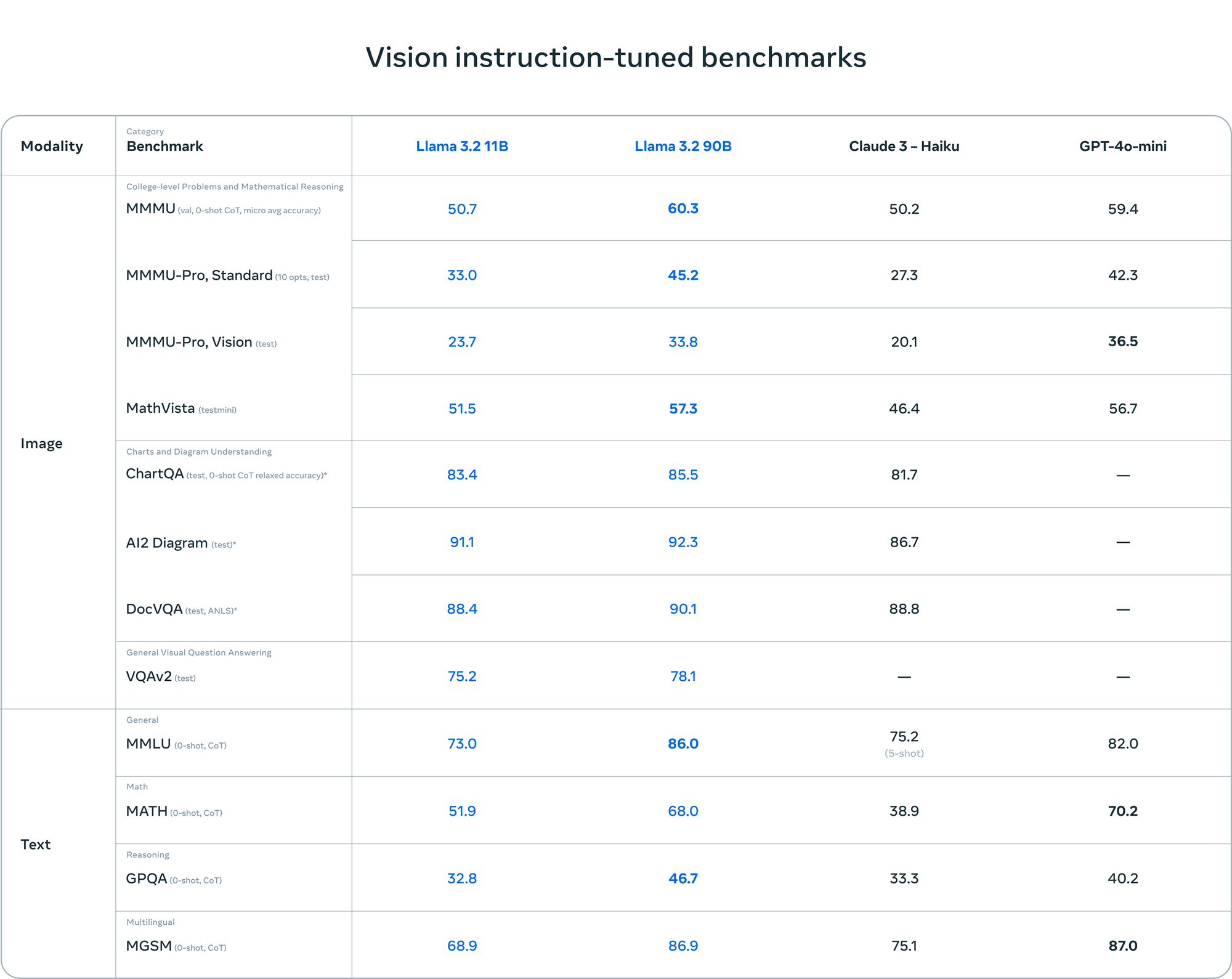

To evaluate Llama 3.2 and Claude 3.5 fairly, we need to look beyond their feature lists to standardized benchmarks.

Benchmarks

Source: Meta

Llama 3.2 is especially well suited to research, experimentation, and smaller deployments: its weights are openly available, so most uses carry no licensing fees, although you still pay for the hardware or hosted API you run it on. Claude 3.5, by contrast, targets commercial applications and large deployments through a token-based pricing model, which scales easily but can become expensive as usage grows.
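One way to frame this trade-off is a break-even calculation: at what monthly token volume does per-token API spend equal a fixed self-hosting cost? The sketch below assumes a hypothetical $1,500/month server cost and a workload where 20% of tokens are output tokens, and uses the Claude 3.5 Sonnet prices from the pricing table. It ignores engineering time, the server's throughput limits, and quality differences between the models, so treat it as a rough framing, not a recommendation.

```python
# Break-even between per-token API pricing and a fixed monthly hosting cost.
# All workload numbers below are hypothetical and for illustration only.


def blended_price(input_price: float, output_price: float, output_share: float) -> float:
    """Average USD price per 1M tokens when `output_share` of tokens are output tokens."""
    if not 0.0 <= output_share <= 1.0:
        raise ValueError("output_share must be between 0 and 1")
    return (1.0 - output_share) * input_price + output_share * output_price


def break_even_million_tokens(monthly_fixed_cost: float, price_per_million: float) -> float:
    """Monthly volume (millions of tokens) at which API spend equals the fixed cost."""
    return monthly_fixed_cost / price_per_million


if __name__ == "__main__":
    HYPOTHETICAL_SERVER_COST = 1_500.0  # USD per month, illustrative only
    # Claude 3.5 Sonnet: $3 input / $15 output per 1M tokens; assume 20% output tokens.
    price = blended_price(3.00, 15.00, output_share=0.2)
    volume = break_even_million_tokens(HYPOTHETICAL_SERVER_COST, price)
    print(f"Blended price: ${price:.2f}/1M tokens; break-even at ~{volume:,.0f}M tokens/month")
```

Under these assumptions the blended price is $5.40 per million tokens and the break-even point is roughly 278 million tokens per month; below that volume the API would cost less than the hypothetical server.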

When comparing Llama 3.2 and Claude 3.5 on benchmarks and performance metrics, it is essential to look at standardized tests that assess speed, accuracy, and efficiency on NLP tasks. Llama 3.2, especially its larger vision models, usually does very well on benchmarks for image understanding and multimodal reasoning, which reflects its strength in vision tasks. Claude 3.5, on the other hand, often performs better on benchmarks that measure language understanding, text generation, and reasoning.

It’s important to remember that benchmark results should guide our choices, not decide for us. Choosing between Llama 3.2 and Claude 3.5 depends on matching specific project needs with the strengths shown in these benchmarks.

Performance Metrics: Speed, Accuracy, and Efficiency

Beyond benchmarks, practical performance comes down to speed, accuracy, and efficiency. Llama 3.2's smaller 1B and 3B models, built for mobile and edge devices, usually excel in efficiency: they offer faster inference and use fewer computing resources, which makes them well suited to real-time tasks on phones.

Claude 3.5, on the other hand, is available only through hosted APIs, so you cannot run it on-device and its speed depends on API latency rather than your own hardware, but it may deliver better accuracy in text generation and language understanding. Whether to prioritize speed or accuracy depends on what you are trying to do.

In the end, choosing between Llama 3.2 and Claude 3.5 involves finding a balance between how accurate you want to be and what your computing setup can handle, along with the speed you need for your project.
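Speed is easiest to judge by measuring it on your own prompts. The sketch below times any stream of text chunks and reports time to first output and characters per second, a rough proxy for tokens per second, since counting tokens would require the model's tokenizer. The `measure_stream` and `simulated_stream` helpers are hypothetical; with a streaming request like the Novita AI example later in this article, you could pass `(c.choices[0].delta.content or "" for c in chat_completion_res if c.choices)` instead of the simulated stream.

```python
import time
from typing import Callable, Iterable, Iterator, Optional


def measure_stream(chunks: Iterable[str],
                   clock: Callable[[], float] = time.perf_counter) -> dict:
    """Time a stream of text chunks: first non-empty chunk, total time, throughput."""
    start = clock()
    first: Optional[float] = None
    n_chars = 0
    for piece in chunks:
        if first is None and piece:
            first = clock() - start  # time to first visible output
        n_chars += len(piece)
    total = clock() - start
    return {
        "time_to_first_output_s": first,
        "total_s": total,
        "chars_per_s": n_chars / total if total > 0 else 0.0,
    }


def simulated_stream(pieces: list[str], delay_s: float = 0.05) -> Iterator[str]:
    """Stand-in for a real model stream: yields pieces after an artificial delay."""
    for piece in pieces:
        time.sleep(delay_s)
        yield piece


if __name__ == "__main__":
    stats = measure_stream(simulated_stream(["Hello", ", ", "world", "!"]))
    print(stats)
```

Running the same measurement against each candidate model, with the same prompts and network location, gives a more useful speed comparison than published figures.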

Model Architectures and Technology Stack

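Looking at the model designs and technology behind each option helps us see what they can and cannot do. Llama 3.2 builds on Meta AI's research and benefits from a strong ecosystem maintained by the company, including the Llama Stack for building and deploying Llama-based applications and Llama Guard, a safeguard model that classifies prompts and responses to improve safety. Architecturally, the 11B and 90B vision models connect an image encoder to a Llama language model through a set of adapter (cross-attention) layers, while the 1B and 3B models are compact text-only models.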

Anthropic focuses on building safe, trustworthy AI systems, and Claude 3.5 reflects that focus. Anthropic has not published detailed architecture information for the model, but its emphasis on safety and alignment with human values means Claude 3.5 may be better suited to sensitive tasks or those that require careful ethical handling.

Practical Applications in Development Projects

The choice between Llama 3.2 and Claude 3.5 goes beyond just theory. It focuses on how they perform in real-life situations. These advanced NLP models work well in different areas.

Whether you are building chatbots, analyzing large datasets, generating content, or interpreting images, picking the right model is key to success. Below, we look at common uses for each model, where each one does best, and what to consider when deciding.

Use Cases for Llama 3.2: From Text to Multimodal Solutions


Llama 3.2 offers many exciting features for different industries. Its ability to mix text and visual reasoning makes it a top choice for:

Image Captioning: Automatically create descriptions for images.

Visual Question Answering: Answer questions about what is shown in images.

Document Analysis and Understanding: Pull and organize information from documents with both text and images.
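For visual question answering, a request pairs a question with an image. The sketch below assumes that Novita AI's OpenAI-compatible endpoint accepts OpenAI-style `image_url` content parts for `meta-llama/llama-3.2-11b-vision-instruct` (confirm this in the provider's documentation) and that your key is stored in a `NOVITA_API_KEY` environment variable. The helper name and the example image URL are placeholders.

```python
import os

MODEL = "meta-llama/llama-3.2-11b-vision-instruct"


def build_vqa_messages(question: str, image_url: str) -> list[dict]:
    """Build an OpenAI-style chat message that pairs a question with an image URL."""
    return [
        {
            "role": "user",
            "content": [
                {"type": "text", "text": question},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }
    ]


if __name__ == "__main__":
    api_key = os.environ.get("NOVITA_API_KEY")  # assumption: key stored in this variable
    if api_key is None:
        print("Set NOVITA_API_KEY to send the request.")
    else:
        from openai import OpenAI

        client = OpenAI(base_url="https://api.novita.ai/v3/openai", api_key=api_key)
        response = client.chat.completions.create(
            model=MODEL,
            messages=build_vqa_messages(
                "What is shown in this image?",
                "https://example.com/photo.jpg",  # placeholder: replace with a real image URL
            ),
            max_tokens=256,
        )
        print(response.choices[0].message.content)
```

The same message shape works for image captioning (ask for a caption) and document analysis (send a page image and ask for specific fields).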

Llama 3.2 is flexible enough to work on big servers as well as lighter mobile apps. This range makes it a strong tool for developers who want to build advanced multimodal products.

Use Cases for Claude 3.5: Enhancing Language Understanding

Claude 3.5's main strength is its deep understanding of language, which makes it a strong fit for applications that need careful text handling:

Advanced Chatbots and Conversational AI: It can create chatbots that talk and engage with people in detailed, meaningful ways.

Text Summarization and Information Extraction: It can turn long documents into short summaries while picking out important information accurately.

Code Generation and Assistance: It helps developers write and fix code by giving smart suggestions and handling repetitive tasks.
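As an illustration of the summarization use case, here is a minimal sketch using Anthropic's official Python SDK (`pip install anthropic`). The prompt wording, the `build_summary_request` helper, and the word limit are illustrative assumptions; the model ID `claude-3-5-sonnet-20241022` corresponds to the October 2024 release of Claude 3.5 Sonnet. The API call runs only when `ANTHROPIC_API_KEY` is set.

```python
import os

MODEL = "claude-3-5-sonnet-20241022"


def build_summary_request(document: str, max_words: int = 150) -> dict:
    """Assemble keyword arguments for Anthropic's Messages API."""
    prompt = (
        f"Summarize the following document in at most {max_words} words, "
        "then list its key facts as short bullet points.\n\n"
        f"<document>\n{document}\n</document>"
    )
    return {
        "model": MODEL,
        "max_tokens": 1024,
        "system": "You summarize documents accurately and concisely.",
        "messages": [{"role": "user", "content": prompt}],
    }


if __name__ == "__main__":
    if os.environ.get("ANTHROPIC_API_KEY"):
        import anthropic

        client = anthropic.Anthropic()  # reads ANTHROPIC_API_KEY from the environment
        message = client.messages.create(
            **build_summary_request("Replace this string with the document to summarize.")
        )
        print(message.content[0].text)
    else:
        print("Set ANTHROPIC_API_KEY to send the request.")
```

Wrapping the document in explicit tags keeps the instructions and the source text clearly separated, which helps when the document itself contains instructions or questions.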

Choosing between Llama 3.2 and Claude 3.5 can be tricky. Each has its own strengths and limits. Your final choice should match your specific needs with the distinct approaches these models provide.

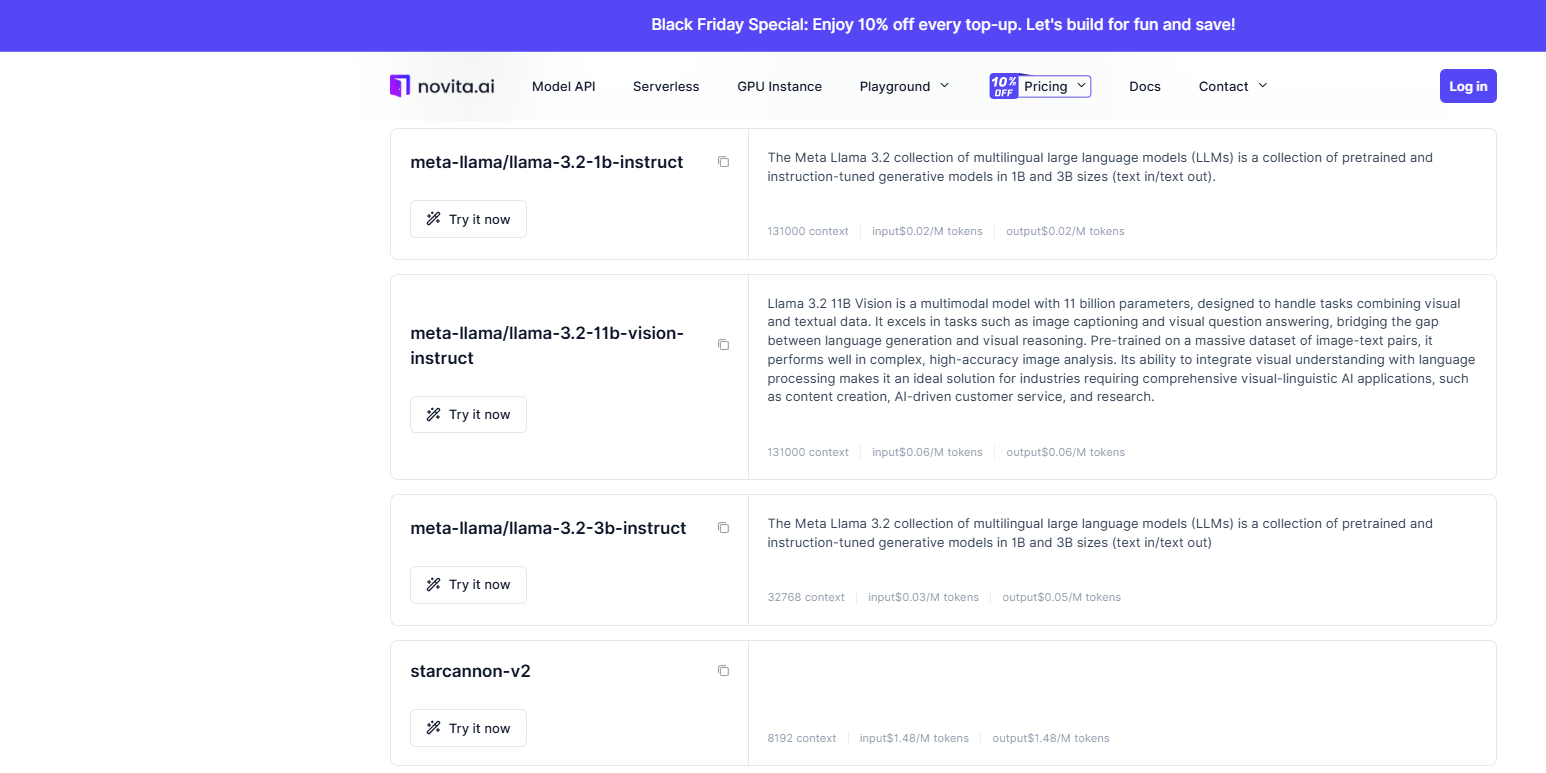

How to run Llama 3.2 with Novita AI

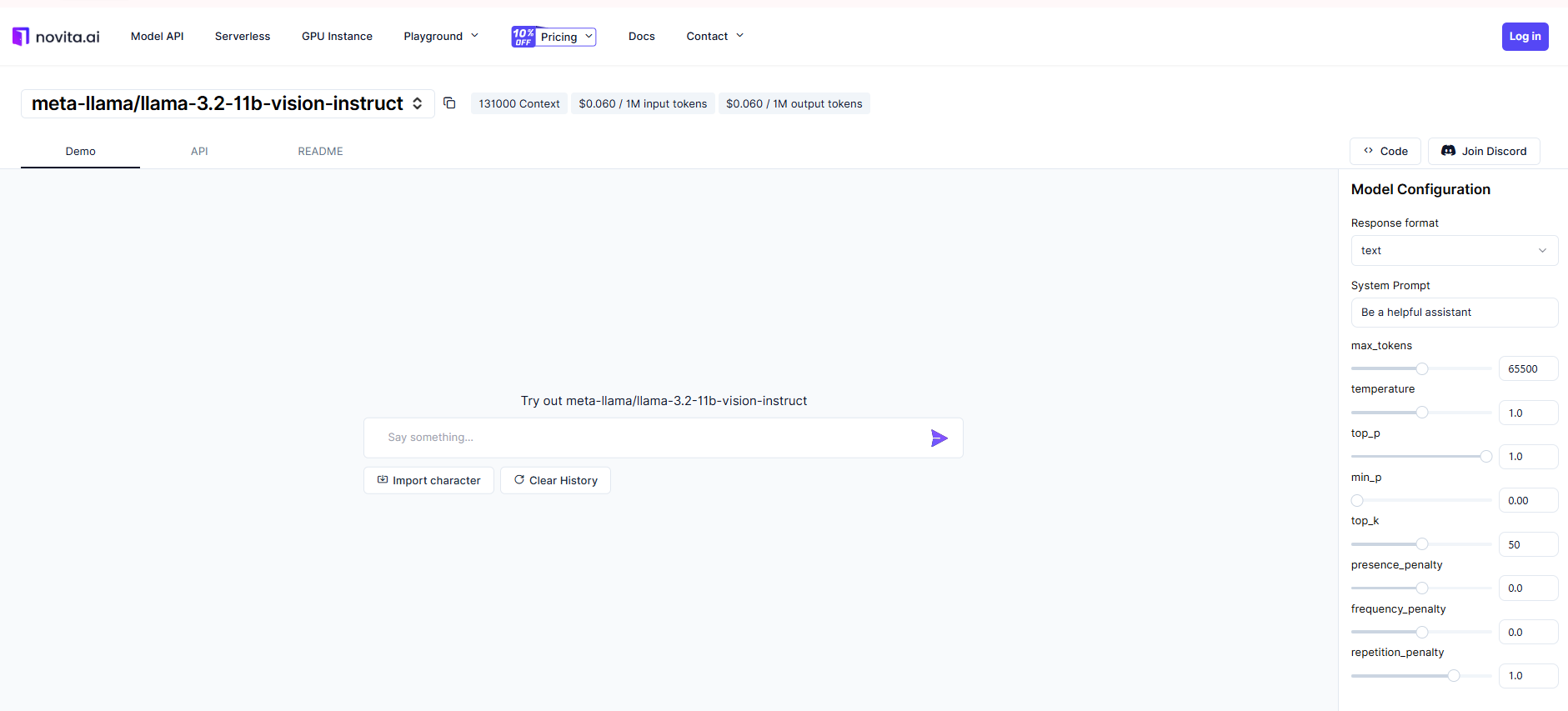

Developers can get started quickly with the LLM API Quick Start Guide and try the model at no cost in the Llama 3.2 11B Vision Instruct Demo. Whether you are building an AI-powered customer service chatbot, a language translation tool, or a resume editing tool, Novita AI’s API makes integration simple, so you can focus on your product and use Llama 3.2's features without managing the underlying infrastructure.

Before you officially integrate the Llama 3.2 API, you can give it a try online with Novita AI. Here’s how to get started with Novita AI’s Llama online:

Step 1: Select the Llama model you want to use and review its capabilities.

Step 2: Enter your prompt in the input field. This is the text or question you want the model to respond to.

Step 3: Read the model's response to the conversation.

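You can also call the same model from code through Novita AI’s OpenAI-compatible API, for example with the official openai Python package:

```python
import os

from openai import OpenAI

# Novita AI exposes an OpenAI-compatible endpoint, so the official openai client works.
client = OpenAI(
    base_url="https://api.novita.ai/v3/openai",
    # Assumption: the key is stored in the NOVITA_API_KEY environment variable.
    api_key=os.environ.get("NOVITA_API_KEY", "Your API Key"),
)

model = "meta-llama/llama-3.2-11b-vision-instruct"
stream = True  # or False
max_tokens = 65500  # upper bound on generated tokens; the provider may enforce a lower limit
system_content = """Be a helpful assistant"""

# Sampling parameters that are part of the OpenAI request schema.
temperature = 1
top_p = 1
presence_penalty = 0
frequency_penalty = 0
response_format = {"type": "text"}

# Extra sampling parameters outside the OpenAI schema, sent via extra_body.
min_p = 0
top_k = 50
repetition_penalty = 1

chat_completion_res = client.chat.completions.create(
    model=model,
    messages=[
        {"role": "system", "content": system_content},
        {"role": "user", "content": "Hi there!"},
    ],
    stream=stream,
    max_tokens=max_tokens,
    temperature=temperature,
    top_p=top_p,
    presence_penalty=presence_penalty,
    frequency_penalty=frequency_penalty,
    response_format=response_format,
    extra_body={
        "top_k": top_k,
        "repetition_penalty": repetition_penalty,
        "min_p": min_p,
    },
)

if stream:
    # Each chunk carries an incremental delta; skip chunks that have no choices.
    for chunk in chat_completion_res:
        if chunk.choices:
            print(chunk.choices[0].delta.content or "", end="")
    print()
else:
    print(chat_completion_res.choices[0].message.content)
```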

Conclusion

Choosing between Llama 3.2 and Claude 3.5 comes down to your project needs and budget. Llama 3.2 adds image understanding to text in its vision models and offers open weights and low per-token prices, while Claude 3.5 focuses on strong language understanding and a large 200K-token context window. Review the benchmarks and performance of each model against your own workload. If your project needs to process images as well as text, Llama 3.2 may be the best choice; if it centers on language tasks such as long-document analysis, conversational agents, or code assistance, Claude 3.5 could be a better fit. Weigh these factors carefully before deciding to get the best results from your AI projects.

Frequently Asked Questions

What is the difference between Claude 3.5 and Llama 3.2?

Claude 3.5 and Llama 3.2 are both strong language models, but their strengths differ. Claude 3.5 excels at text-based tasks and shows stronger language understanding in benchmarks. Llama 3.2, especially its 11B and 90B vision models, offers multimodal capabilities and can handle both text and image input.

Is Llama better than Claude?

It really depends on what you need. If you are looking for help with language processing and understanding, Claude 3.5 might be the way to go. On the other hand, if you need multimodal capabilities, then Llama 3.2 with its vision models would work best for you.

In what scenarios would it be more beneficial to use Llama 3.2 over Claude 3.5, and vice versa?

The choice between Llama 3.2 and Claude 3.5 depends on your specific needs. If your project involves handling images as well as text, go with Llama 3.2 because of its strong multimodal features. If you need deeper understanding of complex language or careful handling of sensitive content, Claude 3.5 is the better option.

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.
