Table of contents

- Key Highlights

- What Problems Can Function Calling Solve?

- What is Function Calling?

- Supported Models for Function Calling

- Frequently Asked Questions

Key Highlights

What it does: Execute real-time data retrieval, system operations, and automated workflows.

Which models support it: Llama 3 series, GPT series, Gemma 2, and Mistral NeMo.

How to implement: Access the models through the Novita AI “Model Library” API, then implement function calling with the LangChain framework.

Function calling is a technique that significantly enhances the capabilities of large language models (LLMs) by enabling them to interact with the external world. Instead of merely generating text, LLMs can utilize function calling to execute specific tasks, access real-time information, and perform complex operations. This article will explore the concept of function calling, its practical applications, and how models like Llama 3.3 70B are making it more accessible.

What Problems Can Function Calling Solve?

Real-Time Information Access

Check latest stock prices

Get current weather data

Access breaking news

System Interactions

Send emails

Post on social media

Query and write to databases

Workflow Automation

Data scraping and processing

Multi-step task execution

Complex analysis automation

Data Accuracy

Ensure information timeliness

Provide precise query results

Reduce outdated data errors

What is Function Calling?

At its core, function calling is the ability of an LLM to recognize when a specific task requires an external function or tool and then output structured data (typically in JSON format) to execute the function. This structured data includes the function’s name and any necessary arguments. Essentially, function calling acts as a bridge between the AI’s vast knowledge and tangible actions. It empowers AI agents or chatbots to perform specific tasks or access external data and services.
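To make the structured output concrete, here is a minimal sketch of what such a payload might look like and how an application could parse it. The `get_weather` function name, its `city` argument, and the `parse_function_call` helper are hypothetical and chosen only for illustration; real providers may wrap the call in additional fields.

```python
import json

# Hypothetical raw text returned by a model that decided a tool is needed.
model_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'


def parse_function_call(raw: str) -> tuple[str, dict]:
    """Parse a JSON function call into (function name, arguments)."""
    call = json.loads(raw)
    if not isinstance(call, dict) or "name" not in call:
        raise ValueError("model output is not a function call")
    # Arguments are optional; default to an empty dict.
    return call["name"], call.get("arguments", {})


name, arguments = parse_function_call(model_output)
print(name, arguments)
```

The application, not the model, decides what to do with `name` and `arguments` once they are parsed.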

Supported Models for Function Calling

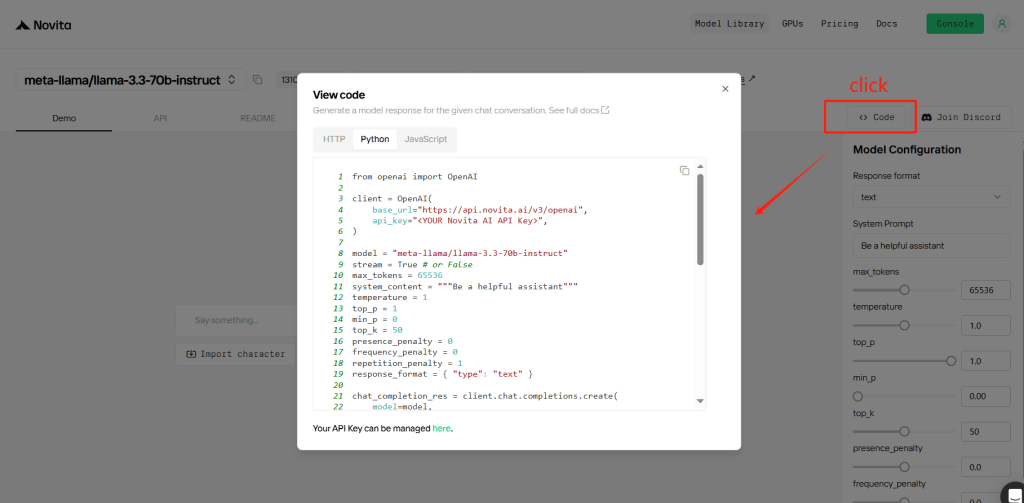

Many LLMs and platforms now support function calling. You can access these models' APIs through the “Model Library” page of Novita AI and implement function calling with LangChain.

Llama 3.3: The 70 billion parameter version has shown strong performance in function calling tests by successfully identifying when and which functions to call based on user requests.

Mistral: Models like Mistral-Large-2 demonstrate success in function calling within environments like watsonx.ai.

Gemini: Google’s Gemini models also support function calling with various usage examples available.

How Does Function Calling Work?

Function Declaration: The process begins with defining reusable blocks of code known as functions, along with descriptions of their capabilities, inputs, and outputs.

Prompt Submission: The user submits a prompt to the LLM along with a set of function declarations. This informs the model about the available tools.

Model Analysis: The LLM analyzes the prompt and determines whether it needs to invoke any of the provided functions to fulfill the request.

Structured Output: If a function call is needed, the LLM generates structured output in JSON format that includes the name of the function and values for its parameters.

Function Invocation: The application or system uses this structured output to call the specified function while passing along the parameters.

Function Execution: The external service or API executes the function using the provided parameters.

Output Response: The external service sends a confirmation or result back to the AI.

Model Response: The LLM then uses this output to generate a natural language response for the user or for further processing.

- Note that the model does not directly call the function; rather, its structured output is used by an external program to do so.
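The steps above can be sketched end to end without any network access. In this illustrative example, `fake_model` is a stand-in for a real LLM, and `get_stock_price`, its hard-coded data, and the shape of `DECLARATIONS` are all assumptions made for the demo rather than any provider's actual schema.

```python
import json


# Function declaration: a plain Python function plus a description for the model.
def get_stock_price(symbol: str) -> float:
    """Return a price for the ticker (hard-coded placeholder data)."""
    return {"ACME": 123.45}.get(symbol, 0.0)


FUNCTIONS = {"get_stock_price": get_stock_price}
DECLARATIONS = [{
    "name": "get_stock_price",
    "description": "Look up the latest price for a stock ticker.",
    "parameters": {"symbol": "string"},
}]


def fake_model(prompt: str, declarations: list) -> str:
    """Stand-in for an LLM: always emits a JSON call for ACME."""
    return json.dumps({"name": "get_stock_price", "arguments": {"symbol": "ACME"}})


def run(prompt: str) -> str:
    raw = fake_model(prompt, DECLARATIONS)   # prompt submission, analysis, structured output
    call = json.loads(raw)
    func = FUNCTIONS[call["name"]]           # the application looks up the function
    result = func(**call["arguments"])       # the application executes it
    # A real system would send `result` back to the LLM for the final answer;
    # a fixed template stands in for that step here.
    return f"{call['arguments']['symbol']} is trading at {result}"


answer = run("What is ACME trading at?")
print(answer)
```

Note that `fake_model` only produces text; the lookup and execution happen in `run`, which mirrors the point that the model itself never calls the function.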

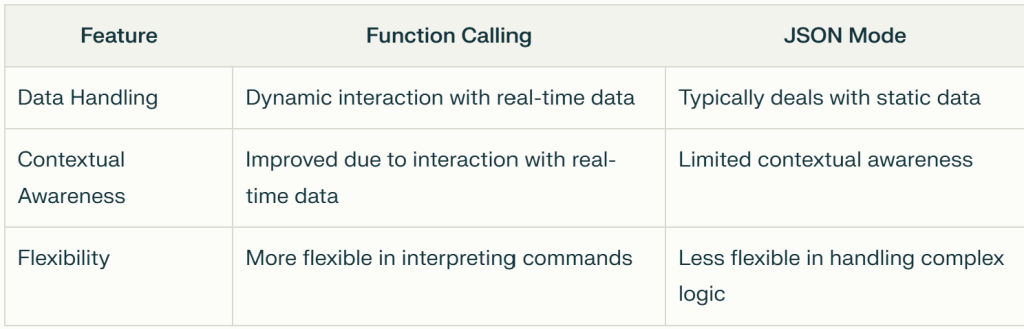

Function Calling vs. JSON Mode

Benefits of Function Calling

Increased Efficiency: Delegating a task to a dedicated function is often faster and more reliable than having the model work out the answer in free text, which matters for applications requiring immediate action.

Enhanced Flexibility: Developers can easily update or modify functions without overhauling entire applications, enabling quicker adjustments to new requirements.

Scalability: Facilitates scalability by allowing new functions to be added without extensive changes to existing infrastructure.

Personalized Interactions: Allows for personalized user experiences; for example, accessing a user’s calendar to suggest meeting times that do not conflict with existing appointments.

Bridging AI and Real-World Actions: Enables AI to perform practical tasks like sending emails or text messages on behalf of users.

Complex Conversational Agents: Can create sophisticated chatbots that answer complex questions using external APIs and knowledge bases for relevant responses.

Practical Applications of Function Calling

Conversational Agents: Used in advanced chatbots that utilize external APIs for up-to-date information.

Natural Language Understanding: Extracts structured data from text for tasks like entity recognition and sentiment analysis.

API Integration: Enables LLMs to integrate with external APIs for fetching data or performing actions based on user input.

Financial Assistance: Builds AI financial advisors that access real-time financial data and provide personalized advice.

Support Ticket Automation: Automates support ticket assignments by processing tickets using context-aware rules.

Knowledge Retrieval: Helps retrieve information from knowledge bases by creating functions that summarize academic articles for answering questions and providing citations.

Multimodal Applications: Triggers functions based on images, videos, audio, and PDFs.

How to Use Llama 3.3 70B Function Calling via Novita AI

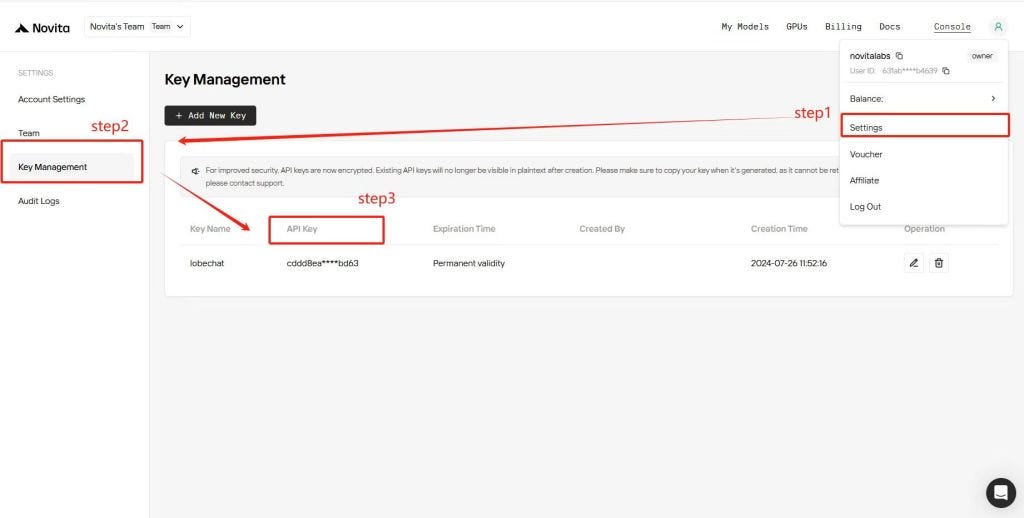

Step 1: Get an API Key and Install the API

On the “Key Management” page, copy your API key as indicated in the image.

Then open the “Model Library” page of Novita AI and install the Novita AI API using the package manager for your programming language.

Upon registration, Novita AI provides a $0.5 credit to get you started!

Once the free credit is used up, you can pay to continue using the service.

Step 2: Use LangChain to Implement Function Calling

We’ll create a simple math application that can perform addition and multiplication operations.

💡 While this guide uses LangChain for convenience, implementing function calling doesn’t require any specific framework. The key is in designing the right prompts to make the model understand and correctly invoke functions. LangChain is used here simply to streamline the implementation.
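As a rough illustration of the framework-free approach, the sketch below builds a tool-describing system prompt using only the standard library. The `describe` and `build_system_prompt` helpers are hypothetical names, and the prompt wording is an assumption; the `multiply` and `add` functions mirror the ones used later in this guide.

```python
import inspect


def multiply(x: float, y: float) -> float:
    """Multiply two numbers together."""
    return x * y


def add(x: int, y: int) -> int:
    """Add two numbers."""
    return x + y


def describe(func) -> str:
    """Render one 'name(signature) - docstring' line for the system prompt."""
    return f"{func.__name__}{inspect.signature(func)} - {inspect.getdoc(func)}"


def build_system_prompt(funcs) -> str:
    """Combine the tool descriptions with an instruction to answer in JSON."""
    tool_lines = "\n".join(describe(f) for f in funcs)
    return (
        "You have access to the following tools:\n"
        f"{tool_lines}\n"
        "Reply with a JSON object containing 'name' and 'arguments' keys."
    )


system_prompt = build_system_prompt([multiply, add])
print(system_prompt)
```

The resulting string could be sent as the system message to any chat model; the application would then parse the JSON reply and call the matching function itself.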

Prerequisites

First, install the required packages:

    pip install langchain-openai python-dotenv

Setting Up the Environment

Create a .env file in your project root and add your Novita AI API key:

    NOVITA_API_KEY=your_api_key_here
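A missing or empty key tends to surface later as a confusing authentication error, so it can help to check for it up front. The `get_api_key` helper below is a hypothetical convenience, not part of Novita AI's or LangChain's API; it assumes the variable has already been loaded into the environment.

```python
import os


def get_api_key(var_name: str = "NOVITA_API_KEY") -> str:
    """Return the API key from the environment or fail with a clear message."""
    key = os.getenv(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"{var_name} is not set; add it to .env and load it "
            "(for example with python-dotenv's load_dotenv()) before creating the model."
        )
    return key
```

In a script, this would typically be called once after `load_dotenv()` and the result passed to the model constructor.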

Implementation Steps

1. Define the Tools

First, let’s create two simple mathematical tools using LangChain’s @tool decorator:

    from langchain_core.tools import tool


    @tool
    def multiply(x: float, y: float) -> float:
        """Multiply two numbers together."""
        return x * y


    @tool
    def add(x: int, y: int) -> int:
        """Add two numbers."""
        return x + y


    tools = [multiply, add]

2. Create the Tool Execution Function

Next, implement a function to execute the tools:

    from typing import Any, Dict, Optional, TypedDict

    from langchain_core.runnables import RunnableConfig


    class ToolCallRequest(TypedDict):
        name: str
        arguments: Dict[str, Any]


    def invoke_tool(
        tool_call_request: ToolCallRequest,
        config: Optional[RunnableConfig] = None,
    ):
        """Execute the specified tool with given arguments."""
        tool_name_to_tool = {tool.name: tool for tool in tools}
        name = tool_call_request["name"]
        requested_tool = tool_name_to_tool[name]
        return requested_tool.invoke(tool_call_request["arguments"], config=config)
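Because the request comes from a model, it may name a tool that does not exist or supply the wrong argument names, in which case `invoke_tool` would fail with a bare `KeyError` or a validation error. One possible defensive variant is sketched below. It uses plain Python functions instead of LangChain tools so that it runs standalone, and `safe_invoke` and `REGISTRY` are hypothetical names introduced for this example.

```python
import inspect
from typing import Any, Callable, Dict


def add(x: int, y: int) -> int:
    """Add two numbers."""
    return x + y


REGISTRY: Dict[str, Callable[..., Any]] = {"add": add}


def safe_invoke(request: Dict[str, Any]) -> Any:
    """Validate a {'name', 'arguments'} request, then call the matching function."""
    name = request.get("name")
    if name not in REGISTRY:
        raise ValueError(f"unknown tool: {name!r}")
    func = REGISTRY[name]
    arguments = request.get("arguments") or {}
    try:
        # bind() raises TypeError on missing or unexpected argument names.
        inspect.signature(func).bind(**arguments)
    except TypeError as exc:
        raise ValueError(f"bad arguments for {name}: {exc}") from exc
    return func(**arguments)


print(safe_invoke({"name": "add", "arguments": {"x": 3, "y": 1132}}))
```

Raising a single, descriptive `ValueError` makes it easier to report the problem back to the model or the user; this sketch checks argument names only, not their types.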

3. Set Up the LangChain Pipeline

Create a chain that uses Novita AI’s LLM to select and prepare tool calls:

    import os

    from dotenv import load_dotenv
    from langchain_core.output_parsers import JsonOutputParser
    from langchain_core.prompts import ChatPromptTemplate
    from langchain_core.tools import render_text_description
    from langchain_openai import ChatOpenAI

    # Load NOVITA_API_KEY from the .env file created earlier.
    load_dotenv()


    def create_chain():
        """Create a chain that uses the specified LLM model to select and prepare tool calls."""
        model = ChatOpenAI(
            model="meta-llama/llama-3.3-70b-instruct",
            api_key=os.getenv("NOVITA_API_KEY"),
            base_url="https://api.novita.ai/v3/openai",
        )

        # Describe the tools in plain text so they can be embedded in the prompt.
        rendered_tools = render_text_description(tools)

        system_prompt = f"""\
    You are an assistant that has access to the following set of tools.
    Here are the names and descriptions for each tool:

    {rendered_tools}

    Given the user input, return the name and input of the tool to use.
    Return your response as a JSON blob with 'name' and 'arguments' keys.

    The `arguments` should be a dictionary, with keys corresponding
    to the argument names and the values corresponding to the requested values.
    """

        prompt = ChatPromptTemplate.from_messages(
            [("system", system_prompt), ("user", "{input}")]
        )

        # prompt -> model -> parse the JSON reply into a dict
        return prompt | model | JsonOutputParser()

4. Create the Main Processing Function

Implement the main function that processes mathematical queries:

    def process_math_query(query: str):
        """Process a mathematical query by using an LLM to select the appropriate tool and execute it."""
        chain = create_chain()
        # The parsed reply is a dict with 'name' and 'arguments' keys.
        message = chain.invoke({"input": query})
        result = invoke_tool(message, config=None)
        return message, result

5. Usage Example

Here’s how to use the implementation:

    if __name__ == "__main__":
        # process_math_query takes only the query; the model name is set in create_chain().
        message, result = process_math_query("what's 3 plus 1132")
        print(result)  # Expected: 1135, provided the model selects `add` with x=3, y=1132

In conclusion, function calling is rapidly transforming how AI systems interact with their environment, enabling more practical, efficient, and user-friendly applications. Models like Llama 3.3 70B are paving the way for easier access to this powerful technology, opening up numerous possibilities for AI development.

Frequently Asked Questions

What is function calling in the context of LLMs?

Function calling is a technique that allows large language models to recognize when a specific task requires an external function or tool and generate structured data to execute that function.

What are the main benefits of using function calling?

Key benefits include increased efficiency in processing tasks, enhanced flexibility for developers to update functions easily, scalability for adding new functionalities without extensive changes, and personalized user interactions.

What languages does Llama 3.3 support?

Llama 3.3 supports English, French, German, Hindi, Italian, Portuguese, Spanish, and Thai.

Novita AI is an AI cloud platform that offers developers an easy way to deploy AI models using our simple API, while also providing an affordable and reliable GPU cloud for building and scaling.