The RTX 4090’s compute performance makes it a strong choice for computing and ML/AI applications, even though its double-precision (FP64) throughput is modest. Rent GPU Instances for Greater Cost-Effectiveness.

Key Highlights

Exceptional Performance: The GeForce RTX 4090 offers outstanding single-precision (FP32) and Tensor Core performance, ideal for demanding AI, ML, and research tasks, though its double-precision (FP64) rate is only 1/64 of its FP32 rate.

High-Performance Computing: With its advanced Tensor Cores and Ada Lovelace architecture, it handles complex simulations and large datasets efficiently.

Cost Justification: Though it’s pricey, the RTX 4090’s superior performance can justify the investment for those with significant computing needs.

Cloud Rental Option: Renting GPUs via cloud platforms provides a flexible, cost-effective way to access advanced technology without high upfront costs.

Introduction

The new GeForce RTX 4090 from NVIDIA is getting a lot of attention, and it’s easy to see why. It’s well-known for its gaming power, but now it’s catching the eye of professionals across various fields.

This GPU stands out for its remarkable single-precision and Tensor Core performance. It’s great at handling the heavy calculations needed for AI and much scientific research. For businesses looking for a cost-effective solution, renting a GPU instance could be the way to go.

Understanding Double Precision Performance in GPUs

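Double-precision (FP64) performance measures how quickly a GPU can compute with 64-bit floating-point numbers, which carry about 16 significant decimal digits, compared with about 7 for 32-bit single precision (FP32). NVIDIA GPUs like the RTX 4090 use the Ada Lovelace architecture and fourth-generation Tensor Cores, but these are tuned mainly for FP32 and lower-precision formats.

Like other GeForce cards, the RTX 4090 runs FP64 arithmetic at only 1/64 of its FP32 rate; data-center GPUs such as the NVIDIA A100 are built for much higher FP64 throughput. This distinction matters: scientific simulations often need FP64 because rounding errors accumulate over many steps, while deep learning usually trains well in FP32 or lower precision.

By matching each workload to the precision it actually needs, and by making good use of the GPU’s large 72 MB L2 cache, users can speed up their work and get faster results in high-performance computing. Understanding this trade-off is essential to making the most of these powerful GPUs.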
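To make the accuracy difference concrete, here is a minimal Python sketch that adds 0.1 one million times. It is an illustration, not a GPU benchmark. The sum is computed once in native FP64 and once emulating FP32, with the running total rounded to 32 bits after every addition using the standard `struct` module. The helper names `to_f32` and `running_sum` are hypothetical.

```python
import struct

def to_f32(x: float) -> float:
    """Round a Python float (IEEE 754 binary64) to the nearest binary32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]

def running_sum(value: float, n: int, single: bool) -> float:
    """Add `value` n times; if `single`, keep both the operand and the total in FP32."""
    step = to_f32(value) if single else value
    total = 0.0
    for _ in range(n):
        # Adding two FP32 values in FP64 and rounding once to FP32 gives the
        # same result as a native FP32 addition, so this emulation is faithful.
        total += step
        if single:
            total = to_f32(total)  # emulate an FP32 accumulator
    return total

n = 1_000_000
exact = 100_000.0  # 0.1 * 1,000,000 in exact arithmetic
fp64 = running_sum(0.1, n, single=False)
fp32 = running_sum(0.1, n, single=True)
print(f"FP64 total: {fp64!r} (error {abs(fp64 - exact):.2e})")
print(f"FP32 total: {fp32!r} (error {abs(fp32 - exact):.2e})")
```

In FP32, the spacing between representable values grows as the total grows. Each addition of 0.1 is therefore rounded more coarsely, and the error compounds. The FP64 total stays within a tiny fraction of the exact 100,000.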

The Significance of Double Precision for Professional Applications

Precision is everything in fields like AI, machine learning (ML), and deep learning (DL). You need accurate results, and that means powerful hardware is a must.

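Double-precision floating point (FP64) keeps rounding errors to a minimum, which is crucial for many scientific and numerical workloads. Deep learning, however, mostly relies on FP32 and lower-precision formats such as FP16 and BF16.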
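One way to see how large each format's rounding error is: store a single value, 1/3, in FP64, FP32, and FP16, then measure the relative error exactly with `fractions.Fraction`. This sketch relies on Python's `struct` format codes `'d'`, `'f'`, and `'e'` (IEEE 754 binary64, binary32, and binary16). BF16 and TF32 have no `struct` code, so they are left out. `round_to` is a hypothetical helper.

```python
import struct
from fractions import Fraction

def round_to(fmt: str, x: float) -> float:
    """Round x through a struct format: 'd' = binary64, 'f' = binary32, 'e' = binary16."""
    return struct.unpack(fmt, struct.pack(fmt, x))[0]

exact = Fraction(1, 3)
errors = {}
for name, fmt in [("FP64", "d"), ("FP32", "f"), ("FP16", "e")]:
    # 1 / 3 is first rounded to FP64, then to the narrower format.
    stored = round_to(fmt, 1 / 3)
    # Fraction(stored) is the exact value of the stored float, so this error is exact.
    errors[name] = float(abs(Fraction(stored) - exact) / exact)
    print(f"{name}: stored {stored!r}, relative error {errors[name]:.2e}")
```

Each error stays below the format's unit roundoff: 2^-53 for FP64, 2^-24 for FP32, and 2^-11 for FP16. This is why FP64 is preferred wherever errors must stay tiny.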

The RTX 4090 shines in the formats AI workloads use most. Its high FP32 and Tensor Core throughput handles complex calculations quickly, which speeds up research and development in AI, ML, and DL. For those pushing the boundaries of technology, it’s a real game-changer.

RTX 4090 in High-Performance Computing (HPC)

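The RTX 4090 is a standout in high-performance computing (HPC). Its advanced capabilities make it an excellent choice for tackling demanding tasks. It features fourth-generation Tensor Cores and very high single-precision throughput. Its limited FP64 rate, however, makes it best suited to HPC workloads that can run in single or mixed precision.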

Built on NVIDIA’s Ada Lovelace architecture, this GPU delivers excellent performance for tough simulations and heavy calculations. Its high memory bandwidth and 16,384 CUDA cores make the RTX 4090 a top pick for HPC tasks that need speed and high throughput.

Advantages of RTX 4090 for Parallel Processing in HPC

Massive Core Count: The RTX 4090 has 16,384 CUDA cores. This large number lets it run thousands of threads in parallel, speeding up HPC jobs.

Tensor Cores for AI Acceleration: It has advanced Tensor Cores that enhance performance for AI and machine learning. This makes it a top pick for high-performance computing needs.

High Memory Bandwidth: With 24 GB of GDDR6X memory delivering about 1,008 GB/s of bandwidth, the RTX 4090 ensures fast data access. This reduces slowdowns in large-scale HPC tasks and keeps everything running smoothly.
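The roofline model is a common way to reason about whether core count or memory bandwidth limits a kernel. Under this model, attainable throughput is the lesser of two ceilings: peak compute, or bandwidth times arithmetic intensity (FLOPs per byte moved). The sketch below assumes approximate published RTX 4090 figures of about 82.6 FP32 TFLOPS and 1,008 GB/s. The kernel intensities are illustrative, and `attainable_tflops` is a hypothetical helper.

```python
def attainable_tflops(intensity: float, peak_tflops: float, bandwidth_gb_s: float) -> float:
    """Roofline model: throughput is capped by compute or by memory traffic.

    `intensity` is arithmetic intensity in FLOPs per byte moved to/from DRAM.
    """
    memory_bound_tflops = bandwidth_gb_s * intensity / 1000.0  # GFLOP/s -> TFLOP/s
    return min(peak_tflops, memory_bound_tflops)

# Approximate published RTX 4090 figures, assumed for illustration.
PEAK_FP32_TFLOPS = 82.6
BANDWIDTH_GB_S = 1008.0

# Arithmetic intensity at which the memory and compute ceilings meet.
ridge_point = PEAK_FP32_TFLOPS * 1000.0 / BANDWIDTH_GB_S

kernels = {
    "FP32 vector add": 1 / 12,   # 1 FLOP per 12 bytes (read a, read b, write c)
    "large FP32 matmul": 100.0,  # hypothetical intensity for a high-reuse kernel
}
for name, intensity in kernels.items():
    tflops = attainable_tflops(intensity, PEAK_FP32_TFLOPS, BANDWIDTH_GB_S)
    print(f"{name}: at most ~{tflops:.3f} TFLOPS")
print(f"ridge point: ~{ridge_point:.0f} FLOP/byte")
```

A vector add performs only one FLOP per 12 bytes, so it is bandwidth-bound at a tiny fraction of peak. Only kernels with high data reuse, such as large matrix multiplies, can approach the compute ceiling.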

Performance Metrics of RTX 4090 in HPC Applications

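Peak Floating-Point Performance: The RTX 4090 reaches roughly 82.6 TFLOPS of peak single-precision (FP32) throughput. Its double-precision (FP64) peak is about 1.3 TFLOPS, or 1/64 of the FP32 rate. This makes it a standout in HPC work that can run in FP32 or mixed precision.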

Memory Throughput: Thanks to its large memory bandwidth, the RTX 4090 handles big datasets efficiently. This means quicker data processing and better overall performance in HPC tasks.

AI and Tensor Performance: The RTX 4090 excels in AI benchmarks. Its Tensor Cores boost performance for machine learning and deep learning, making it a powerful tool in HPC environments.
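The headline figures follow from simple arithmetic on the card's specifications. This sketch assumes NVIDIA's published RTX 4090 numbers: 16,384 CUDA cores, a 2.52 GHz boost clock, a 384-bit memory bus, and 21 Gbps GDDR6X. It also assumes the 1:64 FP64 ratio of Ada GeForce GPUs. Real sustained throughput is lower than these theoretical peaks.

```python
# Published RTX 4090 specifications (assumed here; verify against NVIDIA's spec sheet).
CUDA_CORES = 16_384
BOOST_CLOCK_GHZ = 2.52
FP64_RATIO = 1 / 64           # Ada GeForce GPUs run FP64 at 1/64 of the FP32 rate
BUS_WIDTH_BITS = 384
MEMORY_DATA_RATE_GBPS = 21.0  # per-pin data rate of GDDR6X

# Each CUDA core can retire one fused multiply-add (2 FLOPs) per clock.
peak_fp32_tflops = CUDA_CORES * 2 * BOOST_CLOCK_GHZ / 1000.0
peak_fp64_tflops = peak_fp32_tflops * FP64_RATIO

# Bandwidth = bus width (bits) x per-pin rate (Gbit/s) / 8 bits per byte.
bandwidth_gb_s = BUS_WIDTH_BITS * MEMORY_DATA_RATE_GBPS / 8

print(f"Peak FP32: {peak_fp32_tflops:.1f} TFLOPS")
print(f"Peak FP64: {peak_fp64_tflops:.2f} TFLOPS")
print(f"Memory bandwidth: {bandwidth_gb_s:.0f} GB/s")
```

Running it prints 82.6 TFLOPS for FP32, 1.29 TFLOPS for FP64, and 1008 GB/s.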

RTX 4090’s Role in Artificial Intelligence and Deep Learning

Advances in artificial intelligence (AI) are closely linked to strong hardware. The RTX 4090 has fourth-generation Tensor Cores, which are designed for exactly this purpose.

These cores speed up the matrix multiplications at the heart of deep learning algorithms. This helps AI models train and run inference faster.
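To show what "matrix multiplication" means here, the sketch below implements a dense layer's forward pass, y = xW, as a plain triple-loop matrix multiply. It also counts the floating-point operations: 2·m·k·n, one multiply and one add per term. Tensor Cores execute the same arithmetic in hardware on small matrix tiles. This pure-Python version is only for illustration, and the function names are hypothetical.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Naive dense matrix multiply: (m x k) @ (k x n) -> (m x n)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    out = [[0.0] * n for _ in range(m)]
    for i in range(m):
        for j in range(n):
            acc = 0.0
            for p in range(k):
                acc += a[i][p] * b[p][j]  # one multiply + one add
            out[i][j] = acc
    return out

def matmul_flops(m: int, k: int, n: int) -> int:
    """Each of the m*n outputs needs k multiplies and k adds."""
    return 2 * m * k * n

# A dense layer y = x @ w: a batch of 2 inputs with 3 features -> 2 outputs each.
x = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
w = [[0.1, 0.2],
     [0.3, 0.4],
     [0.5, 0.6]]
y = matmul(x, w)
print(y)
print(f"{matmul_flops(4096, 4096, 4096) / 1e9:.1f} GFLOP for one 4096^3 matmul")
```

A single 4096 × 4096 × 4096 multiply already costs about 137 GFLOP. This is why deep-learning throughput depends so heavily on how fast hardware can run these products.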

The better processing power is great for researchers and developers working on exciting AI projects. This includes areas like natural language processing, computer vision, and robotics. The RTX 4090 helps them explore new levels in AI.

Accelerating AI Model Training with RTX 4090

Training AI models, especially deep learning ones, can be a real time-sink and demand a lot of computing power. That’s where the RTX 4090 steps in. It’s built to handle these demanding tasks with ease, making the whole process smoother.

With its impressive computing power, it helps speed things up and gets the job done faster.

One important part is the fourth-generation Tensor Cores. These special units are made to speed up the matrix multiplications that are essential in deep learning. Combined with the card’s high memory bandwidth, they can cut down the time needed to train AI models.

This speed-up allows for faster development. Researchers can work with bigger datasets, try out more complex models, and improve AI models more quickly.
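You can roughly estimate how much faster hardware shortens training using a widely used rule of thumb: training a dense transformer costs about 6 × parameters × tokens FLOPs. The sketch below is a back-of-the-envelope calculation under stated assumptions. The model size, the token count, the 165 TFLOPS dense FP16 Tensor Core peak, and the 40% sustained utilization are all assumed values, not measurements.

```python
SECONDS_PER_DAY = 86_400

def training_days(params: float, tokens: float, peak_tflops: float, utilization: float) -> float:
    """Back-of-the-envelope training time from the ~6 * params * tokens FLOP rule of thumb
    (forward plus backward pass of a dense transformer)."""
    total_flops = 6.0 * params * tokens
    sustained_flops_per_s = peak_tflops * 1e12 * utilization
    return total_flops / sustained_flops_per_s / SECONDS_PER_DAY

# Every input below is an assumption chosen for illustration.
days = training_days(
    params=125e6,       # hypothetical 125M-parameter model
    tokens=2.5e9,       # hypothetical 2.5B training tokens
    peak_tflops=165.0,  # assumed dense FP16 Tensor Core peak (FP32 accumulate)
    utilization=0.4,    # assumed fraction of peak actually sustained
)
print(f"~{days:.2f} GPU-days")
```

The estimate is linear in sustained throughput. Doubling effective FLOPs, whether through faster hardware, lower precision, or better utilization, therefore halves the training time.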

Enhancements in Tensor Cores and Their Impact on DL Performance

The fourth-generation Tensor Cores in the RTX 4090 are very important for speeding up deep learning tasks. These special processors have seen major changes in their design, which leads to better performance:

Increased Throughput: The new Tensor Cores can do more calculations at once than earlier versions. This helps them work with matrix operations much faster.

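Sparsity Support: They support fine-grained structured sparsity, a feature introduced with the previous (Ampere) generation. When weights are pruned so that at most two of every four consecutive values are nonzero, the Tensor Cores can skip the zeros and deliver up to twice the math throughput. This speeds up some deep-learning tasks.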

Improved Precision: The fourth-generation Tensor Cores support a wide range of precisions, including FP8, FP16, BF16, TF32, and INT8. This gives developers more options to trade numerical precision for speed and memory savings wherever their models can tolerate it.
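Tensor Core sparsity works with a specific pattern called 2:4 structured sparsity, in which at most two of every four consecutive weights are nonzero. Below is a minimal sketch of magnitude-based pruning to that pattern. In practice, frameworks and NVIDIA's libraries handle pruning, compressed storage, and the fine-tuning needed to recover accuracy. `prune_2_of_4` is a hypothetical helper.

```python
def prune_2_of_4(weights: list[float]) -> list[float]:
    """Magnitude pruning to the 2:4 pattern: in each group of 4 consecutive weights,
    keep the 2 with the largest absolute value and set the other 2 to zero."""
    if len(weights) % 4 != 0:
        raise ValueError("number of weights must be a multiple of 4")
    pruned: list[float] = []
    for start in range(0, len(weights), 4):
        group = weights[start:start + 4]
        # Indices of the two largest-magnitude weights in this group.
        keep = set(sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2])
        pruned.extend(value if i in keep else 0.0 for i, value in enumerate(group))
    return pruned

weights = [0.9, -0.1, 0.05, -0.7, 0.2, 0.3, -0.25, 0.01]
print(prune_2_of_4(weights))
```

This prints `[0.9, 0.0, 0.0, -0.7, 0.0, 0.3, -0.25, 0.0]`: each group of four keeps only its two largest-magnitude weights.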

These upgrades lead to real changes in how deep learning works. Training time for complex models is greatly reduced. Also, handling larger datasets becomes easier, creating new opportunities in AI research and development.

Leveraging RTX 4090 for Competitive Advantage

In a world where new technology drives change, the GeForce RTX 4090 can help you stay ahead. It excels at tough tasks in areas like AI, simulation, and data analysis. This means that businesses and researchers can solve problems and create new ideas much faster than before.

The RTX 4090 speeds up product development, making prototyping and simulations faster. This means organizations can analyze data quickly, stay ahead of competitors, and uncover new opportunities. It helps them become leaders in their industries.

Gain an Edge in Research and Development

When it comes to research and development, having a powerful GPU like the GeForce RTX 4090 can change the game. It’s not just about speed — it makes everything flow more smoothly.

Both researchers and creators will find it helps them turn their new ideas into reality and innovate more easily. This powerful tool can help push boundaries and advance research.

Because it processes complex calculations quickly, researchers can iterate on ideas faster, test hypotheses more thoroughly, and make discoveries sooner.

In areas where simulation and visualization are important, the RTX 4090’s third-generation RT Cores accelerate ray tracing in hardware. This produces more detailed ray-traced renders without burdening the CPU.

This can give important information that leads to more innovation. Researchers in AI and deep learning can also use its power to train and improve complex models more quickly.

This can help find breakthroughs in fields like natural language processing and computer vision.

Key Trends in RTX 4090 Performance

As the RTX 4090 enhances AI and ML workloads, its performance drives several key trends:

Superior Compute Efficiency: The RTX 4090 handles complex models with high computational throughput and improved energy efficiency, enabling efficient processing of large datasets.

Local and Edge Computing: Its computing power allows workstations and edge servers to run AI models locally, reducing latency and improving real-time responsiveness.

Automated Optimization: Its high throughput makes automated model optimization and hyperparameter tuning faster and more practical, since these require training many model variants. This minimizes manual intervention.

Cloud Computing Advancements: With its computing power, the RTX 4090 strengthens GPU-accelerated cloud services, offering high-performance computing without heavy infrastructure costs.

High Cost of the RTX 4090

The RTX 4090 comes with a high price tag: it launched at a US MSRP of $1,599. This reflects its top performance in AI and ML. The cost can be a big concern for those on a budget.

Cost vs. User Needs: Justifying the Investment

Sure, the RTX 4090 is expensive. But it delivers outstanding single-precision and Tensor Core performance and excels in AI and ML tasks. If you’re someone who needs top-tier computing power, the investment could be well worth it.

The improvements in productivity and efficiency it brings can make the steep cost justifiable. In the end, for users with demanding needs, the RTX 4090 can be a valuable choice despite its high initial expense.

Renting GPU Instances: A Cost-Effective Solution

Using cloud services to rent GPU instances is a smart way to save money for people who need powerful computing for a short time. It avoids spending a lot of money upfront on costly hardware.

This option also allows users to adjust the resources they use as their needs change. Renting GPU instances means users can use the latest technologies, like the NVIDIA RTX 4090, for specialized tasks without worrying about long-term costs.

This method helps make better use of the budget while also improving workflow efficiency when handling tough computational jobs.
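Whether renting beats buying depends mostly on how many GPU-hours you need. Here is a simple break-even sketch. Owning costs the purchase price plus electricity, and renting costs an hourly rate. The break-even point is the number of hours at which the two totals meet. Every price below is a hypothetical placeholder, and `break_even_hours` is an illustrative helper.

```python
def break_even_hours(purchase_price: float, rental_per_hour: float,
                     power_kw: float, electricity_per_kwh: float) -> float:
    """GPU-hours after which owning becomes cheaper than renting.

    owning(h)  = purchase_price + h * power_kw * electricity_per_kwh
    renting(h) = h * rental_per_hour
    """
    owning_per_hour = power_kw * electricity_per_kwh
    hourly_saving = rental_per_hour - owning_per_hour
    if hourly_saving <= 0:
        return float("inf")  # renting is never more expensive, so buying never pays off
    return purchase_price / hourly_saving

# Hypothetical placeholder prices, not quotes from any vendor or provider.
hours = break_even_hours(
    purchase_price=2000.0,     # hypothetical card price (USD)
    rental_per_hour=0.50,      # hypothetical hourly rental rate (USD)
    power_kw=0.45,             # the RTX 4090's 450 W rated board power, assumed at full load
    electricity_per_kwh=0.20,  # hypothetical electricity price (USD/kWh)
)
print(f"Buying pays off after ~{hours:,.0f} GPU-hours (~{hours / 24:,.0f} days of 24/7 use)")
```

This deliberately ignores the host machine, cooling, maintenance, and resale value. Adding those costs to the owning side pushes the break-even point later, while steady round-the-clock use reaches it sooner.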

Economical Aspects of Cloud-Based GPU Usage

Renting GPUs through cloud platforms provides significant flexibility, access to the latest technology, and cost savings:

Pay-As-You-Go Model: With cloud services, you pay only for what you use. This means you’re not stuck with expensive hardware that sits idle. It’s perfect if your needs change often.

Reduced Overhead Costs: Moving your GPU tasks to the cloud means less physical gear to manage. You save on things like electricity, cooling, and upkeep.

Optimized Resource Utilization: Cloud platforms give you tools to track and analyze your GPU use. This way, you can use your resources wisely, cut down on wasted time, and keep costs in check.
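On a machine you control, you can track GPU usage with NVIDIA's `nvidia-smi` tool. Its `--query-gpu` and `--format=csv,noheader,nounits` options print machine-readable metrics. The sketch below polls the tool once and parses the output. It assumes `nvidia-smi` is on the PATH and returns an empty list otherwise. The helper names are hypothetical.

```python
import shutil
import subprocess
from typing import Optional

# nvidia-smi query fields; "nounits" yields plain numbers (percent and MiB).
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]

def _to_int(field: str) -> Optional[int]:
    """Convert a numeric field; unsupported metrics show up as text such as '[N/A]'."""
    field = field.strip()
    return int(field) if field.isdigit() else None

def parse_gpu_usage(csv_text: str) -> list[dict]:
    """Parse nvidia-smi CSV output (noheader, nounits) into one dict per GPU."""
    rows = []
    for line in csv_text.strip().splitlines():
        index, util, used, total = (_to_int(f) for f in line.split(","))
        rows.append({
            "index": index,
            "util_pct": util,
            "mem_used_mib": used,
            "mem_total_mib": total,
        })
    return rows

def sample_gpu_usage() -> list[dict]:
    """Query the local driver once; return [] if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    try:
        result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    except subprocess.CalledProcessError:
        return []
    return parse_gpu_usage(result.stdout)

if __name__ == "__main__":
    for gpu in sample_gpu_usage():
        print(f"GPU {gpu['index']}: {gpu['util_pct']}% busy, "
              f"{gpu['mem_used_mib']}/{gpu['mem_total_mib']} MiB used")
```

Running this periodically, for example from a scheduled job, and logging the results makes idle GPUs easy to spot.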

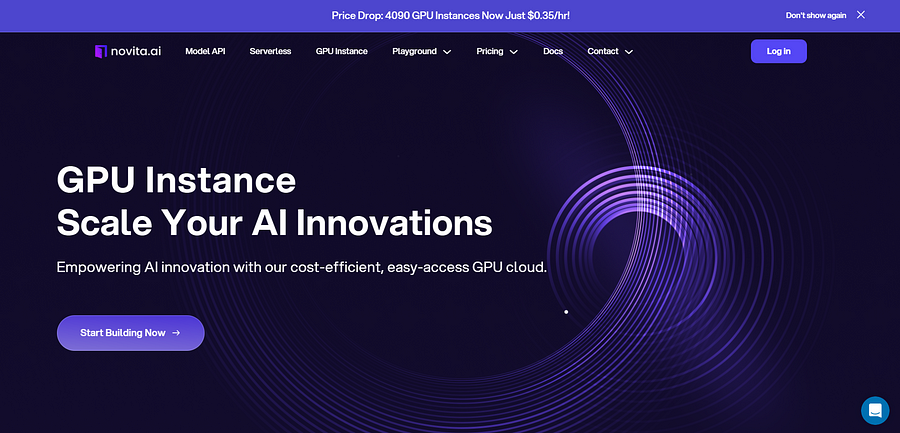

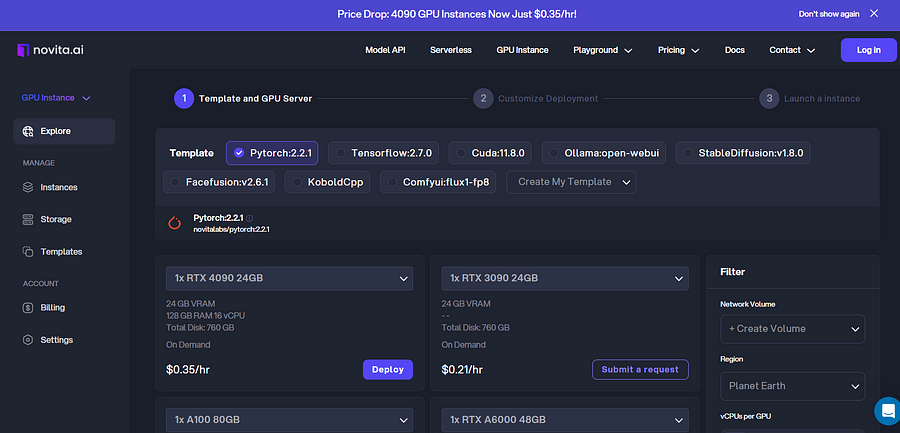

Novita AI GPU Instance: Convenience and High Cost-Effectiveness

Novita AI GPU Instances are easy to use and save money, making them a great choice for many computing jobs. They have advanced features and work well for AI projects and tasks that need strong performance.

By using NVIDIA GPUs and the latest technologies, Novita AI GPU instances run smoothly and help you save costs. This mix of convenience and low prices makes Novita AI GPU instances a leading option for efficient computing.

Key Features of Novita AI GPU Instances

Novita AI offers a GPU cloud service that users can utilize while working with the PyTorch Lightning Trainer. This service provides several benefits:

Cost Efficiency: Users can expect significant savings, potentially reducing cloud costs by up to 50%. This is especially advantageous for startups and research institutions with limited budgets.

Instant Deployment: Users can quickly deploy a Pod, which is a containerized environment designed for AI workloads. This streamlined process allows developers to start training their models with minimal setup time.

Customizable Templates: The Novita AI GPU instance includes customizable templates for popular frameworks like PyTorch, enabling users to select the right configuration for their specific needs.

High-Performance Hardware: The service grants access to high-performance GPUs such as the NVIDIA A100 SXM, RTX 4090, and RTX A6000. Each comes with substantial VRAM and RAM, ensuring efficient training of even the most demanding AI models.

Conclusion

The RTX 4090 marks a major upgrade in GPU tech. It offers outstanding single-precision and Tensor Core performance, along with advanced features well suited to AI and HPC tasks. Yes, its price is high, and that might be a concern. But for those who need top performance, this GPU can be worth the investment.

If the cost feels too steep, renting GPU instances through cloud platforms might be a better option. This approach is flexible and budget-friendly. It gives you access to the latest technology without a huge upfront expense. Plus, it helps you make the most of your budget and resources.

FAQs

Is the RTX 4090’s double precision performance good for AI and ML?

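For AI and ML, yes, though not because of double precision. Most training and inference runs in FP32 or in lower-precision formats such as FP16 and BF16. In those formats, the RTX 4090 is a powerhouse, with strong computing abilities, 24 GB of video memory, and fourth-generation Tensor Cores. Its FP64 rate is limited, so workloads that depend heavily on double precision may be better served by data-center GPUs.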

Which applications will benefit most from the RTX 4090’s performance?

Applications that can run in single or mixed precision benefit most from the RTX 4090. These include deep-learning training and inference, computer vision, rendering, and many scientific codes. Workloads that need sustained FP64 throughput, such as some scientific simulations and financial models, may run faster on data-center GPUs built for double precision.

What are the benefits of renting a GPU instance over buying an RTX 4090?

Renting a GPU instance is a smart choice. It offers flexibility and access to the latest technology while avoiding high upfront expenses.

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.