Natural Language Processing (NLP) is a branch of artificial intelligence dedicated to facilitating interactions between computers and humans using natural language.

Key Highlights

NLP and LLMs both bridge the gap between human language and machine understanding in the field of artificial intelligence.

Traditional NLP often analyzes and manipulates human language using defined rules and structures, enabling machines to comprehend grammar, syntax, and context.

LLMs (large language models), on the other hand, are trained on massive amounts of text data to predict and generate language with human-like fluency and adaptability.

While NLP excels at structured tasks like sentiment analysis and machine translation, LLMs are strongest at generating human-quality text and understanding complex contexts.

Both NLP and LLMs have their own strengths and weaknesses: NLP tends to prioritize accuracy, while LLMs are more adaptable but may carry over biases from their training data.

The integration of NLP and LLMs can enhance AI performance, enabling more accurate and contextually relevant language processing and generation.

Understanding NLP and LLMs

What is NLP (Natural Language Processing)

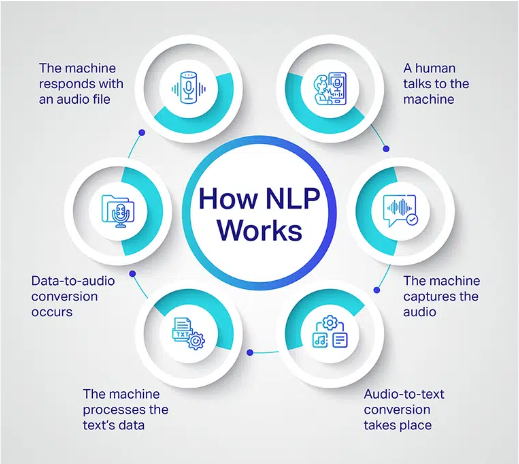

Natural Language Processing (NLP) is a subset of artificial intelligence (AI) that emphasizes the interaction between humans and computers using natural language. The main goal of NLP is to interpret, decode, and comprehend human language in a useful way.

NLP intersects with computer science, AI, and computational linguistics to tackle the intricacies of human language, such as context recognition, structural analysis, and semantic understanding. NLP algorithms identify patterns in data, transforming unstructured linguistic data into a structured format that computers can process and react to effectively.
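To make "structured format" concrete, here is a minimal Python sketch of one of the first steps in many NLP pipelines: tokenization, which turns a raw string into typed tokens with character offsets. The `Token` class, the `tokenize` function and the regular-expression patterns are hypothetical, chosen only for illustration; production tokenizers handle Unicode, abbreviations and many more edge cases.

```python
import re
from dataclasses import dataclass

@dataclass
class Token:
    text: str
    start: int  # character offset where the token begins
    end: int    # character offset just past the token
    kind: str   # "NUMBER", "WORD" or "PUNCT"

# Illustrative patterns only (an assumption, not a standard tokenizer).
# Alternatives are tried left to right, so numbers are recognized before words.
TOKEN_PATTERN = re.compile(
    r"(?P<NUMBER>\d+(?:\.\d+)?)"
    r"|(?P<WORD>[A-Za-z]+(?:'[A-Za-z]+)?)"
    r"|(?P<PUNCT>[^\w\s])"
)

def tokenize(text: str) -> list[Token]:
    """Turn unstructured text into a list of typed tokens with offsets."""
    return [
        Token(m.group(), m.start(), m.end(), m.lastgroup)
        for m in TOKEN_PATTERN.finditer(text)
    ]

for tok in tokenize("NLP turns 2 messy sentences into data!"):
    print(tok)
```

Each token records what it is and where it came from, which is the kind of structured representation later stages (tagging, entity recognition) build on.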

NLP has a wide range of real-world applications including voice recognition, language translation, sentiment analysis, and entity recognition. It powers many everyday technologies, enhancing our interaction with digital systems.

What is an LLM (Large Language Model)

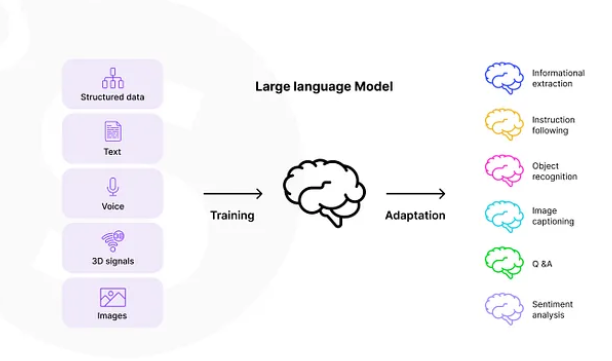

Large Language Models (LLMs) are advanced machine learning models designed to understand and produce text that resembles human writing. They work by repeatedly predicting the next token (a word or part of a word) based on the preceding text, which enables them to generate text that is coherent and contextually appropriate.
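The core idea of predicting the next word from the preceding text can be illustrated with a toy bigram model. This is a deliberate simplification and not how an LLM works internally: it looks only at the single previous word and uses raw counts, whereas an LLM uses a Transformer neural network over tokens and a long context. The function names and the tiny corpus are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Optional

def train_bigrams(corpus: list[str]) -> dict[str, Counter]:
    """Count, for every word, which words follow it in the corpus."""
    follows: dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows

def predict_next(follows: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]

def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily extend `start` by repeatedly predicting the next word."""
    words = [start.lower()]
    for _ in range(max_words):
        nxt = predict_next(follows, words[-1])
        if nxt is None:
            break
        words.append(nxt)
    return " ".join(words)

corpus = [
    "the model predicts the next word",
    "the model reads the text",
    "the next word follows the context",
]
model = train_bigrams(corpus)
print(generate(model, "next", max_words=2))
```

Always taking the single most frequent follower (greedy decoding) quickly falls into loops; generative systems often sample from the predicted distribution instead.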

LLMs represent an advancement over previous NLP models, made possible by improvements in computing power, the availability of vast datasets, and advances in machine learning methods. These models are trained on extensive text data, often sourced from the internet, which helps them learn language patterns, grammar, world facts, and even some reasoning abilities.

The key capability of LLMs is their ability to process detailed instructions and produce text that closely mimics human writing. This has led to their widespread use in various applications, particularly in the latest AI chatbots that are transforming the way humans interact with machines. Other uses for LLMs include generating summaries, translating languages, creating original content, and powering automated customer support systems.

6 Key Differences Between NLP and LLM

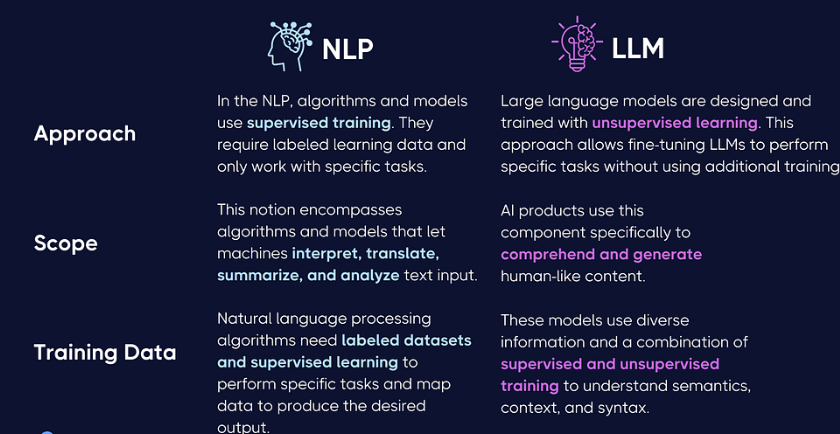

Scope

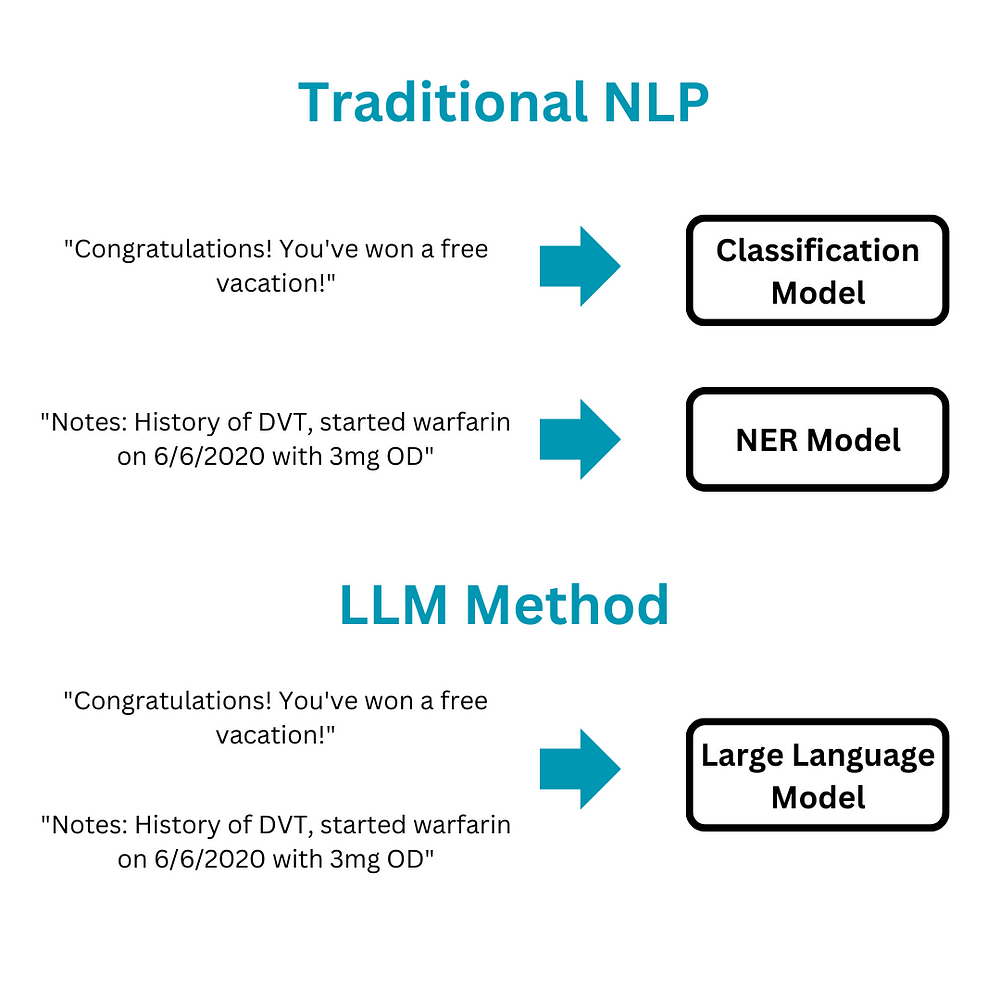

NLP includes a wide array of models and techniques for processing human language, with Large Language Models (LLMs) serving as a specialized category within this field. In practice, LLMs cover much the same range of tasks as traditional NLP technologies, thanks to their extensive training on varied datasets and their sophisticated grasp of language patterns.

LLMs are highly adaptable due to their ability to comprehend and generate text that mimics human interaction, which makes them applicable for a multitude of tasks that previously depended on specific NLP models. For instance, while traditional NLP might use distinct models for entity recognition and text summarization, an LLM can handle both tasks using a single model framework. However, it is important to recognize that despite their versatility, LLMs may not always be the most efficient or optimal choice for every NLP task, particularly when a task demands highly specialized solutions.
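The "single model, many tasks" point can be sketched in a few lines: instead of training separate models, the task is expressed in the prompt and every request goes to the same model. In this sketch `llm` stands for any hypothetical function that sends a prompt to a language model and returns its text; the templates and task names are illustrative assumptions, not any provider's API. A fake model is included so the code runs offline.

```python
from typing import Callable

# Hypothetical instruction templates; the wording is illustrative only.
TASK_TEMPLATES = {
    "ner": "List every person, organization and location named in the text below.\n\nText: {text}",
    "summarize": "Summarize the text below in one sentence.\n\nText: {text}",
}

def run_task(llm: Callable[[str], str], task: str, text: str) -> str:
    """Send any supported task to the SAME model by changing only the prompt."""
    if task not in TASK_TEMPLATES:
        raise ValueError(f"unsupported task: {task}")
    return llm(TASK_TEMPLATES[task].format(text=text))

def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real LLM client: echoes the instruction line."""
    return "[model saw] " + prompt.splitlines()[0]

article = "Ada Lovelace worked with Charles Babbage in London."
print(run_task(fake_llm, "ner", article))
print(run_task(fake_llm, "summarize", article))
```

With traditional NLP, the same two tasks would typically need an entity recognizer and a separate summarization model, each trained and maintained on its own.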

Techniques

NLP employs a diverse array of techniques, from rule-based systems to more advanced machine learning and deep learning methods. These methods are utilized for a variety of tasks including part-of-speech tagging, named entity recognition, and semantic role labeling, among others.
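At the rule-based end of that spectrum, named entity recognition can be as simple as hand-written patterns. The sketch below assumes three illustrative entity types (email addresses, ISO-style dates and dollar amounts); the labels and regular expressions are hypothetical and far from exhaustive, which is exactly the limitation that pushed the field toward machine learning.

```python
import re

# Hand-written rules: each entity label maps to a regular expression.
# These patterns are illustrative assumptions, not a complete NER system.
RULES = [
    ("EMAIL", re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")),
    ("DATE", re.compile(r"\b\d{4}-\d{2}-\d{2}\b")),
    ("MONEY", re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?")),
]

def extract_entities(text: str) -> list[tuple[str, str, int]]:
    """Return (label, matched_text, start_offset) triples ordered by position."""
    found = []
    for label, pattern in RULES:
        for m in pattern.finditer(text):
            found.append((label, m.group(), m.start()))
    return sorted(found, key=lambda entity: entity[2])

sample = "Invoice sent to billing@example.com on 2024-03-01 for $1,250.00."
for label, value, start in extract_entities(sample):
    print(f"{label:<6} {value!r} at {start}")
```

Rules like these are fast, transparent and precise on the patterns they cover, but every new format must be added by hand.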

Conversely, Large Language Models (LLMs) predominantly use deep learning to identify patterns in textual data and to predict text sequences. They are built on a neural network architecture called the Transformer, which uses self-attention to weigh how relevant every other word in the input is to each word. This feature enables LLMs to comprehend context more effectively and produce contextually appropriate text.
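Self-attention is usually written as softmax(QK^T / sqrt(d_k)) V, where Q, K and V are query, key and value projections of the word embeddings and d_k is the key dimension. The pure-Python sketch below implements a single attention head under simplifying assumptions: no masking, no multiple heads, and projection matrices passed in rather than learned. The toy embeddings and identity projections are illustrative values only.

```python
import math

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def softmax(row):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(row)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x:             one embedding vector per word, shape (n, d_model)
    w_q, w_k, w_v: projection matrices, shape (d_model, d_k)
    Returns (outputs of shape (n, d_k), attention weights of shape (n, n)).
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = math.sqrt(len(k[0]))  # sqrt(d_k)
    # scores[i][j]: how strongly word i attends to word j
    scores = [[sum(a * b for a, b in zip(qi, kj)) / scale for kj in k] for qi in q]
    weights = [softmax(row) for row in scores]
    # Each output is a weighted mix of all value vectors.
    return matmul(weights, v), weights

# Three toy 2-dimensional "word embeddings" and identity projections.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]
outputs, weights = self_attention(x, identity, identity, identity)
for i, row in enumerate(weights):
    print(f"word {i} attention weights: {[round(w, 2) for w in row]}")
```

Because every word's output mixes information from every other word, the representation of a word depends on its context, which is the property the paragraph above describes.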

Performance on Language Tasks

Large Language Models (LLMs) have demonstrated exceptional performance, often surpassing other model types across a range of NLP tasks. They are capable of producing text that is not only contextually accurate but also coherent and creatively human-like. As a result, LLMs have found applications in various areas including chatbots, virtual assistants, content generation, and language translation.

Nevertheless, LLMs come with notable drawbacks. They require substantial data and significant computational resources for training. Additionally, they are susceptible to producing content that may be incorrect, unsafe, or biased, reflecting the data on which they were trained. Without explicit instructions, these models often do not grasp wider contextual or ethical considerations.

On the other hand, NLP includes a broader spectrum of techniques and models that might be more suitable for specific tasks or scenarios. For narrowly defined tasks, traditional NLP models can often solve the problem more accurately and with far lower computational demands than LLMs.
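As an illustration of how lightweight a traditional approach can be, here is a minimal lexicon-based sentiment classifier. It needs no training run and no GPU. The word lists and the single negation rule are hypothetical and tiny; real lexicons are much larger and handle intensifiers, emoji and more.

```python
import re

# Tiny hand-made lexicons (illustrative assumptions only).
POSITIVE = {"good", "great", "excellent", "love", "fast"}
NEGATIVE = {"bad", "poor", "terrible", "hate", "slow"}
NEGATORS = {"not", "never", "no"}

def sentiment(text: str) -> str:
    """Classify text as positive, negative or neutral by counting lexicon hits.

    A negator immediately before a sentiment word flips that word's polarity.
    """
    words = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, word in enumerate(words):
        polarity = 1 if word in POSITIVE else -1 if word in NEGATIVE else 0
        if polarity and i > 0 and words[i - 1] in NEGATORS:
            polarity = -polarity
        score += polarity
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"

for review in ["The support was great and fast", "The app is not good", "It arrived on Tuesday"]:
    print(f"{sentiment(review):<8} <- {review}")
```

The trade-off is explicit: this approach is cheap and fully transparent, but it will misread sarcasm or unusual phrasing that an LLM might handle.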

Resources

LLMs require extensive data and computational power to operate efficiently. This is because LLMs are tasked with understanding and deducing the underlying logic of the data, a process that is both complex and resource-demanding. LLMs train on vast datasets and possess a significant number of parameters, ranging into the billions or even hundreds of billions for the most advanced models. Currently, the cost of training a new LLM is prohibitively high for most organizations.
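A back-of-the-envelope calculation shows why parameter counts translate directly into resource demands. This sketch assumes 2 bytes per parameter (16-bit weights) and uses the widely cited rule of thumb that training costs roughly 6 x parameters x training tokens floating-point operations. The model sizes and token count are illustrative, and real deployments also need memory for activations and caches, plus gradients and optimizer state during training.

```python
def weights_memory_gb(num_params: float, bytes_per_param: float = 2) -> float:
    """Memory needed just to hold the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb training compute: about 6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens

# Illustrative sizes: a 7-billion and a 175-billion parameter model.
for params in (7e9, 175e9):
    print(f"{params:.0e} params -> ~{weights_memory_gb(params):,.0f} GB of 16-bit weights")

# Illustrative training run: 7e9 parameters on 1e12 tokens.
print(f"Training compute: ~{training_flops(7e9, 1e12):.1e} FLOPs")
```

Even before any computation happens, the larger model's weights alone exceed the memory of a single typical GPU, which is part of why training and serving LLMs is so costly.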

In contrast, most NLP models can be trained on smaller, task-specific datasets. Additionally, many NLP models pre-trained on extensive text datasets are available, allowing researchers to use transfer learning to speed up new model development. When it comes to computational demands, simpler NLP techniques such as topic modeling or entity extraction use just a fraction of the resources needed for training and operating LLMs. While more complex neural network-based models require more computational power, they are generally still more affordable and simpler to train than LLMs.

Adaptability

LLMs are exceptionally versatile due to their ability to grasp the underlying logic in the data, enabling them to generalize and adapt to new scenarios or datasets. This capability makes LLMs particularly effective at making precise predictions about unfamiliar data.

Conversely, traditional NLP algorithms often lack this level of flexibility. Although NLP models can handle a diverse array of languages and dialects, they may falter when encountering new challenges or tasks, or when dealing with nuances of language and cultural references not covered in their training.

Ethical and Legal Considerations

Ethical and legal considerations are crucial in the deployment of both LLM and NLP technologies. For LLMs, these concerns mainly pertain to data usage. Because LLMs require enormous amounts of training data, much of it collected from the internet, there are significant concerns regarding privacy and data security. It is vital for organizations that develop or use LLMs to establish robust data governance frameworks and comply with applicable data protection regulations.

Additionally, the safety of AI systems utilizing LLMs is a critical issue. The rapid advancements in LLM capabilities, coupled with the industry’s ambition to achieve artificial general intelligence (AGI), pose significant societal and potentially existential risks. There is widespread concern among experts that LLMs could be exploited by malicious actors for cybercrimes, undermining democratic processes, or causing AI systems to act contrary to human welfare.

For NLP, the ethical and legal issues are somewhat less complex but nonetheless important. NLP’s use in processing and analyzing human language means it must navigate concerns related to consent, privacy, and the potential replication of biases from training data. For instance, using NLP to analyze social media content could raise questions about user consent and privacy. Moreover, biases present in the training data can lead to biased outputs from NLP systems.

LLM and NLP: Using Both For Enhancing AI Performance

Integrating NLP with LLMs represents a major advancement in creating sophisticated language processing systems. This combination pairs NLP’s precision with LLMs’ broad contextual understanding, significantly enhancing the efficiency and effectiveness of AI applications across various industries.

Enhanced Accuracy and Contextual Understanding

Combining NLP’s specialized processing capabilities with LLMs’ extensive contextual knowledge improves both the accuracy and the relevance of results in language-related tasks.
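A generic sketch of such a combination (not the novita.ai API or any specific product): a precise rule-based NLP step extracts key facts, the LLM writes the reply with those facts supplied in the prompt, and a final check confirms the reply did not drop them. Here `llm` is any hypothetical prompt-to-text function, and the `ORD-` order-ID format is a made-up example.

```python
import re
from typing import Callable

# Hypothetical rule: order IDs look like "ORD-" followed by digits.
ORDER_ID = re.compile(r"\bORD-\d+\b")

def answer_with_context(llm: Callable[[str], str], message: str) -> str:
    """NLP extracts order IDs, the LLM writes the reply, a check ties them together."""
    ids = ORDER_ID.findall(message)  # precise, rule-based extraction
    facts = ", ".join(ids) if ids else "none found"
    prompt = (
        f"You are a support assistant. Order IDs detected in the message: {facts}.\n"
        "Reply to the customer, referring to those IDs exactly.\n\n"
        f"Message: {message}"
    )
    reply = llm(prompt)
    # Post-check: make sure the generated reply did not drop any extracted ID.
    missing = [order_id for order_id in ids if order_id not in reply]
    if missing:
        reply += "\n(Referenced orders: " + ", ".join(missing) + ")"
    return reply

# Offline stand-in for a real LLM call, so the sketch runs as-is.
def fake_llm(prompt: str) -> str:
    return "Thanks for reaching out, we are checking your order now."

print(answer_with_context(fake_llm, "Where is ORD-1234? It was due yesterday."))
```

The design choice here is to let each component do what it is best at: deterministic extraction where exactness matters, and the LLM for fluent, context-aware wording.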

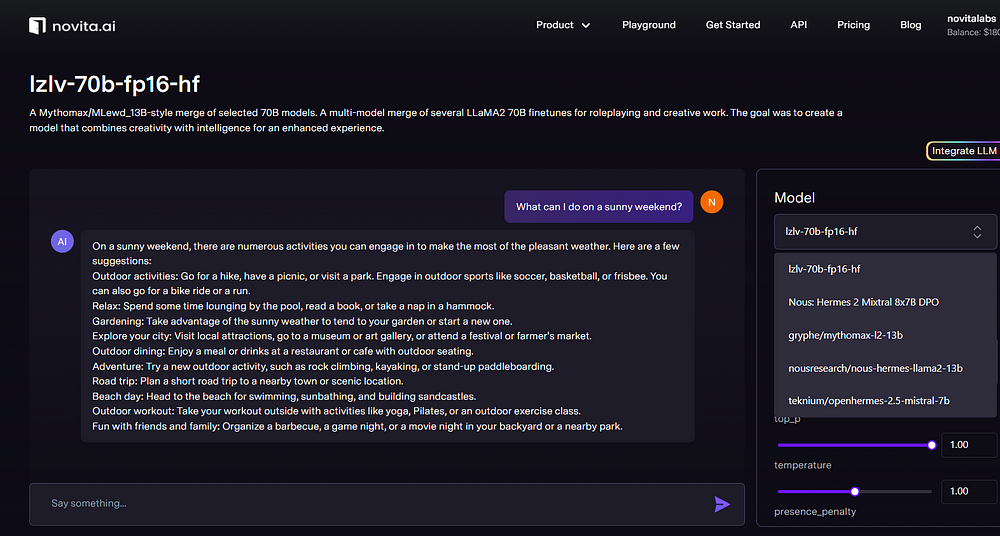

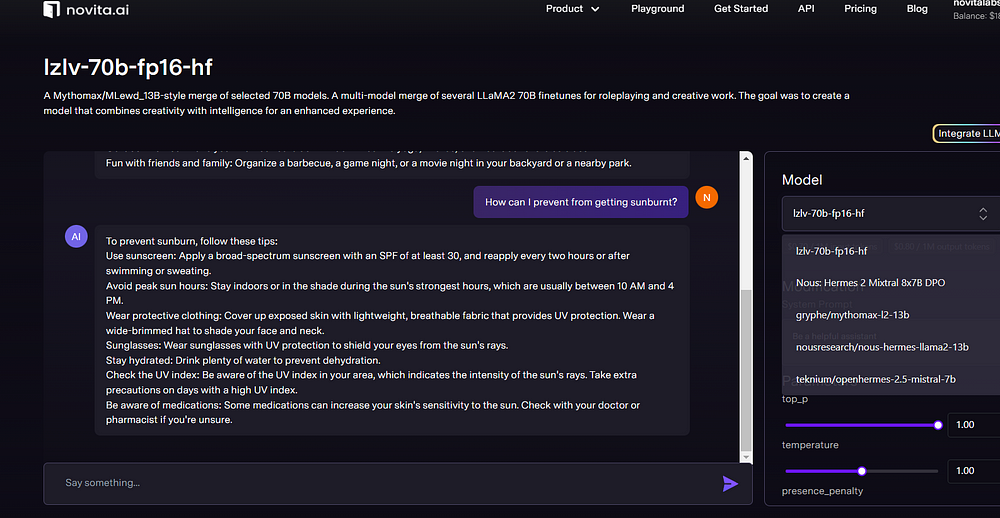

Here is an example of novita.ai LLM(API Offered) combined with NLP:

Resource Optimization

Delegating specific, well-defined tasks to precise and lightweight NLP components offsets the resource-heavy demands of LLMs, leading to more scalable solutions and better management of computational resources.
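One common way to realize this is a confidence-based cascade: a cheap NLP classifier answers first, and the expensive LLM is called only when the cheap model is unsure. In the sketch below, `cheap_classifier`, `llm_classifier` and the 0.8 threshold are hypothetical placeholders; in practice the threshold would be tuned on real data.

```python
from typing import Callable

def route(
    text: str,
    cheap_classifier: Callable[[str], tuple[str, float]],
    llm_classifier: Callable[[str], str],
    threshold: float = 0.8,
) -> tuple[str, str]:
    """Try the lightweight NLP model first; fall back to the LLM only when it is unsure.

    Returns (label, which_model_answered).
    """
    label, confidence = cheap_classifier(text)
    if confidence >= threshold:
        return label, "nlp"
    return llm_classifier(text), "llm"

# Stand-ins for real models (hypothetical behavior, for illustration only).
def keyword_model(text: str) -> tuple[str, float]:
    return ("billing", 0.95) if "invoice" in text.lower() else ("other", 0.3)

def expensive_llm(text: str) -> str:
    return "technical"

for ticket in ["Invoice is missing a line item", "The app crashes on start"]:
    print(ticket, "->", route(ticket, keyword_model, expensive_llm))
```

If most traffic is easy, most requests never reach the LLM, which is where the resource savings come from.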

Increased Flexibility and Adaptability

Integrating these technologies boosts the flexibility and adaptability of AI applications, allowing them to better respond to changing needs.

Predicting the Future of NLP and LLM Collaboration

The future of NLP and LLM collaboration is poised to introduce new capabilities and applications, significantly influencing our engagement with AI technologies:

Advanced AI Assistants

The merging of NLP and LLMs is expected to produce AI assistants with deeper comprehension and enhanced responsiveness to complex human interactions.

Innovations in Content Creation

The integration of NLP’s linguistic precision and LLMs’ creative abilities is anticipated to yield more advanced tools for automated content creation.

Enhanced Language Processing in Robotics

This collaborative effort is likely to greatly improve the language processing capabilities of robots, facilitating more natural and effective human-robot interactions.

Conclusion

In the ever-evolving landscape of AI and language processing, the synergy between NLP and LLMs is proving to be a game-changer. While NLP focuses on understanding human language, LLMs excel at generating human-like text. When combined, the distinct characteristics of each offer unprecedented potential for enhancing AI performance. Looking ahead, the collaboration between NLP and LLMs is set to revolutionize automated content creation and elevate language comprehension in robotics. This dynamic partnership is poised to shape the future of AI technologies, ushering in a new era of innovation and advancement.

Frequently Asked Questions

Is NLP required for LLMs?

Separate NLP components are not required to use an LLM, but they can greatly enhance an LLM’s performance and capabilities. Combining NLP with LLMs can improve the accuracy and contextual relevance of language understanding and generation, enhancing the overall user experience.

Originally published at novita.ai

novita.ai is the one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.