In the rapidly evolving landscape of AI development, the integration of powerful language models into familiar development environments has become crucial. This article explores how developers can leverage the Novita AI API within gptel, an Emacs-based LLM client. By combining Novita AI’s advanced models with gptel’s seamless Emacs integration, developers can significantly enhance their AI-powered workflows without leaving their preferred text editor.

What is gptel

gptel is a versatile LLM chat client tailored for Emacs users. It supports multiple models and backends, functioning seamlessly across any Emacs buffer. With its uniform interface and in-place usage, gptel embodies the Emacs philosophy of extensibility and accessibility. Under the hood, gptel relies on Curl if available, providing robust HTTP capabilities, but also supports url-retrieve for environments without external dependencies. This dual compatibility ensures gptel can be set up in a wide range of Emacs configurations.
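As a small illustration of the Curl fallback described above, the sketch below switches gptel to Emacs’s built-in url-retrieve. It assumes gptel’s `gptel-use-curl` user option; leaving that option at its default lets gptel pick Curl whenever the executable is found.

```elisp
;; Sketch: prefer the built-in url-retrieve over an external curl binary.
;; Assumes the `gptel-use-curl' user option; by default gptel uses Curl
;; whenever it can find the executable.
(with-eval-after-load 'gptel
  (setq gptel-use-curl nil))
```

Streaming appears to depend on Curl, so with this setting responses may arrive all at once rather than incrementally.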

Features of gptel

gptel comes packed with features designed to maximize productivity:

Async and fast operations: Streams responses directly into Emacs.

Multi-LLM support: Choose from various models such as Mythomax, Llama, and Qwen.

Customizable workflows: Modify gptel’s operations using a simple API.

Context-aware interactions: Add regions, buffers, or files dynamically to queries.

Markdown/Org markup support: Format LLM responses effectively.

Multi-modal support: Work with text, images, and documents.

Conversation management: Save, resume, and branch conversations as needed.

Interactive query tweaks: Preview and modify prompts before sending them.

Introspection capabilities: Inspect and modify queries before sending them to ensure accuracy and relevance.

Editable conversations: Go back and edit previous prompts or LLM responses during a conversation. These edits are fed back to the model, allowing for dynamic adjustments.

How to Download gptel

Installing gptel is straightforward:

1. Using the Emacs package manager:

Run M-x package-install RET gptel RET. For a stable version, add MELPA-stable to your package sources.

2. Using straight.el:

Add (straight-use-package 'gptel) to your configuration.

(Optional) Install markdown-mode for better formatting and enhanced chat interactions.

3. Manual installation:

Clone the repository from GitHub.

Run M-x package-install-file RET on the repository directory.

4. Using Doom Emacs:

In packages.el:

    (package! gptel)

In config.el:

    (use-package! gptel
      :config
      (setq! gptel-api-key "your key"))

“your key” can be the API key itself, or (safer) a function that returns the key. Setting gptel-api-key is optional; you will be asked for a key if it’s not found.

5. Using Spacemacs:

In your .spacemacs file, add llm-client to dotspacemacs-configuration-layers:

    (llm-client :variables llm-client-enable-gptel t)
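For the package-manager route (option 1), adding MELPA-stable to your package sources could look roughly like the sketch below. The archive label "melpa-stable" is just a local name; the URL is the standard MELPA Stable address.

```elisp
;; Sketch: register MELPA Stable so that M-x package-install can find
;; tagged gptel releases. The label "melpa-stable" is a local name.
(require 'package)
(add-to-list 'package-archives
             '("melpa-stable" . "https://stable.melpa.org/packages/") t)
;; Run M-x package-refresh-contents once, then
;; M-x package-install RET gptel RET.
```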

Access Novita AI API on gptel

Integrating Novita AI’s API with gptel unlocks advanced LLM capabilities:

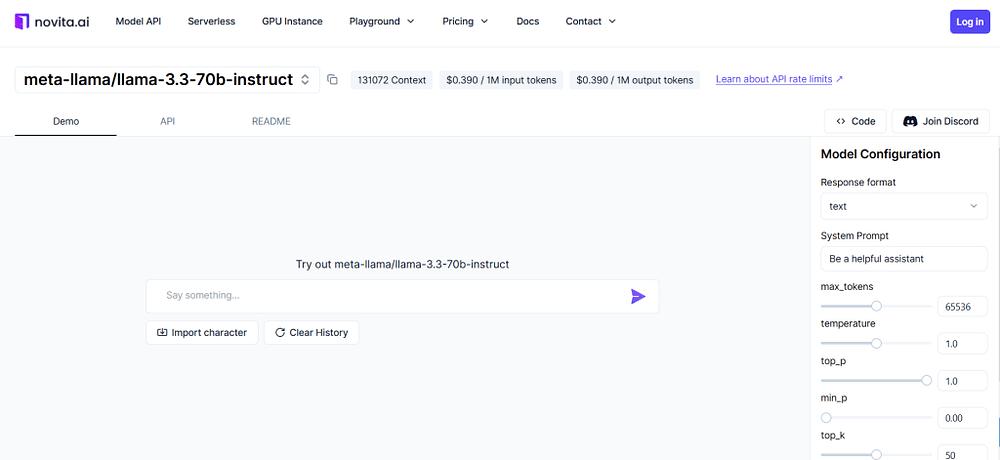

1. Test various LLMs in Novita AI’s LLM Playground at no cost.

2. Navigate to Novita AI’s key management page to retrieve the LLM API key for use with gptel.

3. Register Novita AI as a backend:

    (gptel-make-openai "NovitaAI"
      :host "api.novita.ai"
      :endpoint "/v3/openai"
      :key "your-api-key"
      :stream t
      :models '(gryphe/mythomax-l2-13b
                meta-llama/llama-3-70b-instruct
                meta-llama/llama-3.1-70b-instruct))

4. Replace "your-api-key" with your Novita AI API key.

5. Set Novita AI as the default backend:

    (setq
     gptel-model 'gryphe/mythomax-l2-13b
     gptel-backend
     (gptel-make-openai "NovitaAI"
       :host "api.novita.ai"
       :endpoint "/v3/openai"
       :key "your-api-key"
       :stream t
       :models '(gryphe/mythomax-l2-13b
                 mistralai/Mixtral-8x7B-Instruct-v0.1
                 meta-llama/llama-3-70b-instruct
                 meta-llama/llama-3.1-70b-instruct)))
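A key written directly into your init file is easy to leak through dotfile repositories. One possible alternative, sketched below, passes a function as :key so the key is looked up when it is needed. The function name `my/novita-api-key`, the environment variable NOVITA_API_KEY, and the auth-source entry for api.novita.ai are all hypothetical; adapt them to however you store secrets.

```elisp
;; Sketch: supply the Novita AI key from outside the init file.
;; `my/novita-api-key' and NOVITA_API_KEY are hypothetical names.
(require 'auth-source)

(defun my/novita-api-key ()
  "Return the Novita AI API key from the environment or auth-source."
  (or (getenv "NOVITA_API_KEY")
      ;; Falls back to an entry such as
      ;;   machine api.novita.ai login apikey password <key>
      ;; in ~/.authinfo or ~/.authinfo.gpg.
      (auth-source-pick-first-password :host "api.novita.ai")))

(with-eval-after-load 'gptel
  (setq gptel-model 'gryphe/mythomax-l2-13b
        gptel-backend
        (gptel-make-openai "NovitaAI"
          :host "api.novita.ai"
          :endpoint "/v3/openai"
          :key #'my/novita-api-key      ; a function instead of a string
          :stream t
          :models '(gryphe/mythomax-l2-13b
                    meta-llama/llama-3-70b-instruct
                    meta-llama/llama-3.1-70b-instruct))))
```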

How to Use gptel

gptel’s commands are flexible and intuitive:

1. General queries: Use M-x gptel-send to send text up to the cursor. The response will be inserted below, and you can continue the conversation seamlessly.

2. Dedicated chat buffers:

Start a new session or switch to an existing one using M-x gptel.

Use C-c RET to send prompts interactively.

Save and restore your chat sessions effortlessly, enabling consistent workflows.

3. Modify behavior dynamically: Run C-u M-x gptel-send to adjust chat parameters such as the LLM provider, backend, or system message for specific tasks.

4. Enhanced Org mode features:

Branch conversations using Org headings for structured interactions.

Use gptel-org-set-properties to declare chat settings under specific headings, creating reproducible LLM notebooks.

5. Multi-modal support:

Add text, image, or document files to the conversation context with gptel-add-file or via the transient menu. This enables richer, context-aware queries.

Manage context dynamically by including or removing regions, buffers, or files as needed.
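If you use these commands often, binding them to keys can save some typing. The sketch below is one possible arrangement; the key choices under C-c g are arbitrary examples, not gptel defaults, and `gptel-add` is assumed here as the command for adding a region or buffer to the context.

```elisp
;; Sketch: global bindings for the commands described above.
;; The keys are arbitrary examples, not gptel defaults.
(global-set-key (kbd "C-c g s") #'gptel-send)      ; send text up to point
(global-set-key (kbd "C-c g c") #'gptel)           ; open or switch to a chat buffer
(global-set-key (kbd "C-c g m") #'gptel-menu)      ; open the transient menu
(global-set-key (kbd "C-c g a") #'gptel-add)       ; add region or buffer to context
(global-set-key (kbd "C-c g f") #'gptel-add-file)  ; add a file to context

;; Optional: make dedicated chat buffers use Org mode, which the
;; Org-specific features in item 4 rely on.
(setq gptel-default-mode 'org-mode)
```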

Conclusion

By combining gptel with the Novita AI API, developers can access powerful, flexible tools for AI development. Whether you’re refining workflows or exploring new model capabilities, gptel and Novita AI’s API make the process seamless and efficient. Ready to get started? Explore the LLM Playground today!

Frequently Asked Questions

Can I use gptel for non-standard workflows?

Yes, gptel provides the gptel-request function, allowing custom workflows beyond gptel-send.
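As a rough illustration, a custom command built on gptel-request might look like the sketch below. The command name `my/gptel-summarize-region` and the system prompt are hypothetical; the callback shape (a response plus an info plist) follows gptel’s documented usage, but check the docstring of gptel-request in your installed version.

```elisp
;; Sketch: a custom command on top of `gptel-request'.
;; `my/gptel-summarize-region' is a hypothetical name.
(require 'gptel)

(defun my/gptel-summarize-region (beg end)
  "Ask the active gptel backend to summarize the text between BEG and END."
  (interactive "r")
  (gptel-request
      (buffer-substring-no-properties beg end)
    :system "Summarize the following text in one short paragraph."
    :callback
    (lambda (response info)
      (cond
       ;; A string response is the model's reply.
       ((stringp response)
        (message "Summary: %s" response))
       ;; A nil response signals failure; INFO carries the status.
       ((null response)
        (message "gptel-request failed: %s"
                 (plist-get info :status)))))))
```

Select a region and run M-x my/gptel-summarize-region; the summary is shown in the echo area instead of being inserted into the buffer.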

How do I enable auto-scrolling?

Add this to your configuration: (add-hook 'gptel-post-stream-hook 'gptel-auto-scroll)

How can I change prompt/response formatting?

Customize gptel-prompt-prefix-alist and gptel-response-prefix-alist for specific modes.
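For example, a minimal sketch that changes the prefixes used in Org chat buffers could look like this. The "@user" and "@assistant" strings are illustrative choices; any strings work.

```elisp
;; Sketch: custom prompt/response prefixes for Org chat buffers.
;; The "@user" / "@assistant" strings are illustrative choices.
(with-eval-after-load 'gptel
  (setf (alist-get 'org-mode gptel-prompt-prefix-alist) "@user\n")
  (setf (alist-get 'org-mode gptel-response-prefix-alist) "@assistant\n"))
```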

Does gptel support media?

Yes, gptel supports multi-modal models for handling text, images, and documents.

How can I configure chat buffer-specific options?

You can set chat parameters like the model, backend, and directives on a per-buffer basis using the transient menu. Call C-u M-x gptel-send to access these settings interactively.

What is the transient menu, and how can I use it effectively?

The transient menu allows you to dynamically tweak gptel’s behavior, including context, input/output redirection, and query preferences. Access it by calling C-u M-x gptel-send or M-x gptel-menu. For advanced usage, consult the GitHub repository for examples and documentation.

Originally published at Novita AI

Novita AI is the All-in-one cloud platform that empowers your AI ambitions. Integrated APIs, serverless, GPU Instance — the cost-effective tools you need. Eliminate infrastructure, start free, and make your AI vision a reality.