Key Highlights

AI training: Artificial intelligence training is the process of making a computer program gain intelligence through a series of steps.

Machine learning: Making computers more intelligent by having them automatically learn models from data.

Deep learning: A machine learning method that uses multi-layer neural networks loosely modeled on the human brain.

Which GPU is better suited for AI training: the Tesla A10, which uses less power and is built for continuous data center workloads.

The downside of using the Tesla A10: it costs about $2,800 to purchase.

Advantages of GPU instances: low cost, easy expansion, and less maintenance.

Introduction

The development of artificial intelligence has increased the demand for powerful GPUs. Two top picks are NVIDIA's Tesla A10 and GeForce RTX 4090. These GPUs are great for AI tasks, including training deep neural networks and accelerating inference workloads in large data centers.

This blog compares their performance in AI training (Tesla A10 vs RTX 4090), specifically in deep learning, to help you find the best GPU for your AI needs. We consider their specifications, performance, and overall suitability for data center AI applications. The NVIDIA RTX 4090, with its high throughput and 24 GB of GDDR6X VRAM, is a strong contender for deep learning applications on GPU servers.

Overview of Artificial Intelligence training

Simply put, artificial intelligence training is the process of making a computer program gain intelligence through a series of steps, in fields such as computer vision. Repeatedly adjusting the process yields an AI model that can make decisions or perform tasks with little to no human intervention. AI model training draws on both machine learning and deep learning.

What is artificial intelligence training?

AI training is based mainly on machine learning and deep learning. It involves the following key steps:

Data collection

Data preprocessing

Model selection

Model training

Among them, data collection and preprocessing are the foundation, and model training is the core.
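The four steps above can be sketched in a few lines of plain Python. This is only an illustrative toy, not a production workflow: the synthetic dataset, the linear model, and the function names (`collect_data`, `preprocess`, `select_model`, `train`) are all assumptions made for the example.

```python
import random

def collect_data(n=200, seed=0):
    """Data collection: synthesize (x, y) pairs from y = 3x + 2 plus noise."""
    rng = random.Random(seed)
    xs = [rng.uniform(0, 100) for _ in range(n)]
    return [(x, 3.0 * x + 2.0 + rng.gauss(0, 1.0)) for x in xs]

def preprocess(data):
    """Data preprocessing: scale x into [0, 1] so gradient descent is stable."""
    x_max = max(x for x, _ in data)
    return [(x / x_max, y) for x, y in data], x_max

def select_model():
    """Model selection: a linear model y = w * x + b, parameters start at zero."""
    return {"w": 0.0, "b": 0.0}

def train(model, data, lr=0.5, epochs=2000):
    """Model training: full-batch gradient descent on mean squared error."""
    n = len(data)
    for _ in range(epochs):
        errors = [(model["w"] * x + model["b"] - y, x) for x, y in data]
        grad_w = sum(2 * e * x for e, x in errors) / n
        grad_b = sum(2 * e for e, _ in errors) / n
        model["w"] -= lr * grad_w
        model["b"] -= lr * grad_b
    return model

if __name__ == "__main__":
    data, x_max = preprocess(collect_data())
    model = train(select_model(), data)
    # Undo the scaling to read the slope in the original units.
    print("slope:", model["w"] / x_max, "intercept:", model["b"])
```

Real AI training follows the same outline, but the model has millions or billions of parameters, which is why the training step is moved onto a GPU.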

What are machine learning and deep learning?

Machine learning: An artificial intelligence training method that makes computers more intelligent by having them automatically learn models from data.

Deep learning: A machine learning method loosely modeled on the neural networks of the human brain. It learns increasingly abstract representations of data by stacking multiple layers of neural networks.

Deep learning

Deep learning is also a way for computers to learn from data automatically, so deep learning is a branch of machine learning.
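To make "multiple layers" concrete, here is a minimal sketch of a forward pass through a tiny network with one hidden layer. The weights are hand-picked, hypothetical values chosen only for illustration; in real deep learning they are learned during training, and the helper names are assumptions of this example.

```python
import math

def dense(inputs, weights, biases):
    """One fully connected layer: weighted sum of the inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Non-linearity applied between layers."""
    return [max(0.0, v) for v in values]

def sigmoid(v):
    """Squash the final score into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-v))

def forward(x, layers):
    """Pass x through every hidden layer (with ReLU), then a sigmoid output."""
    *hidden, (w_out, b_out) = layers
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    return sigmoid(dense(x, w_out, b_out)[0])

# Hypothetical weights: 2 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [-1.0, 1.0]], [0.0, 0.0]),
    ([[1.0, 1.0]], [-0.5]),
]

if __name__ == "__main__":
    print(forward([1.0, 0.0], layers))  # inputs differ: score above 0.5
    print(forward([1.0, 1.0], layers))  # inputs match: score below 0.5
```

Each layer transforms the output of the previous one, and this stacking is what the word "deep" refers to.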

The best GPU for AI training

In the model training stage, the model learns from large amounts of data to improve its prediction accuracy and generalization ability. This process usually requires accelerators such as GPUs or TPUs, along with substantial computing resources and time.

Therefore, we need a powerful GPU, such as the Tesla A10 or RTX 4090, to train AI models smoothly, especially large language models like Llama 2. With growing demand for faster and more efficient training, choosing the best GPU for AI training is crucial. The Tesla A10 and RTX 4090 are both top contenders: each has 24 GB of VRAM, which leaves room for large batch sizes and current model implementations.

Additionally, batch processing can greatly improve training throughput and should be considered when selecting the best GPU for AI training. When using two A10s within a single instance, the combined VRAM of 48 GB can hold large models like Llama-2-chat 13B, making it a cost-effective option compared to a more expensive A100-powered instance.
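A quick back-of-the-envelope check shows why 48 GB matters for a 13B-parameter model. The sketch below counts model weights only, assuming 2 bytes per parameter (FP16) and 1 GB = 1e9 bytes; the function names are hypothetical. Training needs additional memory for gradients, optimizer state, and activations, so treat the result as a lower bound.

```python
def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9

def weights_fit(num_params, vram_gb_per_gpu, num_gpus=1, bytes_per_param=2):
    """True if the weights fit in the combined VRAM of the given GPUs."""
    total_vram_gb = vram_gb_per_gpu * num_gpus
    return weight_memory_gb(num_params, bytes_per_param) <= total_vram_gb

if __name__ == "__main__":
    params_13b = 13e9
    print(weight_memory_gb(params_13b))            # FP16 weights of a 13B model
    print(weights_fit(params_13b, 24, num_gpus=1))  # one 24 GB card
    print(weights_fit(params_13b, 24, num_gpus=2))  # two 24 GB cards
```

Under these assumptions the FP16 weights of a 13B model take 26 GB, which exceeds a single 24 GB card but fits in the 48 GB of two.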

How many series of graphics cards does NVIDIA have?

NVIDIA's main product lines include the following five series, and each line corresponds to a different use case.

GeForce series: Gaming

Tesla series: Artificial intelligence training and data centers

RTX A series: Professional graphics and design

Jetson series: Robotics and embedded devices

DRIVE series: Smart cars

Which GPU is suitable for Artificial intelligence learning?

According to Tom's Hardware, the most popular graphics card in 2024 is the RTX 4090.

Ranking of the most popular GPUs

However, the main users of the RTX series of graphics cards are gamers. It was not originally designed for AI training. Many companies use the Tesla A10 for AI model training instead.

Tesla A10 vs RTX 4090

So which one wins, the Tesla A10 or the RTX 4090?

Price comparison: According to Amazon listings, the Tesla A10 costs about $2,800, while the RTX 4090 is priced at $1,600. The RTX 4090 wins on price.

Graphics architecture: The Tesla A10 is based on the Ampere architecture, while the RTX 4090 uses the newer Ada Lovelace architecture and is also optimized for gaming with ray tracing and DLSS support.

Computing power: The Tesla A10 supports FP32, FP16, and INT8 operations and is built for sustained data center use, which makes it well suited to AI training and inference tasks. The RTX 4090 is designed for gaming first.

Power consumption comparison: The Tesla A10 has a 150 W power limit and is designed to run continuous loads. The RTX 4090 has much higher power consumption, with a rated board power of 450 W.
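The price and power points can be combined into a rough running-cost estimate. The sketch below is only an illustration: it uses the purchase prices quoted above, NVIDIA's published board power figures (150 W for the A10, 450 W for the RTX 4090), and a hypothetical electricity rate of $0.15 per kWh. It assumes the card runs at full power the whole time and ignores cooling, the host system, and performance differences.

```python
def energy_kwh(watts, hours):
    """Energy used by a device drawing `watts` continuously for `hours`."""
    return watts * hours / 1000

def total_cost(purchase_price, watts, hours, price_per_kwh):
    """Purchase price plus electricity cost over `hours` of full-load use."""
    return purchase_price + energy_kwh(watts, hours) * price_per_kwh

if __name__ == "__main__":
    hours = 1000           # hypothetical amount of training time
    rate = 0.15            # hypothetical electricity price in $/kWh
    print("Tesla A10:", total_cost(2800, 150, hours, rate))
    print("RTX 4090: ", total_cost(1600, 450, hours, rate))
```

With these assumptions, electricity is a small part of the total over 1,000 hours, so the purchase price dominates for short projects and the power draw matters more for cards that run around the clock.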

The results of Tesla A10 vs RTX 4090

After comparing the Tesla A10 with the RTX 4090, we find that the Tesla A10 is the better fit for data center AI training. It consumes far less energy and is built for continuous server workloads, although the RTX 4090 has the higher core count and raw throughput.

However, the A10 is expensive and its use case is narrow, so it is not suitable for personal use. For some small businesses and individual developers, an RTX card is sufficient to meet their needs. Additionally, both cards offer 24 GB of memory, so they can hold similarly sized models and batches when handling massive datasets in AI training.

Besides, a newer RTX 4090 Ti may give the Tesla A10 a run for its money with its similar memory stats. The available figures are extrapolated from the Ampere-based RTX 3090 Ti, which includes 336 third-gen tensor cores. Tensor cores allow for even faster AI model training, and the projection assumes a boost clock of 1.41 GHz delivering up to 38.7 TFLOPs of FP16 tensor core performance.

It is important to note that the RTX 4090 Ti has not been released, so any Tesla A10 vs RTX 4090 Ti result is speculation based on comparison with the RTX 3090 Ti. Based on our aggregate benchmark results, the RTX 4090 Ti would be expected to outperform the Tesla A10 by a significant margin, which would make it the new benchmark for AI model training.

Tesla A10 vs RTX 4090 GPU instances

It is now popular for enterprises to use GPU instances for training AI. GPU instances are virtual GPU resources that let users train models without buying and maintaining the hardware themselves.

Why choose to use GPU instances?

- Lower cost: GPU instances do not require developers to pay large upfront hardware costs. Virtual GPU resources are billed by time and demand, so the upfront cost is much lower than buying local GPUs.


- Easy to expand: Users can choose the amount of memory and the number of processor cores they need on a single page. They do not have to worry about hardware limitations and can expand as needed.

- Less maintenance: Users of virtual GPUs bear less risk of equipment damage. In addition, if a virtual GPU becomes unavailable, it can be replaced quickly.
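Whether renting is cheaper than buying depends on how many hours you actually use the GPU. A minimal sketch of the break-even calculation follows; the $0.50 hourly rate is a hypothetical placeholder, not a quoted price, and the estimate ignores electricity, resale value, and maintenance.

```python
def break_even_hours(purchase_price, hourly_rate):
    """Hours of rental after which renting has cost as much as buying."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return purchase_price / hourly_rate

if __name__ == "__main__":
    hypothetical_rate = 0.50  # $/hour, placeholder value for illustration
    print("RTX 4090 :", break_even_hours(1600, hypothetical_rate), "hours")
    print("Tesla A10:", break_even_hours(2800, hypothetical_rate), "hours")
```

If your expected usage is well below the break-even point, an instance is the cheaper option; well above it, owning the card starts to pay off.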

How to use GPU Instances?

Step 1: Register and log in at Novita.ai.

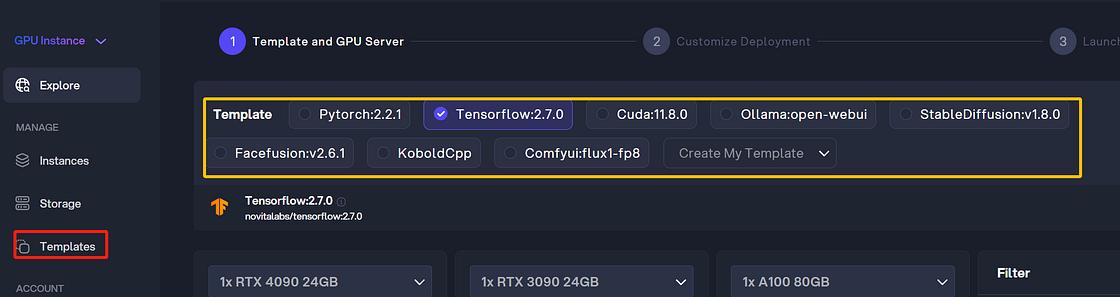

Step 2: Find the GPU Instances page.

Tutorial on using GPU cloud

Step 3: Click "Start Building now".

Step 4: Select the GPU instance that fits your needs. Novita.ai currently provides RTX 4090, RTX 3090, A100, RTX A6000, L40, and other graphics card resources.

Step 5: You can also set up templates, so the next time you use the Novita.ai platform you can reuse a template directly instead of configuring everything again.


Step 6: In addition to the GPU instance service, the platform provides extra features: GPU storage, an LLM API, and an AI graph API.
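Once an instance is running, it is worth confirming which GPU you were actually given. The sketch below assumes the instance gives you a shell with the NVIDIA driver installed, so that the standard `nvidia-smi` tool is available; the function names are hypothetical, and the script simply returns an empty list when the tool is missing.

```python
import shutil
import subprocess

def parse_gpu_lines(text):
    """Parse 'name, memory' CSV lines as printed by the nvidia-smi query."""
    gpus = []
    for line in text.strip().splitlines():
        if "," not in line:
            continue  # skip anything that is not a 'name, memory' row
        name, memory = (part.strip() for part in line.split(",", 1))
        gpus.append({"name": name, "memory": memory})
    return gpus

def list_gpus():
    """Return the GPUs visible to nvidia-smi, or [] if it is not installed."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=name,memory.total",
         "--format=csv,noheader"],
        capture_output=True, text=True, check=False,
    )
    return parse_gpu_lines(result.stdout) if result.returncode == 0 else []

if __name__ == "__main__":
    for gpu in list_gpus():
        print(gpu["name"], "with", gpu["memory"])
```

On an RTX 4090 instance you would expect a single entry whose name contains "RTX 4090" and a memory total of roughly 24 GB.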

FAQs

Is the NVIDIA GeForce RTX 4090 good for AI?

Yes. The reasons are its powerful computing power, high-capacity video memory, and optimized architecture.

Is A100 better than RTX 4090?

The A100 excels in professional environments where artificial intelligence and data processing require unmatched computing power, while the RTX 4090 excels in personal computing, delivering superior graphics and gaming experiences.

What is the best alternative to RTX 4090?

When looking for an alternative to the RTX 4090, consider availability, price, and performance. Some say the RTX 4090 is the most powerful graphics card available, while others say it may not be worth it for most gamers.

Is GeForce good for AI?

Some say NVIDIA GeForce RTX GPUs are best suited for AI PCs because they use the same technology that powers the world's leading AI innovations.

Originally published at Novita AI
