Dive into the comprehensive LLM benchmarks guide for key benchmarks and industry insights. Visit our blog for more details.

Key Highlights

Understand the purpose and importance of LLM benchmarks in machine learning

Explore the key components and types of benchmarks used in evaluating LLMs

Discover the benchmarking models for different LLM applications, such as code generation and natural language understanding

Get in-depth comparisons of popular LLM models, including GPT series and BERT variants

Learn about the role of leaderboards in benchmarking LLMs and how they influence LLM development

Explore the challenges and limitations of current benchmarks and the future of more inclusive and comprehensive benchmarks

Introduction

As more large language models (LLMs) enter the market, it becomes essential for organizations and users to efficiently explore this expanding ecosystem and identify the models that align with their specific needs. A practical approach to facilitate this decision-making process is by understanding benchmark scores.

This guide explores the notion of LLM benchmarks, discusses the most prevalent benchmarks and their components, and highlights the limitations of relying exclusively on benchmark scores as the sole indicator of a model’s performance.

What are LLM Benchmarks

An LLM benchmark is a standardized assessment tool designed to evaluate the performance of AI language models. It typically includes a dataset, a set of questions or tasks, and a method for scoring. Models are tested against these benchmarks and typically receive scores ranging from 0 to 100, which reflect their performance.

Why are They Important

Benchmarks are crucial for organizations, including product managers, developers, and users, as they offer a clear, objective measure of an LLM’s capabilities. By utilizing a uniform set of assessments, benchmarks simplify the process of comparing different models, making it easier to choose the most suitable one for specific needs.

Moreover, benchmarks are invaluable to LLM developers and AI researchers because they provide a quantitative framework to gauge what good performance looks like. Benchmark scores highlight both the strengths and weaknesses of a model. This insight enables developers to benchmark their models against competitors and make necessary enhancements. The clarity provided by well-designed benchmarks encourages transparency within the LLM community, fostering collaboration and speeding up the overall progress of language model development.

Popular LLM Benchmarks

Here’s a selection of the most commonly used LLM benchmarks, along with their pros and cons.

ARC

The AI2 Reasoning Challenge (ARC) is a question-answer (QA) benchmark specifically crafted to evaluate an LLM’s knowledge and reasoning capabilities. The ARC dataset includes 7,787 multiple-choice science questions with four options each, covering content suitable for students from 3rd to 9th grade. These questions are categorized into Easy and Challenge sets, each designed to test different types of knowledge such as factual, definitional, purposive, spatial, procedural, experimental, and algebraic.

ARC is intended to offer a more robust and challenging assessment compared to earlier QA benchmarks like the Stanford Question and Answer Dataset (SQuAD) or the Stanford Natural Language Inference (SNLI) corpus. These earlier benchmarks primarily assessed a model’s ability to identify correct answers from a given text. In contrast, ARC questions demand reasoning over distributed evidence — information necessary to answer a question is integrated throughout a passage, compelling a language model to leverage its understanding and reasoning skills rather than relying on mere memorization of facts.

Pros and Cons of the ARC Benchmark

Pros

The ARC dataset is varied and demanding, pushing AI vendors to enhance QA abilities by synthesizing information from multiple sentences, rather than merely retrieving facts. Cons

Limited to science questions, restricting its applicability across broader knowledge domains.

Cons

- The construction of the corpus is not fully transparent, and the dataset contains numerous errors.

TruthfulQA

While large language models (LLMs) can generate coherent and articulate responses, accuracy remains a challenge. The TruthfulQA benchmark addresses this by evaluating the ability of LLMs to produce truthful responses, particularly focusing on reducing the models’ tendencies to produce fabricated (“hallucinated”) answers.

LLMs may deliver inaccurate answers due to several factors: insufficient training data on specific topics, training on error-ridden data, or training objectives that inadvertently favor incorrect answers, termed “imitative falsehoods.”

The design of the TruthfulQA dataset encourages models to select these imitative falsehoods rather than truthful responses. It judges the truthfulness of an LLM’s answers based on their alignment with factual reality. The benchmark discourages non-committal answers like “I don’t know” by also assessing the informativeness of responses.

Comprising 817 questions across 38 domains, including finance, health, and politics, TruthfulQA evaluates models through two tasks. The first involves generating responses to questions, scored from 0 to 1 by human evaluators based on accuracy. The second task involves a true/false decision for a set of multiple-choice questions. The results from both tasks are combined to form the final score.

Pros and Cons of TruthfulQA

Pros

Features a varied dataset, providing a comprehensive test across multiple knowledge areas.

Actively works against the propensity of LLMs to generate false information, thus promoting accuracy.

Cons

- The focus on general knowledge means it might not effectively gauge truthfulness in specialized fields.

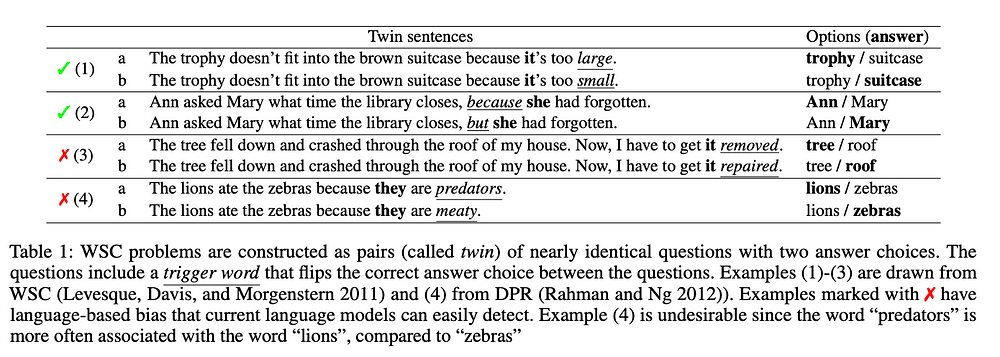

WinoGrande

WinoGrande is a benchmark designed to assess the commonsense reasoning capabilities of large language models (LLMs) and is an extension of the Winograd Schema Challenge (WSC). It presents a series of pronoun resolution challenges, featuring pairs of nearly identical sentences that differ based on a trigger word, each requiring the selection of the correct pronoun interpretation.

The WinoGrande dataset significantly expands on the WSC with 44,000 well-crafted, crowdsourced problems. To enhance the complexity of the tasks and to minimize biases such as annotation artifacts, the AFLITE algorithm, which builds on the adversarial filtering approach used in HellaSwag, was employed.

Pros and Cons of the WinoGrande Benchmark

Pros

- Features a large, crowdsourced dataset that has been carefully curated through algorithmic intervention to ensure a higher degree of challenge and fairness.

Cons

- Despite efforts to eliminate bias, annotation artifacts — patterns that inadvertently hint at the correct answer — still exist within the dataset. The large size of the corpus makes it challenging to completely eradicate these biases with AFLITE.

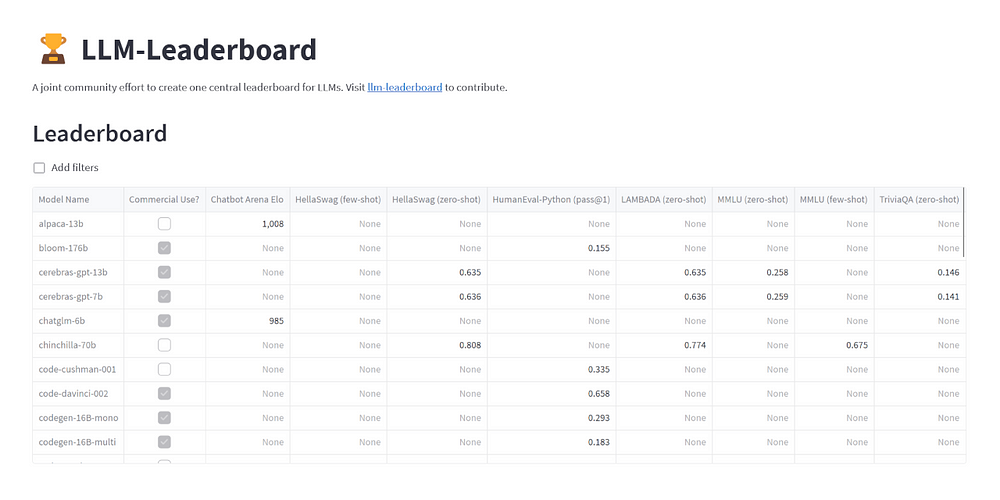

What are LLM leaderboards?

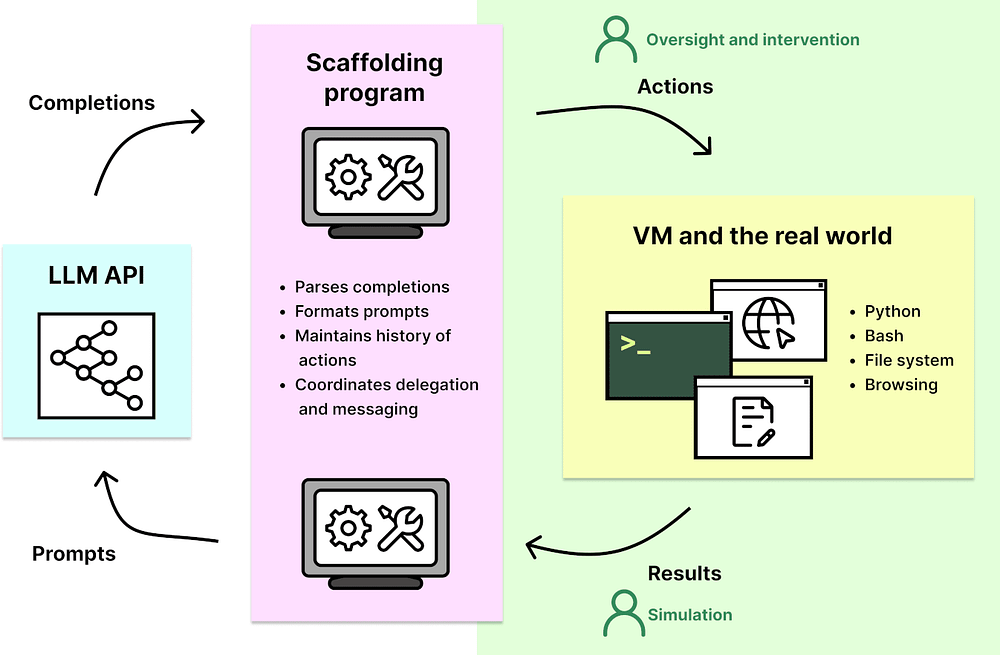

While understanding the implications of various benchmarks for an LLM’s performance is crucial, it’s equally important to compare how different models stack up against each other to identify the most suitable one for specific needs. This is where LLM leaderboards become valuable.

An LLM leaderboard is a published ranking that lists the performance of various language models across specific benchmarks. Benchmark developers often maintain their own leaderboards, but there are also independent leaderboards that provide a broader evaluation by comparing models across multiple benchmarks.

A prime example of such independent leaderboards can be found on HuggingFace, which assesses and ranks a diverse range of open-source LLMs based on six major benchmarks: ARC, HellaSwag, MMLU, TruthQA, WinoGrande, and GSM8K. These leaderboards offer a comprehensive overview of model capabilities, facilitating informed decisions when choosing a language model.

How Leaderboards Influence LLM Development

Leaderboards have a significant influence on the development and improvement of LLMs. Here are some key ways in which leaderboards impact LLM development:

Performance Comparison: Leaderboards provide a platform for developers and researchers to compare the performance of different LLMs and gain insights into their relative strengths and weaknesses.

Incentive for Improvement: Leaderboards create a competitive environment that encourages LLM developers to continuously improve their models’ performance and capabilities.

Community Collaboration: Leaderboards foster collaboration and knowledge sharing within the AI community. Developers can learn from the top-performing models and collaborate to address common challenges and improve benchmark scores.

Community-Driven Benchmarks: Leaderboards often incorporate community-driven benchmarks, allowing developers and users to contribute their own tasks and evaluations to create more comprehensive and inclusive benchmarks.

Top Performing Models on Current Leaderboards

The current leaderboards showcase the top-performing models on various benchmarks. These models have demonstrated exceptional performance and capabilities in their respective language tasks. Here are some examples of top-performing models on current leaderboards:

GPT-4: GPT-4, the latest iteration of the GPT series, has consistently maintained a top position on multiple benchmarks, showcasing its advanced language generation abilities.

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs, including LLM APIs. Novita AI provides compatibility for the OpenAI API standard, allowing easier integrations into existing applications.

What are the Problems with LLM Benchmarking?

While LLM benchmarks are useful for assessing the capabilities of language models, they should be used as guides rather than definitive indicators of a model’s performance. Here’s why:

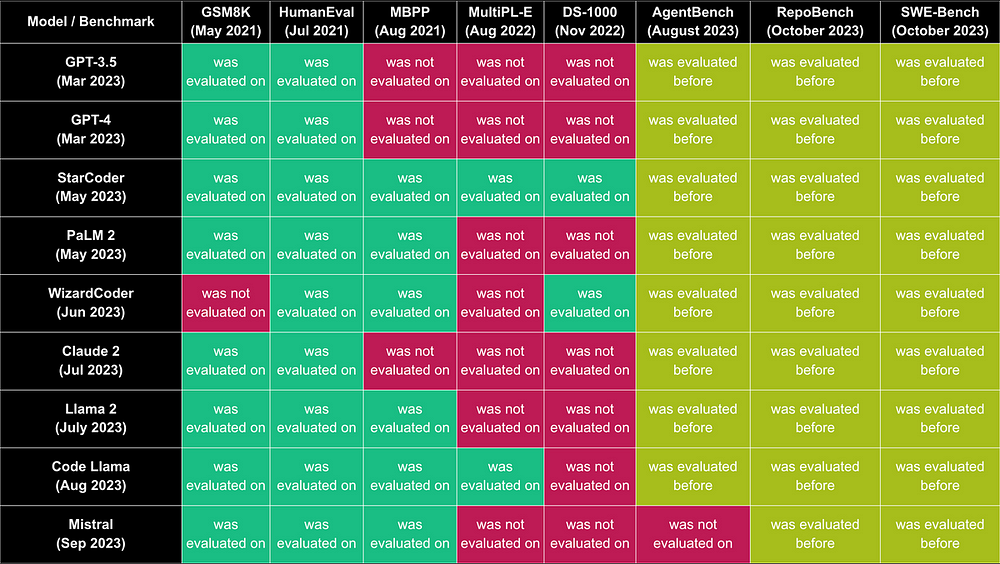

Benchmark Leakage: Models may be trained on the same data used in benchmarks, leading to overfitting where they appear to perform well on benchmark tasks without truly mastering the underlying skills. This can result in scores that do not accurately reflect a model’s real-world capabilities.

Mismatch with Real-World Use: Benchmarks often don’t capture the complexity and unpredictability of real-world applications. They test models in controlled environments, which can differ significantly from practical settings where the model will actually be used.

Limitations in Conversational AI Testing: For conversation-based LLMs, benchmarks like MT-Bench may not fully represent the challenges of real conversations, which can vary widely in length and complexity.

General vs. Specialized Knowledge: Benchmarks typically use datasets with broad general knowledge, making it difficult to gauge a model’s performance in specialized domains. Thus, the more specific a use case, the less relevant a benchmark score might be.

Conclusion

In conclusion, understanding and utilizing LLM benchmarks are crucial for evaluating and improving language models. These benchmarks provide a standardized framework for comparison and development, driving innovation and progress in the field of natural language processing. By delving into the nuances of different benchmark types, metrics, and models, researchers and developers can enhance the performance and applicability of LLMs. Despite challenges like bias and fairness, the future promises more inclusive and comprehensive benchmarks, integrating real-world scenarios and ethical considerations. Stay informed about emerging trends and actively contribute to the evolution of LLM benchmarking practices for a more advanced and ethically sound AI landscape.

Frequently Asked Questions

How often do benchmarks get updated, and why?

Benchmarks are updated periodically to reflect evolving standards and advancements in machine learning. As new models and techniques emerge, benchmarks need to be updated to provide accurate evaluations and keep pace with the latest developments in the field.

Originally published at novita.ai

novita.ai, the one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation,cheap pay-as-you-go , it frees you from GPU maintenance hassles while building your own products. Try it for free.