Unlock the potential of Large Language Model (LLM) APIs for advanced text generation and analysis. Let us guide you through harnessing the power of LLM APIs for your business needs.

Introduction

In recent years, the fluency of Large Language Models (LLMs) in generating text has reached unprecedented levels. These models, accessed through APIs, serve as a bridge for incorporating them into various applications. The beauty of this approach is that companies no longer require extensive computational resources for training or running LLMs. Instead, developers can utilize these APIs to streamline workflows, leading to innovative products across diverse industries.

As LLMs continue to advance, ensuring equitable access to these innovations becomes increasingly important. While the models themselves are impressive, their true power lies in the simplicity of accessing their outputs.

What are LLM APIs?

LLM APIs have revolutionized the digital realm, offering unprecedented computational power for text manipulation, analysis, and generation. These Application Programming Interfaces (APIs) act as bridges, facilitating smooth interactions between software systems and Large Language Models (LLMs).

If you’ve been hearing about free LLM APIs, you might be curious about how they work. Essentially, these complimentary interfaces provide developers with initial access to LLMs at no cost. It’s like getting a sneak peek. However, there’s often a catch: the free tier comes with limitations, typically in terms of LLM tokens, which are the smallest units of text the model can process or generate. Each token contributes to the overall usage quota, which might be restrictive in the free tier. It’s akin to having only a few chips when you’re craving the entire bag.

Novita AI LLM offers uncensored, unrestricted conversations through its Inference APIs. According to Novita AI, the LLM Inference API combines low pricing and scalable models with stable service and response latency under 2 seconds.

Get more information in our blog: Novita AI LLM Inference Engine: the largest throughput and cheapest inference available

Understanding LLM Tokens

Understanding LLM tokens is crucial. These tokens can range from single characters to entire words, and they accumulate with every request you send through an LLM API, counting both the text you submit and the text the model generates. Exceeding your token limit can result in unexpected expenses, so efficient management is key. Tokens are akin to gasoline; running out unexpectedly would be less than ideal, right?
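To make the idea of a token budget concrete, here is a minimal sketch of tracking estimated usage against a quota. The ratio of roughly four characters per token is only a rule of thumb for English text and is an assumption here; real counts come from the provider's tokenizer. The names `estimate_tokens` and `TokenBudget` are hypothetical.

```python
import math


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Rough token estimate; a real tokenizer will give different counts."""
    if not text:
        return 0
    return math.ceil(len(text) / chars_per_token)


class TokenBudget:
    """Tracks estimated token usage against a fixed quota (hypothetical helper)."""

    def __init__(self, quota: int) -> None:
        self.quota = quota
        self.used = 0

    def can_afford(self, text: str) -> bool:
        """Return True if the text still fits in the remaining budget."""
        return self.used + estimate_tokens(text) <= self.quota

    def charge(self, text: str) -> int:
        """Record usage for the text and return the remaining budget."""
        cost = estimate_tokens(text)
        if self.used + cost > self.quota:
            raise RuntimeError("token quota would be exceeded")
        self.used += cost
        return self.quota - self.used
```

Checking `can_afford` before each request is one way to avoid running out of gasoline halfway through a job.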

Now, let’s delve into the technical aspect for a moment. Interacting with an LLM API involves making HTTP requests, usually with a JSON body. While it might sound complex, it’s quite straightforward once you’re accustomed to it. Your request specifies the input and any parameters, such as the model’s temperature, which affects the randomness of the output. A higher temperature can lead to more creative, but potentially less coherent, text, while a lower temperature makes the output more predictable and focused on the most likely tokens.
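As an illustration, the sketch below builds such a request with Python's standard library. The URL, the model name, and the field names (`prompt`, `temperature`, `max_tokens`) are placeholders chosen for this example and are assumptions; check your provider's documentation for the actual endpoint and schema.

```python
import json
import urllib.request


def build_completion_request(
    prompt: str,
    api_key: str,
    temperature: float = 0.7,
    url: str = "https://api.example.com/v1/completions",  # placeholder endpoint
    model: str = "example-model",  # placeholder model name
) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for a text completion."""
    payload = {
        "model": model,
        "prompt": prompt,
        "temperature": temperature,  # higher values give more random output
        "max_tokens": 128,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )
```

Passing the returned object to `urllib.request.urlopen` would send it; the response body would then typically be parsed with `json.loads`.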

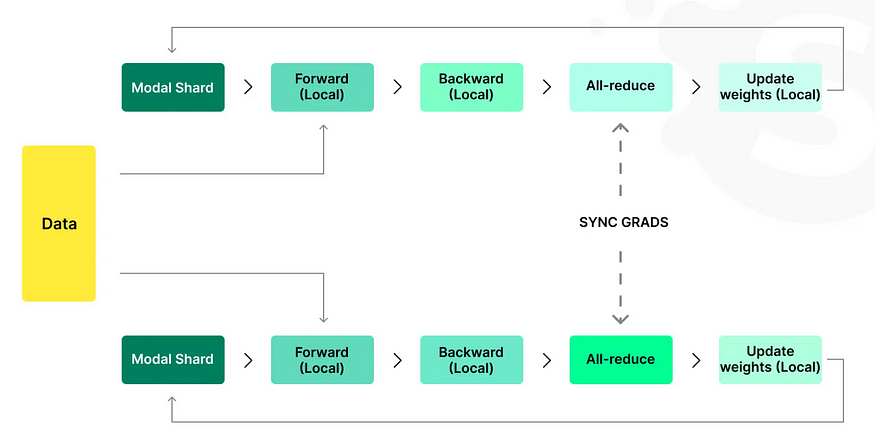

Introduction to Autoregressive LLMs

Autoregressive LLMs introduce an additional layer of complexity. Rather than producing an entire output in a single pass, autoregressive models generate text one token at a time, with each new token conditioned on the tokens that came before it. This typically results in more nuanced and coherent text. However, this approach comes with a trade-off: increased processing time. Because each token’s prediction relies on its predecessors, generation time grows with the length of the output. Nevertheless, the quality of the output usually justifies the wait, especially when applications require contextual understanding or human-like text generation.
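The toy sketch below shows the shape of an autoregressive loop together with temperature-scaled sampling. The score table `TOY_LOGITS` is invented for illustration, and it conditions only on the previous token to stay small; a real LLM computes its scores from the whole preceding context.

```python
import math
import random

# Hypothetical next-token scores; a real model computes these from the full context.
TOY_LOGITS = {
    "<start>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 2.0, "dog": 1.5},
    "a": {"cat": 1.0, "dog": 1.0},
    "cat": {"sat": 2.0, "<end>": 0.5},
    "dog": {"sat": 1.0, "<end>": 1.0},
    "sat": {"<end>": 3.0},
}


def softmax_with_temperature(logits, temperature):
    """Turn scores into probabilities; low temperature sharpens the distribution."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = {tok: score / temperature for tok, score in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(s - peak) for tok, s in scaled.items()}
    total = sum(exps.values())
    return {tok: e / total for tok, e in exps.items()}


def generate(temperature=1.0, max_tokens=10, seed=0):
    """Sample one token at a time, feeding each choice back in as context."""
    rng = random.Random(seed)
    tokens = ["<start>"]
    for _ in range(max_tokens):
        probs = softmax_with_temperature(TOY_LOGITS[tokens[-1]], temperature)
        choices, weights = zip(*probs.items())
        next_token = rng.choices(choices, weights=weights, k=1)[0]
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens[1:]
```

The loop cannot pick a token until the previous one is known, which is why generation time grows with output length.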

When considering various LLM APIs, differences often arise in the types of models available, pricing structures, and specific features offered. Some APIs cater to specific industries like healthcare or finance with specialized options, while others provide multi-language support. Ultimately, the choice depends on your specific needs.

Evolution of LLM APIs

The progression of LLM APIs marks a substantial leap forward in AI and natural language processing. Initially, language models were constrained in their scope and complexity, grappling with context comprehension and producing text that sounded natural. However, improvements in machine learning algorithms, computational capabilities, and data accessibility have propelled LLMs to greater sophistication.

Presently, cutting-edge models like GPT-4 from OpenAI and LLaMA from Meta are leading the charge in artificial intelligence. They excel at a multitude of language-related tasks, exhibiting remarkable accuracy and human-like fluency. Their integration across various industries is reshaping how businesses engage with both data and clientele. Key features defining the current landscape of LLM APIs include:

Profound contextual comprehension.

Capacity to generate creative and coherent content.

Flexibility in adapting to diverse languages and dialects.

The trajectory suggests that LLM APIs will increasingly offer personalized and contextually-aware interactions, further bridging the gap between language processing and human communication styles.

Core Components of LLM APIs

Exploring the fundamental components of LLM APIs offers valuable insights into their capabilities, unveiling their immense potential across diverse applications. This section delves into the architecture and critical attributes that characterize LLM APIs, elucidating how they operate to process and generate language at an advanced level.

Architecture Overview

The architecture of large language model APIs, like the OpenAI API, is intricate and multi-dimensional. It’s engineered to manage the complexities of human language, ensuring the delivery of nuanced responses. At its essence, the architecture generally comprises the following components, creating a resilient tech stack for generative AI:

Deep Neural Networks: Frequently in the form of transformer models, these networks serve as the core of LLM APIs. Their primary function is to comprehend language context and produce responses.

Data Processing Layer: This layer manages the preprocessing of input data and the post-processing of model outputs, encompassing tasks like tokenization, normalization, and other linguistic processing methods.

Training Infrastructure: To train these models on extensive datasets, a strong infrastructure is necessary, typically involving high-performance computing resources and sophisticated algorithms to facilitate efficient learning.

API Interface: Serving as the portal for user interaction with the LLM, the API interface determines the process of making requests, receiving data, and structuring responses.

Security and Privacy Protocols: Due to the sensitive nature of data, LLM APIs incorporate strong security and privacy protocols to safeguard user data and maintain compliance with regulations.

Scalability and Load Management: Ensuring consistent performance amidst fluctuating loads is crucial for large language models. Thus, their architecture should feature scalability solutions and load-balancing mechanisms to handle varying demands effectively.

Key Features and Capabilities

LLM APIs boast a diverse array of features and capabilities, empowering them as formidable tools for natural language processing:

Contextual Understanding: These APIs excel at maintaining context throughout conversations, facilitating coherent and relevant interactions.

Multi-Lingual Support: Many LLM APIs demonstrate proficiency in handling multiple languages, making them versatile assets for global applications.

Customizability: They offer the flexibility to be fine-tuned or tailored to specific domains or industries, enhancing their accuracy and applicability in specialized contexts.

Content Generation: LLMs exhibit the ability to generate original content, ranging from articles to emails, based on given prompts.

Sentiment Analysis: Capable of analyzing text for sentiment, they provide valuable insights for customer service and market analysis.

Language Translation: Advanced models deliver high-quality translation services, effectively bridging language gaps in real-time.

Question Answering: LLM APIs adeptly answer questions, furnish information, and aid in decision-making processes.

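Continuous Improvement: Many providers release updated model versions trained on newer data, so capabilities improve over time and adapt to evolving language trends. A deployed model version does not usually learn from individual requests.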
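Contextual understanding in practice usually means the application resends the relevant conversation history with each request. The sketch below keeps a trimmed history; the `role`/`content` message shape is a convention used by many chat-style APIs and is an assumption here, as are the `Conversation` class and its character-based budget.

```python
class Conversation:
    """Keeps a system prompt plus recent turns within a rough size budget."""

    def __init__(self, system_prompt: str, max_chars: int = 2000) -> None:
        self.system = {"role": "system", "content": system_prompt}
        self.turns = []
        self.max_chars = max_chars

    def add(self, role: str, content: str) -> None:
        """Append a turn, then drop the oldest turns if the history is too long."""
        self.turns.append({"role": role, "content": content})
        self._trim()

    def _trim(self) -> None:
        # Always keep the most recent turn, even if it alone exceeds the budget.
        while len(self.turns) > 1 and self._size() > self.max_chars:
            self.turns.pop(0)

    def _size(self) -> int:
        return sum(len(turn["content"]) for turn in self.turns)

    def messages(self) -> list:
        """Return the message list to send with the next request."""
        return [self.system] + self.turns
```

A token-based budget would be more precise than counting characters; characters are used here only to keep the example dependency-free.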

How to set up and use LLM APIs

Adopting LLM APIs represents a strategic advancement toward sophisticated language processing capabilities. This section outlines the primary setup steps and underscores essential considerations in selecting the most fitting large language model API for your business.

Initial Setup and Configuration

Start by outlining your requirements for an LLM API, including the language tasks you need help with, such as content generation, customer interaction, or analytics.

It’s crucial to assess the existing technical infrastructure to ensure compatibility with an LLM API, encompassing server capabilities and network readiness. The selected LLM API should harmonize with both linguistic necessities and technical expectations, offering comprehensive language support and tailored functionalities.

Subsequently, the company should initiate the registration process with the chosen API provider to obtain access credentials, a standard procedure to commence utilizing their services. Setting up the API involves configuring language preferences, input-output formats, and other pertinent parameters.
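One common way to handle the credentials and parameters described above is to read them from environment variables so that keys never appear in source code. The variable names (`LLM_API_KEY`, `LLM_BASE_URL`, and so on) and the default values below are assumptions made for this sketch.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class LLMConfig:
    api_key: str
    base_url: str
    model: str
    temperature: float = 0.7
    max_tokens: int = 256


def load_config(env=None) -> LLMConfig:
    """Build a config from environment variables (names are hypothetical)."""
    env = os.environ if env is None else env
    api_key = env.get("LLM_API_KEY", "")
    if not api_key:
        raise ValueError("LLM_API_KEY is not set")
    return LLMConfig(
        api_key=api_key,
        base_url=env.get("LLM_BASE_URL", "https://api.example.com/v1"),
        model=env.get("LLM_MODEL", "example-model"),
        temperature=float(env.get("LLM_TEMPERATURE", "0.7")),
        max_tokens=int(env.get("LLM_MAX_TOKENS", "256")),
    )
```

Failing fast when the key is missing tends to be easier to diagnose than an authentication error returned later by the API.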

How to Choose the Right LLM API

Choosing the right API for a large language model is pivotal for achieving language processing objectives. Companies must be aware of key considerations when making this decision:

Performance and Accuracy: The performance, response accuracy, and speed of different APIs are crucial factors. Conducting a pilot test can offer valuable insights into their effectiveness.

Customization and Flexibility: Assess whether the API provides customization options. Some APIs enable model training on specific datasets or tuning for specialized tasks.

Scalability: Evaluate the API’s capacity to handle varying levels of demand. It’s essential to select an API that can scale according to business needs.

Support and Community: Choose APIs with dependable support and an active user community. This can be vital for gaining insights, sharing best practices, and staying updated with developments.

Language and Feature Set: Confirm that the API supports languages and dialects relevant to your audience. Additionally, review the feature set to ensure it meets your language processing requirements.
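A pilot test like the one mentioned under performance and accuracy can start very small. The sketch below times a set of prompts and applies a caller-supplied acceptance check; `call_model` and `is_acceptable` are hypothetical callables that you would provide for each API under evaluation.

```python
import statistics
import time


def run_pilot(call_model, prompts, is_acceptable):
    """Measure latency and pass rate for one candidate API over a prompt set."""
    latencies = []
    passed = 0
    for prompt in prompts:
        start = time.perf_counter()
        answer = call_model(prompt)
        latencies.append(time.perf_counter() - start)
        if is_acceptable(prompt, answer):
            passed += 1
    return {
        "requests": len(prompts),
        "pass_rate": passed / len(prompts) if prompts else 0.0,
        "median_latency_s": statistics.median(latencies) if latencies else 0.0,
        "max_latency_s": max(latencies, default=0.0),
    }
```

Running the same prompts and the same acceptance check against each candidate keeps the comparison fair.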

Integrating with Existing Systems

Integrating LLM APIs with existing systems entails a strategic process aimed at ensuring compatibility, efficiency, and optimal performance.

Assessment and Planning: Begin by conducting a thorough evaluation of your systems to determine the most suitable approach for integrating the LLM API. Plan the integration to complement and enhance your current operations effectively.

Compatibility Check: It’s essential to verify that company systems are technically compatible with the LLM API. This involves assessing software compatibility, data formats, and network requirements to guarantee seamless integration.

Modular Integration: Adopting a modular integration approach facilitates smoother integration, minimizing disruptions to existing systems and streamlining updates and maintenance processes.

Data Synchronization: Establish robust mechanisms for data exchange between existing systems and the LLM API to ensure data integrity and consistency throughout the integration process.
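The modular approach can be sketched as a thin interface between your application and whichever provider sits behind it. All class names below are hypothetical; the point is only that application code depends on the interface, not on a specific vendor client.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """Anything that can turn a prompt into text."""

    def generate(self, prompt: str) -> str: ...


class EchoGenerator:
    """Stand-in backend for tests; a real one would call an LLM API."""

    def generate(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class TicketSummarizer:
    """Application code that knows nothing about the concrete provider."""

    def __init__(self, generator: TextGenerator) -> None:
        self._generator = generator

    def summarize(self, ticket_text: str) -> str:
        prompt = f"Summarize this support ticket in one sentence:\n{ticket_text}"
        return self._generator.generate(prompt)
```

Swapping providers then means writing one new class with a `generate` method, while `TicketSummarizer` and the rest of the system stay unchanged.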

Security and Privacy Concerns

Responsible LLM API integration requires companies to prioritize user privacy and data security. Adhering to legal requirements fosters trust and integrity in their relationships with users.

Understanding Data Security in API Interactions

Data security encompasses safeguarding both the data transmitted between the API and your systems and the data processed by the LLM API.

Data Encryption: It’s critical to encrypt all data transmitted to and from the API of a large language model. Employing secure protocols such as HTTPS is vital for safeguarding data during transit.

Access Controls: Implementing stringent access controls can restrict interactions with the API to authorized parties and specific conditions. This entails effectively managing authentication and authorization mechanisms. For example, requiring an API key as a secure token can ensure authorized access.

Data Anonymization: Companies should anonymize the data they send to the LLM API. Removing or obfuscating sensitive information can greatly mitigate privacy risks.

Regular Security Audits and Compliance Checks: Conducting routine security audits aids in evaluating the resilience of your systems. Moreover, ensure adherence to pertinent data protection laws and standards based on your region and industry.

Vendor Security Assessment: Evaluate the security measures and policies of the vendor when utilizing a third-party LLM API. Understanding their approach to data security is paramount.
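Two of the measures above, encryption in transit and data anonymization, can be enforced with a few lines before any request leaves your system. This is a minimal sketch: the regular expressions below catch only simple email and phone patterns and are assumptions for illustration, not a complete anonymization solution.

```python
import re

# Deliberately simple patterns; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def require_https(url: str) -> str:
    """Refuse to use an endpoint that is not served over HTTPS."""
    if not url.lower().startswith("https://"):
        raise ValueError("LLM API endpoint must use HTTPS")
    return url
```

For example, `redact("Contact jane.doe@example.com")` yields `"Contact [EMAIL]"`, so the address never reaches the third-party API.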

Best Practices for Maintaining User Privacy

Preserving user privacy holds equal significance when employing LLM APIs. Adhering to best practices helps guarantee responsible handling of user data:

Data Minimization: Only transmit the minimum information required for the LLM API to execute its function. Refrain from sharing sensitive or extraneous user data.

User Consent: Obtain explicit consent before gathering and processing user data. This is particularly critical when dealing with sensitive information. Provide transparency regarding the purposes for which their data will be utilized.

User Data Rights: Honor user rights concerning their data, including the rights to access, rectify, and erase their data.

Privacy by Design: Implement robust default privacy settings and minimize data exposure to enhance user privacy.
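Data minimization and consent checks are easy to apply at the point where a record is turned into API input. In this sketch the allow-list of fields and the function names are hypothetical; the idea is that anything not explicitly allowed is dropped by default.

```python
# Hypothetical allow-list: only these fields may be sent to the LLM API.
ALLOWED_FIELDS = frozenset({"ticket_id", "subject", "message"})


def minimize(record: dict, allowed=ALLOWED_FIELDS) -> dict:
    """Keep only the fields the LLM API needs to do its job."""
    return {key: value for key, value in record.items() if key in allowed}


def prepare_for_api(record: dict, consent_given: bool) -> dict:
    """Refuse to process data without consent, then strip extra fields."""
    if not consent_given:
        raise PermissionError("user has not consented to processing")
    return minimize(record)
```

Using an allow-list rather than a block-list means a newly added sensitive field stays private unless someone deliberately opts it in.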

How to Handle Errors

Efficient error management improves API performance, resulting in a more seamless user experience. Through the adoption of effective debugging practices, developers can enhance the reliability and efficiency of LLM APIs. These practices aid in identifying and resolving issues, thereby ensuring smooth operation within your systems.

Common Errors and Their Resolutions

Errors encountered in LLM API interactions can vary, ranging from simple configuration mistakes to more intricate issues, such as those related to data processing and model responses.

Authentication Errors commonly occur due to an incorrect API key or invalid credentials. Verifying the validity and proper configuration of the API key in the request can resolve this issue.

Data Format Errors occur when the data sent to the API does not match the expected format. Reviewing data formats and structures according to API documentation can prevent these errors.

Rate Limiting Errors occur when the number of requests exceeds the API’s allowed limit. Monitoring request rates and implementing rate-limiting handling in the code can address this issue.

Response Handling Errors occur when code misinterprets the data the API returns. Developers should ensure their code parses the response correctly and handles missing or unexpected fields.

Model-Specific Errors may arise from nuances within the language model itself, such as misunderstandings of context or generating irrelevant responses. Often, adjusting the model inputs and parameters can mitigate these issues.
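The error categories above often correspond to standard HTTP status codes, and rate limiting in particular is usually handled by retrying with increasing delays. The sketch below assumes that mapping; individual providers may use different codes, and `RateLimitError` and the function names are hypothetical.

```python
import time


class RateLimitError(Exception):
    """Raised by the caller's client code when the API reports rate limiting."""


def classify_status(status: int) -> str:
    """Map an HTTP status code to a broad error category."""
    if 200 <= status < 300:
        return "ok"
    if status in (401, 403):
        return "authentication"
    if status in (400, 422):
        return "data_format"
    if status == 429:
        return "rate_limit"
    if 500 <= status < 600:
        return "server"
    return "other"


def call_with_backoff(send, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Retry send() on rate limiting, doubling the wait after each failure."""
    for attempt in range(max_attempts):
        try:
            return send()
        except RateLimitError:
            if attempt == max_attempts - 1:
                raise  # out of attempts; let the caller decide what to do
            sleep(base_delay * 2 ** attempt)
```

Only rate-limit errors are retried here; authentication and data format errors will not fix themselves, so retrying them only wastes quota.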

How to Debug

Effectively debugging issues is essential for developers to swiftly identify and resolve problems.

Refer to API Documentation: Always consult the API documentation initially, as it often contains details about error codes and their interpretations.

Implement Logging and Monitoring: Introduce comprehensive logging and monitoring for API interactions. This provides valuable insights into error occurrences.

Test with Diverse Scenarios: Conduct tests using various input scenarios to understand API behavior and uncover potential issues.

Utilize Debugging Tools: Employ debugging tools and features offered by the API or third-party applications to trace and diagnose issues effectively.

Leverage Community and Support Forums: These platforms serve as valuable resources for solutions and advice from experienced developers.

Make Incremental Changes: When troubleshooting, implement incremental changes and thoroughly test each alteration. This aids in pinpointing the root cause of errors.
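For the logging and monitoring practice, a small decorator is often enough to get started. This sketch uses Python's standard `logging` module; the logger name `llm_client` and the decorator name `logged_call` are arbitrary choices for this example. It records timing and failures but deliberately not the prompt text, which may contain user data.

```python
import functools
import logging
import time

logger = logging.getLogger("llm_client")


def logged_call(func):
    """Log the duration and outcome of each call to the wrapped function."""

    @functools.wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            elapsed = time.perf_counter() - start
            # logger.exception records the traceback at ERROR level.
            logger.exception("%s failed after %.3fs", func.__name__, elapsed)
            raise
        elapsed = time.perf_counter() - start
        logger.info("%s succeeded in %.3fs", func.__name__, elapsed)
        return result

    return wrapper
```

Applying `@logged_call` to the function that sends requests gives a timeline of successes and failures to compare against the provider's documented error codes.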

Cost Management and Optimization

Cost management and optimization contribute to the sustainable and efficient utilization of LLM APIs. Organizations should embrace cost-effective usage strategies to streamline their spending on these technologies. Beyond financial prudence, this approach aligns the utilization of these tools with strategic objectives.

Pricing Models

LLM API pricing models vary depending on the provider and the services offered. Understanding these models is essential for budgeting and financial planning. Let’s explore the most common ones:

Pay-Per-Use: Charges are determined by the number of requests or the amount of data processed, often measured in tokens. This model suits businesses with fluctuating usage patterns.

Subscription-Based: Some providers offer plans with fixed costs for a specific number of requests or usage levels. It can be cost-effective for consistent, high-volume use.

Tiered Pricing: Providers often employ tiered pricing structures based on usage levels. Higher tiers may offer cost savings for large-scale operations.

Custom Pricing: Custom pricing models may be available for enterprise-level clients or unique use cases. They can be tailored to specific requirements and usage patterns.
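To compare these models for a given workload, it helps to put them into formulas. The sketch below is one possible formulation; every price and threshold you pass in is your own input, the tier structure (marginal pricing, where each price applies only to usage within its tier) is an assumption, and real provider plans may be structured differently.

```python
def pay_per_use_cost(tokens: int, price_per_1k: float) -> float:
    """Cost when every token is billed at a flat rate."""
    return tokens / 1000 * price_per_1k


def subscription_cost(tokens, monthly_fee, included_tokens, overage_per_1k):
    """Fixed fee plus a per-token charge for usage beyond the included amount."""
    extra = max(0, tokens - included_tokens)
    return monthly_fee + extra / 1000 * overage_per_1k


def tiered_cost(tokens, tiers):
    """Marginal tiered pricing.

    tiers is an ascending list of (upper_limit, price_per_1k); the last
    upper_limit must be None, meaning "everything above the previous tier".
    """
    if not tiers or tiers[-1][0] is not None:
        raise ValueError("the last tier must have an upper limit of None")
    cost = 0.0
    lower = 0
    for upper, price in tiers:
        if upper is None or tokens <= upper:
            return cost + (tokens - lower) / 1000 * price
        cost += (upper - lower) / 1000 * price
        lower = upper
    return cost
```

Evaluating all three functions at your expected monthly volume, and at a pessimistic and an optimistic volume, shows where one model overtakes another.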

Tips for Cost-Effective API Usage

Effective cost management while utilizing LLM APIs necessitates diligent monitoring practices, crucial for maximizing the investment’s value while maintaining expense control.

Efficient Data Utilization: Streamline the data transmitted to the API. By reducing unnecessary or redundant data requests, costs can be minimized.

Usage Monitoring: Gain insights into consumption patterns to select the most cost-effective pricing model.

Request Optimization: Implement batch processing or consolidate requests to decrease the total number of API calls made.

Performance Evaluation: Evaluate the cost-performance ratio to ensure that the API delivers value commensurate with the expenses incurred.

Stay Informed on Updates: Remain current with any alterations in pricing models or new plans, as they may offer enhanced value for your usage patterns.

Leverage Free Tiers: Take advantage of free tiers provided by many providers for development, testing, or low-volume tasks.
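Two of these tips, consolidating requests and avoiding redundant ones, can be sketched in a few lines. The names `chunked` and `CachedClient` are hypothetical, and `call_model` stands for whatever function sends a prompt to your provider. Caching identical prompts makes sense mainly when you want repeatable answers, for example at low temperature.

```python
def chunked(items, size):
    """Yield consecutive slices of at most `size` items for batch requests."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


class CachedClient:
    """Wraps a model-calling function and reuses answers for repeated prompts."""

    def __init__(self, call_model) -> None:
        self._call_model = call_model
        self._cache = {}
        self.api_calls = 0  # number of calls that actually reached the API

    def ask(self, prompt: str) -> str:
        if prompt not in self._cache:
            self.api_calls += 1
            self._cache[prompt] = self._call_model(prompt)
        return self._cache[prompt]
```

Comparing `api_calls` with the total number of `ask` calls gives a quick measure of how much the cache is saving.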

Conclusion

LLM APIs provide advanced capabilities and exceptional adaptability across various industries. This guide has emphasized the criticality of data security in this context, highlighting the necessity for robust measures. Additionally, we’ve discussed the importance of optimizing performance and effectively managing costs, essential for leveraging these technologies to their fullest potential.

As language models continue to advance, they will offer increasingly accurate and versatile applications. Businesses that integrate LLM APIs into their processes are making forward-thinking decisions.

Originally published at novita.ai

novita.ai is a one-stop platform for limitless creativity that gives you access to 100+ APIs. From image generation and language processing to audio enhancement and video manipulation, with cheap pay-as-you-go pricing, it frees you from GPU maintenance hassles while you build your own products. Try it for free.