Key Highlights

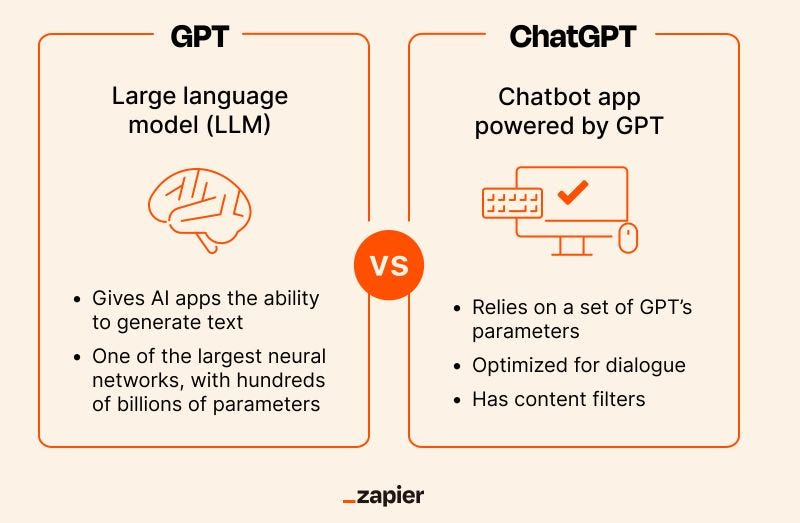

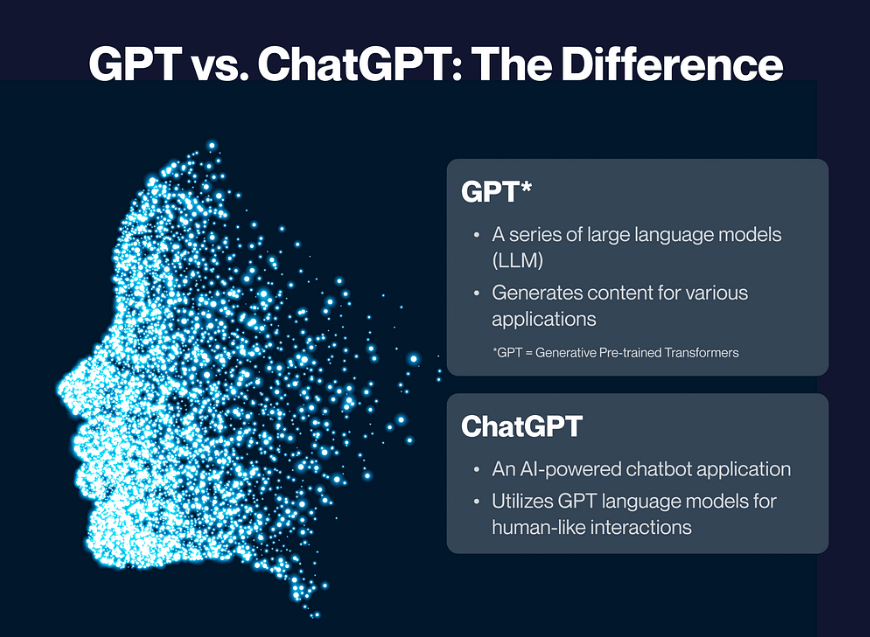

Large Language Models (LLMs) and Generative Pre-trained Transformer (GPT) models are both AI models that use natural language processing and machine learning techniques.

LLMs are trained on vast amounts of text data and can perform tasks such as summarization, translation, content generation, and chatbot support.

GPT models, such as those behind OpenAI’s ChatGPT, are a specific type of LLM that uses a transformer architecture to generate human-like text responses.

Both LLMs and GPT models have their strengths and limitations, and understanding their differences can help in choosing the right model for specific applications.

Training any LLM from scratch is an extensive process. GPT models are released pre-trained, so they can be fine-tuned for specific tasks instead of being trained from scratch.

LLMs excel in text generation and understanding, while GPT models have a specific focus on generating text responses in a conversational manner.

Introduction

Large Language Models (LLMs) and Generative Pre-trained Transformer (GPT) models are revolutionizing artificial intelligence (AI) and natural language processing (NLP). The two acronyms are often used interchangeably, but they are not the same thing: LLM names a broad category of models, while GPT names one family within it. Understanding that difference is crucial for grasping their capabilities and applications in various industries. While both excel in text generation, they differ in architectural scope and in the range of tasks they are used for. Delving deeper into these nuances sheds light on how the models are reshaping the landscape of AI and machine learning.

What Are Large Language Models (LLMs)

Large Language Models (LLMs) refer to a broad category of language models that are designed for various natural language processing tasks. GPT models fall under this category as a specific type of LLM. The term “LLM” encompasses any extensive language model used in this field.

Key Features of LLMs

LLMs possess several key features that make them powerful tools in natural language processing and AI applications. These features include:

Scalability: Large Language Models (LLMs) are notable for their scalability, with sizes ranging from smaller variants to extremely large versions like GPT-3. The size of an LLM greatly influences its capabilities.

Variety of Architectures: Most modern LLMs, GPT models included, are built on the Transformer architecture. The category itself is not tied to one design, though: language models can also be built on other architectures, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs).

Wide-ranging Applications: LLMs are adaptable to numerous NLP tasks such as sentiment analysis, text summarization, and language translation, showcasing their broad applicability in addressing diverse challenges.

Data-Driven Learning: LLMs are trained on extensive datasets, including texts from books, articles, and websites, allowing them to learn and replicate complex language patterns and nuances.

Ethical Challenges: LLMs encounter issues like biases and ethical concerns since the data they train on may reflect existing biases in human language. These challenges prompt ongoing debates about responsible AI use and the behavior of models.
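To make the idea of data-driven learning more concrete, here is a minimal sketch of a toy bigram model that learns which word tends to follow another purely by counting. It is far simpler than any real LLM, and the corpus and function names are made up for illustration, but the principle is the same: the patterns come from the training text.

```python
from collections import Counter, defaultdict

def train_bigram_model(corpus):
    """Count how often each word follows another in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts

def next_word_probabilities(counts, word):
    """Turn raw follow-up counts for `word` into probabilities."""
    followers = counts.get(word, Counter())
    total = sum(followers.values())
    return {w: c / total for w, c in followers.items()} if total else {}

# Tiny illustrative corpus (hypothetical data, not a real training set).
corpus = [
    "the model reads text",
    "the model learns patterns",
    "the data shapes the model",
]
model = train_bigram_model(corpus)
probs = next_word_probabilities(model, "the")
print(probs)
```

In this corpus "the" is followed by "model" three times and by "data" once, so the model assigns them probabilities of 0.75 and 0.25. Any bias in the corpus shows up directly in those numbers, which is also why the ethical concerns above trace back to training data.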

What Is a Generative Pre-trained Transformer (GPT)

Generative Pre-trained Transformer, commonly known as GPT, is a series of natural language processing (NLP) models created by OpenAI. These models are engineered to produce and comprehend text that resembles human language, responding to the input provided to them. GPT-3 is one of the most prominent versions: it was the largest model in the series when it was released, and it has since been followed by GPT-4.

Key Features of GPT

GPT models excel in their ability to generate coherent and contextually relevant text, a fundamental feature called text completion. Key characteristics of GPT include:

Pre-training: GPT models undergo extensive pre-training on vast datasets from the internet to learn language structures, grammar, semantics, and context.

Transformer Architecture: Built on the Transformer framework, GPT models efficiently process sequences of data. This architecture allows them to account for the context of each word in a sentence during text generation.

Fine-Tuning: Post pre-training, GPT models can be fine-tuned for specific tasks or industries, enhancing their performance in areas like language translation, text completion, or question answering.

Large-Scale: For instance, GPT-3 is a massive model with 175 billion parameters, which made it one of the largest language models at the time of its release. Its extensive size significantly boosts its capability for text generation.

Human-Like Text Generation: Known for producing text closely mimicking human writing, GPT models are adept at composing essays, answering queries, and even crafting poetry, often making it difficult to distinguish between human and machine output.
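The way the Transformer architecture accounts for context can be sketched with a stripped-down version of causal self-attention, the mechanism GPT-style models use so that each position only looks at itself and earlier positions. This is a simplified illustration, not OpenAI's implementation: it uses a single head, skips the learned query, key, and value projections, and runs on made-up two-dimensional vectors.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def causal_self_attention(queries, keys, values):
    """Scaled dot-product attention where position i only sees positions <= i."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for i, q in enumerate(queries):
        # Score the current position against itself and earlier positions only.
        scores = [
            sum(qk * kk for qk, kk in zip(q, keys[j])) / math.sqrt(dim)
            for j in range(i + 1)
        ]
        weights = softmax(scores)
        # Weighted average of the visible value vectors.
        out = [
            sum(w * values[j][d] for j, w in enumerate(weights))
            for d in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors (made-up numbers), used as queries, keys, and values.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = causal_self_attention(x, x, x)
```

The first token can only attend to itself, so its output equals its own value vector, while the third token mixes information from all three positions. Restricting attention to earlier positions is what lets the model generate text one token at a time.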

Comparative Analysis: LLM vs. GPT

Now that we have a solid grasp of what GPT and LLM entail, let’s proceed with a comparative analysis to examine the differences and similarities between GPT and LLM.

Training Data and Scale

GPT

GPT models are distinguished by their large scale. GPT-3, for instance, was reportedly pre-trained on about 570GB of diverse text data such as internet text, books, and articles. This vast amount of training data is crucial for its advanced language generation capabilities.

LLM

LLMs cover a wide spectrum of models varying in scale and data used for training. They range from smaller models like GPT-2, which has 1.5 billion parameters, to much larger ones like GPT-3, with 175 billion parameters. The training data for LLMs is generally similar to GPT’s but varies according to each model’s specific design and objectives.

Key Difference

The main difference in training data and scale is that GPT-3 represents a specific instance within the broader category of LLMs, positioned at the higher end of the scale spectrum.
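A quick back-of-the-envelope calculation shows what the parameter counts quoted above mean in practice. The sketch below assumes each weight is stored as a 16-bit number (2 bytes), which is a common choice but an assumption here, and it counts only the weights themselves, not the extra memory needed to train or run the model.

```python
GPT2_PARAMS = 1.5e9        # parameter count quoted in the text above
GPT3_PARAMS = 175e9        # parameter count quoted in the text above
BYTES_PER_PARAM_FP16 = 2   # assumption: weights stored at 16-bit precision

def weight_memory_gb(num_params, bytes_per_param=BYTES_PER_PARAM_FP16):
    """Approximate size of the weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bytes_per_param / 1e9

scale_ratio = GPT3_PARAMS / GPT2_PARAMS
gpt2_gb = weight_memory_gb(GPT2_PARAMS)
gpt3_gb = weight_memory_gb(GPT3_PARAMS)

print(f"GPT-3 has about {scale_ratio:.0f}x the parameters of GPT-2")
print(f"Weights at 16-bit precision: {gpt2_gb:.0f} GB vs {gpt3_gb:.0f} GB")
```

Under these assumptions GPT-3 has roughly 117 times as many parameters as GPT-2, and its weights alone would take about 350 GB compared with about 3 GB.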

Architecture and Functionality

GPT

GPT models utilize the Transformer architecture, which is adept at processing data sequences, making it highly effective for various NLP tasks. These models are particularly renowned for text generation and completion.

LLM

LLMs as a category are not tied to a single architecture. Most current LLMs are Transformer-based, but language models have also been built on RNNs and CNNs, with the design chosen for scalability and flexibility depending on the model’s goals. LLMs support a broader range of NLP tasks beyond text generation.

Key Difference

The critical distinction in architecture and functionality is that GPT models are exclusively built on the Transformer architecture and are primarily recognized for their text generation prowess, whereas the LLM category is not tied to one architecture, even though most current LLMs are also Transformer-based, and covers a broader scope of applications.
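Text generation and completion in a GPT-style model boils down to a loop: score the possible next tokens, pick one, append it, and repeat. The sketch below shows that loop with greedy decoding. The score table and the `toy_next_token_scores` function are hypothetical stand-ins; in a real GPT model the scores come from the Transformer and depend on the whole preceding sequence.

```python
# Hypothetical next-token scores; a real GPT model computes these with a Transformer.
TOY_SCORES = {
    "<start>": {"language": 0.9, "models": 0.1},
    "language": {"models": 0.8, "language": 0.2},
    "models": {"generate": 0.7, "<end>": 0.3},
    "generate": {"text": 0.9, "models": 0.1},
    "text": {"<end>": 1.0},
}

def toy_next_token_scores(tokens):
    """Stand-in for a model: here the scores depend only on the last token."""
    return TOY_SCORES[tokens[-1]]

def generate(prompt_tokens, max_new_tokens=10):
    """Greedy autoregressive decoding: append the best-scoring token, then repeat."""
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):
        scores = toy_next_token_scores(tokens)
        best = max(scores, key=scores.get)
        if best == "<end>":
            break
        tokens.append(best)
    return tokens

completion = generate(["<start>"])
print(" ".join(completion[1:]))
```

Starting from the `<start>` token, the loop produces "language models generate text" and then stops at the end marker. Production systems usually sample from the scores instead of always taking the top one, which gives more varied output.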

Use Cases and Applications

GPT

GPT models, such as GPT-3, are acclaimed for producing text that closely resembles human writing, used in content creation, answering questions, language translation, chatbots, and creative writing. GPT-3 has shown exceptional proficiency in understanding and generating natural language.

LLM

Being a more extensive category, LLMs find use in various applications such as sentiment analysis, text summarization, language translation, text classification, and more. They can be customized for particular sectors like healthcare, finance, and customer service, addressing industry-specific needs.

Key Difference

While GPT models are highly valued for their text generation skills, LLMs are utilized for a wider array of NLP tasks, highlighting their versatility.

Ethical and Societal Implications

GPT

The large-scale use of GPT models has sparked ethical debates over biases, misinformation, and potential misuse, particularly with GPT-3’s ability to produce human-like text, raising questions about AI’s responsible use in content creation.

LLM

Ethical concerns with LLMs also involve issues of bias and privacy, extending to the responsible use of AI across different applications. Given their extensive use across various industries, it’s crucial to consider ethical issues tailored to each application’s specific context.

Key Difference

The ethical and societal implications associated with GPT models and LLMs are similar, with both raising concerns about biases and responsible AI usage. The specific concerns may vary based on the application and scale of the model.

Existing Applications of LLM and GPT in Various Industries

Large Language Models (LLMs) and Generative Pre-trained Transformer (GPT) models have found numerous applications in various industries. The sections below look at each in turn.

Existing Applications of LLM

In recent years, numerous large language models have shown remarkable capabilities in a variety of natural language processing tasks. Here are some prominent examples:

BERT (Bidirectional Encoder Representations from Transformers): Created by Google, BERT is a pre-trained transformer model known for its proficiency in understanding contextual nuances. It has set new benchmarks in sentiment analysis, question-answering, and named entity recognition.

RoBERTa (Robustly Optimized BERT Pretraining Approach): An enhanced version of BERT developed by Facebook, RoBERTa utilizes advanced pre-training methods and larger datasets, which have led to superior results across multiple benchmarks.

GPT-2, GPT-3, and GPT-4 (Generative Pre-trained Transformer): Developed by OpenAI, the GPT series of models are powerful language models that excel at generating human-like text. They are pretrained on vast amounts of text data and can be fine-tuned for various applications, such as conversation, translation, and summarization.

ALBERT (A Lite BERT): This streamlined version of BERT employs parameter-sharing techniques to reduce the total number of parameters, which conserves memory and computational resources while maintaining robust performance.

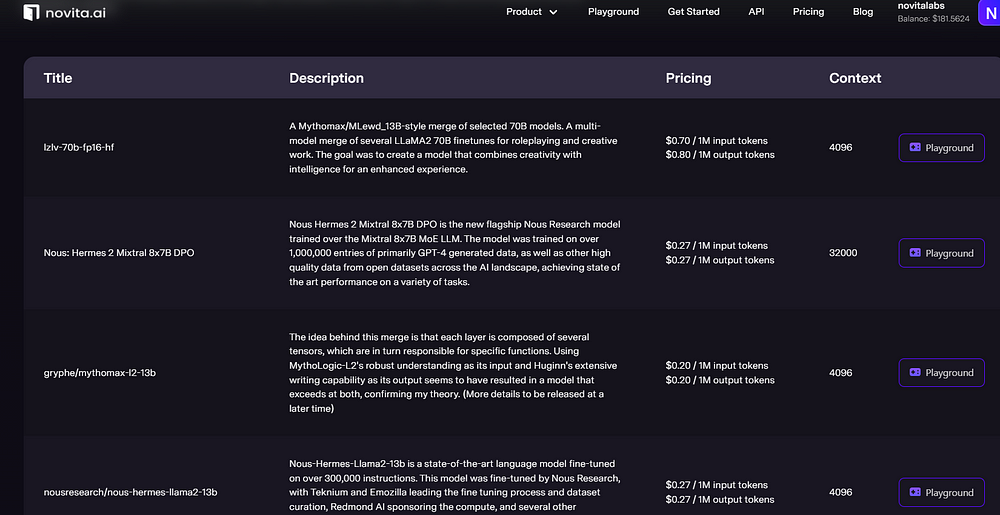

Chat-completion by Novita.ai: These LLM chat APIs let you hold conversations on any topic of your choice. They are described as unrestricted, rule-free, and uncensored.
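The parameter sharing mentioned for ALBERT above is easy to illustrate with a rough count. The sketch below compares a stack of layers that each have their own weights with a stack that reuses one set of weights at every depth. The layer count and per-layer size are illustrative values chosen for the example, not published figures for BERT or ALBERT.

```python
def encoder_param_count(num_layers, params_per_layer, share_across_layers):
    """Count layer parameters with or without cross-layer sharing."""
    if share_across_layers:
        # One set of layer weights is reused at every depth.
        return params_per_layer
    return num_layers * params_per_layer

NUM_LAYERS = 12               # illustrative value
PARAMS_PER_LAYER = 7_000_000  # illustrative value, not a published figure

unshared = encoder_param_count(NUM_LAYERS, PARAMS_PER_LAYER, share_across_layers=False)
shared = encoder_param_count(NUM_LAYERS, PARAMS_PER_LAYER, share_across_layers=True)

print(f"Without sharing: {unshared:,} layer parameters")
print(f"With sharing:    {shared:,} layer parameters")
```

With these numbers, sharing cuts the layer parameters from 84 million to 7 million. The amount of computation per forward pass stays the same, because the shared layer still runs once per depth; what shrinks is the memory needed to store the weights.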

Existing Applications of GPT

In addition to the large language models mentioned earlier, there are several pre-trained transformer models designed for tasks beyond text, including computer vision, speech recognition, and reinforcement learning. These are not GPT models, but they build on the same Transformer architecture. Some notable examples include:

Vision Transformer (ViT): ViT is a transformer model initially pre-trained for computer vision tasks. It processes images as sequences of patches, utilizing the transformer’s powerful capabilities for tasks such as image classification.

DETR (Detection Transformer): DETR applies the transformer framework to object detection and image segmentation, directly modeling the relationships between regions of an image and object classes, thereby eliminating the need for traditional techniques like anchor boxes or non-maximum suppression.

Conformer: Conformer blends the transformer architecture with convolutional neural networks (CNNs) to enhance speech recognition tasks. It demonstrates excellent performance in automatic speech recognition (ASR) and keyword spotting.

Swin Transformer: Designed for computer vision, the Swin Transformer adopts a hierarchical structure that allows for efficient image processing, making it adept at handling high-resolution images and scaling to larger datasets.

Perceiver and Perceiver IO: These versatile transformer models can process multiple data types, including images, audio, and text. They feature a unique attention mechanism that efficiently handles large volumes of input, making them adaptable to various applications.
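The idea behind ViT, treating an image as a sequence of patches, can be shown in a few lines. The sketch below splits a small grid of pixel values into square patches in row-major order. It only illustrates the patching step; a real ViT would then flatten each patch and project it into an embedding, which is not shown here, and the function name is made up for this example.

```python
def split_into_patches(image, patch_size):
    """Split a 2D grid (list of rows) into a row-major sequence of square patches."""
    height, width = len(image), len(image[0])
    if height % patch_size or width % patch_size:
        raise ValueError("image dimensions must be divisible by patch_size")
    patches = []
    for top in range(0, height, patch_size):
        for left in range(0, width, patch_size):
            patch = [row[left:left + patch_size] for row in image[top:top + patch_size]]
            patches.append(patch)
    return patches

# A 4x4 "image" of pixel ids, cut into 2x2 patches.
image = [[r * 4 + c for c in range(4)] for r in range(4)]
patches = split_into_patches(image, patch_size=2)
print(len(patches), patches[0])
```

The 4x4 grid becomes a sequence of four patches, which the transformer can then process much like a sequence of words. By the same arithmetic, a 224x224 image cut into 16x16 patches yields 14 x 14 = 196 patches.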

Conclusion

In conclusion, understanding the distinctions between Large Language Models (LLMs) and Generative Pre-trained Transformer (GPT) models is crucial for leveraging their capabilities effectively. LLMs are a broad category covering many models and tasks, while GPT models are a specific family within that category that excels at generative content creation. The comparison above highlights how they differ in architectural scope and in range of applications. Both are likely to keep advancing, with ethical considerations and data privacy remaining paramount. Implementing these technologies well means addressing biases and ensuring fair AI models, which underscores their pivotal role in shaping the future of AI research and machine learning.

Frequently Asked Questions

What Makes GPT Models Unique Compared to Other LLMs?

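GPT models, including those behind OpenAI’s ChatGPT, are themselves LLMs. What sets them apart is not the transformer architecture or the attention mechanism, which many other LLMs such as BERT also use. It is their decoder-only design and generative pre-training: they are trained to predict the next token, which makes them well suited to open-ended text generation.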

How Do LLM and GPT Models Impact the Future of Work?

These AI models can automate tasks, enhance productivity, and provide intelligent assistance in various industries, including content creation, customer support, and data analysis.

Originally published at novita.ai
